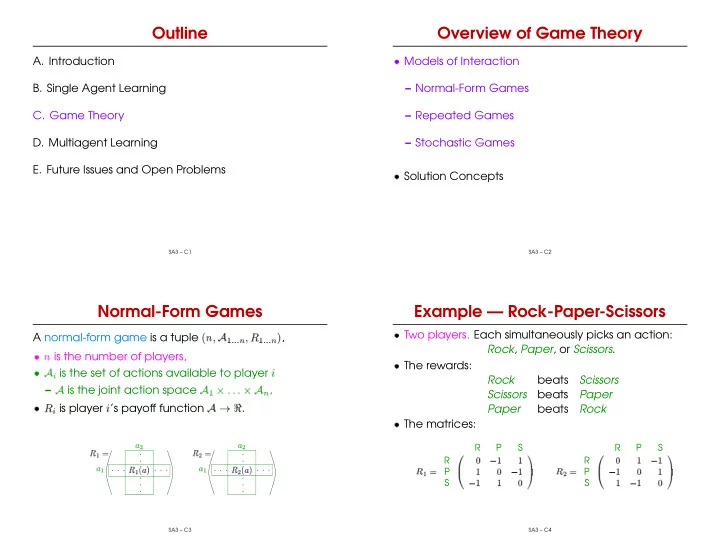

✙ ✕ � ✥ ✦ ✧ ★ ✩ ✪ ✪ ✔ � ✆ ✪ ✎ ★ ✩ � ✪ ✡ ✆ � ✚ ✑ ✗ ✜ ✖ ✛ ✗ ✙ ✚ ✘ ✛ ✖ ✗ ✘ ✖ ✘ ✖ ✗ ✖ ✖ ✜ ✙ ✏ ✑ ✘ ✩ ✦ ✧ ✁ ★ ✪ ✩ ✪ � ✪ ✟ ★ ✪ � ✪ ✩ ✪ ★ ✫ ✬ ✟ ✡ ✩ ✬ ✝ ✆ ✪ ✆ ✪ ✎ ★ ✫ � ✂ ✄ � ✥ ☞ ✡ ✟ ✟ ✟ ✝ ☛ ✙ Outline Overview of Game Theory A. Introduction Models of Interaction B. Single Agent Learning – Normal-Form Games C. Game Theory – Repeated Games D. Multiagent Learning – Stochastic Games E. Future Issues and Open Problems Solution Concepts SA3 – C1 SA3 – C2 Normal-Form Games Example — Rock-Paper-Scissors Two players. Each simultaneously picks an action: A normal-form game is a tuple ✆✞✝✠✟ , ✂☎✄ Rock , Paper , or Scissors . is the number of players, The rewards: is the set of actions available to player ✆✍✌ Rock beats Scissors is the joint action space , – ✏✒✑ Scissors beats Paper is player ’s payoff function . ☛✓✌ Paper beats Rock The matrices: R P S R P S . . . . R R . . . . . . . . . . . . P P ✢✤✣ ✢✤✭ . . . . S S . . SA3 – C3 SA3 – C4

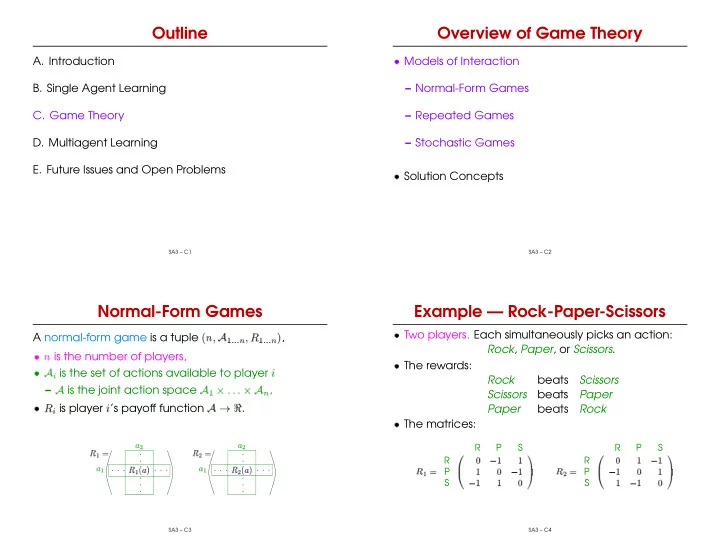

✁ ✞ ✥ � ✪ ★ ★ ✪ ✁ ✆ ✝ ✟ ✠ ✞ ✟ ☞ ✡☛ ✥ � ✪ ★ ★ ✪ ✡☛ ✟ ✢ ★ ✄ ☎ ★ ✪ ✆ � � � � ✁ ★ ✞ ✪ ✪ � ✥ ✡☛ ☞ � ✆✝ ✞ ✟ ✁ ✭ ✥ ✞ � ★ ✪ ★ ✪ ✁ ✟ ✆✝ ✞ ✟ ✟ ✥ ✠ ✡☛ ✥ ✞ ✝ � ★ ★ ✪ ✪ ✞ ✡☛ ✆✝ ★ ✞ ✟ ✞ ✟ ✠ ✡☛ ✥ ✟ � ✪ ✪ ☞ ★ ✁ ✢ ✭ ✆ ✝ ✞ ✟ ✞ ✟ � ✁ ✁ ★ ✪ � ✢ ✣ ✥ ✥ ✁ ✪ � ✂ ★ ★ ★ ✪ ✁ ✁ ✂ � ✢ ✭ ✥ ✥ ✣ ✢ � � ✁ ✪ ✂ � ✪ ★ ✪ ✪ ✢ ✣ ☎ ✥ � ★ ✪ ✩ ✄ � ✪ ✩ ✪ ✁ ✩ ✢ ✭ ✥ ✥ � ✩ � ✪ ✪ ✁ ✂ ★ ✪ ★ ★ More Examples More Examples Matching Pennies Prisoner’s Dilemma H T H T C D C D H H C C ✢✤✣ ✢✤✭ T T D D Coordination Game Three-Player Matching Pennies A B A B A A B B Bach or Stravinsky B S B S B B ✢✤✭ S S SA3 – C5 SA3 – C6 Three-Player Matching Pennies Three-Player Matching Pennies Three players. Each simultaneously picks an action: The matrices: H T H T Heads or Tails . H H ✢✤✣ ✢✤✣ T T The rewards: H H Player One wins by matching Player Two, T T Player Two wins by matching Player Three, H H Player Three wins by not matching Player One. ✢ ✍✌ ✢ ✍✌ T T SA3 – C7 SA3 – C8

✓ ☛ ✝ � ✤ ✍ ✂ ✛ ☛ ✝ ✣ ✎ � ✛ � ✌ ✞ ✁ ✌ ✁ ✎ ✎ ✄ ✗ ✝ ✘ ✙ ✝ ✗ ✚ � ✢ � ✣ ✏ ✛ ✚ ✙ � ✛ ✢ ✂ ✌ ☛ ✡ ✌ ✄ ☞ ✁ ✁ ✝ ☎ ✄ ✂ ✁ ☞ ✁ ✁ � ✌ ✁ ✌ � � ✆ ✁ � ☞ ✌ ✁ ✌ ✎ ✠ Strategies Strategies What can players do? Notation. – Pure strategies ( ): select an action. is a joint strategy for all players. – – Mixed strategies ( ): select an action according ☛✓✌ ☛✓✌ to some probability distribution. – is a joint strategy for all players except . ✁✟✞ – is the joint strategy where uses strategy ✁✟✞ and everyone else . SA3 – C9 SA3 – C10 Types of Games Repeated Games Zero-Sum Games (a.k.a. constant-sum games) You can’t learn if you only play a game once. ☛ ✌☞ Repeatedly playing a game raises new questions. Examples: Rock-paper-scissors, matching pennies. – How many times? Is this common knowledge? Team Games Finite Horizon Infinite Horizon ☛ ✒✑ – Trading off present and future reward? Examples: Coordination game. ✗✜✛ ✔✖✕ General-Sum Games (a.k.a. all games) Examples: Bach or Stravinsky, three-player matching Average Reward Discounted Reward pennies, prisoner’s dilemma SA3 – C11 SA3 – C12

✄ ✑ ✙ ✗ ✓ ✓ ✗✘ ✓ ✕ ✎ ✁ ✒ ✂ ✄ ✡ ✄ ✆ ✝ ✟ ✟ ✟ ✏ ✎ ✄ ✄ ✌ ✄ ★ ☎ ✪ ✁ ✥ � ☎ ✘ ★ ✪ ✁ � ✠ ✙ ✠ ✚ ✎ ✡ ☛ � � ✝ ✎ ✑ ✑ ✏ ✆ ✡ � ☛ ✡ ✍ ✡ ✏ ✆ ✏ ✡ ✔ ☞ ✍ ✆ ✆ ✄ ✂ ☛ ✝ ✟ ✟ ✟ ✡ ☞ ✏ � ✎ ✏ � ✡ ✕ � ✆ ✌ ✔ ✎ ✆ ✥ � ✒ ✓ ☞ ✂ ✁ ✁ � ✡ ☞ ✞ � ✏ ✎ � ✝ ✟ ✟ ✟ ✡ ✡ ✄ ✆ ✝ ✡ ✢ ✎ � ✡ ✟ ✟ ✡ ✆ ✑ ✁ ✌ ✁ � ✝ ✄ ✑ ✑ ✝ ✁ ✆ ✂✄ ✟ ✌ ✆ ✄ ✁ ✑ ✑ ✑ ✄ ☞ ✔ � ✡ ✁ � ☞ ✌ ✁ ✞ ✡ ✌ � ✁ � ✝ ✄ ✑ ✑ ✑ ✄ ✡ ✁ ☞ ✂ ☎ ✙ ✂ ✁ ✁ � ✆ Repeated Games — Strategies Repeated Games — Examples What can players do? Iterated Prisoner’s Dilemma C D C D – Strategies can depend on the history of play. C C ✢✤✣ ✢✤✭ D D where – The single most examined repeated game! – Repeated play can justify behavior that is not – Markov strategies a.k.a. stationary strategies rational in the one-shot game. ✝✠✟ – Tit-for-Tat (TFT) – -Markov strategies Play opponent’s last action (C on round 1). A 1-Markov strategy. SA3 – C13 SA3 – C14 Stochastic Games Stochastic Games — Definition A stochastic game is a tuple , is the number of agents, is the set of states, is the set of actions available to agent , MDPs Repeated Games - Single Agent - Multiple Agent – is the joint action space , ✏✒✑ - Multiple State - Single State is the transition function , is the reward function for the th agent . ☛✓✌ . Stochastic Games ✒✔✓✖✕ ✘✜✛ . . . . . . . - Multiple Agent ✓✖✕ . . - Multiple State . SA3 – C15 SA3 – C16

✄ ✩ ✟ ✪ ★ ✑ ☞ ✞ ✩ ✂ ★ ✪ ✏ � ✎ ✄ ✪ ✟ ✪ ★ ✣ ✣ ✒ ✪ ★ ✪ ✂ ✝ ✝ ✞ ✟ ✄ ✝ ✡ ☛ � ☞ ✄ ✪ ☞ ✄ ✩ ✂ ✩ ✡ ✎ ✌ ✄ ✟ ✟ ★ ✝ ✆ ✌ ✄ ✄ ✂ ☞ ✂ ✄ ✠ ✚ ✝ ✄ ✘ ✚ ✌ ✁ ✄ ✄ � ☞ ☛ ✁ � ✄ ✣ ✂ ✣ ☞ ✌ ✩ ✓ ✎ ✩ ✂ ✔ � � ✂ ✁ ✌ � ✕ ✖ ✕ ✂ ✌ � ✗ ☛ ✞ ✠ ✆ ✌ ✎ ✁ ✄ ✁ ✂ ✝ ✁ ✝ ✡ � ✁ ☞ ✁ ✄ ✄ ☞ ✂ ✁ ✁ ✂ ✄ ✡ ✆ ☞ ✆ � ✌ � ✁ ✏ ✡ ✔ ✂✄ ✁ ✌ ✏ ☞ ✁ ✂ ✙ ☎ ✡ ✢ ✆ ✁ ✡ ✄ � � � � ✌ ✂ � � � � � ✑ ✑ � ✑ � ✂ Stochastic Games — Policies Example — Soccer (Littman, 1994) What can players do? A – Policies depend on history and the current state. B where Players: Two. States: Player positions and ball possession (780). – Markov polices a.k.a. stationary policies Actions: N, S, E, W, Hold (5). Transitions: ✎ ☎✄ – Simultaneous action selection, random execution. – Collision could change ball possession. – Focus on learning Markov policies, but the learning itself is a non-Markovian policy. Rewards: Ball enters a goal. SA3 – C17 SA3 – C18 Example — Goofspiel Stochastic Games — Facts Players hands and the deck have cards . If , it is an MDP . Card from the deck is bid on secretly. If , it is a repeated game. Highest card played gets points equal to the card from the deck. If the other players play a stationary policy, it is an Both players discard the cards bid. MDP to the remaining player. Repeat for all deck cards. � ✟✞ S IZEOF ( or ) V(det) V(random) ☎ ✙✘ 4 692 15150 59KB ✂ ✍✌ – The interesting case, then, is when the other 8 47MB ★ ✍✌ 13 2.5TB agents are not stationary, i.e., are learning. SA3 – C19 SA3 – C20

Recommend

More recommend