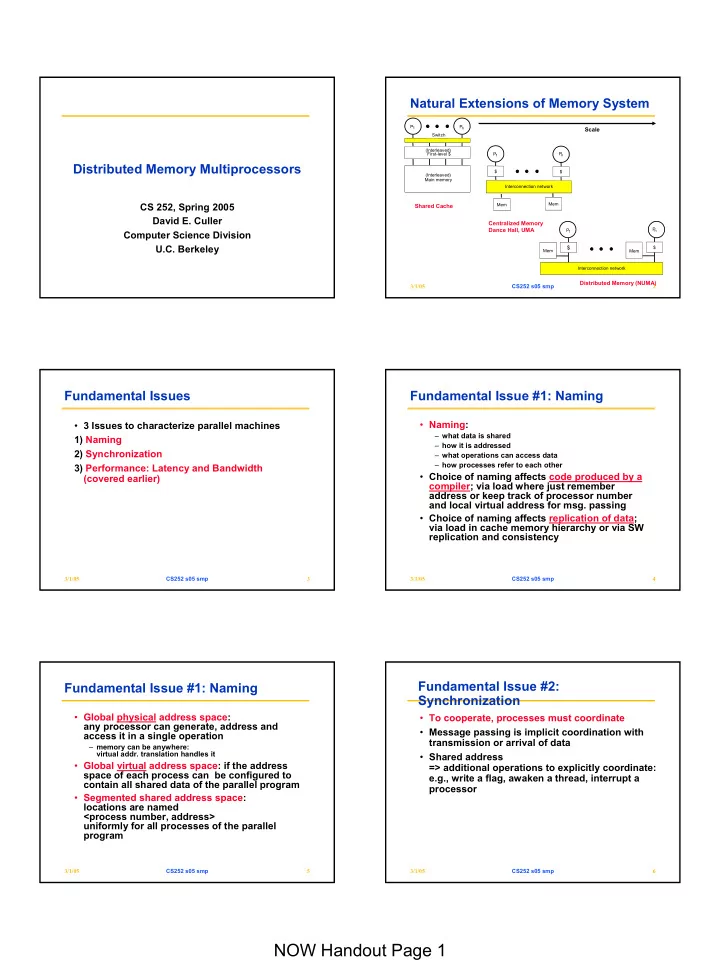

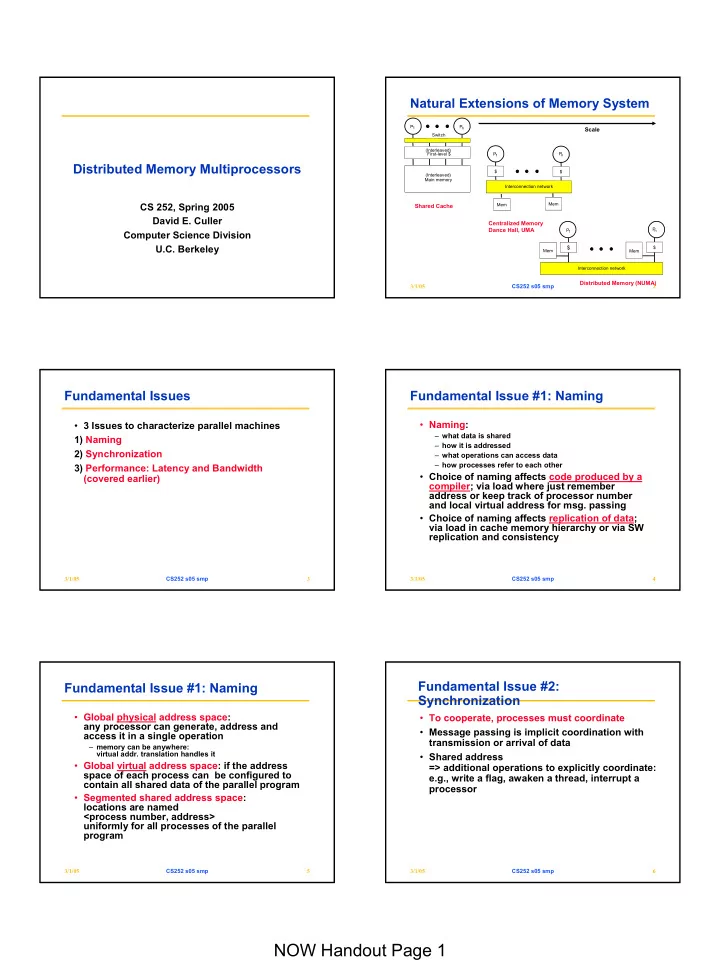

Distributed Memory Multiprocessors
CS 252, Spring 2005
David E. Culler
Computer Science Division
U.C. Berkeley
3/1/05 CS252 s05 smp

Natural Extensions of Memory System
[Figure: three organizations in order of increasing scale. Shared Cache: P1 ... Pn connect through a switch to an (interleaved) first-level $ and (interleaved) main memory. Centralized Memory (Dance Hall, UMA): P1 ... Pn, each with its own $, reach the Mem modules through an interconnection network. Distributed Memory (NUMA): each of P1 ... Pn has its own $ and Mem, and the nodes are joined by an interconnection network.]

Fundamental Issues
• 3 Issues to characterize parallel machines
1) Naming
2) Synchronization
3) Performance: Latency and Bandwidth (covered earlier)

Fundamental Issue #1: Naming
• Naming:
– what data is shared
– how it is addressed
– what operations can access data
– how processes refer to each other
• Choice of naming affects code produced by a compiler; via load where just remember address or keep track of processor number and local virtual address for msg. passing
• Choice of naming affects replication of data; via load in cache memory hierarchy or via SW replication and consistency

Fundamental Issue #1: Naming
• Global physical address space: any processor can generate, address and access it in a single operation
– memory can be anywhere: virtual addr. translation handles it
• Global virtual address space: if the address space of each process can be configured to contain all shared data of the parallel program
• Segmented shared address space: locations are named <process number, address> uniformly for all processes of the parallel program

Fundamental Issue #2: Synchronization
• To cooperate, processes must coordinate
• Message passing is implicit coordination with transmission or arrival of data
• Shared address => additional operations to explicitly coordinate: e.g., write a flag, awaken a thread, interrupt a processor
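The segmented naming scheme on the Naming slide, where a location is named <process number, address>, can be illustrated with a small sketch. This is only one possible mapping: the fixed `SEGMENT_SIZE` and the helper names `to_segmented` and `to_global` are assumptions made for illustration and do not come from the lecture.

```python
from typing import NamedTuple

# Hypothetical segment size: each process exposes 1 MiB of shared data.
SEGMENT_SIZE = 1 << 20


class SegmentedName(NamedTuple):
    """A location named <process number, address>."""
    process: int
    address: int


def to_segmented(global_addr: int) -> SegmentedName:
    """Split a flat global address into <process number, address>."""
    process, address = divmod(global_addr, SEGMENT_SIZE)
    return SegmentedName(process, address)


def to_global(name: SegmentedName) -> int:
    """Rebuild the flat global address from a segmented name."""
    return name.process * SEGMENT_SIZE + name.address


name = to_segmented(3 * SEGMENT_SIZE + 0x40)
print(name)             # SegmentedName(process=3, address=64)
print(to_global(name))  # 3145792
```

With a flat global address a load only needs to remember the address; with the segmented form the code has to carry the process number alongside the local address, which is the bookkeeping the slide attributes to message passing.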

Parallel Architecture Framework
[Figure: layer stack, top to bottom: Programming Model, Communication Abstraction, Interconnection SW/OS, Interconnection HW]
• Layers:
– Programming Model:
» Multiprogramming: lots of jobs, no communication
» Shared address space: communicate via memory
» Message passing: send and receive messages
» Data Parallel: several agents operate on several data sets simultaneously and then exchange information globally and simultaneously (shared or message passing)
– Communication Abstraction:
» Shared address space: e.g., load, store, atomic swap
» Message passing: e.g., send, receive library calls
» Debate over this topic (ease of programming, scaling) => many hardware designs 1:1 programming model

Scalable Machines
• What are the design trade-offs for the spectrum of machines between?
– specialized or commodity nodes?
– capability of node-to-network interface
– supporting programming models?
• What does scalability mean?
– avoids inherent design limits on resources
– bandwidth increases with P
– latency does not
– cost increases slowly with P

Bandwidth Scalability
[Figure: processor (P) and memory (M) modules connected through switches (S); typical switches: Bus, Crossbar, Multiplexers]
• What fundamentally limits bandwidth?
– single set of wires
• Must have many independent wires
• Connect modules through switches
• Bus vs Network Switch?

Dancehall MP Organization
[Figure: memory modules (M) on one side of a scalable network of switches; processors (P), each with a cache ($), on the other side]
• Network bandwidth?
• Bandwidth demand?
– independent processes?
– communicating processes?
• Latency?

Generic Distributed Memory Org.
[Figure: scalable network of switches; each node holds a processor (P), cache ($), memory (M) and communication assist (CA)]
• Network bandwidth?
• Bandwidth demand?
– independent processes?
– communicating processes?
• Latency?

Key Property
• Large number of independent communication paths between nodes => allow a large number of concurrent transactions using different wires
• initiated independently
• no global arbitration
• effect of a transaction only visible to the nodes involved
– effects propagated through additional transactions
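The scalability criteria above (bandwidth increases with P, cost increases slowly with P) can be made concrete with some back-of-the-envelope arithmetic. The sketch below is an illustration, not part of the lecture: it assumes a bus whose total bandwidth is shared evenly by P processors, a P x P crossbar, and a multistage network built from 2x2 switches (log2 P stages of P/2 switches each). The function names are hypothetical.

```python
def bus_bandwidth_per_processor(total_bw: float, p: int) -> float:
    """A bus is a single set of wires, so P processors share its bandwidth."""
    return total_bw / p


def crossbar_crosspoints(p: int) -> int:
    """A P x P crossbar needs one crosspoint per (input, output) pair."""
    return p * p


def multistage_switches(p: int) -> int:
    """2x2 switches in a log2(P)-stage network; assumes P is a power of two."""
    if p < 2 or p & (p - 1):
        raise ValueError("p must be a power of two and at least 2")
    stages = p.bit_length() - 1
    return (p // 2) * stages


for p in (4, 16, 64):
    print(p,
          bus_bandwidth_per_processor(1.0, p),
          crossbar_crosspoints(p),
          multistage_switches(p))
```

Under these assumptions the bus gives each processor a shrinking share of a fixed bandwidth, the crossbar's cost grows quadratically, and the multistage network adds independent wires at a cost that grows as P log P.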

Programming Models Realized by Protocols
[Figure: layer stack, top to bottom: Parallel applications (CAD, Database, Scientific modeling); Programming models (Multiprogramming, Shared address, Message passing, Data parallel); Compilation or library; Communication abstraction (User/system boundary); Operating systems support; Hardware/software boundary; Communication hardware; Physical communication medium. Label: Network Transactions]

Network Transaction
[Figure: two nodes (M, P) attached to a scalable network through a Communication Assist (CA); a message passes from Output Processing at the source to Input Processing at the destination. Output Processing: checks, translation, formatting, scheduling. Input Processing: checks, translation, buffering, action.]
• Key Design Issue:
• How much interpretation of the message?
• How much dedicated processing in the Comm. Assist?

Shared Address Space Abstraction
[Figure: timeline between Source and Destination for Load r ← [Global address]]
(1) Initiate memory access
(2) Address translation
(3) Local/remote check
(4) Request transaction: read request sent; source waits
(5) Remote memory access
(6) Reply transaction: read response returned
(7) Complete memory access
• Fundamentally a two-way request/response protocol
– writes have an acknowledgement
• Issues
– fixed or variable length (bulk) transfers
– remote virtual or physical address, where is action performed?
– deadlock avoidance and input buffer full
• coherent? consistent?

Key Properties of Shared Address Abstraction
• Source and destination data addresses are specified by the source of the request
– a degree of logical coupling and trust
• no storage logically “outside the address space”
» may employ temporary buffers for transport
• Operations are fundamentally request response
• Remote operation can be performed on remote memory
– logically does not require intervention of the remote processor
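The seven steps of the Shared Address Space Abstraction slide can be traced in a toy model. This is a minimal sketch under stated assumptions: the `Network` and `Node` classes, the `WORDS_PER_NODE` partitioning of the global address space, and the message tuples are all invented for illustration, and the "network" delivers each request synchronously. The remote side is served by a `comm_assist` method to reflect the point that the remote processor need not be involved.

```python
WORDS_PER_NODE = 1024  # hypothetical: global address space split evenly


class Network:
    """Toy interconnect that delivers a request and returns the reply."""

    def __init__(self):
        self.nodes = {}

    def attach(self, node):
        self.nodes[node.node_id] = node

    def request(self, dest, message):
        return self.nodes[dest].comm_assist(message)


class Node:
    def __init__(self, node_id, network):
        self.node_id = node_id
        self.memory = {}
        self.network = network
        network.attach(self)

    def comm_assist(self, message):
        """(5) Remote memory access, handled without the local processor."""
        kind, offset, value = message
        if kind == "read_request":
            return ("read_response", self.memory.get(offset, 0))
        if kind == "write_request":
            self.memory[offset] = value
            return ("write_ack", None)  # writes have an acknowledgement
        raise ValueError(kind)

    def load(self, global_addr):
        home, offset = divmod(global_addr, WORDS_PER_NODE)  # (2) translation
        if home == self.node_id:                            # (3) local/remote
            return self.memory.get(offset, 0)
        # (4) request transaction, (6) reply transaction
        _, value = self.network.request(home, ("read_request", offset, None))
        return value                                        # (7) complete

    def store(self, global_addr, value):
        home, offset = divmod(global_addr, WORDS_PER_NODE)
        if home == self.node_id:
            self.memory[offset] = value
            return
        self.network.request(home, ("write_request", offset, value))


net = Network()
n0, n1 = Node(0, net), Node(1, net)
n1.store(5, 42)    # address 5 lives on node 0, so this is a remote write
print(n0.load(5))  # 42, served from local memory
print(n1.load(5))  # 42, served by a read request/response pair
```

Every remote operation in the sketch is a request followed by a response, and both the source and destination addresses are chosen by the requester, matching the key properties listed above.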
Consistency
One processor executes: A=1; flag=1;
Another processor executes: while (flag==0); print A;
[Figure (a): P1, P2, P3, each with its own Memory, on an interconnection network. Memory holds A:0 and flag:0->1. Transactions: 1: A=1 (Delay), 2: flag=1, 3: load A. Figure (b): P1, P2, P3 with a congested path between two of them.]
• write-atomicity violated without caching

Message passing
• Bulk transfers
• Complex synchronization semantics
– more complex protocols
– more complex action
• Synchronous
– Send completes after matching recv and source data sent
– Receive completes after data transfer complete from matching send
• Asynchronous
– Send completes after send buffer may be reused
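The flag example on the Consistency slide can be worked through with a simple timing model. The sketch below is an assumption-laden illustration rather than the lecture's own analysis: it treats each write as a network transaction with a fixed one-way delay, ignores the latency of the final load, and uses a hypothetical function `observed_a`. When the writer issues flag=1 without waiting for the acknowledgement of A=1, a congested path for A lets the reader see the flag before the new value of A has arrived.

```python
def observed_a(delay_a: int, delay_flag: int, wait_for_ack: bool) -> int:
    """Value of A printed by the reader once it sees flag == 1.

    delay_a / delay_flag: one-way network delay of the two writes.
    wait_for_ack: whether the writer waits for the acknowledgement of
    A=1 (a full round trip) before issuing flag=1.
    """
    a_arrives = delay_a
    flag_issued = 2 * delay_a if wait_for_ack else 0
    flag_arrives = flag_issued + delay_flag
    # The reader leaves its spin loop when the flag arrives and loads A
    # at that moment (load latency is ignored in this sketch).
    load_time = flag_arrives
    return 1 if a_arrives <= load_time else 0


print(observed_a(delay_a=10, delay_flag=1, wait_for_ack=False))  # 0 (stale)
print(observed_a(delay_a=10, delay_flag=1, wait_for_ack=True))   # 1
print(observed_a(delay_a=1, delay_flag=10, wait_for_ack=False))  # 1
```

The first case corresponds to the congested path in the figure: the two writes travel independently, so nothing orders them at the destination unless the writer waits for the first one to be acknowledged.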
