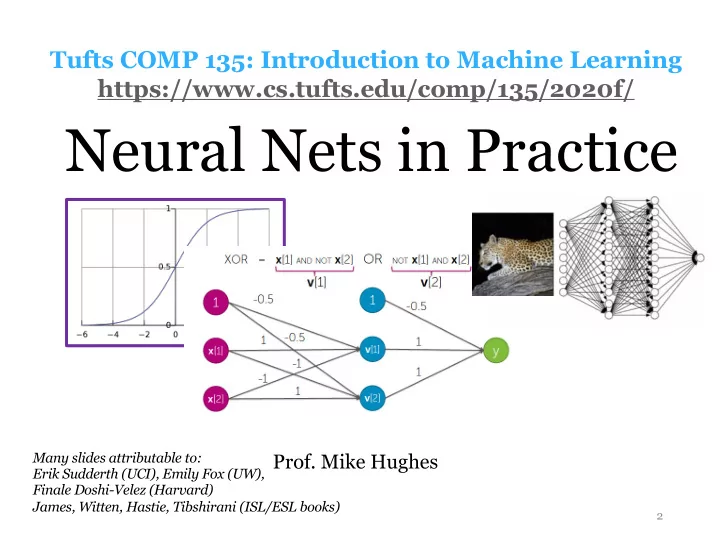

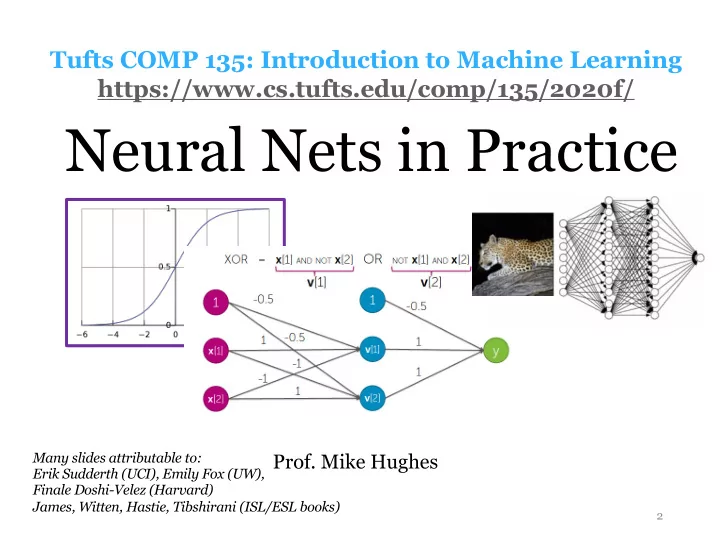

Tufts COMP 135: Introduction to Machine Learning https://www.cs.tufts.edu/comp/135/2020f/ Neural Nets in Practice Many slides attributable to: Prof. Mike Hughes Erik Sudderth (UCI), Emily Fox (UW), Finale Doshi-Velez (Harvard) James, Witten, Hastie, Tibshirani (ISL/ESL books) 2

Objectives Today : (day 13) NNs in Practice • Multi-class classification with NNs • Pros and cons of NNs • Avoiding overfitting with NNs • Hyperparameter selection • Data augmentation • Early stopping Mike Hughes - Tufts COMP 135 - Fall 2020 3

What will we learn? Evaluation Supervised Training Learning Data, Label Pairs Performance { x n , y n } N measure Task n =1 Unsupervised Learning data label x y Reinforcement Learning Prediction Mike Hughes - Tufts COMP 135 - Fall 2020 4

Task: Binary Classification y is a binary variable Supervised (red or blue) Learning binary classification x 2 Unsupervised Learning Reinforcement Learning x 1 Mike Hughes - Tufts COMP 135 - Fall 2020 5

Multi-class Classification How to do this? Mike Hughes - Tufts COMP 135 - Fall 2020 6

Binary Prediction Goal: Predict label (0 or 1) given features x x i , [ x i 1 , x i 2 , . . . x if . . . x iF ] • Input: “features” Entries can be real-valued, or “covariates” other numeric types (e.g. integer, binary) “attributes” y i ∈ { 0 , 1 } • Output: Binary label (0 or 1) “responses” or “labels” >>> yhat_N = model. predict (x_NF) >>> yhat_N[:5] [0, 0, 1, 0, 1] Mike Hughes - Tufts COMP 135 - Fall 2020 7

Binary Proba. Prediction Goal: Predict probability of label given features x i , [ x i 1 , x i 2 , . . . x if . . . x iF ] • Input: “features” Entries can be real-valued, or “covariates” other numeric types (e.g. integer, binary) “attributes” p i , p ( Y i = 1 | x i ) • Output: ˆ Value between 0 and 1 e.g. 0.001, 0.513, 0.987 “probability” >>> yproba_N2 = model. predict_proba (x_NF) >>> yproba1_N = model. predict_proba (x_NF) [:,1] >>> yproba1_N[:5] [0.143, 0.432, 0.523, 0.003, 0.994] Mike Hughes - Tufts COMP 135 - Fall 2020 8

Multi-class Prediction Goal: Predict one of C classes given features x x i , [ x i 1 , x i 2 , . . . x if . . . x iF ] • Input: “features” Entries can be real-valued, or “covariates” other numeric types (e.g. integer, binary) “attributes” • Output: y i ∈ { 0 , 1 , 2 , . . . C − 1 } Integer label (0 or 1 or … or C-1 ) “responses” or “labels” >>> yhat_N = model. predict (x_NF) >>> yhat_N[:6] [0, 3, 1, 0, 0, 2] Mike Hughes - Tufts COMP 135 - Fall 2020 9

Multi-class Proba. Prediction Goal: Predict probability of each class given features Input: x i , [ x i 1 , x i 2 , . . . x if . . . x iF ] “features” Entries can be real-valued, or other “covariates” numeric types (e.g. integer, binary) “attributes” Output: p i , [ p ( Y i = 0 | x i ) ˆ p ( Y i = 1 | x i ) . . . p ( Y i = C − 1 | x i )] “probability” Vector of C non-negative values, sums to one >>> yproba_NC = model. predict_proba (x_NF) >>> yproba_c_N = model. predict_proba (x_NF) [:,c] >>> np.sum(yproba_NC, axis=1) [1.0, 1.0, 1.0, 1.0] Mike Hughes - Tufts COMP 135 - Fall 2020 10

From Real Value to Probability probability 1 sigmoid( z ) = 1 + e − z Mike Hughes - Tufts COMP 135 - Fall 2020 11

From Vector of Reals to Vector of Probabilities z i = [ z i 1 z i 2 . . . z ic . . . z iC ] " # e z i 1 e z i 2 e z iC p i = ˆ c =1 e z ic . . . . . . P C P C P C c =1 e z ic c =1 e z ic called the “softmax” function Mike Hughes - Tufts COMP 135 - Fall 2020 12

Representing multi-class labels y n ∈ { 0 , 1 , 2 , . . . C − 1 } ∈ { − } Encode as length-C one hot binary vector y n = [¯ ¯ y n 1 ¯ y n 2 . . . ¯ y nc . . . ¯ y nC ] Examples (assume C=4 labels) class 0: [1 0 0 0] class 1: [0 1 0 0] class 2: [0 0 1 0] class 3: [0 0 0 1] Mike Hughes - Tufts COMP 135 - Fall 2020 13

“Neuron” for Binary Prediction Probability of class 1 Logistic sigmoid Linear function activation with weights w function Credit: Emily Fox (UW) Mike Hughes - Tufts COMP 135 - Fall 2020 14

Recall: Binary log loss ( 1 if y 6 = ˆ y error( y, ˆ y ) = 0 if y = ˆ y log loss( y, ˆ p ) = − y log ˆ p − (1 − y ) log(1 − ˆ p ) Plot assumes: - True label is 1 - Threshold is 0.5 - Log base 2 Mike Hughes - Tufts COMP 135 - Fall 2020 15

Multi-class log loss Input: two vectors of length C Output: scalar value (strictly non-negative) C X log loss(¯ y n , ˆ p n ) = − y nc log ˆ ¯ p nc c =1 Justifications carry over from the binary case: - Interpret as upper bound on the error rate - Interpret as cross entropy of multi-class discrete random variable - Interpret as log likelihood of multi-class discrete random variable Mike Hughes - Tufts COMP 135 - Fall 2020 16

Each Layer Extracts “Higher Level” Features Mike Hughes - Tufts COMP 135 - Fall 2020 17

Deep Neural Nets PROs CONs? • Flexible models • State-of-the-art success in many applications • Object recognition • Speech recognition • Language models • Open-source software Mike Hughes - Tufts COMP 135 - Spring 2019 18

Two kinds of optimization problem Convex Non-Convex Only one global minimum One or more local minimum If GD converges, solution is best GD solution might be much worse possible than global minimum Mike Hughes - Tufts COMP 135 - Spring 2019 19

Deep Neural Nets: Optimization is not convex Linear regression MLPs with 1+ hidden layers Logistic regression Deep NNs in general Convex Non-Convex Only one global minimum One or more local minimum If GD converges, solution is best GD solution might be much worse possible than global minimum Mike Hughes - Tufts COMP 135 - Spring 2019 20

Deep Neural Nets PROs CONs • Flexible models • Require lots of data • State-of-the-art success • Each run of SGD can in many applications take hours/days • Object recognition • Optimization not easy • Speech recognition • Will it converge? • Language models • Is local minimum good • Open-source software enough? • Hard to extrapolate • Many hyperparameters • Will it overfit? Mike Hughes - Tufts COMP 135 - Spring 2019 21

Many hyperparameters for a Deep Neural Network (MLP) • Num. layers • Num. units / layer Control model • Activation function complexity • L2 penalty strength Optimization • Learning rate quality/speed • Batch size Mike Hughes - Tufts COMP 135 - Spring 2019 22

Guidelines: complexity params • Num. units / layer • Start with similar to num. features • Add more (log spaced) until serious overfitting • Num. layers • Start with 1 • Add more (+1 at a time) until serious overfitting • L2 penalty strength scalar • Try range of values (log spaced) • Activation function • ReLU for most problems is reasonable Mike Hughes - Tufts COMP 135 - Spring 2019 23

Grid Search 1) Choose candidate values of each hyperparameter Step size/learning rate Number of hidden units 2) For each combination, assess its heldout score • We need to choose in advance: • Performance metric (e.g. AUROC, log loss, TPR at PPV > 0.98, etc.) • What is most important for your task? • Source of heldout data • Fixed validation set : Faster, simpler • Cross validation with K folds : Less noise, better use of all available data 3) Select the one single combination with best score Mike Hughes - Tufts COMP 135 - Spring 2019 24

Grid Search 1) Choose candidate values of each hyperparameter Step size/learning rate Number of hidden units 2) For each combination, assess its heldout score • We need to choose in advance: • Performance metric (e.g. AUROC, log loss, TPR at PPV > 0.98, etc.) • What is most important for your task? • Source of heldout data • Fixed validation set : Faster, simpler • Cross validation with K folds : Less noise, better use of all available data 3) Select the one single combination with best score Each trial can be parallelized. Can do for numeric or discrete variables. But, number of combinations to try can quickly grow infeasible Mike Hughes - Tufts COMP 135 - Spring 2019 25

Random Search 1) Choose candidate distributions of each hyperparameter Usually, for convenience, assume each independent 2) For each of T samples , assess heldout score • We need to choose in advance: • Performance metric (e.g. AUROC, log loss, TPR at PPV > 0.98, etc.) • What is most important for your task? • Source of heldout data • Fixed validation set : Faster, simpler • Cross validation with K folds : Less noise, better use of all available data 3) Select the one single combination with best score Mike Hughes - Tufts COMP 135 - Spring 2019 26

Recommend

More recommend