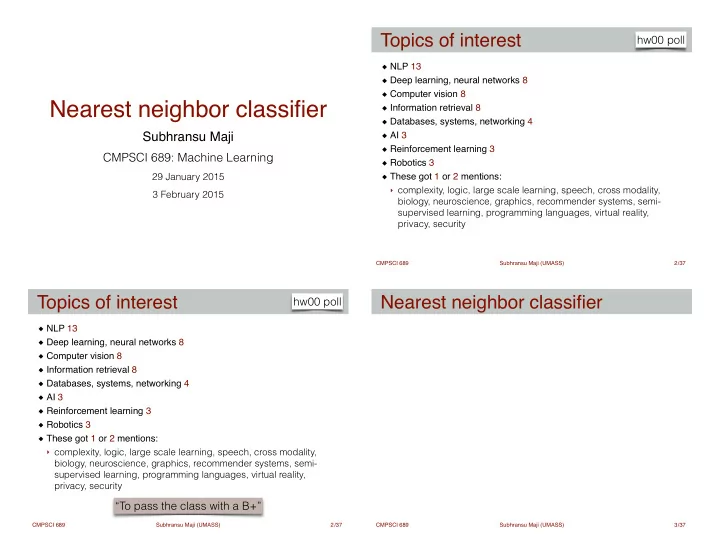

Nearest neighbor classifier
Subhransu Maji (UMASS)
CMPSCI 689: Machine Learning
29 January 2015
3 February 2015

Topics of interest (hw00 poll)
‣ NLP: 13
‣ Deep learning, neural networks: 8
‣ Computer vision: 8
‣ Information retrieval: 8
‣ Databases, systems, networking: 4
‣ AI: 3
‣ Reinforcement learning: 3
‣ Robotics: 3
These got 1 or 2 mentions:
‣ complexity, logic, large scale learning, speech, cross modality, biology, neuroscience, graphics, recommender systems, semi-supervised learning, programming languages, virtual reality, privacy, security
“To pass the class with a B+”

Nearest neighbor classifier
Will Alice like AI?
‣ Alice and James are similar and James likes AI. Hence, Alice must also like AI.
It is useful to think of data as feature vectors
‣ Use Euclidean distance to measure similarity

Data to feature vectors
‣ Binary: e.g. AI? {no, yes}
➡ {0,1}
➡ or {-20, 2} (marked X on the slide)
‣ Nominal: e.g. color = {red, blue, green, yellow}
➡ {0,1}ⁿ
➡ or {0,1,2,3} (marked X on the slide)
‣ Real valued: e.g. temperature
➡ copied
➡ or {low, medium, high}
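The three encodings listed above can be sketched in a few lines of Python. This is only an illustration: the function names, the `COLORS` ordering, and the sample values are assumptions made for this example and do not come from the slides.

```python
# Hypothetical helpers illustrating the binary, nominal and real-valued cases.
COLORS = ["red", "blue", "green", "yellow"]  # assumed category order


def encode_binary(value):
    """Map a {no, yes} answer to {0, 1}."""
    return 1.0 if value == "yes" else 0.0


def encode_nominal(value, categories):
    """One-hot encoding: one {0, 1} coordinate per category."""
    return [1.0 if value == c else 0.0 for c in categories]


def to_feature_vector(likes_ai, color, temperature):
    # binary -> 1 coordinate, nominal -> len(COLORS) coordinates,
    # real valued -> copied unchanged
    return ([encode_binary(likes_ai)]
            + encode_nominal(color, COLORS)
            + [float(temperature)])


print(to_feature_vector("yes", "green", 21.5))
# [1.0, 0.0, 0.0, 1.0, 0.0, 21.5]
```

With the one-hot encoding every pair of distinct colors is the same Euclidean distance apart, whereas integer codes {0,1,2,3} would place red closer to blue than to yellow.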

Nearest neighbor classifier
Training data is in the form of $(x_1, y_1), (x_2, y_2), \ldots, (x_n, y_n)$
Fruit data:
‣ label: {apples, oranges, lemons}
‣ attributes: {width, height}
Euclidean distance: $d(x_1, x_2) = \sqrt{\sum_i (x_{1,i} - x_{2,i})^2}$
[Figure: fruit data plotted by width and height]
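A minimal Python sketch of the Euclidean distance and the resulting 1-nearest-neighbor rule follows. The (width, height) measurements in `TRAIN` are made up for illustration and are not the fruit data from the slides; the function names are likewise assumptions.

```python
import math


def euclidean(x1, x2):
    """d(x1, x2) = sqrt(sum_i (x1_i - x2_i)^2)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x1, x2)))


def nearest_neighbor(train, query):
    """Return the label of the training point closest to `query`."""
    _, label = min(train, key=lambda pair: euclidean(pair[0], query))
    return label


# Hypothetical (width, height) measurements with their labels.
TRAIN = [
    ((7.5, 7.0), "apple"),
    ((7.6, 8.2), "orange"),
    ((6.0, 9.0), "lemon"),
]

print(nearest_neighbor(TRAIN, (6.2, 8.8)))  # lemon
```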

test data
‣ (a, b) ? ➡ lemon

test data
‣ (c, d) ? ➡ apple (the nearest training point is an outlier)

k-Nearest neighbor classifier
Take majority vote among the k nearest neighbors
[Figure: fruit data with the outlier marked]

Decision boundaries: 1NN
What is the effect of k?
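One way to see the effect of k is to run the majority vote on a small data set that contains an outlier. The sketch below uses made-up (width, height) values, including one apple placed among the lemons; `knn_predict`, `TRAIN` and `QUERY` are hypothetical names chosen for this example.

```python
import math
from collections import Counter


def knn_predict(train, query, k):
    """Majority vote among the k training points closest to `query`."""
    neighbors = sorted(train, key=lambda pair: math.dist(pair[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical data: three lemons, one outlier apple among them, two apples.
TRAIN = [
    ((6.0, 9.0), "lemon"),
    ((6.2, 9.3), "lemon"),
    ((5.9, 8.7), "lemon"),
    ((6.1, 8.9), "apple"),  # outlier
    ((7.5, 7.0), "apple"),
    ((7.7, 7.2), "apple"),
]
QUERY = (6.12, 8.92)

print(knn_predict(TRAIN, QUERY, k=1))  # apple (the outlier decides)
print(knn_predict(TRAIN, QUERY, k=3))  # lemon (the outlier is outvoted)
```

In this toy example a larger k smooths over the single outlier; choosing k too large would instead let distant points from other classes dominate the vote.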


Decision boundaries: DT
The decision boundaries are axis aligned for DT
w > 7.3?
  yes: h > 7.8?
    yes: orange
    no: apple
  no: h > 8?
    yes: lemon
    no: w > 6.6?
      yes: orange
      no: h > 6?
        yes: lemon
        no: orange

Inductive bias of the kNN classifier
Choice of features
‣ We are assuming that all features are equally important
‣ What happens if we scale one of the features by a factor of 100?
Choice of distance function
‣ Euclidean, cosine similarity (angle), Gaussian, etc …
‣ Should the coordinates be independent?
Choice of k
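The question about scaling one feature by a factor of 100 can be explored numerically. The sketch below uses two made-up training points and a made-up query; `nearest_label` and `scale_feature` are hypothetical helpers. It shows one case where multiplying the height coordinate by 100 changes the 1NN prediction, because the scaled feature then dominates the Euclidean distance.

```python
import math


def nearest_label(train, query):
    """1NN prediction under Euclidean distance."""
    return min(train, key=lambda pair: math.dist(pair[0], query))[1]


def scale_feature(point, index, factor):
    """Return a copy of `point` with coordinate `index` multiplied by `factor`."""
    scaled = list(point)
    scaled[index] *= factor
    return tuple(scaled)


# Hypothetical (width, height) data.
TRAIN = [((6.0, 9.0), "lemon"), ((7.5, 8.1), "orange")]
QUERY = (6.3, 8.2)

print(nearest_label(TRAIN, QUERY))  # lemon

# Scale the height (index 1) by 100 for the training points and the query.
scaled_train = [(scale_feature(x, 1, 100), y) for x, y in TRAIN]
scaled_query = scale_feature(QUERY, 1, 100)
print(nearest_label(scaled_train, scaled_query))  # orange
```

Before scaling, the width difference decides the outcome; after scaling, the height difference is all that matters, which is one reason features are often normalized before using kNN.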

An example
“Texture synthesis” [Efros & Leung, ICCV 99]

An example: Synthesizing one pixel
[Figure: input image and synthesized image, with pixel p marked]
‣ What is [formula missing from the extracted slide]?
‣ Find all the windows in the image that match the neighborhood
‣ To synthesize x
➡ pick one matching window at random
➡ assign x to be the center pixel of that window
‣ An exact match might not be present, so find the best matches using Euclidean distance and randomly choose between them, preferring better matches with higher probability
Slide from Alyosha Efros, ICCV 1999
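The steps above can be sketched in plain Python for a tiny grayscale image. This is a simplified illustration, not the implementation from the paper: the `eps` tolerance that defines "best matches" and the `1 / (1 + d)` sampling weights are assumptions made here, and the function and variable names are hypothetical. Squared Euclidean distance is used, which ranks windows in the same order as Euclidean distance.

```python
import random


def synthesize_pixel(image, neighborhood, eps=0.1, rng=random):
    """Pick a value for the center of `neighborhood` using windows of `image`.

    image: 2-D list of gray values.
    neighborhood: square 2-D list of odd size; unknown entries
    (including the center) are None and are ignored in the distance.
    """
    size = len(neighborhood)
    half = size // 2
    candidates = []  # (squared distance, center value) for every full window
    for r in range(len(image) - size + 1):
        for c in range(len(image[0]) - size + 1):
            dist = sum(
                (image[r + i][c + j] - neighborhood[i][j]) ** 2
                for i in range(size)
                for j in range(size)
                if neighborhood[i][j] is not None
            )
            candidates.append((dist, image[r + half][c + half]))
    best = min(d for d, _ in candidates)
    # Assumed rule: keep windows within a factor (1 + eps) of the best match.
    good = [(d, v) for d, v in candidates if d <= (1 + eps) * best]
    # Assumed weighting: closer windows are sampled with higher probability.
    weights = [1.0 / (1.0 + d) for d, _ in good]
    return rng.choices([v for _, v in good], weights=weights, k=1)[0]


# Vertical stripes; the known part of the neighborhood reads 0, 1, 0 / 0, ?, ?
IMAGE = [[0, 1, 0, 1, 0] for _ in range(4)]
NEIGHBORHOOD = [
    [0, 1, 0],
    [0, None, None],
    [None, None, None],
]

print(synthesize_pixel(IMAGE, NEIGHBORHOOD))  # 1
```

In this example every exactly matching window has center value 1, so the result is deterministic; on real textures several different center values usually remain among the best matches and the random choice matters.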
