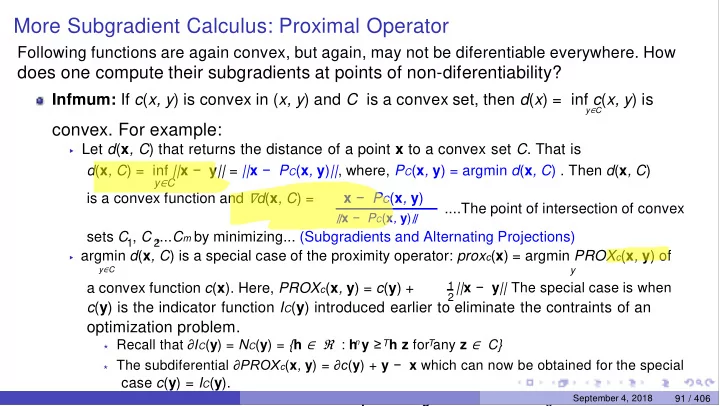

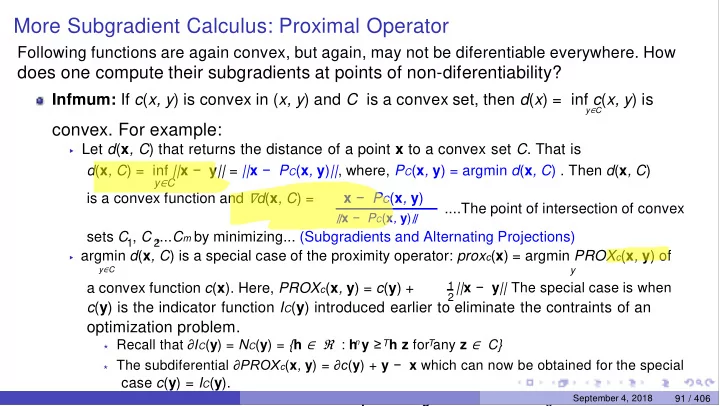

More Subgradient Calculus: Proximal Operator Following functions are again convex, but again, may not be diferentiable everywhere. How does one compute their subgradients at points of non-diferentiability? Infmum: If c ( x, y ) is convex in ( x, y ) and C is a convex set, then d ( x ) = inf c ( x, y ) is y ∈ C convex. For example: ▶ Let d ( x , C ) that returns the distance of a point x to a convex set C . That is d ( x , C ) = inf || x − y || = || x − P C ( x , y ) || , where, P C ( x , y ) = argmin d ( x , C ) . Then d ( x , C ) y ∈ C is a convex function and ∇ d ( x , C ) = x − P C ( x , y ) ....The point of intersection of convex ∥ x − P C ( x , y ) ∥ sets C , C ,... C m by minimizing... (Subgradients and Alternating Projections) 1 2 ▶ argmin d ( x , C ) is a special case of the proximity operator: prox c ( x ) = argmin PROX c ( x , y ) of y ∈ C y 2 || x − y || The special case is when a convex function c ( x ). Here, PROX c ( x , y ) = c ( y ) + 1 c ( y ) is the indicator function I C ( y ) introduced earlier to eliminate the contraints of an optimization problem. ⋆ Recall that ∂I C ( y ) = N C ( y ) = { h ∈ ℜ : h y ≥ h z for any z ∈ C} n T T ⋆ The subdiferential ∂PROX c ( x , y ) = ∂c ( y ) + y − x which can now be obtained for the special case c ( y ) = I C ( y ). ⋆ We will invoke this when we discuss the proximal gradient descent algorithm September 4, 2018 91 / 406

More Subgradient Calculus: Perspective (Advanced) Following functions are again convex, but again, may not be diferentiable everywhere. How does one compute their subgradients at points of non-diferentiability? Perspective Function: The perspective of a function f : ℜ → ℜ is the function n g : R × ℜ → ℜ n , g ( x, t ) = tf ( x / t ). Function g is convex if f is convex on { ( x, t ) |x / t ∈ d omf, t > 0 } . For example, d omg = ▶ The perspective of f ( x ) = x x is (quadratic-over-linear) function g ( x, t ) = x T x T and is convex. t ▶ The perspective of negative logarithm f ( x ) = − log x is the relative entropy function g ( x, t ) = t log t − t log x and is convex. relative to t September 4, 2018 92 / 406

Ilustrating the Why and How of (Sub)Gradient on Lasso September 4, 2018 93 / 406

Recap: Subgradients for the ‘Lasso’ Problem in Machine Learning Recall Lasso (min f ( x )) as an example to illustrate subgradients of afne composition: x 1 y is fixed 2 || y − x || + λ|| x || 2 f ( x ) = 1 The subgradients of f ( x ) are x - y + lambda s s.t: s_i = sign(x_i) if x_i != 0 o/w: 0 <= s_i <= 1 September 4, 2018 94 / 406

Recap: Subgradients for the ‘Lasso’ Problem in Machine Learning Recall Lasso (min f ( x )) as an example to illustrate subgradients of afne composition: x 1 2 || y − x || + λ|| x || 2 f ( x ) = 1 The subgradients of f ( x ) are h = x − y + λ s , where s i = sign ( x i ) if x i = 0 and s i ∈ [ − 1 , 1] if x i = 0. results from convex hull of union of subdi ff erentials Here we only see "HOW" to compute the subdi ff erential. September 4, 2018 94 / 406

Invoking "WHY" of subdi ff .. Subgradients in a Lasso sub-problem: Sufcient Condition Test We illustrate the sufcient condition again using a sub-problem in Lasso as an example. Consider the simplifed Lasso problem (which is a sub-problem in Lasso): min_x 1 2 || y − x || + λ|| x || 2 f ( x ) = 1 Recall the subgradients of f ( x ): h = x − y + λ s , where s i = sign ( x i ) if x i = 0 and s i ∈ [ − 1 , 1] if x i = 0. A solution to this problem is x_i = 0 if y_i is between -\lambda and \lambda and there exists an s_i between -1 and +1 for this case In fact this s_i = y_i / lambda September 4, 2018 95 / 406

Subgradients in a Lasso sub-problem: Sufcient Condition Test We illustrate the sufcient condition again using a sub-problem in Lasso as an example. Consider the simplifed Lasso problem (which is a sub-problem in Lasso): 1 2 || y − x || + λ|| x || 2 f ( x ) = 1 Recall the subgradients of f ( x ): h = x − y + λ s , where s i = sign ( x i ) if x i = 0 and s i ∈ [ − 1 , 1] if x i = 0. y i + λ ∗ y A solution to this problem is x = S ( y ), where S ( y ) is the soft-thresholding operator : λ λ i − λ if y i > λ if −λ ≤ y i ≤ λ S ( y ) = 0 λ if y i < −λ Now if x = S ( y ) then there exists a h ( x ) = 0. Why? If y i > λ , we have ∗ λ ∗ x − y i = −λ + λ · 1 = 0. The case of y i < λ is similar. If −λ ≤ y i ≤ λ , we have i ∗ y i y i x i − y i −y i + λ ( λ ) = 0. Here, s i = λ . = September 4, 2018 95 / 406

Proximal Operator and Sufcient Condition Test Recap: d ( x , C ) returns the distance of a point x to a convex set C . That is d ( x , C ) = inf || x − y || . Then d ( x , C ) is a convex function. 2 y ∈ C Recap: argmin || x − y || is a special case of the proximal operator: y ∈ C prox c ( x ) = argmin PROX c ( x , y ) of a convex function c ( x ). Here, y 2 || x − y || 1 2 PROX c ( x , y ) = c ( y ) + The special case is when c ( y ) is the indicator function I C ( y ) introduced earlier to eliminate the contraints of an optimization problem. ▶ Recall that ∂I C ( y ) = N C ( y ) = { h ∈ ℜ : h y ≥ h z for any z ∈ C} n T T ▶ For the special case c ( y ) = I C ( y ), the subdiferential ∂PROX c ( x , y ) = ∂c ( y ) + y − x = { h − x ∈ ℜ : h y ≥ h z for any z ∈ C} n T T that y in C ▶ As per sufcient condition for minimum for this special case, prox c ( x ) = that is closest to x September 4, 2018 96 / 406

Convexity by Restriction to line, (Sub)Gradients and Monotonicity September 4, 2018 97 / 406

Convexity by Restricting to Line A useful technique for verifying the convexity of a function is to investigate its convexity, by restricting the function to a line and checking for the convexity of a function of single variable. Theorem A function f : D → ℜ is (strictly) convex if and only if the function ϕ : D → ℜ defned below, ϕ is (strictly) convex in t for every x ∈ ℜ and for every h ∈ ℜ n n Direction vector or line We saw the connection with Here we see connection ϕ ( t ) = f ( x + t h ) R: convex di ff erentiable fn with direction, independent of di ff erentiability i ff directional deriv is convex { t| x + t h ∈ D . } with the domain of ϕ given by D = ϕ along every direction Thus, we have see that ∗ ∗ If a function has a local optimum at x , it as a local optimum along each component x i of x ∗ If a function is convex in x , it will be convex in each component x i of x September 4, 2018 98 / 406

Convexity by Restricting to Line (contd.) Proof: We will prove the necessity and sufciency of the convexity of ϕ for a convex function f . The proof for necessity and sufciency of the strict convexity of ϕ for a strictly convex f is very similar and is left as an exercise. Proof of Necessity: Assume that f is convex. And we need to prove that ϕ ( t ) = f ( x + t h ) is also convex. Let t , t ∈ D and θ ∈ [0 , 1]. Then, ( ) (for any direction h) ϕ 1 2 ϕ ( θt + (1 − θ ) t ) = f θ ( x + t h ) + (1 − θ )( x + t h ) 1 2 1 2 <= \theta f(...x_1) + (1-\theta) f(...x_2) September 4, 2018 99 / 406

Convexity by Restricting to Line (contd.) Proof: We will prove the necessity and sufciency of the convexity of ϕ for a convex function f . The proof for necessity and sufciency of the strict convexity of ϕ for a strictly convex f is very similar and is left as an exercise. Proof of Necessity: Assume that f is convex. And we need to prove that ϕ ( t ) = f ( x + t h ) is also convex. Let t , t ∈ D and θ ∈ [0 , 1]. Then, ( ) ϕ 1 2 ( ) ( ) ϕ ( θt + (1 − θ ) t ) = f θ ( x + t h ) + (1 − θ )( x + t h ) 1 2 1 2 ≤ θf ( x + t h ) + (1 − θ ) f ( x + t h ) = θϕ ( t ) + (1 − θ ) ϕ ( t ) (16) 1 2 1 2 Thus, ϕ is convex. September 4, 2018 99 / 406

Convexity by Restricting to Line (contd.) ( ) Proof of Sufciency: Assume that for every h ∈ ℜ and every x ∈ ℜ , ϕ ( t ) = f ( x + t h ) is n n convex. We will prove that f is convex. Let x , x ∈ D . Take, x = x and h = x − x . We 1 2 1 2 1 know that ϕ ( t ) = f x + t ( x − x ) is convex, with ϕ (1) = f ( x ) and ϕ (0) = f ( x ). 1 2 1 2 1 Therefore, for any θ ∈ [0 , 1] ( ) f θ x + (1 − θ ) x = ϕ ( θ ) 2 1 <= theta \phi(1) + (1-theta)\phi(0) = theta f(x2) + (1-theta) f(x1) September 4, 2018 100 / 406

Convexity by Restricting to Line (contd.) ( ) Proof of Sufciency: Assume that for every h ∈ ℜ and every x ∈ ℜ , ϕ ( t ) = f ( x + t h ) is n n convex. We will prove that f is convex. Let x , x ∈ D . Take, x = x and h = x − x . We 1 2 1 2 1 know that ϕ ( t ) = f x + t ( x − x ) is convex, with ϕ (1) = f ( x ) and ϕ (0) = f ( x ). 1 2 1 2 1 Therefore, for any θ ∈ [0 , 1] ( ) f θ x + (1 − θ ) x = ϕ ( θ ) 2 1 ≤ θϕ (1) + (1 − θ ) ϕ (0) ≤ θf ( x ) + (1 − θ ) f ( x ) (17) 2 1 This implies that f is convex. September 4, 2018 100 / 406

More on SubGradient kind of functions: Monotonicity A diferentiable function f : ℜ → ℜ is (strictly) convex, if and only if f ( x ) is (strictly) ′ increasing. Is there a closer analog for f : ℜ → ℜ ? n Ans: Yes. We need a notion of monotonicity of vectors (subgradients) September 4, 2018 101 / 406

Recommend

More recommend