Moments and independence

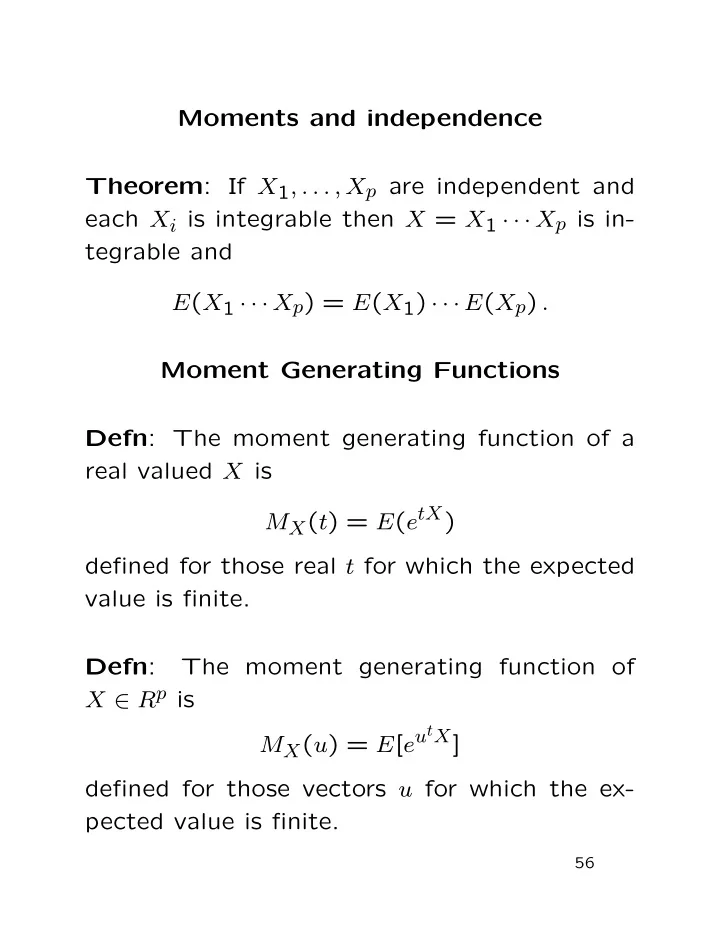


Theorem: If $X_1, \ldots, X_p$ are independent and each $X_i$ is integrable then $X = X_1 \cdots X_p$ is integrable and

$$E(X_1 \cdots X_p) = E(X_1) \cdots E(X_p).$$

Moment Generating Functions

Defn: The moment generating function of a real valued $X$ is

$$M_X(t) = E(e^{tX}),$$

defined for those real $t$ for which the expected value is finite.

Defn: The moment generating function of $X \in R^p$ is

$$M_X(u) = E[e^{u^t X}],$$

defined for those vectors $u$ for which the expected value is finite.
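The product rule for expectations can be checked exactly on a small discrete case. The sketch below is only an illustration: the two dice and the coin (taking values 1 and 2) are hypothetical choices, not part of the notes, and the joint expectation is computed by enumerating every outcome under independence.

```python
from fractions import Fraction
from itertools import product

# Hypothetical independent variables: two fair dice and a fair coin with values 1 and 2.
die = {v: Fraction(1, 6) for v in range(1, 7)}
coin = {1: Fraction(1, 2), 2: Fraction(1, 2)}
marginals = [die, die, coin]

def expectation(pmf):
    """E(X) for a discrete pmf given as {value: probability}."""
    return sum(v * p for v, p in pmf.items())

def expectation_of_product(pmfs):
    """E(X_1 ... X_p), summing over the joint pmf built as a product of marginals."""
    total = Fraction(0)
    for combo in product(*(pmf.items() for pmf in pmfs)):
        value = Fraction(1)
        prob = Fraction(1)
        for v, p in combo:
            value *= v
            prob *= p  # independence: joint probability is the product
        total += value * prob
    return total

lhs = expectation_of_product(marginals)
rhs = Fraction(1)
for pmf in marginals:
    rhs *= expectation(pmf)
print(lhs, rhs)  # both equal 147/8
```

Exact rational arithmetic is used so that the two sides agree exactly rather than up to rounding.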

Formal connection to moments:

$$M_X(t) = \sum_{k=0}^{\infty} E[(tX)^k]/k! = \sum_{k=0}^{\infty} \mu_k' t^k/k!,$$

where $\mu_k' = E(X^k)$. Sometimes can find power series expansion of $M_X$ and read off the moments of $X$ from the coefficients of $t^k/k!$.

Example: $X$ has density

$$f(u) = u^{\alpha-1} e^{-u} 1(u > 0)/\Gamma(\alpha).$$

MGF is

$$M_X(t) = \int_0^\infty e^{tu} u^{\alpha-1} e^{-u} \, du/\Gamma(\alpha).$$

For $t < 1$ substitute $v = u(1-t)$ to get

$$M_X(t) = (1-t)^{-\alpha}.$$
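The closed form for the Gamma mgf can be compared with a direct numerical evaluation of the defining integral. This is a rough sketch under stated assumptions: it uses a hand-written composite Simpson rule, truncates the integral at a hypothetical upper limit of 200, and assumes $\alpha \ge 1$ and $t$ well below 1 so that the integrand is bounded and the truncated tail is negligible.

```python
import math

def simpson(f, a, b, n):
    """Composite Simpson rule on [a, b] with n subintervals (n is made even)."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

def gamma_mgf_numeric(alpha, t, upper=200.0, n=40000):
    """Approximate E(e^{tX}) for the density u^(alpha-1) e^(-u) / Gamma(alpha).

    Assumes alpha >= 1 and t < 1; `upper` and `n` are illustrative choices.
    """
    norm = math.gamma(alpha)

    def integrand(u):
        # e^{tu} e^{-u} is combined into one exponential to avoid overflow.
        return u ** (alpha - 1) * math.exp((t - 1.0) * u) / norm

    return simpson(integrand, 0.0, upper, n)

def gamma_mgf_closed(alpha, t):
    """Closed form (1 - t)^(-alpha), valid for t < 1."""
    return (1.0 - t) ** (-alpha)

print(gamma_mgf_numeric(3.0, 0.5), gamma_mgf_closed(3.0, 0.5))
```

For $\alpha = 3$ and $t = 0.5$ the closed form is $0.5^{-3} = 8$, and the numerical value should agree to several decimal places.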

For $\alpha = 1$ get exponential distribution. Have power series expansion

$$M_X(t) = 1/(1-t) = \sum_{k=0}^{\infty} t^k.$$

Write $t^k = k! \, t^k/k!$. Coeff of $t^k/k!$ is

$$E(X^k) = k!.$$

Example: $Z \sim N(0,1)$.

$$M_Z(t) = \int_{-\infty}^{\infty} e^{tz - z^2/2} \, dz/\sqrt{2\pi} = \int_{-\infty}^{\infty} e^{-(z-t)^2/2 + t^2/2} \, dz/\sqrt{2\pi} = e^{t^2/2}$$

because rest of integrand is $N(t,1)$ density.
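Reading moments off a power series can be done mechanically: multiply the coefficient of $t^k$ by $k!$. The sketch below applies this to the two mgfs in these examples. The expansion $e^{t^2/2} = \sum_m t^{2m}/(2^m m!)$ is the standard exponential series and is an addition here, not something stated in the notes; the function names are hypothetical.

```python
from fractions import Fraction
from math import factorial

def exponential_mgf_coeff(k):
    """Coefficient of t^k in 1/(1 - t) = sum_k t^k."""
    return Fraction(1)

def normal_mgf_coeff(k):
    """Coefficient of t^k in exp(t^2/2) = sum_m t^(2m) / (2^m m!)."""
    if k % 2:
        return Fraction(0)  # only even powers of t appear
    m = k // 2
    return Fraction(1, 2 ** m * factorial(m))

def moment_from_coeff(coeff, k):
    """E(X^k) is the coefficient of t^k / k!, i.e. k! times the coefficient of t^k."""
    return factorial(k) * coeff(k)

exp_moments = [moment_from_coeff(exponential_mgf_coeff, k) for k in range(6)]
normal_moments = [moment_from_coeff(normal_mgf_coeff, k) for k in range(7)]
print([int(m) for m in exp_moments])     # [1, 1, 2, 6, 24, 120]
print([int(m) for m in normal_moments])  # [1, 0, 1, 0, 3, 0, 15]
```

The exponential moments reproduce $E(X^k) = k!$, and the odd moments of $Z$ vanish because the series for $e^{t^2/2}$ has no odd powers.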

Theorem: If $M$ is finite for all $t \in [-\epsilon, \epsilon]$ for some $\epsilon > 0$ then

1. Every moment of $X$ is finite.
2. $M$ is $C^\infty$ (in fact $M$ is analytic).
3. $\mu_k' = \frac{d^k}{dt^k} M_X(0)$.

Note: $C^\infty$ means has continuous derivatives of all orders. Analytic means has convergent power series expansion in neighbourhood of each $t \in (-\epsilon, \epsilon)$.

Theorem: Suppose $X$ and $Y$ have mgfs $M_X$ and $M_Y$ which are finite for all $t \in [-\epsilon, \epsilon]$. If $M_X(t) = M_Y(t)$ for all $t \in [-\epsilon, \epsilon]$ then $X$ and $Y$ have the same distribution.

The proofs, and many other facts about mgfs, rely on techniques of complex variables.
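The derivative formula for moments can be illustrated numerically with central finite differences at $t = 0$. This sketch assumes the Gamma mgf $(1-t)^{-\alpha}$ with the hypothetical choice $\alpha = 2$, for which the first two moments are $\alpha = 2$ and $\alpha(\alpha+1) = 6$; the step size `h` is an arbitrary illustrative value.

```python
def mgf_gamma(t, alpha=2.0):
    """Gamma mgf (1 - t)^(-alpha), finite for t < 1."""
    return (1.0 - t) ** (-alpha)

def first_derivative_at_zero(M, h=1e-3):
    """Central difference approximation to M'(0)."""
    return (M(h) - M(-h)) / (2 * h)

def second_derivative_at_zero(M, h=1e-3):
    """Central difference approximation to M''(0)."""
    return (M(h) - 2 * M(0.0) + M(-h)) / (h * h)

m1 = first_derivative_at_zero(mgf_gamma)   # approximates E(X) = 2
m2 = second_derivative_at_zero(mgf_gamma)  # approximates E(X^2) = 6
print(m1, m2)
```

Finite differences only approximate the derivatives, so the printed values agree with 2 and 6 up to a small discretisation error.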

MGFs and Sums

If $X_1, \ldots, X_p$ are independent and $Y = \sum X_i$ then the moment generating function of $Y$ is the product of those of the individual $X_i$:

$$E(e^{tY}) = \prod_i E(e^{tX_i})$$

or $M_Y = \prod M_{X_i}$.

Note: also true for multivariate $X_i$.

Example: If $X_i \sim N(\mu_i, \sigma_i^2)$ then

$$M_{X_i}(t) = E\left[e^{t(\sigma_i Z_i + \mu_i)}\right] = e^{t\mu_i} M_{Z_i}(t\sigma_i) = e^{t\mu_i} e^{t^2\sigma_i^2/2} = e^{\sigma_i^2 t^2/2 + t\mu_i}.$$

Moment generating function for $Y = \sum X_i$ is

$$M_Y(t) = \prod \exp\{\sigma_i^2 t^2/2 + t\mu_i\} = \exp\left\{\sum \sigma_i^2 t^2/2 + \sum \mu_i t\right\}$$

which is mgf of $N(\sum \mu_i, \sum \sigma_i^2)$.
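The normal example can be checked by evaluating both sides at a few values of $t$. In this sketch the parameter list `params` is a hypothetical set of $(\mu_i, \sigma_i)$ pairs chosen only for illustration.

```python
import math

def normal_mgf(t, mu, sigma):
    """mgf of N(mu, sigma^2): exp(sigma^2 t^2 / 2 + t mu)."""
    return math.exp(sigma ** 2 * t ** 2 / 2 + t * mu)

# Hypothetical (mu_i, sigma_i) pairs for independent normal X_i.
params = [(1.0, 0.5), (-2.0, 1.5), (0.3, 2.0)]

def product_of_mgfs(t):
    """Product over i of M_{X_i}(t)."""
    return math.prod(normal_mgf(t, mu, sigma) for mu, sigma in params)

def mgf_of_sum(t):
    """mgf of N(sum mu_i, sum sigma_i^2) evaluated at t."""
    mu_total = sum(mu for mu, _ in params)
    var_total = sum(sigma ** 2 for _, sigma in params)
    return normal_mgf(t, mu_total, math.sqrt(var_total))

for t in (-1.0, -0.2, 0.0, 0.7):
    print(t, product_of_mgfs(t), mgf_of_sum(t))
```

The two columns agree up to floating point rounding, which is the statement $M_Y = \prod M_{X_i}$ for this particular choice of parameters.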

Example: Now suppose $Z_1, \ldots, Z_\nu$ independent $N(0,1)$ rvs. By definition: $S_\nu = \sum_1^\nu Z_i^2$ has $\chi^2_\nu$ distribution. It is easy to check $S_1 = Z_1^2$ has density

$$(u/2)^{-1/2} e^{-u/2}/(2\sqrt{\pi})$$

and then the mgf of $S_1$ is

$$(1-2t)^{-1/2}.$$

It follows that

$$M_{S_\nu}(t) = (1-2t)^{-\nu/2}$$

which is mgf of Gamma$(\nu/2, 2)$ rv. So: $\chi^2_\nu$ distribution has Gamma$(\nu/2, 2)$ density:

$$(u/2)^{(\nu-2)/2} e^{-u/2}/(2\Gamma(\nu/2)).$$
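The mgf of $S_1 = Z_1^2$ can be checked numerically by integrating $e^{tz^2}$ against the standard normal density, which avoids the singularity of the $S_1$ density at 0. The sketch assumes $t < 1/2$, a hand-written Simpson rule, and a hypothetical truncation of the integral to $[-40, 40]$.

```python
import math

def simpson(f, a, b, n):
    """Composite Simpson rule on [a, b] with n subintervals (n is made even)."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

def chi2_1_mgf_numeric(t, half_width=40.0, n=40000):
    """Approximate E[exp(t Z^2)] for Z ~ N(0, 1); assumes t < 1/2."""
    const = 1.0 / math.sqrt(2.0 * math.pi)

    def integrand(z):
        # exp(t z^2) * exp(-z^2 / 2) combined into a single exponential.
        return const * math.exp(-(1.0 - 2.0 * t) * z * z / 2.0)

    return simpson(integrand, -half_width, half_width, n)

def chi2_mgf_closed(t, nu):
    """Closed form (1 - 2t)^(-nu/2), valid for t < 1/2."""
    return (1.0 - 2.0 * t) ** (-nu / 2.0)

m1 = chi2_1_mgf_numeric(0.2)
print(m1, chi2_mgf_closed(0.2, 1))
print(m1 ** 5, chi2_mgf_closed(0.2, 5))  # product of 5 independent copies
```

Raising the numerical mgf of $S_1$ to the power $\nu$ mirrors the product rule for sums of independent variables used to get $M_{S_\nu}$.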

Example: The Cauchy density is

$$\frac{1}{\pi(1+x^2)};$$

corresponding moment generating function is

$$M(t) = \int_{-\infty}^{\infty} \frac{e^{tx}}{\pi(1+x^2)} \, dx$$

which is $+\infty$ except for $t = 0$ where we get 1.

Every $t$ distribution has exactly same mgf. So: can't use mgf to distinguish such distributions.

Problem: these distributions do not have infinitely many finite moments.

So: in STAT 801, develop substitute for mgf which is defined for every distribution, namely, the characteristic function.
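The divergence of the Cauchy mgf can be seen numerically by truncating the integral to $[-A, A]$ and letting $A$ grow. This is only a sketch: the value $t = 0.1$, the cutoffs, and the hand-written Simpson rule are illustrative assumptions, and a growing truncated integral suggests divergence rather than proving it.

```python
import math

def simpson(f, a, b, n):
    """Composite Simpson rule on [a, b] with n subintervals (n is made even)."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

def truncated_cauchy_mgf(t, A, n=20000):
    """Integral of e^{tx} / (pi (1 + x^2)) over [-A, A]."""
    def integrand(x):
        return math.exp(t * x) / (math.pi * (1.0 + x * x))
    return simpson(integrand, -A, A, n)

cutoffs = (50.0, 100.0, 200.0, 400.0)
values = [truncated_cauchy_mgf(0.1, A) for A in cutoffs]
for A, v in zip(cutoffs, values):
    print(A, v)  # grows without bound as A increases

# At t = 0 the truncated integral is (2/pi) atan(A), which tends to 1.
print(truncated_cauchy_mgf(0.0, 50.0), 2.0 / math.pi * math.atan(50.0))
```

For $t \ne 0$ the factor $e^{tx}$ eventually dominates the $1/x^2$ decay on one side, so the truncated values keep increasing, while at $t = 0$ they settle near 1.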
