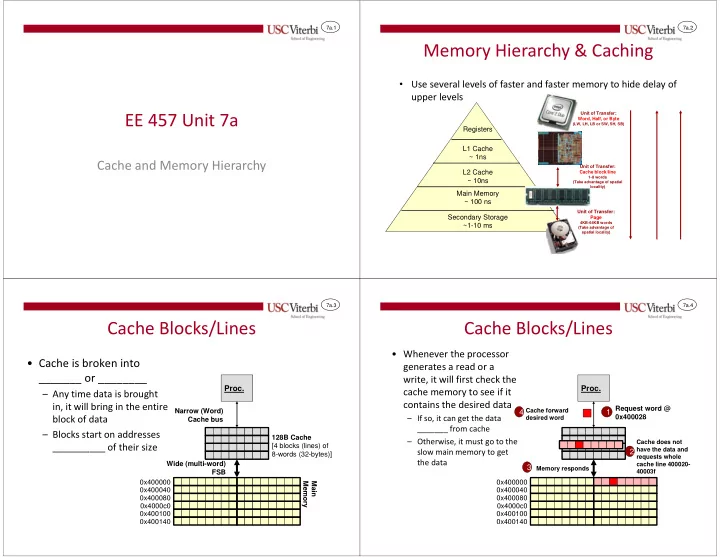

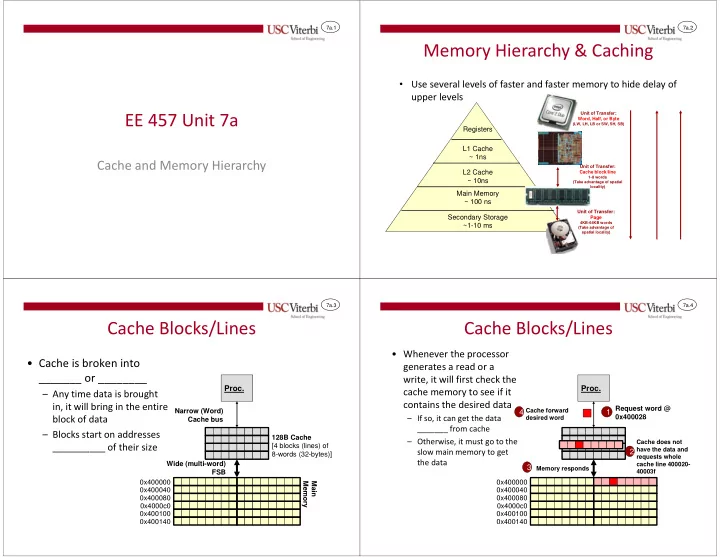

7a.1 EE 457 Unit 7a: Cache and Memory Hierarchy

7a.2 Memory Hierarchy & Caching
• Use several levels of progressively faster memory to hide the delay of the slower, larger levels
• Levels and approximate access times:
  – Registers
  – L1 Cache: ~1 ns
  – L2 Cache: ~10 ns
  – Main Memory: ~100 ns
  – Secondary Storage: ~1-10 ms
• Unit of transfer between levels:
  – Registers and cache: word, half, or byte (LW, LH, LB or SW, SH, SB)
  – Cache and main memory: cache block/line of 1-8 words (takes advantage of spatial locality)
  – Main memory and secondary storage: page of 4KB-64KB (takes advantage of spatial locality)

7a.3 Cache Blocks/Lines
• Whenever the processor generates a read or a write, it will first check the cache memory to see if it contains the desired data
  – If so, it can get the data _______ from cache
  – Otherwise, it must go to the slow main memory to get the data

7a.4 Cache Blocks/Lines
• Cache is broken into blocks or lines
  – Any time data is brought in, it will bring in the entire block of data
  – Blocks start on addresses that are multiples of their size

[Figure, slides 7a.3-7a.4: Proc., a 128B cache (4 blocks (lines) of 8 words (32 bytes)), and main memory (addresses 0x400000 to 0x400140 in steps of 0x40), joined by a narrow (word) cache bus and a wide (multi-word) FSB. Steps: (1) processor requests word @ 0x400028; (2) cache does not have the data and requests the whole cache line 400020-40003f; (3) memory responds; (4) cache forwards the desired word.]
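The block-alignment rule and the figure's request for the word at 0x400028 can be checked with a few lines of arithmetic. This is a minimal sketch, assuming 32-byte blocks (8 words of 4 bytes) as in the figure; the function name `block_range` is hypothetical and not part of the slides.

```python
BLOCK_BYTES = 32  # 8 words x 4 bytes, the block size shown in the figure


def block_range(addr, block_bytes=BLOCK_BYTES):
    """Return (first, last) byte address of the block containing addr.

    Assumes block_bytes is a power of two, so clearing the low
    log2(block_bytes) bits yields a base that is a multiple of the size.
    """
    base = addr & ~(block_bytes - 1)
    return base, base + block_bytes - 1


first, last = block_range(0x400028)
print(hex(first), hex(last))  # 0x400020 0x40003f
```

The result matches the cache line 400020-40003f that the cache requests in step 2 of the figure.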

7a.5 Cache & Virtual Memory
• Exploits the Principle of Locality
  – Allows us to implement a hierarchy of memories: cache, MM, secondary storage
  – Temporal Locality: If an item is referenced, it will tend to be referenced again soon
    • Examples: ________, ___________________, setting a variable and then reusing it many times
  – Spatial Locality: If an item is referenced, items whose addresses are nearby will tend to be referenced soon
    • Examples: ___________ and ______________

7a.6 Cache Definitions
• Cache hit = Desired data is in cache
• Cache miss = Desired data is not present in cache
• When a cache miss occurs, a new block is brought from MM into cache
  – _____________: First load the word requested by the CPU and forward it to the CPU, while continuing to bring in the remainder of the block
  – _______________: First load the entire block into cache, then forward the requested word to the CPU
• On a write miss we may choose not to bring in the MM block, since writes exhibit less locality of reference than reads
• When the CPU writes to cache, we may use one of two policies:
  – Write-through: Every write updates both the cache and MM copies to keep them in sync (i.e. coherent)
  – Write-back: Let the CPU keep writing to cache at a fast rate without updating MM. Only copy the block back to MM when it needs to be replaced or flushed

7a.7 Write Back Cache
• On write-hit
  – Update only the cached copy
  – Processor can continue quickly (e.g. 10 ns)
  – Later when the block is evicted, the entire block is written back (because bookkeeping is kept on a per-block basis)
  – Ex: 8 words @ 100 ns per word for writing mem. = 800 ns

[Figure: Proc., cache, and main memory (addresses 0x400000 to 0x400140). Steps: (1) write word (hit); (2) cache updates value and signals processor to continue; on eviction, entire block written back.]

7a.8 Write Through Cache
• On write-hit
  – Update both the cached and main memory versions
  – Processor may have to wait for memory to complete (e.g. 100 ns)
  – Later when the block is evicted, no writeback is needed

[Figure: Proc., cache, and main memory (addresses 0x400000 to 0x400140). Steps: (1) write word (hit); (2) cache and memory copies are updated.]
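The trade-off between the two write policies can be made concrete with the slides' own figures (10 ns per cache write, 100 ns per main-memory word, 8-word blocks). The following is a rough sketch under simplifying assumptions that are not in the slides: every write hits the same block, the processor waits the full memory time on each write-through, and there is no write buffer. The function names are hypothetical.

```python
HIT_NS = 10        # cache write time, from the write-back slide
MEM_WORD_NS = 100  # main-memory write time per word, from the slides
BLOCK_WORDS = 8    # words per block in the slides' example


def write_through_ns(n_writes):
    """Each write hit also updates main memory, so it costs one memory write."""
    return n_writes * MEM_WORD_NS


def write_back_ns(n_writes):
    """Write hits cost only cache time; the whole block is written back once on eviction."""
    return n_writes * HIT_NS + BLOCK_WORDS * MEM_WORD_NS


for n in (1, 8, 9, 20):
    print(n, write_through_ns(n), write_back_ns(n))
# 1 100 810
# 8 800 880
# 9 900 890
# 20 2000 1000
```

Under this simplified model, write-back only pays off once a block receives 9 or more writes before eviction, which is why it relies on writes showing good temporal locality.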

7a.9 Cache Definitions
• Mapping Function: The correspondence between MM blocks and cache block frames is specified by means of a mapping function
  – ____
  – ____
  – ____
• Replacement Algorithm: How do we decide which of the current cache blocks is removed to create space for a new block
  – ____
  – ______________

7a.10 Fully Associative Cache Example
• Cache Mapping Example:
  – Fully Associative
  – MM = 128 words
  – Cache Size = 32 words
  – Block Size = 8 words
• Fully Associative mapping allows a MM block to be placed in (associated with) any cache block
• To determine hit/miss we have to search

[Figure: processor die containing the processor core logic and a 4-frame cache (V bit, Tag, Data words 000-111 per frame) with one tag comparator (=) per frame; main memory blocks 0000 to 1111, each holding words 000-111. CPU address split as Tag | Word = 1000 | 111. Label: "Word data corresponding to address 1111000-1111111".]

7a.11 Implementation Info
• Tags: Associated with each cache block frame, we have a TAG to identify its ________________________
• Valid bit: An additional bit is maintained to indicate whether the TAG is valid (meaning it contains the TAG of an actual block)
  – Initially when you turn power on, the cache is empty and all valid bits are set to '0' (invalid)
• Dirty bit: This bit associated with the TAG indicates that the block was modified (got dirtied) during its stay in the cache and thus needs to be written back to MM (used only with the write-back cache policy)

7a.12 Fully Associative Hit Logic
• Cache Mapping Example:
  – Fully Associative, MM = 128 words (2^7), Cache Size = 32 (2^5) words, Block Size = 8 (2^3) words
• Number of blocks in MM = 2^7 / 2^3 = 2^4 = 16
• Block ID = 4 bits
• Number of Cache Block Frames = 2^5 / 2^3 = 2^2 = 4
  – Store 4 Tags of 4-bits + 1 valid bit
  – Need 4 comparators each of 5 bits
• CAM (Content Addressable Memory) is a special memory structure to store the tag+valid bits that takes the place of these comparators but is too expensive
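The fully associative hit check for the 128-word example can be sketched in software: the 7-bit address splits into a 4-bit tag and a 3-bit word offset, and the tag is compared against every valid frame. This is only an illustration; hardware compares all frames in parallel, whereas the loop below checks them one by one. The frame contents and the names `split_address` and `lookup` are hypothetical.

```python
WORD_BITS = 3  # 8 words per block
TAG_BITS = 4   # 16 main-memory blocks, so the tag is the full block ID


def split_address(addr):
    """Split a 7-bit word address into (tag, word offset)."""
    return addr >> WORD_BITS, addr & ((1 << WORD_BITS) - 1)


def lookup(frames, addr):
    """Return (frame index or None, word offset) for a fully associative cache.

    frames is a list of (valid, tag) pairs, one per cache block frame.
    Any frame may hold any block, so every valid frame must be checked.
    """
    tag, word = split_address(addr)
    for index, (valid, frame_tag) in enumerate(frames):
        if valid and frame_tag == tag:
            return index, word
    return None, word


# Illustrative cache state (assumed values): frame 1 is invalid.
frames = [(1, 0b1100), (0, 0b1100), (1, 0b0100), (1, 0b1001)]

print(lookup(frames, 0b1001_010))  # (3, 2): hit in frame 3, word 2
print(lookup(frames, 0b1000_111))  # (None, 7): miss, tag 1000 is not cached
```

The need to compare against every frame is what drives the comparator count in the hit logic: one comparator per frame, each as wide as the tag plus the valid bit.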

7a.13 Fully Associative Does Not Scale
• If the 80386 used Fully Associative Cache Mapping:
  – Fully Associative, MM = 4GB (2^32), Cache Size = 64KB (2^16), Block Size = 16 (2^4) bytes = 4 words
• Number of blocks in MM = 2^32 / 2^4 = 2^28
• Block ID = 28 bits
• Number of Cache Block Frames = 2^16 / 2^4 = 2^12 = 4096
  – Store 4096 Tags of 28-bits + 1 valid bit
  – Need 4096 Comparators each of 29 bits
  – ________________________

7a.14 Fully Associative Address Scheme
• A[1:0] unused => /BE3…/BE0
• Word bits = _____________
• Tag = Remaining bits

7a.15 Direct Mapping Address Usage
• Cache Mapping Example:
  – Direct Mapping, MM = 128 words (2^7), Cache Size = 32 (2^5) words, Block Size = 8 (2^3) words
• Number of blocks in MM = 2^7 / 2^3 = 2^4
• Block ID = 4 bits
• Number of Cache Block Frames = 2^5 / 2^3 = 2^2 = 4
  – Number of "colors" => 2 Number of Block field Bits
• _______________ = 4 Groups of blocks __________ that each map to different cache blocks but share the same tag
  – 2 Tag Bits

[Figure: address fields Tag (2 bits) | CBLK (2 bits) | Word (3 bits); Block ID = Tag + CBLK = 4 bits.]

7a.16 Direct Mapping Cache Example
• Limit each MM block to one location in cache
• Cache Mapping Example:
  – Direct Mapping
  – MM = 128 words
  – Cache Size = 32 words
  – Block Size = 8 words
• Each MM block i maps to Cache frame i mod N
  – N = # of cache frames
  – Tag identifies which group that colored block belongs to

[Figure: processor die containing the processor core logic and a 4-frame cache (V bit, Tag, Data words 000-111 per frame); main memory blocks labeled Tag CBLK from 00 00 to 11 11, each holding words 000-111. CPU address split as Tag | CBLK | Word = 10 | 00 | 111.]
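The direct-mapped address breakdown for the 128-word example (2 tag bits, 2 cache-block bits, 3 word bits) can be sketched as follows. This is an illustrative sketch, not the slides' own code; `split_direct` and its parameter names are hypothetical. It also checks that taking the CBLK field is the same as computing block ID mod N when N is a power of two.

```python
def split_direct(addr, word_bits=3, cblk_bits=2):
    """Split a word address into (tag, cblk, word) for a direct-mapped cache.

    Defaults match the 128-word example: 8 words per block and 4 cache
    block frames. The block ID is the address without the word offset;
    its low cblk_bits select the frame and the remaining bits form the tag.
    """
    word = addr & ((1 << word_bits) - 1)
    block_id = addr >> word_bits
    cblk = block_id & ((1 << cblk_bits) - 1)
    tag = block_id >> cblk_bits
    return tag, cblk, word


# CPU address 10 00 111 from the example figure
print(split_direct(0b10_00_111))  # (2, 0, 7)

# Block i maps to frame i mod N (N = 4 frames): blocks 0, 4, 8, 12 share frame 0
N = 4
print([i for i in range(16) if i % N == 0])  # [0, 4, 8, 12]
```

Blocks 0, 4, 8, and 12 all compete for frame 0 and are told apart only by their 2-bit tags (00, 01, 10, 11), so only one tag comparison is needed per access instead of one per frame.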
