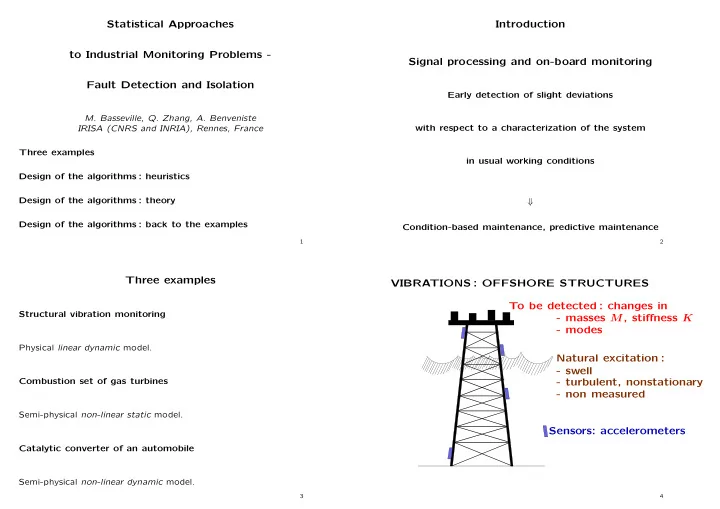

Statistical Approaches to Industrial Monitoring Problems - Fault Detection and Isolation

M. Basseville, Q. Zhang, A. Benveniste

IRISA (CNRS and INRIA), Rennes, France

- Three examples
- Design of the algorithms: heuristics
- Design of the algorithms: theory
- Design of the algorithms: back to the examples

Introduction - Signal processing and on-board monitoring

Early detection of slight deviations with respect to a characterization of the system in usual working conditions

⇓

Condition-based maintenance, predictive maintenance

Three examples

- Structural vibration monitoring: physical linear dynamic model.
- Combustion set of gas turbines: semi-physical non-linear static model.
- Catalytic converter of an automobile: semi-physical non-linear dynamic model.

VIBRATIONS: OFFSHORE STRUCTURES

To be detected: changes in
- masses M, stiffness K
- modes

Natural excitation:
- swell
- turbulent, nonstationary
- non measured

Sensors: accelerometers

VIBRATIONS: ROTATING MACHINES

To be detected: changes in
- masses M, stiffness K
- modes

Ambient excitation:
- load unbalancing, frictions, steam
- non stationary, non measured

Sensors: accelerometers (on the bearings!)

VIBRATIONS: MONITORING SCHEME

Block diagram: a nonstationary excitation V (not measured) drives the structure (physical, linear, dynamic model), whose output Y feeds the detection and diagnostics block.

- Detection, diagnostics: OK? Changed modes? Which M, K failed?
- Test: $\zeta \neq 0$?

ζ shows if the model still fits the data.

COMBUSTION: GAS TURBINES

Diagram: compressor, combustion chambers, turbine.

Sensors: thermocouples at the exhaust

To be detected: changes in burners and turbine

COMBUSTION: MONITORING SCHEME

Block diagram: U and V enter the combustion chambers and turbine (semi-physical, nonlinear, static model), whose output Y feeds the detection and diagnostics block.

- Detection, diagnostics: OK? Did the turbine fail? Which chamber?
- Test: $\zeta \neq 0$?

ζ shows if the model still fits the data.

AUTOMOBILE: DEPOLLUTION SYSTEM

To be diagnosed (OBD2 norm): catalytic converter, front λ oxygen sensors

Diagram: engine and control, catalytic converter, exhaust.

Sensors: λ oxygen gauges

DEPOLLUTION: MONITORING SCHEME

Block diagram, with signals V and Z and measured outputs $Y_1$, $Y_2$ from the λ sensors:
- Controlled engine (simplified model)
- Catalytic converter (semi-physical, nonlinear, dynamic model)
- Oxygen sensor (semi-physical, nonlinear, dynamic model)

- Detection, diagnostics: OK? Converter? Sensor?
- Test: $\zeta \neq 0$?

On-Board Detection: don't re-identify!

Compare the NEW DATA with the REFERENCE model through a data-to-model distance (model validation), rather than identifying a NEW model and comparing it with the REFERENCE through a model-to-model distance.

Design of the algorithms: heuristics

- Modeling and identification
- Monitoring
- Reduction to a universal problem

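Modeling and identification

Monitored system with signals U, Y (observed) and V, Z (not observed).

$$Y = M(\theta, U), \qquad \theta : \text{parameter}$$

$$\theta_0 = \arg\min_\theta \sum_k \left( Y_k - M(\theta, U_k) \right)^2$$

or:

$$\theta_0 : \ \sum_k C\left( Y_k, M(\theta_0, U_k) \right) = 0$$

Monitoring

Same monitored system, with reference parameter $\theta_0$: $Y = M(\theta_0, U)$.

$$\zeta(\theta_0, U, Y) \neq 0 \ ?$$

$$\zeta(\theta, U, Y) \stackrel{\Delta}{=} \frac{\partial}{\partial\theta} \sum_k \left( Y_k - M(\theta, U_k) \right)^2$$

or:

$$\zeta(\theta, U, Y) \stackrel{\Delta}{=} \sum_k C\left( Y_k, M(\theta, U_k) \right)$$

Reduction to a universal problem

$$\zeta_N \stackrel{\Delta}{=} \zeta\left(\theta_0, Y_1^N\right) \neq 0 \ ?$$

Local approach: test $\theta = \theta_0$ against $\theta = \theta_0 + \delta\theta/\sqrt{N}$.

$$H_0 : \ \zeta_N \sim \mathcal{N}\left(0, \Sigma(\theta_0)\right) \qquad\qquad H_1 : \ \zeta_N \sim \mathcal{N}\left(M(\theta_0)\,\delta\theta, \Sigma(\theta_0)\right)$$

$\chi^2$ test in $\zeta_N$.

Noises and uncertainty on $\theta_0$ taken into account.

Design of the algorithms: theory

- Local approach: likelihood
- Local approach: other estimating/monitoring functions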
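The least-squares monitoring function and the $\chi^2$ test in $\zeta_N$ can be sketched numerically as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the saturating model `model`, its gradient `model_grad`, the noise level, and the sample-covariance estimate of $\Sigma(\theta_0)$ (which assumes independent samples) are all hypothetical choices. Because the parameter here has as many components as $\zeta_N$, the test reduces to the quadratic form $\zeta_N^T \Sigma^{-1} \zeta_N$.

```python
import numpy as np

def model(theta, u):
    # Hypothetical static nonlinear model M(theta, U): a saturating gain curve.
    return theta[0] * (1.0 - np.exp(-theta[1] * u))

def model_grad(theta, u):
    # Gradient of M with respect to theta, one row per sample: shape (N, 2).
    e = np.exp(-theta[1] * u)
    return np.column_stack([1.0 - e, theta[0] * u * e])

def chi2_monitor(theta0, u, y):
    """Return (zeta_N, chi2 statistic) for new data (u, y) against the reference theta0."""
    n = len(y)
    resid = y - model(theta0, u)
    # Per-sample gradient of the squared residual:
    # d/dtheta (Y_k - M)^2 = -2 * dM/dtheta * (Y_k - M).
    h = -2.0 * model_grad(theta0, u) * resid[:, None]
    zeta = h.sum(axis=0) / np.sqrt(n)
    # Assumption: independent samples, so Sigma is estimated by the sample covariance of h.
    sigma = np.cov(h, rowvar=False)
    stat = float(zeta @ np.linalg.solve(sigma, zeta))
    return zeta, stat

rng = np.random.default_rng(0)
theta0 = np.array([2.0, 1.5])            # reference parameter, assumed identified off-line
u = rng.uniform(0.0, 3.0, size=2000)
noise = 0.1 * rng.standard_normal(2000)

y_ref = model(theta0, u) + noise                    # system unchanged
y_chg = model(np.array([2.1, 1.5]), u) + noise      # 5 % change in the gain

threshold = -2.0 * np.log(1e-3)  # 0.999 quantile of a chi2 law with 2 degrees of freedom
zeta_ref, stat_ref = chi2_monitor(theta0, u, y_ref)
zeta_chg, stat_chg = chi2_monitor(theta0, u, y_chg)
print("reference data: alarm =", stat_ref > threshold)
print("changed data:   alarm =", stat_chg > threshold)
```

Note that the new data are only run through the reference model; no second identification is performed.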

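Local approach: likelihood

Log-likelihood function

$$\ln p_\theta\left(Y_1^N\right) = \sum_{k=1}^{N} \ln p_\theta\left(Y_k \mid Y_1^{k-1}\right)$$

Efficient score

$$\zeta_N(\theta_0) \stackrel{\Delta}{=} \frac{1}{\sqrt{N}} \left. \frac{\partial \ln p_\theta\left(Y_1^N\right)}{\partial\theta} \right|_{\theta=\theta_0} = \frac{1}{\sqrt{N}} \sum_{k=1}^{N} \ldots$$

Fisher information matrix

$$I_N(\theta) \stackrel{\Delta}{=} \operatorname{cov} \zeta_N(\theta) = -\frac{1}{N}\, E_\theta\, \frac{\partial^2 \ln p_\theta\left(Y_1^N\right)}{\partial\theta^2}, \qquad I(\theta) \stackrel{\Delta}{=} \lim_{N\to\infty} I_N(\theta)$$

Second order Taylor expansion of the log-likelihood ratio

$$\theta = \theta_0 + \frac{\delta\theta}{\sqrt{N}}$$

$$S_N(\theta_0, \theta) \stackrel{\Delta}{=} \ln \frac{p_\theta\left(Y_1^N\right)}{p_{\theta_0}\left(Y_1^N\right)} \approx \delta\theta^T \zeta_N(\theta_0) - \frac{1}{2}\, \delta\theta^T I(\theta_0)\, \delta\theta$$

$$E_{\theta_0} S_N \approx -\frac{1}{2}\, \delta\theta^T I(\theta_0)\, \delta\theta$$

$$E_{\theta} S_N \approx +\frac{1}{2}\, \delta\theta^T I(\theta_0)\, \delta\theta \approx -E_{\theta_0} S_N$$

$$\operatorname{cov}_{\theta_0} S_N \approx \delta\theta^T I(\theta_0)\, \delta\theta \approx \operatorname{cov}_{\theta} S_N$$

First order Taylor expansion of the efficient score

$$\zeta_N(\theta) \approx \zeta_N(\theta_0) + \frac{1}{N} \left. \frac{\partial^2 \ln p_\theta\left(Y_1^N\right)}{\partial\theta^2} \right|_{\theta=\theta_0} \delta\theta$$

$$E_{\theta_0}\, \zeta_N(\theta) \approx -I(\theta_0)\, \delta\theta$$

Efficient score = ML estimating function:

$$E_{\theta_0}\, \zeta_N(\theta) = 0 \iff \theta = \theta_0$$

Example: Gaussian scalar AR process

$$Y_k = \sum_{i=1}^{p} a_i Y_{k-i} + E_k, \qquad \theta^T = (a_1 \ldots a_p)$$

$$\zeta_N(\theta) = \frac{1}{\sqrt{N}} \sum_{k=1}^{N} \frac{1}{\sigma^2}\, \mathcal{Y}^-_{k-1,p}\, \varepsilon_k(\theta)$$

$$I(\theta) = \frac{1}{\sigma^2}\, T_p$$

- Efficient score ζ: vector-valued function
- Innovation ε: scalar function

Caution: efficient score ≠ innovation!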
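The Gaussian AR example can be illustrated with a short numerical sketch. Several choices below are assumptions rather than part of the slides: the helper names `simulate_ar` and `ar_efficient_score`, the AR(2) coefficients, a known noise variance $\sigma^2 = 1$, the reading of $\mathcal{Y}^-_{k-1,p}$ as the vector $(Y_{k-1}, \ldots, Y_{k-p})^T$, and the replacement of $I(\theta_0)$ by its empirical counterpart.

```python
import numpy as np

def simulate_ar(a, n, rng, sigma=1.0, burn_in=200):
    """Simulate Y_k = sum_i a_i Y_{k-i} + E_k with Gaussian white noise E_k."""
    p = len(a)
    e = sigma * rng.standard_normal(n + burn_in)
    y = np.zeros(n + burn_in)
    for k in range(p, n + burn_in):
        # y[k - p:k][::-1] is (Y_{k-1}, ..., Y_{k-p}).
        y[k] = a @ y[k - p:k][::-1] + e[k]
    return y[burn_in:]

def ar_efficient_score(y, a, sigma2):
    """Efficient score zeta_N(theta), empirical Fisher information, and innovations."""
    p = len(a)
    # Row k holds the p past values (Y_{k-1}, ..., Y_{k-p}).
    past = np.column_stack([y[p - i - 1:len(y) - i - 1] for i in range(p)])
    eps = y[p:] - past @ a                        # scalar innovation eps_k(theta)
    n = len(eps)
    zeta = past.T @ eps / (sigma2 * np.sqrt(n))   # vector-valued efficient score
    fisher = past.T @ past / (sigma2 * n)         # empirical version of T_p / sigma^2
    return zeta, fisher, eps

rng = np.random.default_rng(1)
a0 = np.array([0.5, -0.3])                        # reference AR(2) parameter (hypothetical)
y_ref = simulate_ar(a0, 5000, rng)
y_chg = simulate_ar(np.array([0.7, -0.3]), 5000, rng)

for name, y in [("reference", y_ref), ("changed", y_chg)]:
    zeta, fisher, eps = ar_efficient_score(y, a0, sigma2=1.0)
    stat = float(zeta @ np.linalg.solve(fisher, zeta))
    print(name, "score:", zeta, "chi2 statistic:", stat)
```

The score is a vector of length p built from the products of past values with the innovation, whereas the innovation itself is a scalar sequence, which is the distinction the caution above points at.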

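Hypotheses testing - CLT

Locally Asymptotic Normal (LAN) family (Le Cam, 1960)

$$\theta_N = \theta_0 + \frac{\delta\theta}{\sqrt{N}}$$

$$S_N(\theta_0, \theta_N) \stackrel{\Delta}{=} \ln \frac{p_{\theta_N}\left(Y_1^N\right)}{p_{\theta_0}\left(Y_1^N\right)} \approx \delta\theta^T \zeta_N(\theta_0) - \frac{1}{2}\, \delta\theta^T I_N(\theta_0)\, \delta\theta + \alpha_N\left(\theta_0, Y_1^N, \delta\theta\right)$$

$$\zeta_N(\theta_0) \to \mathcal{N}\left(0, I(\theta_0)\right), \qquad \alpha_N \to 0 \ \text{a.s. under } H_0$$

Examples: i.i.d. variables, stationary Gaussian processes, stationary Markov processes.

CLT in LAN families

(i.i.d. variables, stationary Gaussian processes, stationary Markov processes)

$$S_N(\theta_0, \theta) \to \begin{cases} \mathcal{N}\left(-\frac{1}{2}\, \delta\theta^T I(\theta_0)\, \delta\theta,\ \delta\theta^T I(\theta_0)\, \delta\theta\right) & \text{under } P_{\theta_0} \\ \mathcal{N}\left(+\frac{1}{2}\, \delta\theta^T I(\theta_0)\, \delta\theta,\ \delta\theta^T I(\theta_0)\, \delta\theta\right) & \text{under } P_{\theta_0 + \delta\theta/\sqrt{N}} \end{cases}$$

$$\zeta_N(\theta_0) \to \begin{cases} \mathcal{N}\left(0,\ I(\theta_0)\right) & \text{under } P_{\theta_0} \\ \mathcal{N}\left(I(\theta_0)\, \delta\theta,\ I(\theta_0)\right) & \text{under } P_{\theta_0 + \delta\theta/\sqrt{N}} \end{cases}$$

$\zeta_N$ is asymptotically a sufficient statistic.

Asymptotically optimum tests for composite hypotheses

Asymptotically equivalent problems:

(1): $\mathcal{P} = \{P_\theta\}_{\theta \in \Theta \subset \mathbb{R}^\ell}$ LAN, observations $Y_1^N$, $N \to \infty$

$$H_0 = \{\theta \in \Theta_0\}, \quad H_1 = \{\theta \in \Theta_1\}, \quad \Theta_i = \theta_0 + \frac{\Gamma_i}{\sqrt{N}}$$

(2): $\zeta \sim \mathcal{N}\left(\Upsilon, I^{-1}(\theta_0)\right)$

$$H_0 = \{\Upsilon \in \Gamma_0\}, \quad H_1 = \{\Upsilon \in \Gamma_1\}$$

Asymptotically equivalent and UMP tests (Kushnir-Pinski, 1971; Nikiforov, 1982)

$O(1/N)$

$$\frac{\sup_{\theta \in \Theta_1} p_\theta\left(Y_1^N\right)}{\sup_{\theta \in \Theta_0} p_\theta\left(Y_1^N\right)} \geq \lambda$$

$$\frac{p_{\hat\theta}\left(Y_1^N\right)}{p_{\theta_0}\left(Y_1^N\right)} \geq \lambda$$

$$N\, (\hat\theta - \theta_0)^T I(\theta_0)\, (\hat\theta - \theta_0) \geq \lambda$$

$$\zeta_N^T(\theta_0)\, I^{-1}(\theta_0)\, \zeta_N(\theta_0) \geq \lambda$$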
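As a short worked step linking the last two test statistics, the following sketch uses only the Gaussian limits of $\zeta_N$ and the first-order expansion of the efficient score; the notation $\chi^2(\ell, \cdot)$ for a noncentral law with $\ell$ degrees of freedom is introduced here for illustration. Setting the score to zero at the ML estimate gives

$$0 = \zeta_N(\hat\theta) \approx \zeta_N(\theta_0) - I(\theta_0)\, \sqrt{N}\, (\hat\theta - \theta_0) \quad\Longrightarrow\quad \sqrt{N}\, (\hat\theta - \theta_0) \approx I^{-1}(\theta_0)\, \zeta_N(\theta_0),$$

so that

$$N\, (\hat\theta - \theta_0)^T I(\theta_0)\, (\hat\theta - \theta_0) \approx \zeta_N^T(\theta_0)\, I^{-1}(\theta_0)\, \zeta_N(\theta_0).$$

From the limits $\mathcal{N}(0, I(\theta_0))$ and $\mathcal{N}(I(\theta_0)\,\delta\theta, I(\theta_0))$ it then follows that

$$\zeta_N^T(\theta_0)\, I^{-1}(\theta_0)\, \zeta_N(\theta_0) \to \begin{cases} \chi^2(\ell) & \text{under } P_{\theta_0} \\ \chi^2\left(\ell,\ \delta\theta^T I(\theta_0)\, \delta\theta\right) & \text{under } P_{\theta_0 + \delta\theta/\sqrt{N}} \end{cases}$$

The threshold $\lambda$ can therefore be read from a central $\chi^2(\ell)$ quantile, and the detection power grows with the noncentrality $\delta\theta^T I(\theta_0)\, \delta\theta$.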

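Local approach: other estimating functions

Quasi-score

$$\zeta_N(\theta_0) \stackrel{\Delta}{=} \frac{1}{\sqrt{N}} \sum_{k=1}^{N} H(\theta_0, Y_k)$$

Estimating function

$$E_{\theta_0}\, H(\theta, Y_k) = 0 \iff \theta = \theta_0$$

Mean deviation

$$M(\theta_0) \stackrel{\Delta}{=} -E_{\theta_0} \left. \frac{\partial}{\partial\theta} H(\theta, Y_k) \right|_{\theta=\theta_0}$$

Computation of the mean deviation

$$\begin{aligned} M(\theta_0) &= -E_{\theta_0} \left. \frac{\partial}{\partial\theta} H(\theta, Y_k) \right|_{\theta=\theta_0} \\ &= -\left. \frac{\partial}{\partial\theta} E_{\theta_0}\, H(\theta, Y_k) \right|_{\theta=\theta_0} \\ &= +\left. \frac{\partial}{\partial\theta} E_{\theta}\, H(\theta_0, Y_k) \right|_{\theta=\theta_0} \\ &= \operatorname{cov}_{\theta_0}\left( \zeta_N(\theta_0),\ \zeta_N^{ML}(\theta_0) \right) \quad \text{(i.i.d. case)} \end{aligned}$$

First order Taylor expansion of a quasi-score

$$\theta = \theta_0 + \frac{\delta\theta}{\sqrt{N}}$$

$$\zeta_N(\theta) \approx \zeta_N(\theta_0) + \left( \frac{1}{N} \sum_{k=1}^{N} \left. \frac{\partial}{\partial\theta} H(\theta, Y_k) \right|_{\theta=\theta_0} \right) \delta\theta$$

Under $\theta_0$:
- by the CLT, $\zeta_N(\theta_0) \to \mathcal{N}\left(0, \Sigma(\theta_0)\right)$
- by the LLN, $\frac{1}{N} \sum_{k=1}^{N} \left. \frac{\partial}{\partial\theta} H(\theta, Y_k) \right|_{\theta=\theta_0} \to E_{\theta_0} \left. \frac{\partial}{\partial\theta} H(\theta, Y_k) \right|_{\theta=\theta_0} = -M(\theta_0)$

Estimation efficiency and covariance of estimating function

$$\sqrt{N}\, \left( \hat\theta_N - \theta_0 \right) \approx -\left( E_{\theta_0} \left. \frac{\partial}{\partial\theta} H(\theta, Y_k) \right|_{\theta=\theta_0} \right)^{-1} \zeta_N(\theta_0) = M(\theta_0)^{-1}\, \zeta_N(\theta_0)$$

Hence

$$\operatorname{cov}\left( \sqrt{N}\, \left( \hat\theta_N - \theta_0 \right) \right) = \left( M^T(\theta_0)\, \Sigma^{-1}(\theta_0)\, M(\theta_0) \right)^{-1}$$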
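The covariance formula for an estimating function can be checked on a toy case. The sketch below is an illustration under assumptions that are not in the slides: i.i.d. exponential data with rate $\theta_0$, the moment-type estimating function $H(\theta, Y) = Y - 1/\theta$, and a Monte Carlo comparison with hypothetical sample sizes.

```python
import numpy as np

rng = np.random.default_rng(2)
theta0, n, reps = 2.0, 400, 4000

# Estimating function H(theta, Y) = Y - 1/theta, so that
# E_theta0 H(theta, Y) = 1/theta0 - 1/theta = 0 iff theta = theta0.
m = -1.0 / theta0**2        # M(theta0) = -E_theta0 dH/dtheta = -(1 / theta0^2)
sigma = 1.0 / theta0**2     # Sigma(theta0) = var(Y) for the exponential law
predicted = 1.0 / (m * m / sigma)   # (M^T Sigma^-1 M)^-1 in the scalar case

# Monte Carlo: theta_hat solves sum_k H(theta, Y_k) = 0, i.e. theta_hat = 1 / mean(Y).
y = rng.exponential(scale=1.0 / theta0, size=(reps, n))
theta_hat = 1.0 / y.mean(axis=1)
empirical = float(np.var(np.sqrt(n) * (theta_hat - theta0)))

print("predicted covariance:", predicted)
print("empirical covariance:", empirical)
```

In this scalar case the predicted value equals $\theta_0^2$, which is also the inverse Fisher information of the exponential law, so this particular estimating function happens to be efficient.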
