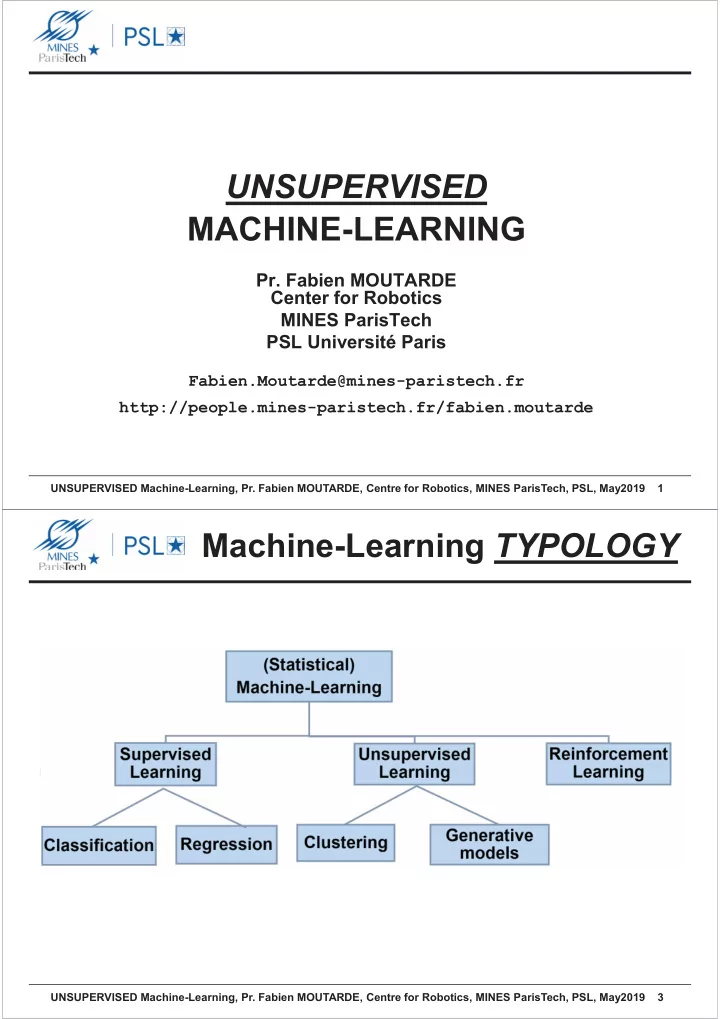

UNSUPERVISED MACHINE-LEARNING
Pr. Fabien MOUTARDE
Center for Robotics, MINES ParisTech, PSL Université Paris
Fabien.Moutarde@mines-paristech.fr
http://people.mines-paristech.fr/fabien.moutarde
UNSUPERVISED Machine-Learning, Pr. Fabien MOUTARDE, Centre for Robotics, MINES ParisTech, PSL, May 2019

Machine-Learning TYPOLOGY

Supervised vs UNsupervised learning

Learning is called "supervised" when there is a "target" value for every example in the training dataset: examples = (input, output) pairs = $(x_1, y_1), (x_2, y_2), \dots, (x_n, y_n)$. The goal is to build a (generally non-linear) approximate model for interpolation, in order to GENERALIZE to input values other than those in the training set.

"Unsupervised" = when there are NO target values: dataset = $\{x_1, x_2, \dots, x_n\}$. The goal is typically either to do datamining (unveil structure in the distribution of examples in input space), or to find an output maximizing a given evaluation function.

Examples of UNSUPERVISED Machine-Learning
• Datamining (clustering)
• Generative Learning [Figure: generated fake faces]

UNSUPERVISED learning from data

• Set of "input-only" (i.e. unlabeled) examples: $X = \{x_1, x_2, \dots, x_n\}$ ($x_i \in \mathbb{R}^d$, often with "large d")
• H = family of mathematical models [each $h \in H$ → $y = h(x)$]
• Hyper-parameters for the training algorithm
• LEARNING ALGORITHM → $h \in H$ such that criterion $J(h, X)$ is verified or optimised

Typical example: "clustering"
• $h(x) \in C = \{1, 2, \dots, K\}$ [each value ↔ a "cluster"]
• $J(h, X)$: $dist(x_i, x_j)$ smaller for $x_i, x_j$ with $h(x_i) = h(x_j)$ than for $x_i, x_j$ with $h(x_i) \neq h(x_j)$

Clustering (in French: "regroupement" or "partitionnement")

Goal = identify structure in data distribution
• Group together examples that are close/similar
• Problem: groups are not always well-defined/delimited, can have arbitrary shape, and fuzzy borders
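The clustering criterion J(h, X) above can be checked numerically for a given assignment. The following is a minimal sketch, assuming Euclidean distance and a simple comparison of mean intra-cluster and mean inter-cluster distances; the function name `intra_inter_distances` and the toy data are illustrative and not part of the original slides.

```python
import numpy as np

def intra_inter_distances(X, labels):
    """Mean pairwise distance within clusters and between clusters."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    # Pairwise Euclidean distances, shape (n, n).
    diff = X[:, None, :] - X[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    same = labels[:, None] == labels[None, :]
    off_diag = ~np.eye(len(X), dtype=bool)   # ignore dist(x_i, x_i) = 0
    intra = dist[same & off_diag].mean()     # pairs with h(x_i) == h(x_j)
    inter = dist[~same].mean()               # pairs with h(x_i) != h(x_j)
    return intra, inter

# Toy data (assumption): two well-separated pairs of points.
X = np.array([[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [5.0, 6.0]])
h = np.array([0, 0, 1, 1])   # hypothetical cluster assignment h(x_i)
intra, inter = intra_inter_distances(X, h)
print(intra, inter)          # a good clustering has intra < inter
```

A labelling for which `intra` is clearly smaller than `inter` satisfies the criterion in the sense described above; other formalizations of J are possible.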

Similarity and Distances

Similarity
• The larger a similarity measure, the more similar the points are
• ≈ inverse of distance

How to measure the distance $d(x_1; x_2)$ between 2 points?
• Euclidean distance: $d^2(x_1; x_2) = \sum_i (x_{1i} - x_{2i})^2 = (x_1 - x_2)\,{}^t(x_1 - x_2)$ [L2 norm]
• Manhattan distance: $d(x_1; x_2) = \sum_i |x_{1i} - x_{2i}|$ [L1 norm]
• Sebestyen distance: $d^2(x_1; x_2) = (x_1 - x_2)\, W\, {}^t(x_1 - x_2)$ [with W = diagonal matrix]
• Mahalanobis distance: $d^2(x_1; x_2) = (x_1 - x_2)\, C^{-1}\, {}^t(x_1 - x_2)$ [with C = covariance matrix]

Typology of clustering techniques
• By agglomeration
  – Agglomerative Hierarchical Clustering, AHC
• By partitioning
  – Divisive (top-down) hierarchical partitioning
  – Spectral partitioning (separation in the space of eigenvectors of the adjacency matrix)
  – K-means
• By modelling
  – Mixture of Gaussians (GMM)
  – Self-Organizing (Kohonen) Maps, SOM
• Based on data density
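The four distance measures listed under "Similarity and Distances" can be sketched with NumPy as follows. This is an illustrative sketch: the function names and example vectors are assumptions, the Sebestyen weights are passed as the diagonal of W, and the Mahalanobis form uses the inverse of the covariance matrix, which is the standard definition.

```python
import numpy as np

def euclidean_sq(x1, x2):
    """Squared Euclidean (L2) distance."""
    d = x1 - x2
    return float(d @ d)

def manhattan(x1, x2):
    """Manhattan (L1) distance."""
    return float(np.abs(x1 - x2).sum())

def sebestyen_sq(x1, x2, w):
    """Squared Sebestyen distance; w is the diagonal of the matrix W."""
    d = x1 - x2
    return float(d @ (w * d))

def mahalanobis_sq(x1, x2, cov):
    """Squared Mahalanobis distance, using the inverse covariance matrix."""
    d = x1 - x2
    # Solve cov @ z = d instead of forming the explicit inverse.
    return float(d @ np.linalg.solve(cov, d))

# Example values (assumptions chosen for easy hand-checking).
x1 = np.array([1.0, 2.0])
x2 = np.array([4.0, 6.0])
w = np.array([1.0, 0.25])
cov = np.diag([9.0, 16.0])

d_euc = euclidean_sq(x1, x2)        # 3^2 + 4^2
d_man = manhattan(x1, x2)           # 3 + 4
d_seb = sebestyen_sq(x1, x2, w)     # 1*9 + 0.25*16
d_mah = mahalanobis_sq(x1, x2, cov) # 9/9 + 16/16
```

With W equal to the identity, the Sebestyen distance reduces to the Euclidean one; with a diagonal covariance matrix, the Mahalanobis distance is a Sebestyen distance with weights 1/variance.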

Agglomerative Hierarchical Clustering (AHC)

Principle: recursively, each point or cluster is absorbed by the nearest cluster

Algorithm
• Initialization:
  – Each example is a cluster with only one point
  – Compute the matrix M of similarities for each pair of clusters
• Repeat:
  – Select in M the 2 most mutually similar clusters $C_i$ and $C_j$
  – Merge $C_i$ and $C_j$ into a more general cluster $C_g$
  – Update the matrix M by computing similarities between $C_g$ and all pre-existing clusters
  Until the 2 last clusters are merged

Distance between 2 clusters?
• Min distance (between closest points): $\min \{ d(i,j) : i \in C_1, j \in C_2 \}$
• Max distance: $\max \{ d(i,j) : i \in C_1, j \in C_2 \}$
• Average distance: $\left( \sum_{i \in C_1, j \in C_2} d(i,j) \right) / \left( \mathrm{card}(C_1) \times \mathrm{card}(C_2) \right)$
• Distance between the 2 centroids: $d(b_1; b_2)$
• Ward distance: $\sqrt{n_1 n_2 / (n_1 + n_2)} \times d(b_1; b_2)$ [where $n_i = \mathrm{card}(C_i)$]

Each type of inter-cluster distance ⇒ a different variant of AHC
  – distMin (nearest neighbour) → single-linkage
  – distMax → complete-linkage
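The AHC loop and the min / max / average inter-cluster distances can be sketched as below. This is a deliberately naive illustration under stated assumptions: it works with Euclidean distances rather than a similarity matrix (smallest distance = most similar), it recomputes inter-cluster distances at each step instead of updating M incrementally, and the function name `ahc` and its parameters are hypothetical.

```python
import numpy as np

def ahc(X, n_clusters=1, linkage="single"):
    """Naive agglomerative clustering; returns final clusters and merge log."""
    X = np.asarray(X, dtype=float)
    # Pairwise Euclidean distances between all examples.
    dist = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1))
    # Inter-cluster distance: min, max or average over cross-cluster pairs.
    agg = {"single": np.min, "complete": np.max, "average": np.mean}[linkage]
    clusters = [[i] for i in range(len(X))]   # one cluster per example
    merges = []                               # (cluster_a, cluster_b, distance)
    while len(clusters) > n_clusters:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                d = agg(dist[np.ix_(clusters[a], clusters[b])])
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        merges.append((sorted(clusters[a]), sorted(clusters[b]), float(d)))
        clusters[a] = clusters[a] + clusters[b]   # fuse C_a and C_b
        del clusters[b]
    return clusters, merges

# Toy 1-D data (assumption): two close pairs and one distant point.
X = np.array([[0.0], [1.0], [5.0], [6.0], [20.0]])
clusters, merges = ahc(X, n_clusters=2, linkage="single")
heights = [m[2] for m in merges]   # merge distances, i.e. dendrogram heights
```

The recorded merge distances are the heights at which the corresponding branches join in a dendrogram. For real datasets, an optimized library routine is preferable to this cubic-time loop.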

AHC output = dendrogram
• Dendrogram = representation of the full hierarchy of successively grouped clusters
• Height from a cluster to its sub-clusters ≈ distance between the 2 merged clusters

Clustering by partitioning: K-means algorithm
• Each cluster $C_k$ is defined by its "centroid" $c_k$, which is a "prototype" (a vector template in input space)
• Each training example x is "assigned" to the cluster $C_{k(x)}$ whose centroid is nearest to x: $k(x) = \arg\min_k \, dist(x, c_k)$
• ALGO:
  – Initialization = randomly choose K distinct points $c_1, \dots, c_K$ among the training examples $\{x_1, \dots, x_n\}$
  – REPEAT until (approximate) stabilization of all $c_k$:
    • Assign each $x_i$ to the cluster $C_{k(i)}$ which has minimal $dist(x_i, c_{k(i)})$
    • Recompute the centroids $c_k$ of the clusters: $c_k = \frac{1}{\mathrm{card}(C_k)} \sum_{x \in C_k} x$
    [This minimizes $D = \sum_{k=1}^{K} \sum_{x \in C_k} dist(c_k, x)^2$]
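The K-means steps above translate almost line for line into NumPy. The sketch below assumes squared Euclidean distance; the function name `kmeans`, the `seed` and `max_iter` parameters, the handling of empty clusters, and the toy data are illustrative choices, not taken from the slides.

```python
import numpy as np

def kmeans(X, K, max_iter=100, seed=0):
    """Plain K-means; returns labels, centroids and the criterion D."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    # Initialization: K distinct training examples chosen at random.
    centroids = X[rng.choice(len(X), size=K, replace=False)].copy()
    for _ in range(max_iter):
        # Assignment step: nearest centroid for every example.
        d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
        labels = d2.argmin(axis=1)
        # Update step: each centroid becomes the mean of its cluster.
        new_centroids = centroids.copy()
        for k in range(K):
            members = X[labels == k]
            if len(members) > 0:   # assumption: keep old centroid if empty
                new_centroids[k] = members.mean(axis=0)
        if np.allclose(new_centroids, centroids):
            break                  # centroids have stabilized
        centroids = new_centroids
    # Final assignment and criterion D = sum of squared distances to centroids.
    d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    labels = d2.argmin(axis=1)
    D = float(d2[np.arange(len(X)), labels].sum())
    return labels, centroids, D

# Toy data (assumption): two well-separated groups of three points.
X = np.array([[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]], dtype=float)
labels, centroids, D = kmeans(X, K=2)
```

Both steps can only decrease D, so the loop converges, but only to a local minimum that depends on the random initialization; running several seeds and keeping the lowest D is a common precaution.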

Other partitioning method: SPECTRAL clustering
• Principle = use the adjacency graph
  – Nodes = input examples
  – Edge values = similarities (in [0;1], so 1 ↔ same point)
[Figure: adjacency graph with 6 nodes; edge values 1–2: 0.8, 1–3: 0.6, 1–5: 0.1, 2–3: 0.8, 3–4: 0.2, 4–5: 0.4, 4–6: 0.5, 5–6: 0.9]
⇒ Graph partitioning algorithms (min-cut, etc.) make it possible to recursively split the graph into several connected components
Ex: Minimal Spanning Tree + removal of edges from smallest to largest values → single-linkage clusters

Spectral clustering algo
• Compute the Laplacian matrix L = D − A of the adjacency graph, with similarities $A_{ij} = e^{-\|s_i - s_j\|^2 / 2\sigma^2}$. For the example graph (rows and columns ordered $x_1, \dots, x_6$):

$$L = \begin{pmatrix} 1.5 & -0.8 & -0.6 & 0 & -0.1 & 0 \\ -0.8 & 1.6 & -0.8 & 0 & 0 & 0 \\ -0.6 & -0.8 & 1.6 & -0.2 & 0 & 0 \\ 0 & 0 & -0.2 & 1.1 & -0.4 & -0.5 \\ -0.1 & 0 & 0 & -0.4 & 1.4 & -0.9 \\ 0 & 0 & 0 & -0.5 & -0.9 & 1.4 \end{pmatrix}$$

• L is symmetric (and positive semi-definite) ⇒ it has real, non-negative eigenvalues (and orthogonal eigenvectors)
• Compute and sort the eigenvalues, then project the examples $x_i \in \mathbb{R}^d$ on the k eigenvectors with the smallest eigenvalues of L → new input space $s_i \in \mathbb{R}^k$, in which separation into clusters will be easier
[Figure: two scatter plots of the examples; axis tick values omitted]
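The spectral clustering steps can be tried directly on the 6-node example graph. This is a minimal sketch under stated assumptions: the edge weights are read off the off-diagonal entries of the Laplacian in the example, the split uses the eigenvector of the second-smallest eigenvalue (the Fiedler vector, which is the usual convention for the unnormalized Laplacian L = D − A), and two clusters are obtained by thresholding that vector at zero instead of running K-means in the projected space.

```python
import numpy as np

# Similarities of the example graph (0-based node indices), with
# A_ij = -L_ij for i != j as read from the example Laplacian.
edges = {(0, 1): 0.8, (0, 2): 0.6, (0, 4): 0.1, (1, 2): 0.8,
         (2, 3): 0.2, (3, 4): 0.4, (3, 5): 0.5, (4, 5): 0.9}
A = np.zeros((6, 6))
for (i, j), w in edges.items():
    A[i, j] = A[j, i] = w            # symmetric adjacency matrix

D = np.diag(A.sum(axis=1))           # degree matrix
L = D - A                            # unnormalized graph Laplacian

# eigh is meant for symmetric matrices; eigenvalues come back in ascending order.
eigvals, eigvecs = np.linalg.eigh(L)

# The smallest eigenvalue is ~0 (constant eigenvector); the next eigenvector
# carries the coarsest split of the graph.
fiedler = eigvecs[:, 1]
labels = (fiedler > 0).astype(int)   # sign of the Fiedler vector = 2 clusters
print(np.round(eigvals, 3), labels)
```

The sign of an eigenvector is arbitrary, so which group receives label 0 or 1 may vary; only the grouping itself is meaningful. For more than two clusters, one would typically keep k eigenvectors and cluster the rows of that n × k matrix.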

Other UNsupervised algos
• Learn the PROBABILITY DISTRIBUTION:
  – Restricted Boltzmann Machine (RBM)
  – etc.
• Learn a kind of "PROJECTION" into a LOWER-DIMENSIONAL SPACE ("Manifold Learning"):
  – Non-linear Principal Component Analysis (PCA), e.g. kernel-based
  – Auto-encoders
  – Kohonen topological Self-Organizing Maps (SOM)
  – …

Restricted Boltzmann Machine
• Proposed by Smolensky (1986) + Hinton (2005)
• Learns the probability distribution of examples
• Two-layer neural network with BINARY neurons and bidirectional connections
• Use: [equation missing in source] where E = energy
• Training: maximize the product of probabilities $\prod_i P(v_i)$ by gradient ascent with Contrastive Divergence (v' = reconstruction from h, and h' deduced from v')
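One Contrastive Divergence step for an RBM can be sketched as follows. Since the energy formula is not given in the text above, this sketch assumes the usual textbook form $E(v,h) = -a \cdot v - b \cdot h - {}^t v\, W h$ with $P(v,h) \propto e^{-E(v,h)}$; the names `cd1_update`, `W`, `a`, `b`, the learning rate and the toy data are all illustrative assumptions rather than the author's formulation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cd1_update(v, W, a, b, rng, lr=0.1):
    """One CD-1 step on a batch v of binary rows (assumed standard RBM energy)."""
    # Positive phase: hidden probabilities and a binary sample h given data v.
    ph = sigmoid(v @ W + b)
    h = (rng.random(ph.shape) < ph).astype(float)
    # Negative phase: v' = reconstruction from h, then h' deduced from v'.
    pv_rec = sigmoid(h @ W.T + a)
    v_rec = (rng.random(pv_rec.shape) < pv_rec).astype(float)
    ph_rec = sigmoid(v_rec @ W + b)
    # Approximate likelihood gradient: data statistics minus reconstruction statistics.
    n = v.shape[0]
    W = W + lr * (v.T @ ph - v_rec.T @ ph_rec) / n
    a = a + lr * (v - v_rec).mean(axis=0)
    b = b + lr * (ph - ph_rec).mean(axis=0)
    return W, a, b

# Toy binary dataset (assumption): 4 examples, 6 visible units, 3 hidden units.
rng = np.random.default_rng(0)
v = np.array([[1, 1, 1, 0, 0, 0],
              [1, 1, 0, 0, 0, 0],
              [0, 0, 0, 1, 1, 1],
              [0, 0, 1, 1, 1, 0]], dtype=float)
W = 0.01 * rng.standard_normal((6, 3))
a = np.zeros(6)   # visible biases
b = np.zeros(3)   # hidden biases
for _ in range(10):
    W, a, b = cd1_update(v, W, a, b, rng)
```

The update moves the parameters so that the data become more probable than their one-step reconstructions; it is an approximation of the true likelihood gradient, not an exact computation.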

Kohonen Self-Organizing Maps (SOM)

Another specific type of Neural Network, with a self-organizing training algorithm which generates a MAPPING from input space to the map THAT RESPECTS THE TOPOLOGY OF DATA.
[Figure: inputs X1, X2, …, Xn connected to a map of OUTPUT neurons]

Inspiration and use of SOM

Biological inspiration: self-organization of regions in the perception parts of the brain.

USE IN DATA ANALYSIS
• VISUALIZE (generally in 2D) the distribution of data with a topology-preserving "projection" (2 points close in input space should be projected on close cells on the SOM)
• CLUSTERING
