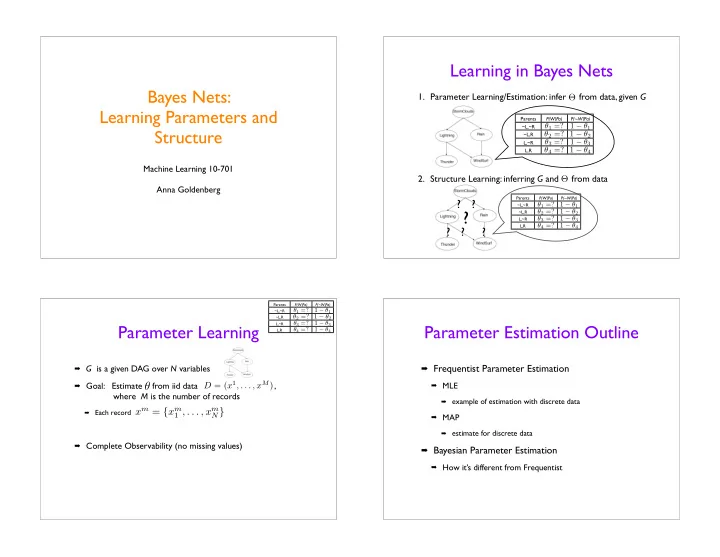

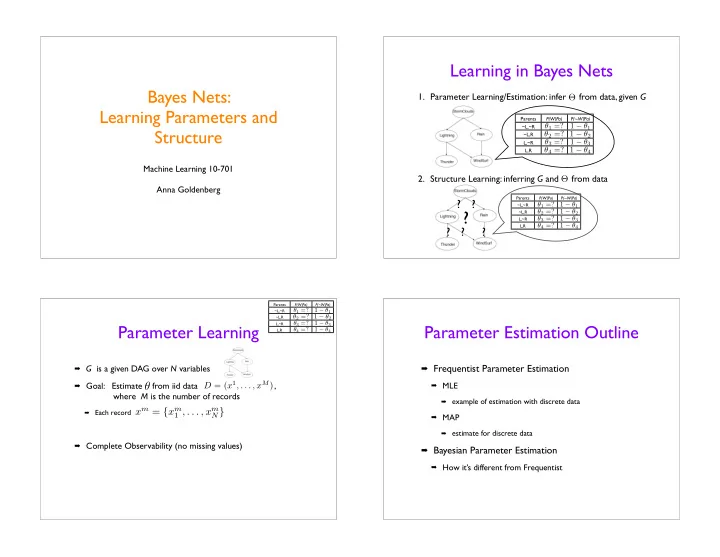

Learning in Bayes Nets

Machine Learning 10-701, Anna Goldenberg

Learning in Bayes Nets:

1. Parameter Learning/Estimation: infer $\Theta$ from data, given $G$
2. Structure Learning: infer $G$ and $\Theta$ from data

In both settings the entries of the conditional probability table are unknown. In structure learning the edges (the "?" marks on the slide) are unknown as well.

| Parents | $P(W \mid Pa)$ | $P(\neg W \mid Pa)$ |
|---|---|---|
| $\neg L, \neg R$ | $\theta_1 = ?$ | $1 - \theta_1$ |
| $\neg L, R$ | $\theta_2 = ?$ | $1 - \theta_2$ |
| $L, \neg R$ | $\theta_3 = ?$ | $1 - \theta_3$ |
| $L, R$ | $\theta_4 = ?$ | $1 - \theta_4$ |

Outline: Parameter Learning

- Frequentist Parameter Estimation
  - MLE
    - example of estimation with discrete data
  - MAP
    - estimate for discrete data
- Bayesian Parameter Estimation
  - How it's different from Frequentist

Parameter Estimation

- $G$ is a given DAG over $N$ variables
- Goal: estimate $\theta$ from iid data $D = (x_1, \ldots, x_M)$, where $M$ is the number of records
- Each record $x_m = \{x_{m1}, \ldots, x_{mN}\}$
- Complete observability (no missing values)

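Maximum Likelihood Estimator

- Likelihood (for iid data): $p(D \mid \theta) = \prod_m \prod_i p(x_{mi} \mid x_{m\pi_i}, \theta)$, where $\pi_i$ indexes the parents of $X_i$
- Log likelihood: $l(\theta; D) = \log p(D \mid \theta) = \sum_m \sum_i \log p(x_{mi} \mid x_{m\pi_i}, \theta)$
- MLE: $\hat{\theta}_{ML} = \arg\max_\theta \, l(\theta; D)$
  - advantages: has nice statistical properties
  - disadvantages: can overfit

Example: MLE for one variable

- Variable $X \sim$ Multinomial with $K$ values (a $K$-sided die)
- Observe $M$ rolls: $1, 4, K, 2, \ldots$
- Model: $p(X = k) = \theta_k$, with $\sum_k \theta_k = 1$ (2)
- Log likelihood: $l(\theta; D) = \log \prod_m \prod_k \theta_k^{I(x_m = k)} = \sum_m \sum_k I(x_m = k) \log \theta_k = \sum_k N_k \log \theta_k$ (1), where $N_k$ is the number of rolls that came up $k$
- Maximizing (1) subject to constraint (2): $\hat{\theta}_{k,ML} = \frac{N_k}{M}$, the fraction of times $k$ occurs

Discrete Bayes Nets

- Assume each CPD is represented as a table, with $\theta_{ijk} = p(X_i = j \mid Pa_i = k)$ and $N_{ijk}$ the number of records in which $X_i = j$ and its parents take configuration $k$
- Loglikelihood: $l(\theta; D) = \sum_{ijk} N_{ijk} \log \theta_{ijk}$
- Parameter estimator: $\hat{\theta}_{ijk,ML} = \frac{N_{ijk}}{\sum_{j'} N_{ij'k}}$

Continuous Variables

- Example: Gaussian variables
- One variable: $X \sim N(\mu, \sigma)$
- ML estimates: $\hat{\mu}_{ML} = \frac{\sum_m x_m}{M}$ and $\hat{\sigma}^2_{ML} = \frac{\sum_m (x_m - \hat{\mu}_{ML})^2}{M}$
- Similarly for several continuous variables
- Another option to estimate parameters: $X_i \sim f(Pa_i, \theta)$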
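The two closed-form estimates from the MLE slides (the multinomial fraction $N_k / M$ and the Gaussian mean and variance) can be sketched in a few lines of Python. The function names and the sample rolls are made up for illustration; values are assumed to be coded as integers $1, \ldots, K$.

```python
from collections import Counter


def multinomial_mle(rolls, K):
    """Return [theta_1, ..., theta_K] with theta_k = N_k / M."""
    counts = Counter(rolls)          # N_k for every observed value k
    M = len(rolls)                   # number of records
    return [counts.get(k, 0) / M for k in range(1, K + 1)]


def gaussian_mle(xs):
    """Return (mu_ML, sigma2_ML); the variance divides by M, not M - 1."""
    M = len(xs)
    mu = sum(xs) / M
    sigma2 = sum((x - mu) ** 2 for x in xs) / M
    return mu, sigma2


# Illustrative data only: six rolls of a six-sided die.
theta = multinomial_mle([1, 4, 6, 2, 4, 4], K=6)
print(theta)
print(gaussian_mle([1.0, 2.0, 3.0]))
```

Values that never occur get an estimate of exactly zero, which is the overfitting risk the slide mentions for small samples.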

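Maximum A Posteriori estimate (MAP)

- MLE is obtained by maximizing the loglikelihood
  - sensitive to small sample sizes
- MAP comes from maximizing the posterior: $p(\theta \mid D) \propto p(D \mid \theta)\, p(\theta)$ = likelihood × prior
  - the prior acts as a smoothing factor

Example: MAP for Multinomial

- Multinomial likelihood: $P(D \mid \theta) = \prod_{ijk} \theta_{ijk}^{N_{ijk}}$
- Dirichlet prior: $P(\theta \mid \alpha) = \frac{1}{Z(\alpha)} \prod_{ijk} \theta_{ijk}^{\alpha_{ijk} - 1}$
- Posterior: $P(\theta \mid D, \alpha) \propto \prod_{ijk} \theta_{ijk}^{N_{ijk} + \alpha_{ijk} - 1}$
- MAP: $\hat{\theta}_{ijk,MAP} = \frac{N_{ijk} + \alpha_{ijk}}{\sum_{j'} (N_{ij'k} + \alpha_{ij'k})}$
- $\alpha$ can be thought of as virtual pseudo counts

Strictly, the mode of the posterior above has $N_{ijk} + \alpha_{ijk} - 1$ in place of $N_{ijk} + \alpha_{ijk}$. The expression given here is the posterior mean, which uses $\alpha$ directly as pseudo counts.

Bayesian vs Frequentist

- Frequentist: $\theta$ are unknown constants
  - MLE is a very common frequentist estimator
- Bayesian: unknown $\theta$ are random variables
  - estimates differ based on a prior

Questions on Parameter Learning?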
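To make the pseudo-count reading of the Dirichlet prior concrete, here is a minimal sketch for one variable under one fixed parent configuration. It uses the slide's smoothed form $(N + \alpha) / \sum (N + \alpha)$; the function names and the counts are hypothetical.

```python
def mle_estimate(counts):
    """Plain relative frequencies N_j / sum_j' N_j'."""
    total = sum(counts)
    return [n / total for n in counts]


def map_estimate(counts, alphas):
    """Smoothed estimate (N_j + alpha_j) / sum_j' (N_j' + alpha_j').

    counts[j] and alphas[j] are the observed count and the Dirichlet
    pseudo count for value j of a single variable, with the parent
    configuration held fixed.
    """
    total = sum(n + a for n, a in zip(counts, alphas))
    return [(n + a) / total for n, a in zip(counts, alphas)]


# Three observations, all of the first value (illustrative numbers).
counts = [3, 0]
print(mle_estimate(counts))            # the unseen value gets probability zero
print(map_estimate(counts, [1, 1]))    # one pseudo count per value smooths it
```

With only three records the two estimates differ noticeably; as the counts grow, the fixed pseudo counts matter less and the estimates converge.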

What if G is not given?

- When?
  - Scientific discovery (protein networks, data mining)
  - Need a good model for compression, prediction...

[Figure: example network whose nodes are researcher names]

Structural Learning

- Constraint Based
  - Test independencies
  - Add edges according to the tests
- Search and Score
  - Define a selection criterion that measures goodness of a model
  - Search in the space of all models (or orders)
- Mix models (recent)
  - Test for almost all independencies
  - Search and score according to possible

Constraint Based Learning

- Define a conditional independence test $Ind(X_i; X_j \mid S)$
  - e.g. $\chi^2 = \sum_{x_i, x_j} \frac{(O_{x_i, x_j \mid s} - E_{x_i, x_j \mid s})^2}{E_{x_i, x_j \mid s}}$, $G^2$, conditional entropy, etc.
  - if $Ind(X_i; X_j \mid S) < p$, then independence
  - Choose $p$ with care!
- Construct a model consistent with the set of independencies

Constraint Based Learning

- Cons:
  - Independence tests are less reliable on small samples
  - One incorrect independence test might propagate far (not robust to noise)
- Pros:
  - More global decisions => doesn't get stuck in local minima as much
  - Works well on sparse nets (small Markov blankets, sufficient data)
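The $\chi^2$ statistic used as a conditional independence test can be sketched as follows. This is a minimal illustration, assuming records are tuples of discrete values and that expected counts within each stratum $s$ are computed as $E = N_{x_i, s} N_{x_j, s} / N_s$; the function name and data are hypothetical, and the decision threshold is left to the caller.

```python
from collections import Counter, defaultdict


def chi2_statistic(records, i, j, S=()):
    """Chi-square statistic for X_i vs X_j, summed over configurations of S."""
    # Group the (x_i, x_j) pairs by the value of the conditioning set S.
    strata = defaultdict(list)
    for r in records:
        strata[tuple(r[k] for k in S)].append((r[i], r[j]))

    stat = 0.0
    for pairs in strata.values():
        n = len(pairs)
        observed = Counter(pairs)
        count_i = Counter(a for a, _ in pairs)
        count_j = Counter(b for _, b in pairs)
        for a in count_i:
            for b in count_j:
                expected = count_i[a] * count_j[b] / n   # under independence
                stat += (observed[(a, b)] - expected) ** 2 / expected
    return stat


# X0 and X1 are both copies of X2: dependent marginally,
# but independent once X2 is conditioned on.
data = [(0, 0, 0), (0, 0, 0), (1, 1, 1), (1, 1, 1)]
print(chi2_statistic(data, 0, 1))          # large: dependence detected
print(chi2_statistic(data, 0, 1, S=(2,)))  # zero: conditionally independent
```

Each extra variable in $S$ splits the data into more strata, so the counts per cell shrink quickly. That is one way to see why the tests become less reliable on small samples.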

Score Based Search: Outline

- Select the highest scoring model!
- What should the score be?
- Specialized structures (trees, TANs)
- Selection operators: how to navigate the space of models?

Maximum likelihood in Information Theoretic terms

- $\log P(D \mid \hat{\theta}_G, G) = M \sum_i \hat{I}(X_i, Pa_{X_i}) - M \sum_i \hat{H}(X_i)$
- The entropy does not depend on the current model
- Thus, it's enough to maximize mutual information!
- General case:
  - Same as constraint search!
  - Theorem: maximizing the Bayesian score for $d \ge 2$ (not a tree) is NP-hard (Chickering, 2002), where $d$ is the maximum number of parents
- Special case (trees):
  - have to consider only all pairs (tree => only one parent): $O(N^2)$

Chow Liu tree algorithm

- Compute the empirical distribution: $\hat{P}(x_i, x_j) = \frac{\mathrm{count}(x_i, x_j)}{M}$
- Mutual information: $\hat{I}(X_i, X_j) = \sum_{x_i, x_j} \hat{P}(x_i, x_j) \log \frac{\hat{P}(x_i, x_j)}{\hat{P}(x_i)\, \hat{P}(x_j)}$
- Set $\hat{I}(X_i, X_j)$ as the weight of the edge between $X_i$ and $X_j$
- Find the optimal tree BN by computing the maximum spanning tree
- For direction: pick a random node as root and direct edges in BFS order

Tree Augmented Naive Bayes

- TAN (Friedman et al, 1997) is an extension of Chow Liu

[Figure: Naive Bayes and TAN structures over class C and features X1, X2, X3, ..., XM]

- Score(TAN): $\sum_i \hat{I}(X_i, C) + \sum_j \hat{I}(X_j, \{Pa_{X_j}, C\})$
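The Chow Liu steps (pairwise empirical mutual information, maximum spanning tree, BFS orientation from a root) can be sketched with the standard library alone. This is an illustrative sketch, not a reference implementation: it assumes records are tuples of discrete values, uses Kruskal's algorithm for the spanning tree, and takes the root as a parameter rather than picking it at random so the result is reproducible. All names and the toy data are hypothetical.

```python
import math
from collections import Counter, deque
from itertools import combinations


def mutual_information(records, i, j):
    """Empirical mutual information between columns i and j (in nats)."""
    M = len(records)
    joint = Counter((r[i], r[j]) for r in records)
    ci = Counter(r[i] for r in records)
    cj = Counter(r[j] for r in records)
    return sum((c / M) * math.log(c * M / (ci[a] * cj[b]))
               for (a, b), c in joint.items())


def chow_liu(records, root=0):
    """Return directed tree edges (parent, child) as a list."""
    n = len(records[0])
    # Heaviest edges first, so Kruskal builds a maximum spanning tree.
    edges = sorted(((mutual_information(records, i, j), i, j)
                    for i, j in combinations(range(n), 2)), reverse=True)

    component = list(range(n))           # union-find forest

    def find(u):
        while component[u] != u:
            component[u] = component[component[u]]
            u = component[u]
        return u

    adjacency = {v: [] for v in range(n)}
    for _, i, j in edges:
        ri, rj = find(i), find(j)
        if ri != rj:                     # edge joins two components: keep it
            component[ri] = rj
            adjacency[i].append(j)
            adjacency[j].append(i)

    # Orient edges away from the root in BFS order.
    directed, seen, queue = [], {root}, deque([root])
    while queue:
        u = queue.popleft()
        for v in adjacency[u]:
            if v not in seen:
                seen.add(v)
                directed.append((u, v))
                queue.append(v)
    return directed


# Toy chain X0 - X1 - X2: X0 and X2 are each noisy copies of X1.
records = [(0, 0, 0)] * 3 + [(1, 0, 0), (1, 1, 0)] + [(1, 1, 1)] * 3
print(chow_liu(records, root=1))
```

Because every node ends up with at most one parent, only the $O(N^2)$ pairwise statistics are needed, which is what makes the tree case tractable.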

MI Problem

- Doesn't penalize complexity: $I(A, B) \le I(A, \{B, C\})$
- Adding a parent never decreases the score, and on finite data it almost always increases it!
- The model will overfit, since the completely connected graph would be favored

Penalized Likelihood Score

- BIC (Bayesian Information Criterion): $\log P(D) \approx \log P(D \mid \hat{\theta}_{ML}) - \frac{d}{2} \log N$, where $d$ is the number of free parameters and $N$ here is the number of data records (called $M$ in the parameter estimation slides)
- AIC (Akaike Information Criterion): $\log P(D) \approx \log P(D \mid \hat{\theta}_{ML}) - d$
- BIC penalizes complexity more than AIC

Minimum Description Length

- The total number of bits needed to describe the data is $-\log_2 P(x)$
- Instead, send the model and then the residuals: $L(D, H) = -\log P(H) - \log P(D \mid H) = -\log P(H \mid D) + \text{const}$
- The best model is the one with the shortest message!

What should the score be?

- Consistent: for all $G'$ I-equivalent to the true $G$ and all $G^*$ not equivalent to $G$, $Score(G) = Score(G')$ and $Score(G^*) < Score(G')$
- Decomposable: can be locally computed (for efficiency): $Score(G; D) = \sum_i FamScore(X_i \mid Pa_{X_i}; D)$

Example: BIC is consistent and decomposable. AIC is decomposable too, but its penalty does not grow with the sample size, so it is generally not consistent.
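A decomposable BIC score can be sketched as a sum of per-family terms, each being the family's maximized log likelihood minus $\frac{d}{2} \log M$ for that family's free parameters. This is a minimal sketch assuming discrete data stored as tuples, a graph given as a dictionary from child to parent tuple, and known arities; the names `family_bic`, `bic_score` and `arity` are hypothetical. It needs Python 3.8 or later for `math.prod`.

```python
import math
from collections import Counter


def family_bic(records, child, parents, arity):
    """BIC contribution of one family: log likelihood minus penalty."""
    M = len(records)
    joint = Counter((tuple(r[p] for p in parents), r[child]) for r in records)
    parent_counts = Counter(tuple(r[p] for p in parents) for r in records)
    # Maximized log likelihood: sum of N_ijk * log(N_ijk / N_ik).
    loglik = sum(c * math.log(c / parent_counts[cfg])
                 for (cfg, _), c in joint.items())
    # Free parameters: (child arity - 1) per parent configuration.
    d = (arity[child] - 1) * math.prod(arity[p] for p in parents)
    return loglik - 0.5 * d * math.log(M)


def bic_score(records, graph, arity):
    """graph maps each node to a tuple of its parents."""
    return sum(family_bic(records, x, pa, arity) for x, pa in graph.items())


arity = [2, 2]
empty = {0: (), 1: ()}
edge = {0: (), 1: (0,)}

independent = [(0, 0), (0, 1), (1, 0), (1, 1)] * 5
dependent = [(0, 0), (1, 1)] * 10
print(bic_score(independent, empty, arity), bic_score(independent, edge, arity))
print(bic_score(dependent, empty, arity), bic_score(dependent, edge, arity))
```

On the independent data the extra edge buys no likelihood and only pays the penalty, so the empty graph scores higher. On the dependent data the edge wins. A pure likelihood score would never prefer the empty graph.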

Bayesian Scoring: Parameter Prior

- Parameter prior: important for small datasets!
- Dirichlet parameters (from a few slides before)
- For each possible family define a prior distribution
  - Can encode it as a Bayes Net
  - (Usually independent: product of marginals)

Bayesian Scoring: Parameter Prior

- Bayes Dirichlet equivalent scoring (BDe): $\alpha_{X_i \mid Pa_{X_i}} = M \, P'(X_i, Pa(X_i))$, where $P'$ is the prior distribution over the family and the factor $M$ plays the role of an equivalent (prior) sample size rather than the number of records
- Is consistent (and decomposable)
- Theorem: if $P(G)$ assigns the same prior to I-equivalent structures and the parameter prior is Dirichlet, then the Bayesian score satisfies score equivalence if and only if the prior is of BDe form!

Bayesian Scoring: Structure Prior

- Structure prior: should satisfy prior modularity
  - Typically set to uniform
  - Can be a function of prior counts: $\frac{1}{\alpha + 1}$
- Parameter modularity: if $X$ has the same set of parents in two different structures, then the parameters should be the same

Structure search algorithms

- Order is known
- Order is unknown
  - Search in the space of orderings
  - Search in the space of DAGs
  - Search in the space of equivalence classes

Order is known

- Suppose a total ordering of the variables is given
- Then for each node $X_i$ we can find an optimal set of parents among the nodes that precede it in the ordering
- The choice of parents for $X_j$ doesn't depend on previous $X_i$
- Need to search among all choices of parent sets (where $d$ is the maximum number of parents) for the highest local score
- Greedy search with known order, aka the K2 algorithm

Order is unknown: Search space of orderings

- Select an order according to some heuristic
- Use K2 to learn a BN corresponding to the ordering and score it
- Maybe do multiple restarts
- Most recent research: Teyssier and Koller (2005)

Order is unknown: Search space of DAGs

- Typical search operators:
  - Add an edge
  - Remove an edge
  - Reverse an edge
- At most $O(n^2)$ steps to get from any graph to any graph
- Moves are reversible
- Simplest search is Greedy Hillclimbing
- Move to the proposed new graph if it satisfies constraints

Exploiting Decomposable Score

- If the operator for edge $(X, Y)$ is valid, then we need only look at the families of $X$ and $Y$
- e.g. for an edge-addition operator $o$, only the family of the node that receives the new parent changes, so only that family's score has to be recomputed
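For the known-order case, greedy parent selection in the style of K2 can be sketched as below. This is an illustration under stated assumptions, not the original K2 scoring function: it uses a BIC family score as the local score, infers each variable's arity from the values seen in the data, and breaks ties by node index. The names `family_bic` and `k2` and the toy records are hypothetical. It needs Python 3.8 or later for `math.prod`.

```python
import math
from collections import Counter


def family_bic(records, child, parents):
    """Local (family) score: maximized log likelihood minus BIC penalty."""
    M = len(records)

    def arity(v):
        # Assumption: arity is the number of distinct values in the data.
        return len({r[v] for r in records})

    joint = Counter((tuple(r[p] for p in parents), r[child]) for r in records)
    parent_counts = Counter(tuple(r[p] for p in parents) for r in records)
    loglik = sum(c * math.log(c / parent_counts[cfg])
                 for (cfg, _), c in joint.items())
    d = (arity(child) - 1) * math.prod(arity(p) for p in parents)
    return loglik - 0.5 * d * math.log(M)


def k2(records, order, max_parents, score=family_bic):
    """Greedily pick parents for each node from its predecessors in `order`."""
    graph = {}
    for pos, child in enumerate(order):
        parents = ()
        best = score(records, child, parents)
        candidates = set(order[:pos])    # only earlier nodes may be parents
        while candidates and len(parents) < max_parents:
            # Try each remaining candidate and keep the best single addition.
            new_score, pick = max((score(records, child, parents + (c,)), c)
                                  for c in sorted(candidates))
            if new_score <= best:        # no improvement: stop for this node
                break
            parents, best = parents + (pick,), new_score
            candidates.remove(pick)
        graph[child] = parents
    return graph


# Toy chain X0 -> X1 -> X2 with a little noise, repeated to get 40 records.
records = ([(0, 0, 0)] * 3 + [(1, 0, 0), (1, 1, 0)] + [(1, 1, 1)] * 3) * 5
print(k2(records, order=[0, 1, 2], max_parents=2))
```

Because the score is decomposable, each node's parent set is chosen independently of the others, and the ordering guarantees that the result is acyclic without any explicit cycle check.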
