Learning Hierarchical Priors in VAEs Alexej Klushyn, Nutan Chen, Richard Kurle, Botond Cseke, Patrick van der Smagt Machine Learning Research Lab, Volkswagen Group

VAEs as a Constrained Optimisation Problem • In the context of VAEs, it is a desired ability to being able to control the reconstruction quality • Therefore, Rezende & Viola (2018) proposed to formulate the learning problem as � � � � � � ≤ κ 2 min E p D ( x ) KL q φ ( z | x ) � p ( z ) s . t . E p D ( x ) E q φ ( z | x ) C θ ( x , z ) φ � �� � � �� � optimisation objective inequality constraint • C θ ( x , z ) is defined as the reconstruction-error-related term in − log p θ ( x | z )

Hierarchical Priors for Learning Informative Latent Representations • The optimal empirical Bayes prior is the aggregated posterior distribution � � p ∗ ( z ) = E p D ( x ) q φ ( z | x ) • In order to express p ∗ ( z ) , we use a hierarchical model � p ( z ) = p Θ ( z | ζ ) p ( ζ ) d ζ • The parameters are learned by applying an importance-weighted lower bound K � p Θ ( z , ζ k ) � log 1 � � � log p ( z ) ≥ E p D ( x ) E q φ ( z | x ) E ζ 1 : K ∼ q Φ ( ζ | z ) E p ∗ ( z ) q Φ ( ζ k | z ) K k = 1 � �� � ≡ F (Θ , Φ; z )

Lagrangian & Optimisation Problem • The corresponding Lagrangian is � C θ ( x , z ) − κ 2 �� � L ( θ, φ, Θ , Φ; λ ) = E p D ( x ) E q φ ( z | x ) log q φ ( z | x ) − F (Θ , Φ; z ) + λ • As a result, we arrive to the optimisation problem M - step ���� min min max min L ( θ, φ, Θ , Φ; λ ) λ ≥ 0 s.t. Θ , Φ θ λ φ ���� � �� � empirical E - step Bayes • min θ L and max λ min φ L can be interpreted as the corresponding steps of the original EM algorithm for training VAEs

CMU Human Motion Data VHP-VAE IWAE

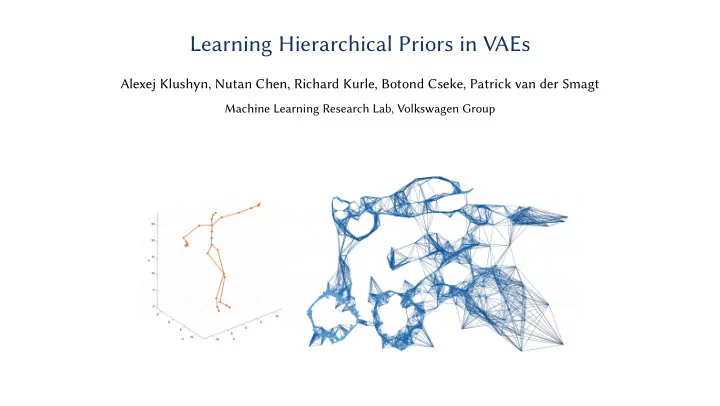

3D Faces VHP-VAE IWAE Poster #153 Today, 05:30–07:30 PM

Recommend

More recommend