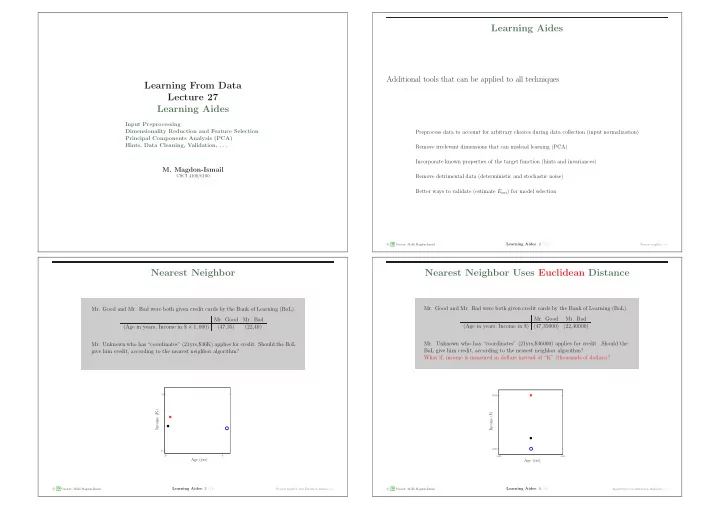

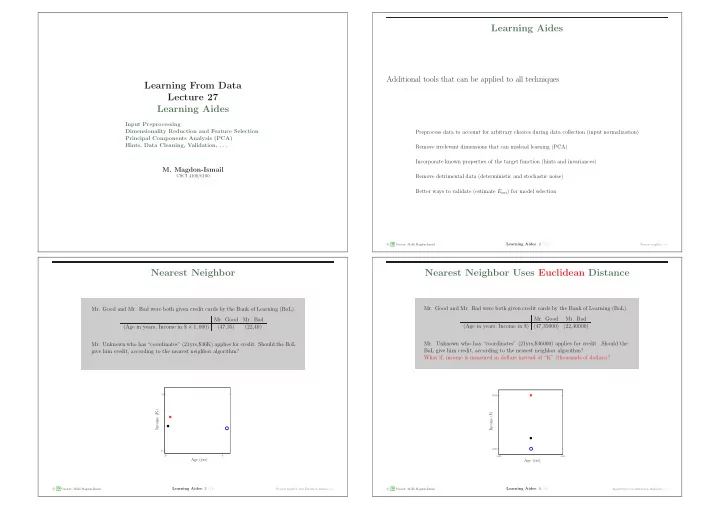

Learning From Data, Lecture 27: Learning Aides

M. Magdon-Ismail, CSCI 4100/6100. Creator: Malik Magdon-Ismail.

Topics: Input Preprocessing; Dimensionality Reduction and Feature Selection; Principal Components Analysis (PCA); Hints, Data Cleaning, Validation, ...

Learning Aides

Additional tools that can be applied to all techniques:

- Preprocess data to account for arbitrary choices during data collection (input normalization).
- Remove irrelevant dimensions that can mislead learning (PCA).
- Incorporate known properties of the target function (hints and invariances).
- Remove detrimental data (deterministic and stochastic noise).
- Better ways to validate (estimate $E_{\text{out}}$) for model selection.

Nearest Neighbor

Mr. Good and Mr. Bad were both given credit cards by the Bank of Learning (BoL). As (Age in years, Income in $ × 1,000), Mr. Good is (47, 35) and Mr. Bad is (22, 40).

Mr. Unknown, who has "coordinates" (21 yrs, $36K), applies for credit. Should the BoL give him credit, according to the nearest neighbor algorithm?

What if income is measured in dollars instead of "K" (thousands of dollars)?

[Figure: the customers plotted with Age (yrs) on the horizontal axis and Income (K) on the vertical axis.]

Nearest Neighbor Uses Euclidean Distance

As (Age in years, Income in $), Mr. Good is (47, 35000), Mr. Bad is (22, 40000), and Mr. Unknown is (21 yrs, $36000). With income in thousands, Mr. Unknown's nearest neighbor is Mr. Bad; with income in dollars, the income difference dominates the Euclidean distance and his nearest neighbor becomes Mr. Good.

[Figure: the same customers plotted with Age (yrs) on the horizontal axis and Income ($) on the vertical axis.]
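The effect of the income units can be checked directly. The following is a minimal sketch using the numbers from the slides; the helper name `nearest` and the dictionary layout are illustrative choices, not part of the lecture.

```python
import math

def nearest(query, customers):
    """Return the name of the customer closest to `query` in Euclidean distance."""
    return min(customers, key=lambda name: math.dist(query, customers[name]))

# (age in years, income in thousands of dollars)
in_thousands = {"Mr. Good": (47, 35), "Mr. Bad": (22, 40)}
nn_thousands = nearest((21, 36), in_thousands)

# (age in years, income in dollars): same customers, different units
in_dollars = {"Mr. Good": (47, 35000), "Mr. Bad": (22, 40000)}
nn_dollars = nearest((21, 36000), in_dollars)

print(nn_thousands)  # Mr. Bad
print(nn_dollars)    # Mr. Good
```

Changing only the unit of one coordinate flips the nearest neighbor, and therefore the credit decision.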

Uniform Treatment of Dimensions

Most learning algorithms treat each dimension equally:

- Nearest neighbor: $d(\mathbf{x}, \mathbf{x}') = \|\mathbf{x} - \mathbf{x}'\|$
- Weight decay: $\Omega(\mathbf{w}) = \lambda\, \mathbf{w}^t \mathbf{w}$
- SVM: margin defined using Euclidean distance
- RBF: bump function decays with Euclidean distance

Input preprocessing: unless you want to emphasize certain dimensions, the data should be preprocessed to present each dimension on an equal footing.

Input Preprocessing is a Data Transform

$$X = \begin{bmatrix} \mathbf{x}_1^t \\ \mathbf{x}_2^t \\ \vdots \\ \mathbf{x}_N^t \end{bmatrix}, \qquad \mathbf{x}_n \mapsto \mathbf{z}_n, \qquad g(\mathbf{x}) = \tilde{g}(\Phi(\mathbf{x})).$$

Raw $\{\mathbf{x}_n\}$ have (for example) arbitrary scalings in each dimension, and $\{\mathbf{z}_n\}$ will not.

Centering

$$\mathbf{z}_n = \mathbf{x}_n - \bar{\mathbf{x}}, \qquad \bar{\mathbf{z}} = \mathbf{0}.$$

[Figure: raw data on axes $(x_1, x_2)$ and centered data on axes $(z_1, z_2)$.]

Normalizing

$$\mathbf{z}_n = D\,\mathbf{x}_n, \qquad D_{ii} = \frac{1}{\sigma_i}, \qquad \tilde{\sigma}_i = 1.$$

Here $\mathbf{x}_n$ denotes the already centered data and $\sigma_i$ is the standard deviation of coordinate $i$, so every coordinate of $\mathbf{z}$ has unit standard deviation.

[Figure: raw data, centered data, and normalized data, shown side by side.]
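Centering and normalizing can be sketched in a few lines of NumPy. The data below are synthetic (a hypothetical age column and income column, generated only for illustration), and the variable names are assumptions rather than anything from the lecture.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical raw data: column 0 is age in years, column 1 is income in dollars.
X = np.column_stack([
    rng.uniform(20, 60, size=200),
    rng.uniform(20_000, 80_000, size=200),
])

# Centering: z_n = x_n - x_bar, so the transformed data have zero mean.
x_bar = X.mean(axis=0)
Z_centered = X - x_bar

# Normalizing: z_n = D x_n with D_ii = 1 / sigma_i, applied to the centered data.
sigma = Z_centered.std(axis=0)      # standard deviation with a 1/N factor
D = np.diag(1.0 / sigma)
Z_normalized = Z_centered @ D       # D is diagonal, so this rescales each column

print(Z_normalized.mean(axis=0))    # approximately zero in each coordinate
print(Z_normalized.std(axis=0))     # approximately one in each coordinate
```

After this transform the age and income coordinates are on an equal footing, regardless of the units in which they were recorded.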

Whitening

$$\mathbf{z}_n = \Sigma^{-1/2}\,\mathbf{x}_n, \qquad \Sigma = \frac{1}{N}\sum_{n=1}^{N} \mathbf{x}_n \mathbf{x}_n^t = \frac{1}{N} X^t X, \qquad \tilde{\Sigma} = \frac{1}{N} Z^t Z = I.$$

[Figure: raw data, centered data, normalized data, and whitened data, shown side by side.]

Only Use Training Data For Preprocessing

WARNING! Transforming data into a more convenient format has a hidden trap which leads to data snooping.

When using a test set, determine the input transformation from training data only.

Rule: lock away the test data until you have your final hypothesis.

[Diagram: $\mathcal{D}$ is split into $\mathcal{D}_{\text{train}}$ and $\mathcal{D}_{\text{test}}$. Input preprocessing $\mathbf{z} = \Phi(\mathbf{x})$ is determined from $\mathcal{D}_{\text{train}}$ and leads to $g(\mathbf{x}) = \tilde{g}(\Phi(\mathbf{x}))$; $\mathcal{D}_{\text{test}}$ is used only to compute $E_{\text{test}}$.]

[Figure: Cumulative Profit % versus Day (0 to 500), with one curve labeled "snooping" and one labeled "no snooping".]

Principal Components Analysis

Rotate the data so that it is easy to

- identify the dominant directions (information),
- throw away the smaller dimensions (noise).

$$\begin{bmatrix} x_1 \\ x_2 \end{bmatrix} \to \begin{bmatrix} z_1 \\ z_2 \end{bmatrix} \to \begin{bmatrix} z_1 \end{bmatrix}$$

[Figure: Original Data and Rotated Data.]

Projecting the Data to Maximize Variance

(Always center the data first.)

$$z_n = \mathbf{x}_n^t \mathbf{v}$$

Find $\mathbf{v}$ to maximize the variance of $z$.

[Figure: Original Data with a candidate direction $\mathbf{v}$.]
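Returning to the whitening transform and the rule about training data: one way to respect the rule is to estimate the mean and $\Sigma^{-1/2}$ from the training set alone and then apply that fixed transform to any other data. The sketch below assumes synthetic data and hypothetical helper names (`fit_whitening`, `apply_whitening`); it computes $\Sigma^{-1/2}$ through an eigendecomposition, which is one common choice and not necessarily the one used in the course.

```python
import numpy as np

def fit_whitening(X_train):
    """Estimate the mean and Sigma^{-1/2} from the training data only."""
    x_bar = X_train.mean(axis=0)
    Xc = X_train - x_bar
    Sigma = Xc.T @ Xc / len(Xc)                      # (1/N) X^t X on centered data
    eigvals, eigvecs = np.linalg.eigh(Sigma)         # Sigma is symmetric
    Sigma_inv_sqrt = eigvecs @ np.diag(eigvals ** -0.5) @ eigvecs.T
    return x_bar, Sigma_inv_sqrt

def apply_whitening(X, x_bar, Sigma_inv_sqrt):
    """Apply a previously fitted transform; nothing is re-estimated here."""
    return (X - x_bar) @ Sigma_inv_sqrt

# Synthetic correlated data, for illustration only.
rng = np.random.default_rng(1)
A = np.array([[3.0, 1.0], [1.0, 0.5]])
X = rng.normal(size=(300, 2)) @ A + np.array([5.0, -2.0])
X_train, X_test = X[:200], X[200:]

x_bar, W = fit_whitening(X_train)                    # the test data are not touched
Z_train = apply_whitening(X_train, x_bar, W)
Z_test = apply_whitening(X_test, x_bar, W)

cov_train = Z_train.T @ Z_train / len(Z_train)
print(np.round(cov_train, 6))                        # identity, up to rounding
```

The whitened test data will generally not have exactly identity covariance, which is expected: the transform was chosen without looking at them.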

Maximizing the Variance

$$\text{var}[z] = \frac{1}{N}\sum_{n=1}^{N} z_n^2 = \frac{1}{N}\sum_{n=1}^{N} \mathbf{v}^t \mathbf{x}_n \mathbf{x}_n^t \mathbf{v} = \mathbf{v}^t \left( \frac{1}{N}\sum_{n=1}^{N} \mathbf{x}_n \mathbf{x}_n^t \right) \mathbf{v} = \mathbf{v}^t \Sigma\, \mathbf{v}.$$

Among unit vectors, choose $\mathbf{v}$ as $\mathbf{v}_1$, the top eigenvector of $\Sigma$: this is the top principal component (PCA).

[Figure: Original Data with the direction $\mathbf{v}$.]

The Principal Components

$$z_1 = \mathbf{x}^t \mathbf{v}_1, \qquad z_2 = \mathbf{x}^t \mathbf{v}_2, \qquad z_3 = \mathbf{x}^t \mathbf{v}_3, \qquad \ldots$$

$\mathbf{v}_1, \mathbf{v}_2, \cdots, \mathbf{v}_d$ are the eigenvectors of $\Sigma$ with eigenvalues $\lambda_1 \ge \lambda_2 \ge \cdots \ge \lambda_d$.

Theorem [Eckart-Young]. These directions give the best reconstruction of the data; they also capture maximum variance.

PCA Features for Digits Data

[Figure: % Reconstruction Error (0 to 100) versus the number of principal components $k$ (0 to 200).]

[Figure: digits data plotted on the top two principal components $(z_1, z_2)$, with classes "1" and "not 1".]

Principal components are automated. They capture the dominant directions of the data, but may not capture the dominant dimensions for $f$.

Other Learning Aides

1. Nonlinear dimension reduction. [Figure: three panels on axes $x_1$, $x_2$.]
2. Hints (invariances and prior information): rotational invariance, monotonicity, symmetry, ...
3. Removing noisy data. [Figure: three panels with axes intensity and symmetry.]
4. Advanced validation techniques: Rademacher and permutation penalties. More efficient than CV, more convenient and accurate than VC.
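The PCA recipe from the slides above (center, form $\Sigma$, take the top eigenvectors, project) can be sketched with NumPy as follows. The data are synthetic and the choice $k = 2$ is arbitrary; both are assumptions made only for illustration.

```python
import numpy as np

# Synthetic data with very different spread along each axis (illustration only).
rng = np.random.default_rng(2)
X = rng.normal(size=(500, 3)) @ np.diag([2.0, 1.0, 0.1])
X = X - X.mean(axis=0)                      # always center the data first

Sigma = X.T @ X / len(X)                    # Sigma = (1/N) X^t X
eigvals, eigvecs = np.linalg.eigh(Sigma)    # eigh returns ascending eigenvalues
order = np.argsort(eigvals)[::-1]           # reorder so lambda_1 >= ... >= lambda_d
lam = eigvals[order]
V = eigvecs[:, order]                       # columns are v_1, ..., v_d

# Top principal component: var[z] = v^t Sigma v, which equals lambda_1 for v = v_1.
v1 = V[:, 0]
z1 = X @ v1
var_z1 = np.mean(z1 ** 2)

# Keep k components, reconstruct, and measure the mean squared reconstruction error.
k = 2
Z = X @ V[:, :k]                            # z_i = x^t v_i for i = 1..k
X_hat = Z @ V[:, :k].T
recon_error = np.mean(np.sum((X - X_hat) ** 2, axis=1))

print(var_z1, lam[0])                       # the two values agree
print(recon_error, lam[k:].sum())           # error equals the discarded eigenvalues
```

The mean squared reconstruction error equals the sum of the discarded eigenvalues, which is why reconstruction error falls as more components are kept.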
