Lambdas, Vectors, and Dynamic Logic

Develop: a vector semantics and a dynamic logic for lambda calculus models of language

Mehrnoosh Sadrzadeh, Queen Mary University of London
Joint work with Reinhard Muskens (Tilburg)
Supported by a Royal Society International Exchange Award

Why put lambdas and vectors together? Because we want to develop a compositional distributional semantics for natural language. What is that?

Distributional Semantics

Words that occur in similar contexts have similar meanings.
“oculist and eye-doctor . . . occur in almost the same environments”
“If A and B have almost identical environments. . . we say that they are synonyms.” Harris (1954)
“You shall know a word by the company it keeps!” Firth (1957)

The meaning of a word is thus related to the distribution of words around it. (Daniel Jurafsky & James H. Martin, Speech and Language Processing)

Imagine you had never seen the word tesguino, but were given the following text:
A bottle of tesguino is on the table.
Everybody likes tesguino.
Tesguino makes you drunk.
We make tesguino out of corn.
“an alcoholic drink made of corn”

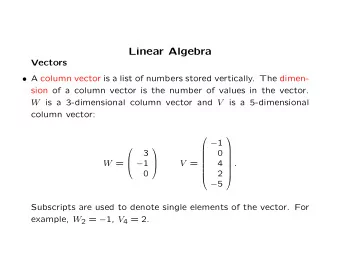

Algorithm for Word Meaning Acquisition: Co-Occurrence Matrix

• sugar, a sliced lemon, a tablespoonful of apricot preserve or jam, a pinch each of,
• their enjoyment. Cautiously she sampled her first pineapple and another fruit whose taste she likened
• well suited to programming on the digital computer. In finding the optimal R-stage policy from
• for the purpose of gathering data and information necessary for the study authorized in the

| | aardvark | ... | computer | data | pinch | result | sugar | ... |
|---|---|---|---|---|---|---|---|---|
| apricot | 0 | ... | 0 | 0 | 1 | 0 | 1 | ... |
| pineapple | 0 | ... | 0 | 0 | 1 | 0 | 1 | ... |
| digital | 0 | ... | 2 | 1 | 0 | 1 | 0 | ... |
| information | 0 | ... | 1 | 6 | 0 | 4 | 0 | ... |

Figure 15.4: Co-occurrence vectors for four words, computed from the Brown corpus.

[| digital |] := (0, …, 2, 1, 0, 1, 0)
Normalised: [| digital |] := (0, …, 0.25, 0.12, 0, 0.15, 0)

Word-Context Matrix

Intuition: a word is represented by embedding it into a vector space, rather than being treated as an “atom”:
Q: What’s the meaning of life? A: LIFE
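A minimal sketch of how such co-occurrence vectors could be computed, applied to the tesguino sentences from earlier. The window size of 2, the whitespace tokenisation, the choice of context words, and the Euclidean normalisation are all assumptions made for illustration; the slides do not say which window or normalisation was used. The function names are hypothetical.

```python
from collections import Counter, defaultdict
import math

def cooccurrence(sentences, window=2):
    """Count, for every word, the words seen within `window` positions of it."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()  # assumption: naive whitespace tokenisation
        for i, target in enumerate(tokens):
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    counts[target][tokens[j]] += 1
    return counts

def to_vector(counter, contexts):
    """Read off one row of the word-context matrix for the chosen context words."""
    return [counter[c] for c in contexts]

def normalise(vec):
    """Scale a vector to unit Euclidean length (one possible normalisation)."""
    length = math.sqrt(sum(x * x for x in vec))
    return [x / length for x in vec] if length else list(vec)

corpus = [
    "a bottle of tesguino is on the table",
    "everybody likes tesguino",
    "tesguino makes you drunk",
    "we make tesguino out of corn",
]
counts = cooccurrence(corpus, window=2)
contexts = ["bottle", "likes", "drunk", "corn"]  # hypothetical choice of context words
tesguino = to_vector(counts["tesguino"], contexts)
print(tesguino, normalise(tesguino))
```

With this narrow window, "drunk" and "corn" fall outside the context of tesguino, which illustrates how strongly the resulting vectors depend on the window chosen.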

Automatic Meaning Acquisition (Curran, 2006)

Introduction: launch, implementation, advent, addition, arrival, creation, inclusion
Evaluation: assessment, examination, appraisal, review, audit, analysis, consultation, test, verification
Methods: technique, procedure, means, approach, tool, concept, practice, formula

Stronghold: Semantic Similarity

[Figure: the vectors for apricot, information and digital plotted in two dimensions. Axes: Dimension 1: ‘large’, Dimension 2: ‘data’.]
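Semantic similarity between two word vectors can be measured in several ways. The sketch below assumes cosine similarity, a common choice that the slides do not explicitly name, and applies it to the visible columns (computer, data, pinch, result, sugar) of the co-occurrence table from Figure 15.4. The function name is hypothetical.

```python
import math

def cosine(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # convention chosen here for all-zero vectors
    return dot / (norm_u * norm_v)

# Counts over the contexts: computer, data, pinch, result, sugar
vectors = {
    "apricot":     [0, 0, 1, 0, 1],
    "pineapple":   [0, 0, 1, 0, 1],
    "digital":     [2, 1, 0, 1, 0],
    "information": [1, 6, 0, 4, 0],
}

for a, b in [("apricot", "pineapple"), ("apricot", "digital"), ("digital", "information")]:
    print(a, b, round(cosine(vectors[a], vectors[b]), 3))
```

On these counts, apricot and pineapple share all their contexts while apricot and digital share none, so the fruit words come out as maximally similar and the fruit/computing pair as orthogonal.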

Evaluations

WordSim-353: noun pairs (cup, coffee)
SimLex-999: adjective, noun, and verb pairs (cup, drink)
TOEFL: 80 questions, e.g. “Levied” is closest in meaning to: imposed, believed, requested, correlated
SCWS: 2003 words in sentences
Analogy: a to b is like c to d, e.g. Athens to Greece is like Oslo to Norway

Applications

Drawing inferences about meanings of words. Named entity recognition, parsing, semantic role labelling, summarisation, essay marking, …

Compositional Distributional Semantics Extending distributional semantics from words to sentences

Vectors for Sentences

[Figure: two-dimensional plot with axes base 1 and base 2. Labelled points: information, result, digital, data, and the sentences “dogs bark” and “mice squeak”; marked coordinates [6,4] and [1,1].]

A direct distributional approach…

At childhood we spent the summers in the countryside.
Khaterey daram sharbat-e albalooye anjast ast.
My mother preferred the jams, however.
My worst memory is being forced to take a nap in the afternoons.

“A memory from then is their sour cherry drink”

An approach that forgets about grammar

$\overrightarrow{\text{vampires kill men}} = \overrightarrow{\text{vampires}} + \overrightarrow{\text{kill}} + \overrightarrow{\text{men}}$

$\overrightarrow{\text{vampires kill men}} = \overrightarrow{\text{men kill vampires}}$
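The problem with composing by addition can be checked directly: because vector addition is commutative, any reordering of the words yields the same sentence vector. The word vectors below are made-up numbers used only for illustration, and `add_compose` is a hypothetical helper name.

```python
def add_compose(words, lexicon):
    """Sentence vector as the component-wise sum of its word vectors."""
    dims = len(next(iter(lexicon.values())))
    total = [0] * dims
    for word in words:
        total = [t + x for t, x in zip(total, lexicon[word])]
    return total

# Hypothetical 3-dimensional word vectors (not taken from any corpus)
lexicon = {
    "vampires": [3, 0, 1],
    "kill":     [1, 4, 0],
    "men":      [0, 2, 5],
}

s1 = add_compose(["vampires", "kill", "men"], lexicon)
s2 = add_compose(["men", "kill", "vampires"], lexicon)
print(s1, s2, s1 == s2)
```

Both sentences receive the same vector even though they describe opposite events, which is why a grammar-aware composition is needed.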

So what should we do?

Formal Semantics
Syntax → Semantics (structure preserving map)

Formal Semantics with Vectors
Syntax (Formal Grammar) → Semantics (Vectors), structure preserving map

Compositional Distributional Semantics
Syntax (Pregroup Grammar) → Semantics (Vector Spaces), strongly monoidal functor
Coecke, Sadrzadeh, Clark (Lambek’s 90th Festschrift), 2010
Preller, Sadrzadeh (JoLLI), 2011

Compositional Distributional Semantics
Syntax (Lambek Calculus) → Semantics (Vector Spaces), homomorphism
Coecke, Grefenstette, Sadrzadeh (APAL), 2013

Compositional Distributional Semantics
Syntax (Lambek-Grishin) → Semantics (Vector Spaces), homomorphism
G. Wijnholds (MSc Thesis, ILLC), 2015

Syntax (CCG) → Semantics (Vector Spaces), structure preserving map
Krishnamurthy and Mitchell (CVSC, ACL workshop), 2013
Maillard, Clark, Grefenstette (Type Theory and NL, EACL workshop), 2014
Baroni, Bernardi, Zamparelli (LILT), 2014

Syntax (ACG) → Semantics (Vector Spaces), homomorphism
Muskens and Sadrzadeh, DSALT, LACL 2016; the journal version is to appear in JLM 2018
Royal Society International Exchange Award

Structure preserving map from Syntax (CCG) to Semantics

Syntax, types: $A$, $X/Y$, $X\backslash Y$
Syntax, rules: $X/Y \;\; Y \Rightarrow X$ and $Y \;\; X\backslash Y \Rightarrow X$

Semantics, types:
$A \mapsto U \ni V_i$
$X/Y \mapsto X \otimes Y^* \ni T_i, T_{ij}, T_{ijk}, T_{ijkl}, \cdots$
$X\backslash Y \mapsto Y^* \otimes X$

Semantics, rules:
$X/Y \;\; Y \Rightarrow X \;\mapsto\; X \otimes Y^* \otimes Y \Rightarrow X$
$Y \;\; X\backslash Y \Rightarrow X \;\mapsto\; Y \otimes Y^* \otimes X \Rightarrow X$

On elements of these spaces:
$T_{ij\cdots k} \, T_{kl\cdots w} = T_{ij\cdots l\cdots w}$
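The semantic rule on tensors can be read as a contraction: the shared index of the two tensors is summed over and disappears from the result. The sketch below assumes that reading (the usual summation convention) and shows it for the two smallest cases, a matrix applied to a vector and an order-3 tensor applied to a vector. All numbers and function names are hypothetical.

```python
def contract_matrix_vector(T, v):
    """T_ik v_k -> T_i: sum over the shared index k."""
    return [sum(T[i][k] * v[k] for k in range(len(v))) for i in range(len(T))]

def contract_cube_vector(C, v):
    """C_ijk v_k -> C_ij: contract the last index of an order-3 tensor."""
    return [contract_matrix_vector(M, v) for M in C]

# Hypothetical element of X (x) Y*, e.g. a one-argument function word, as a matrix
T = [[1, 0],
     [1, 1]]
# Hypothetical element of Y, e.g. a noun vector
v = [2, 3]

# Hypothetical order-3 tensor, e.g. a word that takes two arguments
C = [[[1, 0], [0, 1]],
     [[1, 1], [0, 0]]]

print(contract_matrix_vector(T, v))   # element of X
print(contract_cube_vector(C, v))     # a matrix, still waiting for one more argument
```

Unlike addition, contraction is sensitive to which tensor plays the function role and which the argument role, so the grammatical structure of the sentence determines the result.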
