Information Retrieval: What is IR? Matching Models Overview

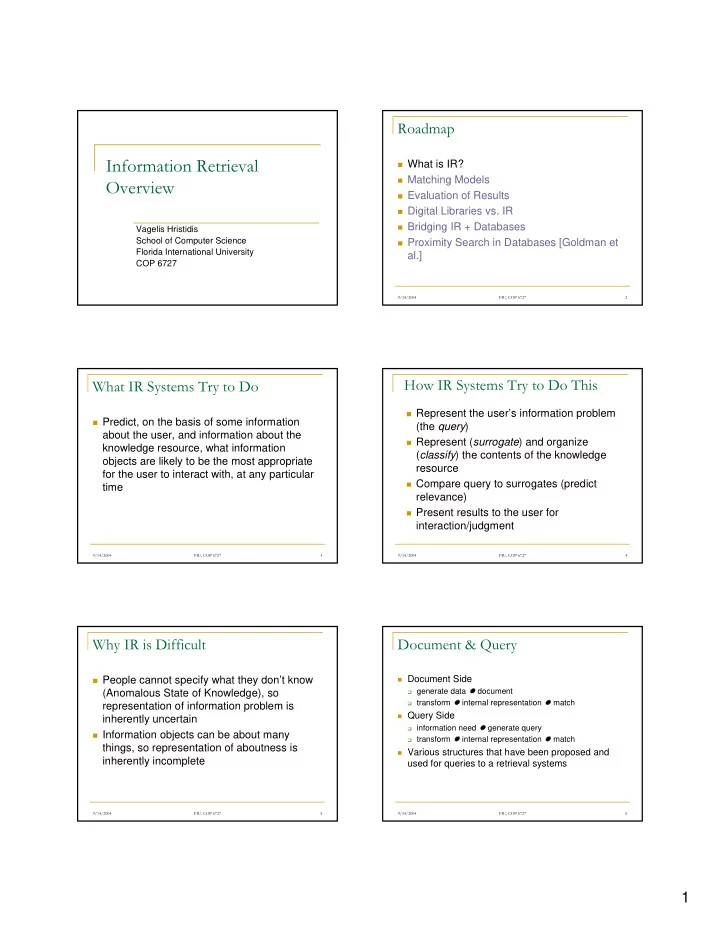

Information Retrieval

Vagelis Hristidis, School of Computer Science, Florida International University

COP 6727, 9/14/2004

Roadmap

- What is IR?
- Matching Models Overview
- Evaluation of Results
- Digital Libraries vs. IR
- Bridging IR + Databases
  - Proximity Search in Databases [Goldman et al.]

What IR Systems Try to Do

- Predict, on the basis of some information about the user and information about the knowledge resource, what information objects are likely to be the most appropriate for the user to interact with at any particular time

How IR Systems Try to Do This

- Represent the user's information problem (the query)
- Represent (surrogate) and organize (classify) the contents of the knowledge resource
- Compare query to surrogates (predict relevance)
- Present results to the user for interaction/judgment

Why IR is Difficult

- People cannot specify what they don't know (Anomalous State of Knowledge), so the representation of the information problem is inherently uncertain
- Information objects can be about many things, so the representation of aboutness is inherently incomplete

Document & Query

- Document Side
  - generate data → document → transform → internal representation → match
- Query Side
  - information need → generate query → transform → internal representation → match
- Various structures have been proposed and used for queries to a retrieval system

The document and the query undergo parallel processes within the retrieval system.

[Figure: two parallel pipelines feeding the matching process, with stages labelled as belonging to the ectosystem or the endosystem. Document side: gather data, then two transform steps that produce the internal data representation and the format to use in the matching process. Query side: information need, generate query, then two transform steps that produce the internal representation and the format to use in the matching process.]

Roadmap

- What is IR?
- Matching Models
- Evaluation of Results
- Digital Libraries vs. IR
- Bridging IR + Databases
  - Proximity Search in Databases [Goldman et al.]

Matching Criteria

- An exact match can only be found in special situations
  - requires precise query
  - numerical or business applications
- Range Match
  - works best in a DB with defined fields
- Approximate Matching
- Matching techniques can be combined
  - e.g., begin with approximate, narrow down with exact or range

Boolean Queries

- Based on concepts from logic: AND, OR, NOT
- Order of operations (two conventions)
  - NOT, AND, OR
  - left to right
- Standard forms
  - Disjunctive Normal Form (DNF): Terms, Conjuncts, Disjuncts
    - (P AND Q) OR (Q AND NOT R) OR (P AND R)
  - Conjunctive Normal Form (CNF): Terms, Disjuncts, Conjuncts
    - P AND (NOT Q OR R) AND (S OR NOT R)

Boolean-based Matching

- Separate the documents containing a given term from those that do not.
- No similarity between document and query structure
- Proximity Judgement: Gradations of the retrieved set

[Figure: term-document incidence matrix (0/1 entries) over the terms Mediterranean, scholarships, agriculture, cathedrals, horticulture, adventure, disasters, leprosy, recipes, bridge, tennis, Venus, flags, with the example queries "flags AND tennis", "leprosy AND tennis", "Venus OR (tennis AND flags)", and "(bridge OR flags) AND tennis". The row and column alignment of the matrix did not survive extraction.]

Truth Table

| P | Q | NOT P | P AND Q | P OR Q |
|---|---|-------|---------|--------|
| 0 | 0 | TRUE  | FALSE   | FALSE  |
| 0 | 1 | TRUE  | FALSE   | TRUE   |
| 1 | 0 | FALSE | FALSE   | TRUE   |
| 1 | 1 | FALSE | TRUE    | TRUE   |
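The Boolean matching described above can be sketched with set operations. This is a minimal illustration, not the slides' own data: the `index` contents, the document IDs, and the helper names `docs` and `NOT` are all hypothetical, chosen only so that the four example queries can be evaluated.

```python
# Hypothetical inverted index: term -> set of IDs of documents containing it.
# The postings below are made up for illustration; they are not the slide's matrix.
index = {
    "flags": {1, 2},
    "tennis": {2, 3},
    "bridge": {3, 4},
    "Venus": {4},
    "leprosy": {1},
}
all_docs = {1, 2, 3, 4}


def docs(term):
    """Return the set of documents containing the term (empty if unknown)."""
    return index.get(term, set())


def NOT(doc_set):
    """Boolean NOT is the complement with respect to the whole collection."""
    return all_docs - doc_set


# AND is set intersection (&), OR is set union (|).
flags_and_tennis = docs("flags") & docs("tennis")
leprosy_and_tennis = docs("leprosy") & docs("tennis")
venus_or_tennis_and_flags = docs("Venus") | (docs("tennis") & docs("flags"))
bridge_or_flags_and_tennis = (docs("bridge") | docs("flags")) & docs("tennis")
tennis_and_not_flags = docs("tennis") & NOT(docs("flags"))

print(flags_and_tennis)             # {2}
print(leprosy_and_tennis)           # set()
print(venus_or_tennis_and_flags)    # {2, 4}
print(bridge_or_flags_and_tennis)   # {2, 3}
print(tennis_and_not_flags)         # {3}
```

Each result is an unranked set: a document is either in it or not, which is why exact-match retrieval gives no inherent ordering of the retrieved documents.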

Exact Match IR

- Advantages
  - Efficient
  - Boolean queries capture some aspects of information problem structure
- Disadvantages
  - Not effective
  - Difficult to write effective queries
  - No inherent document ranking

Vector Queries

- Documents and queries are vectors of terms
- Actual vectors have many terms (thousands)
- Vectors can be Boolean (keyword) or weighted (term frequencies)
- Example terms: "dog", "cat", "house", "sink", "road", "car"
- Boolean: (1,1,0,0,0,0), (0,0,1,1,0,0)
- Weighted: (0.01, 0.01, 0.002, 0.0, 0.0, 0.0)
- Queries can be weighted also*

Vector-based Matching: Metrics

- Metric or Distance Measure: documents close together in the vector space are likely to be highly similar

[Figure: two-dimensional vector space whose axes represent terms, Term 1 ("internet") and Term 2 ("weather"). Documents A, B, C, and D are represented as points in the vector space, annotated with "Similarities among documents" and "Similarities between documents and queries".]

Vector-based Matching: Cosine

- Cosine of the angle between the vectors representing the document and the query
- Documents "in the same direction" are closely related.
- Transforms the angular measure into a measure ranging from 1 for the highest similarity to 0 for the lowest

Example, continued

Term order: a, and, cat, dog, frog

- Document A: "A dog and a cat."
  - Vector: (2,1,1,1,0)
- Document B: "A frog."
  - Vector: (1,0,0,0,1)

Queries

- Queries can be represented as vectors in the same way as documents:
  - Dog = (0,0,0,1,0)
  - Frog = ( )
  - Dog and frog = ( )
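The document and query vectors above can be produced mechanically by counting term occurrences over the fixed term order a, and, cat, dog, frog. The sketch below is one way to do that; the function name `term_vector`, the lowercase letters-only tokenizer, and the choice to treat "and" in a query as an ordinary vocabulary term (rather than a Boolean operator) are assumptions, not something the slides specify.

```python
import re
from collections import Counter

# Term order taken from the example: a, and, cat, dog, frog.
VOCABULARY = ["a", "and", "cat", "dog", "frog"]


def term_vector(text, vocabulary=VOCABULARY):
    """Count how often each vocabulary term occurs in the text.

    Tokenization is an assumption: lowercase the text and keep runs of letters.
    """
    words = re.findall(r"[a-z]+", text.lower())
    counts = Counter(words)  # missing terms count as 0
    return [counts[term] for term in vocabulary]


doc_a = term_vector("A dog and a cat.")
doc_b = term_vector("A frog.")
query_dog = term_vector("dog")
query_frog = term_vector("frog")
query_dog_and_frog = term_vector("dog and frog")

print(doc_a)               # [2, 1, 1, 1, 0]
print(doc_b)               # [1, 0, 0, 0, 1]
print(query_dog)           # [0, 0, 0, 1, 0]
print(query_frog)          # [0, 0, 0, 0, 1]
print(query_dog_and_frog)  # [0, 1, 0, 1, 1]
```

Under these assumptions the same function serves for documents and queries, which is what allows them to be compared in one vector space.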

Similarity measures

- There are many different ways to measure how similar two documents are, or how similar a document is to a query
- The cosine measure is a very common similarity measure
- Using a similarity measure, a set of documents can be compared to a query and the most similar document returned

The cosine measure

- For two vectors $d$ and $d'$ the cosine similarity between $d$ and $d'$ is given by:

$$\cos(d, d') = \frac{d \cdot d'}{|d|\,|d'|}$$

- Here $d \cdot d'$ is the inner (dot) product of $d$ and $d'$, calculated by multiplying corresponding frequencies together and summing the results
- The cosine measure calculates the cosine of the angle between the vectors in a high-dimensional virtual space

Vector Space Model

- Advantages
  - Straightforward ranking
  - Simple query formulation (bag of words)
  - Intuitively appealing
  - Effective
- Disadvantages
  - Unstructured queries
  - Effective calculations and parameters must be empirically determined

Example

- Let $d = (2,1,1,1,0)$ and $d' = (0,0,0,1,0)$
  - $d \cdot d' = 2 \times 0 + 1 \times 0 + 1 \times 0 + 1 \times 1 + 0 \times 0 = 1$
  - $|d| = \sqrt{2^2 + 1^2 + 1^2 + 1^2 + 0^2} = \sqrt{7} \approx 2.646$
  - $|d'| = \sqrt{0^2 + 0^2 + 0^2 + 1^2 + 0^2} = \sqrt{1} = 1$
  - Similarity $= 1 / (1 \times 2.646) \approx 0.378$
- Let $d = (1,0,0,0,1)$ and $d' = (0,0,0,1,0)$
  - Similarity $= 0$, because $d \cdot d' = 0$

Fuzzy Queries

- Fuzzy Logic: Propositions have a "truth value" between 0 and 1
- Fuzzy NOT: $1 - t$
- Fuzzy AND: $t_1 \cdot t_2$
- Fuzzy OR: $1 - (1 - t_1)(1 - t_2)$
- Example:

| Proposition | Case 1 | Case 2 |
|---|---|---|
| All swans are white | 0.8 | 1 |
| All swans can swim | 0.9 | 1 |
| White and swim | 0.8 × 0.9 = 0.72 | 1 |
| White or swim | 1 − (1 − 0.8)(1 − 0.9) = 0.98 | 1 |

Probabilistic Queries

- Like fuzzy queries, except they adhere to the laws of probability
- Can use probabilistic concepts like Bayes' Theorem
- Term frequency data can be used to estimate probabilities
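The two numeric examples in this group of slides, the cosine similarity of the term vectors and the fuzzy swan propositions, can be checked with a short script. This is a sketch under stated assumptions: the function names are illustrative, and returning 0.0 for an all-zero vector is a convention chosen here because the slides do not say how to handle that case.

```python
import math


def cosine_similarity(d, d_prime):
    """Dot product of the two vectors divided by the product of their lengths."""
    dot = sum(x * y for x, y in zip(d, d_prime))
    norm_d = math.sqrt(sum(x * x for x in d))
    norm_d_prime = math.sqrt(sum(y * y for y in d_prime))
    if norm_d == 0 or norm_d_prime == 0:
        return 0.0  # assumption: an empty vector is similar to nothing
    return dot / (norm_d * norm_d_prime)


def fuzzy_not(t):
    return 1 - t


def fuzzy_and(t1, t2):
    return t1 * t2


def fuzzy_or(t1, t2):
    return 1 - (1 - t1) * (1 - t2)


# Cosine example: document vectors against the query "dog" = (0,0,0,1,0).
sim_a = cosine_similarity([2, 1, 1, 1, 0], [0, 0, 0, 1, 0])
sim_b = cosine_similarity([1, 0, 0, 0, 1], [0, 0, 0, 1, 0])
print(round(sim_a, 3))  # 0.378
print(round(sim_b, 3))  # 0.0

# Fuzzy example: "white" has truth value 0.8, "swim" has truth value 0.9.
white_and_swim = fuzzy_and(0.8, 0.9)
white_or_swim = fuzzy_or(0.8, 0.9)
print(round(white_and_swim, 2))  # 0.72
print(round(white_or_swim, 2))   # 0.98
```

Ranking a collection then amounts to computing `cosine_similarity` between the query vector and every document vector and sorting by the result.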

Natural Language Queries

- The "Holy Grail" of information retrieval
- Issues in Natural Language Processing
  - syntax
  - semantics
  - pragmatics
  - speech understanding
  - speech generation

Vocabulary

- Stopword lists
  - Commonly occurring words are unlikely to give useful information and may be removed from the vocabulary to speed processing
  - Stopword lists contain frequent words to be excluded
  - Stopword lists need to be used carefully
    - E.g. "to be or not to be"

Term weighting

- Not all words are equally useful
- A word is most likely to be highly relevant to document A if it is:
  - Infrequent in other documents
  - Frequent in document A
  - A is short
- The cosine measure needs to be modified to reflect this

Normalised term frequency (tf)

- A normalised measure of the importance of a word to a document is its frequency, divided by the maximum frequency of any term in the document
- This is known as the tf factor.
- Document A: raw frequency vector: (2,1,1,1,0), tf vector: ( )
- This stops large documents from scoring higher

Inverse document frequency (idf)

- A calculation designed to make rare words more important than common words
- The idf of word $i$ is given by

$$\mathrm{idf}_i = \log \frac{N}{n_i}$$

- Where $N$ is the number of documents and $n_i$ is the number that contain word $i$

tf-idf

- The tf-idf weighting scheme is to multiply each word in each document by its tf factor and idf factor
- Different schemes are usually used for query vectors
- Different variants of tf-idf are also used
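The tf, idf, and tf-idf definitions above can be tried on the two small documents from the earlier example, A = (2,1,1,1,0) and B = (1,0,0,0,1) over the terms a, and, cat, dog, frog. The sketch below makes several assumptions the slides leave open: the logarithm is natural, a term that appears in no document gets idf 0, and the function names are illustrative.

```python
import math

# Raw frequency vectors over the terms: a, and, cat, dog, frog.
raw_vectors = {
    "A": [2, 1, 1, 1, 0],
    "B": [1, 0, 0, 0, 1],
}


def tf(raw):
    """Normalised term frequency: each count divided by the largest count."""
    max_freq = max(raw)
    if max_freq == 0:
        return [0.0] * len(raw)
    return [freq / max_freq for freq in raw]


def idf(vectors):
    """idf_i = log(N / n_i); natural log and idf = 0 when n_i = 0 are assumptions."""
    n_docs = len(vectors)
    n_terms = len(vectors[0])
    weights = []
    for i in range(n_terms):
        n_i = sum(1 for vector in vectors if vector[i] > 0)
        weights.append(math.log(n_docs / n_i) if n_i else 0.0)
    return weights


def tf_idf(raw, idf_weights):
    """Multiply each term's tf factor by its idf factor."""
    return [t * w for t, w in zip(tf(raw), idf_weights)]


idf_weights = idf(list(raw_vectors.values()))
tf_a = tf(raw_vectors["A"])
tfidf_a = tf_idf(raw_vectors["A"], idf_weights)

print(tf_a)  # [1.0, 0.5, 0.5, 0.5, 0.0]
print([round(w, 3) for w in idf_weights])
print([round(w, 3) for w in tfidf_a])
```

In this two-document collection the term "a" occurs in both documents, so its idf is log(2/2) = 0 and it contributes nothing to the weighted vectors, which is the intended effect of down-weighting common words.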
