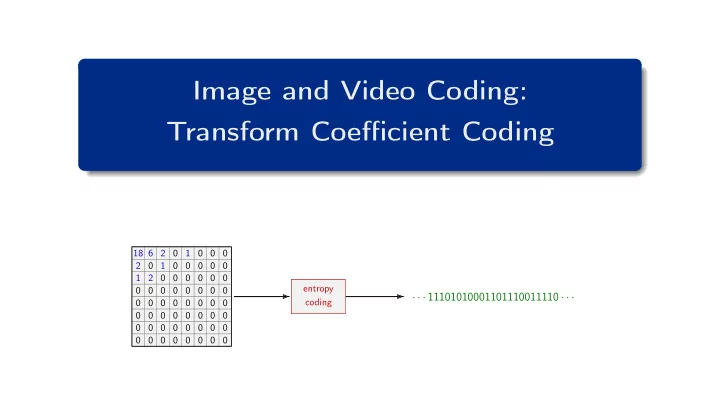

Image and Video Coding: Transform Coefficient Coding

[Figure: 8×8 block of quantization indexes (first rows: 18 6 2 0 1 0 0 0 / 2 0 1 0 0 0 0 0 / 1 2 0 0 0 0 0 0, all remaining rows zero) → entropy coding → bitstream]

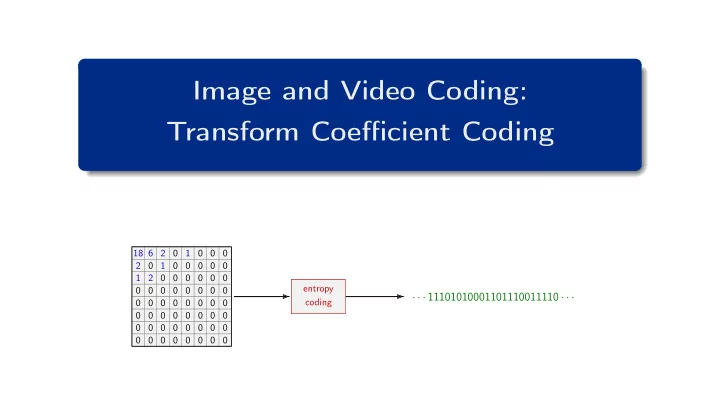

Transform Coefficient Coding

[Figure: transform & quantization → 8×8 block of quantization indexes → entropy coding → bitstream]

Entropy Coding of Quantization Indexes (for transform coefficients)

Requirements:
- Lossless coding: reconstruction of the same indexes at the decoder side
- Efficient coding: use as few bits as possible (on average)

Transform and quantization:
- Quantization indexes have certain statistical properties
- Utilize the statistical properties of the quantization indexes for efficient coding

Heiko Schwarz (Freie Universität Berlin) — Image and Video Coding: Transform Coefficient Coding 2 / 42

Variable-Length Coding / Scalar Codes: Entropy Coding

Lossless Coding / Entropy Coding
- Maps a sequence of symbols into a sequence of bits
- Reversible mapping: the original sequence of symbols can be reconstructed

Unique Decodability
- Each sequence of bits can only be generated by one sequence of symbols
- Most important class: prefix codes

Simplest Variant: Scalar Variable-Length Codes
- Table that assigns a codeword to each symbol

Examples (three different codeword tables for the same alphabet):

| symbol | codeword (example 1) | codeword (example 2) | codeword (example 3) |
|--------|----------------------|----------------------|----------------------|
| a | 000 | 1 | 0000 |
| b | 001 | 01 | 0001 |
| c | 010 | 001 | 001 |
| d | 011 | 0001 | 01 |
| e | 100 | 00001 | 10 |
| f | 101 | 000001 | 110 |
| g | 110 | 0000001 | 1110 |
| h | 111 | 0000000 | 1111 |
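As a minimal sketch of how such a codeword table is used, the following Python snippet encodes and decodes a symbol string with the third example table. The function names `encode` and `decode` and the test message are illustrative assumptions, not part of the slides.

```python
# Third example table from the slide (a scalar variable-length code).
CODE = {
    "a": "0000", "b": "0001", "c": "001", "d": "01",
    "e": "10", "f": "110", "g": "1110", "h": "1111",
}


def encode(symbols, code=CODE):
    """Concatenate the codewords of all symbols."""
    return "".join(code[s] for s in symbols)


def decode(bits, code=CODE):
    """Read bits until they match a codeword, emit the symbol, repeat.

    This greedy procedure is only correct for prefix codes.
    """
    inverse = {cw: s for s, cw in code.items()}
    symbols, current = [], ""
    for bit in bits:
        current += bit
        if current in inverse:
            symbols.append(inverse[current])
            current = ""
    if current:
        raise ValueError("bit string ends inside a codeword")
    return "".join(symbols)


message = "deadbeef"  # hypothetical test message
bits = encode(message)
print(bits, decode(bits))
```

The decoder can emit a symbol as soon as its last bit arrives, which is what makes the mapping reversible without any separators between codewords.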

Variable-Length Coding / Scalar Codes: Prefix Codes

Property: no codeword is a prefix of any other codeword
- Uniquely and instantaneously decodable codes
- Prefix codes can be represented as binary code trees
- There are no better uniquely decodable codes than the best prefix codes

| letter | codeword |
|--------|----------|
| a | 00 |
| b | 010 |
| c | 011 |
| d | 10 |
| e | 1100 |
| f | 1101 |
| g | 111 |

[Figure: binary code tree for this code; branches are labeled 0 and 1 from the root node, terminal nodes are a (00), b (010), c (011), d (10), e (1100), f (1101), g (111)]
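The prefix property can be checked mechanically. The sketch below tests the code table of this slide; the helper name `is_prefix_free` and the counter-example `{0, 01, 11}` are illustrative assumptions.

```python
def is_prefix_free(codewords):
    """Return True if no codeword is a prefix of another one.

    After lexicographic sorting, a codeword that is a prefix of any other
    codeword is also a prefix of its direct successor, so it suffices to
    compare neighbours.
    """
    words = sorted(codewords)
    return all(not nxt.startswith(cur) for cur, nxt in zip(words, words[1:]))


slide_code = {"a": "00", "b": "010", "c": "011", "d": "10",
              "e": "1100", "f": "1101", "g": "111"}
not_a_prefix_code = ["0", "01", "11"]  # hypothetical: "0" is a prefix of "01"

print(is_prefix_free(slide_code.values()))  # code from the slide
print(is_prefix_free(not_a_prefix_code))
```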

Variable-Length Coding / Scalar Codes: Coding Efficiency of Scalar Codes

Design Goal: minimize the number of required bits
- Minimize the average codeword length $\bar{\ell}$ while retaining unique decodability:

$$\bar{\ell} = \sum_k p_k \, \ell_k$$

- Assign shorter codewords to more probable symbols

Minimum Achievable Average Codeword Length
- Entropy $H$: lower bound on the average codeword length $\bar{\ell}$

$$H = H(S) = H(p) = -\sum_k p_k \log_2 p_k \qquad \text{with} \qquad \bar{\ell} \ge H$$

Redundancy: increase in average codeword length relative to the entropy
- absolute: $\varrho = \bar{\ell} - H \ge 0$
- relative: $\varrho' = \dfrac{\bar{\ell}}{H} - 1 \ge 0$

Variable-Length Coding / Scalar Codes: Optimal Prefix Codes

Prefix Codes with Zero Redundancy
- Necessary condition: $\bar{\ell} = H$, that is,

$$\sum_k p_k \, \ell_k = -\sum_k p_k \log_2 p_k$$

  which requires

$$\forall k:\ \ell_k = -\log_2 p_k \qquad \Longleftrightarrow \qquad \forall k:\ p_k = 2^{-\ell_k}$$

- Only possible if all probability masses are negative integer powers of 2

Optimal Prefix Codes: Huffman Algorithm
- Algorithm for constructing prefix codes with minimum redundancy

1. Construct the binary code tree (for the given probability mass function):
   - Select the two least likely symbols and create a parent node
   - Consider the combination of the two symbols as a new symbol
   - Continue until all symbols are merged into the root node
2. Construct the code by labeling the branches with "0" and "1"
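The two steps of the Huffman algorithm can be sketched in a few lines of Python. This is one possible implementation, not necessarily the one used in the lecture: the function name `huffman_code`, the heap-based merging, and the dyadic test pmf are assumptions made for illustration. Because all probabilities in the test pmf are negative integer powers of 2, the resulting code should have zero redundancy.

```python
import heapq
import itertools
import math


def huffman_code(pmf):
    """Build a Huffman code for a dict {symbol: probability} (>= 2 symbols).

    Each heap entry holds the probability of a subtree and the partial
    codewords of the symbols inside that subtree.
    """
    counter = itertools.count()  # tie-breaker so dicts are never compared
    heap = [(p, next(counter), {sym: ""}) for sym, p in pmf.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        # Step 1: merge the two least likely (sub)trees into a parent node.
        p0, _, code0 = heapq.heappop(heap)
        p1, _, code1 = heapq.heappop(heap)
        # Step 2: label the two branches of the parent with "0" and "1".
        merged = {s: "0" + c for s, c in code0.items()}
        merged.update({s: "1" + c for s, c in code1.items()})
        heapq.heappush(heap, (p0 + p1, next(counter), merged))
    return heap[0][2]


pmf = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}  # hypothetical dyadic pmf
code = huffman_code(pmf)
avg_len = sum(p * len(code[s]) for s, p in pmf.items())
entropy = -sum(p * math.log2(p) for p in pmf.values())
print(code, avg_len, entropy)
```

Different tie-breaking rules can produce different codeword tables, but all of them have the same minimum average codeword length.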

Variable-Length Coding / Scalar Codes: Example: Construction of a Huffman Code

[Figure: step-by-step construction of the code tree]
- Given: alphabet $\mathcal{A}$ with pmf $\{p_k\}$
- First step: assign symbols and probabilities to terminal nodes
- Next steps: repeatedly merge the two least likely nodes (re-ordering for better readability). The parent nodes created are 0.08 (b, c), 0.13 (f, g), 0.17 (h, 0.08), 0.28 (i, 0.13), 0.32 (a, d), 0.40 (e, 0.17), 0.60 (0.32, 0.28), and the root 1.00
- Label the branches with 0 and 1
- Assign codewords (follow the branches from the root to the terminal nodes)

| $a_k$ | $p_k$ | codeword |
|-------|-------|----------|
| a | 0.16 | 111 |
| b | 0.04 | 0001 |
| c | 0.04 | 0000 |
| d | 0.16 | 110 |
| e | 0.23 | 01 |
| f | 0.07 | 1001 |
| g | 0.06 | 1000 |
| h | 0.09 | 001 |
| i | 0.15 | 101 |

Result: $\bar{\ell} = 2.98$, $H(p) \approx 2.94$, $\varrho \approx 0.04$, $\varrho' \approx 1.34\,\%$
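The figures quoted for this example can be reproduced directly from the codeword table. The following sketch only evaluates the formulas for average codeword length, entropy, and redundancy; the variable names are illustrative.

```python
import math

# pmf and codewords as listed in the example table.
pmf = {"a": 0.16, "b": 0.04, "c": 0.04, "d": 0.16, "e": 0.23,
       "f": 0.07, "g": 0.06, "h": 0.09, "i": 0.15}
code = {"a": "111", "b": "0001", "c": "0000", "d": "110", "e": "01",
        "f": "1001", "g": "1000", "h": "001", "i": "101"}

avg_len = sum(pmf[s] * len(code[s]) for s in pmf)       # average codeword length
entropy = -sum(p * math.log2(p) for p in pmf.values())  # entropy H(p)
abs_redundancy = avg_len - entropy                      # absolute redundancy
rel_redundancy = avg_len / entropy - 1                  # relative redundancy

print(f"avg length = {avg_len:.2f} bit, H = {entropy:.2f} bit")
print(f"redundancy = {abs_redundancy:.2f} bit ({100 * rel_redundancy:.2f} %)")
```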

Variable-Length Coding / Conditional Codes and Block Codes: Inefficiency of Scalar Huffman Codes

Large Probabilities
- Scalar codes are inefficient if one probability mass $p_k \gg 0.5$
- The minimum length of a codeword is 1 bit
- The relative redundancy can become very large (> 100 %)

Example (binary source):

| $s_k$ | $p(s_k)$ | codeword |
|-------|----------|----------|
| a | 0.9 | 0 |
| b | 0.1 | 1 |

$H \approx 0.469$, $\bar{\ell} = 1$, $\varrho' \approx 113\,\%$

Random Sources with Memory
- Strong statistical dependencies between successive symbols
- Symbol probabilities depend on the values of previous symbols
- Scalar codes cannot exploit these dependencies

Nonstationary Sources
- Statistical properties change over time
- The optimal code changes over time
- Adjusting the code requires one of the following:
  - Send updates for the codeword table (costs bit rate)
  - Regularly reconstruct the code in both encoder and decoder based on the observed statistics (rather complex)
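For the binary source above, the quoted values follow from the definitions of entropy and relative redundancy. The worked evaluation below uses the symbols $\bar{\ell}$, $H$, and $\varrho'$ as defined earlier in the deck; intermediate values are rounded.

```latex
H = -0.9\,\log_2 0.9 - 0.1\,\log_2 0.1 \approx 0.137 + 0.332 = 0.469,
\qquad
\varrho' = \frac{\bar{\ell}}{H} - 1 \approx \frac{1}{0.469} - 1 \approx 1.13 = 113\,\%
```

Since no scalar code can spend less than 1 bit per symbol, the redundancy here cannot be reduced by any choice of codeword table.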

Variable-Length Coding / Conditional Codes and Block Codes: Conditional Codes

Concept:
- Switch the codeword table depending on the preceding symbol (or a function of the preceding symbols)
- Construct the codeword tables using the corresponding conditional probabilities

Conditional Huffman code (one codeword table per value of the preceding symbol $s_{k-1}$):

| $s_k$ | $p(s_k \mid a)$ | codeword | $p(s_k \mid b)$ | codeword | $p(s_k \mid c)$ | codeword |
|-------|------|----|------|----|------|----|
| a | 0.90 | 0 | 0.15 | 10 | 0.25 | 10 |
| b | 0.05 | 10 | 0.80 | 0 | 0.15 | 11 |
| c | 0.05 | 11 | 0.05 | 11 | 0.60 | 0 |

$\bar{\ell}_a = 1.1$, $\bar{\ell}_b = 1.2$, $\bar{\ell}_c = 1.4$

Scalar Huffman code:

| $s_k$ | $p(s_k)$ | codeword |
|-------|----------|----------|
| a | 29/45 | 0 |
| b | 11/45 | 10 |
| c | 5/45 | 11 |

$\bar{\ell}_\text{scal} = 61/45 \approx 1.3556$

Average codeword length $\bar{\ell}_\text{cond}$ for the conditional Huffman code:

$$\bar{\ell}_\text{cond} = \sum_{\forall z} p(z) \cdot \bar{\ell}_z = \frac{29}{45} \cdot 1.1 + \frac{11}{45} \cdot 1.2 + \frac{5}{45} \cdot 1.4 = \frac{521}{450} \approx 1.1578$$

Conditioning never decreases coding efficiency (for optimal codes): $\bar{\ell}_\text{cond} \le \bar{\ell}_\text{scal}$
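The average codeword lengths on this slide can be recomputed exactly with rational arithmetic. The sketch below takes the probabilities and codewords from the two tables; the dictionary layout and variable names are assumptions made for illustration.

```python
from fractions import Fraction as F

symbols = "abc"

# cond_pmf[prev][cur] = p(cur | prev), cond_code[prev][cur] = codeword
cond_pmf = {
    "a": {"a": F("0.90"), "b": F("0.05"), "c": F("0.05")},
    "b": {"a": F("0.15"), "b": F("0.80"), "c": F("0.05")},
    "c": {"a": F("0.25"), "b": F("0.15"), "c": F("0.60")},
}
cond_code = {
    "a": {"a": "0", "b": "10", "c": "11"},
    "b": {"a": "10", "b": "0", "c": "11"},
    "c": {"a": "10", "b": "11", "c": "0"},
}
marginal = {"a": F(29, 45), "b": F(11, 45), "c": F(5, 45)}
scalar_code = {"a": "0", "b": "10", "c": "11"}

# Average length of each conditional table, given the preceding symbol z.
len_given = {
    z: sum(cond_pmf[z][s] * len(cond_code[z][s]) for s in symbols)
    for z in symbols
}
# Weight the per-table averages with the probability of the condition.
len_cond = sum(marginal[z] * len_given[z] for z in symbols)
len_scal = sum(marginal[s] * len(scalar_code[s]) for s in symbols)

print(len_given, len_cond, float(len_cond), len_scal, float(len_scal))
```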

Variable-Length Coding / Conditional Codes and Block Codes: Block Codes

Concept:
- Assign codewords to fixed-size blocks of $N$ consecutive symbols
- Construct the codeword tables using the corresponding joint probabilities

Conditional pmf:

| $s_k$ | $p(s_k \mid a)$ | $p(s_k \mid b)$ | $p(s_k \mid c)$ |
|-------|------|------|------|
| a | 0.90 | 0.15 | 0.25 |
| b | 0.05 | 0.80 | 0.15 |
| c | 0.05 | 0.05 | 0.60 |

Block Huffman code ($N = 2$):

| $s_k s_{k+1}$ | $p(s_k, s_{k+1})$ | codeword |
|------|--------|--------|
| aa | 0.5800 | 0 |
| ab | 0.0322 | 10000 |
| ac | 0.0322 | 10001 |
| ba | 0.0367 | 1010 |
| bb | 0.1956 | 11 |
| bc | 0.0122 | 101100 |
| ca | 0.0278 | 10111 |
| cb | 0.0167 | 101101 |
| cc | 0.0667 | 1001 |

$\bar{\ell}_2 \approx 2.0188$ bits per block, $\bar{\ell} \approx 1.0094$ bits per symbol

Block Huffman coding for different block sizes:

| $N$ | $\bar{\ell}$ (bits per symbol) | # codewords |
|-----|--------|-------|
| 1 (scalar) | 1.3556 | 3 |
| 2 | 1.0094 | 9 |
| 3 | 0.9150 | 27 |
| 4 | 0.8690 | 81 |
| 5 | 0.8462 | 243 |
| 6 | 0.8299 | 729 |
| 7 | 0.8153 | 2187 |
| 8 | 0.8027 | 6561 |
| 9 | 0.7940 | 19683 |

For comparison: scalar $\bar{\ell}_\text{scal} \approx 1.3556$, conditional $\bar{\ell}_\text{cond} \approx 1.1578$

- Two effects: large probabilities are reduced, and conditional probabilities can be taken into account
- Increasing the block size $N$ never decreases coding efficiency (for optimal codes)
- The size of the codeword tables increases exponentially with the block size $N$
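The joint probabilities and the average length of the $N = 2$ block code can be recomputed as follows. This sketch assumes that the first symbol of a block follows the marginal pmf (29/45, 11/45, 5/45) given on the conditional-codes slide, so that $p(s_k, s_{k+1}) = p(s_k)\, p(s_{k+1} \mid s_k)$; variable names are illustrative.

```python
from fractions import Fraction as F

marginal = {"a": F(29, 45), "b": F(11, 45), "c": F(5, 45)}
# cond_pmf[prev][cur] = p(cur | prev)
cond_pmf = {
    "a": {"a": F("0.90"), "b": F("0.05"), "c": F("0.05")},
    "b": {"a": F("0.15"), "b": F("0.80"), "c": F("0.05")},
    "c": {"a": F("0.25"), "b": F("0.15"), "c": F("0.60")},
}
# Block Huffman code for N = 2 as listed in the slide table.
block_code = {
    "aa": "0", "ab": "10000", "ac": "10001",
    "ba": "1010", "bb": "11", "bc": "101100",
    "ca": "10111", "cb": "101101", "cc": "1001",
}

# Joint pmf of two consecutive symbols.
joint = {x + y: marginal[x] * cond_pmf[x][y] for x in "abc" for y in "abc"}

len_block = sum(joint[b] * len(block_code[b]) for b in joint)  # bits per block
len_per_symbol = len_block / 2                                 # bits per symbol

for block, p in joint.items():
    print(block, f"{float(p):.4f}", block_code[block])
print(float(len_block), float(len_per_symbol))
```

The exact per-block value is 1817/900, which the slide lists truncated to four decimals.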

Variable-Length Coding / Conditional Codes and Block Codes: Efficiency and Bounds for Scalar, Conditional, and Block Huffman Codes

Random process $S = \{S_n\}$, alphabet $\mathcal{A} = \{a\}$, condition $C_n = f(S_{n-1}, S_{n-2}, \cdots)$

Lower Bounds on Average Codeword Length
- Scalar codes: marginal entropy

$$H(S) = -\sum_{a \in \mathcal{A}} p(a) \cdot \log_2 p(a)$$

- Conditional codes: conditional entropy

$$H(S \mid C) = -\sum_{a \in \mathcal{A},\, c \in \mathcal{C}} p(a, c) \cdot \log_2 p(a \mid c) \;\le\; H(S)$$

- Block codes: block entropy divided by $N$

$$\frac{H_N(S)}{N} = -\frac{1}{N} \sum_{\mathbf{a} \in \mathcal{A}^N} p(\mathbf{a}) \cdot \log_2 p(\mathbf{a}) \;\le\; \frac{H_{N-1}(S)}{N-1}$$

Entropy Rate & Fundamental Lossless Coding Theorem
- Entropy rate: $\bar{H}(S) = \lim_{N \to \infty} \dfrac{H_N(S)}{N}$
- For all lossless codes: $\bar{\ell} \ge \bar{H}(S)$
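To see how the three bounds relate numerically, they can be evaluated for the three-symbol source of the preceding slides. Using that source here, with the preceding symbol as the condition, is an assumption of this sketch, as are the helper name `entropy` and the variable names.

```python
import math

marginal = {"a": 29 / 45, "b": 11 / 45, "c": 5 / 45}
# cond_pmf[prev][cur] = p(cur | prev), taken from the conditional pmf table
cond_pmf = {
    "a": {"a": 0.90, "b": 0.05, "c": 0.05},
    "b": {"a": 0.15, "b": 0.80, "c": 0.05},
    "c": {"a": 0.25, "b": 0.15, "c": 0.60},
}


def entropy(probs):
    """Entropy in bits of an iterable of probability masses."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Marginal entropy H(S): bound for scalar codes.
h_marginal = entropy(marginal.values())

# Conditional entropy H(S|C) with C = preceding symbol:
# sum over conditions c of p(c) * H(S | C = c).
h_conditional = sum(marginal[c] * entropy(cond_pmf[c].values()) for c in marginal)

# Block entropy per symbol H_2(S) / 2: bound for block codes with N = 2.
joint = [marginal[x] * cond_pmf[x][y] for x in marginal for y in cond_pmf[x]]
h_block2 = entropy(joint) / 2

print(f"H(S) = {h_marginal:.4f}, H(S|C) = {h_conditional:.4f}, "
      f"H_2(S)/2 = {h_block2:.4f}")
```

Each value lies below the average codeword length of the corresponding Huffman code from the earlier slides (1.3556, 1.1578, and 1.0094 bits per symbol).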
