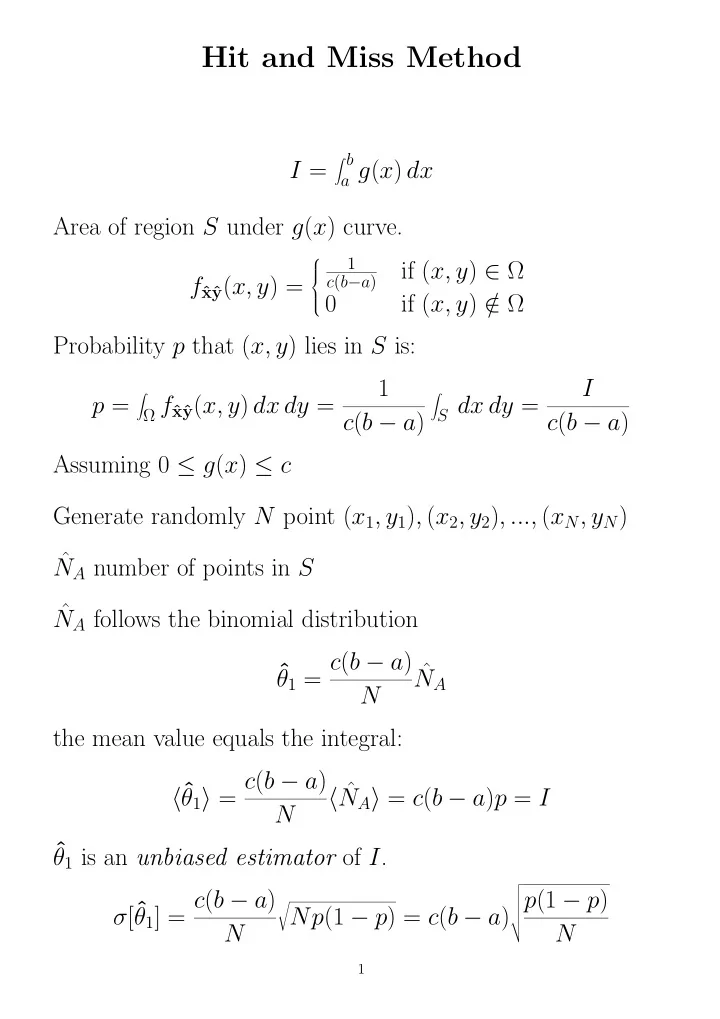

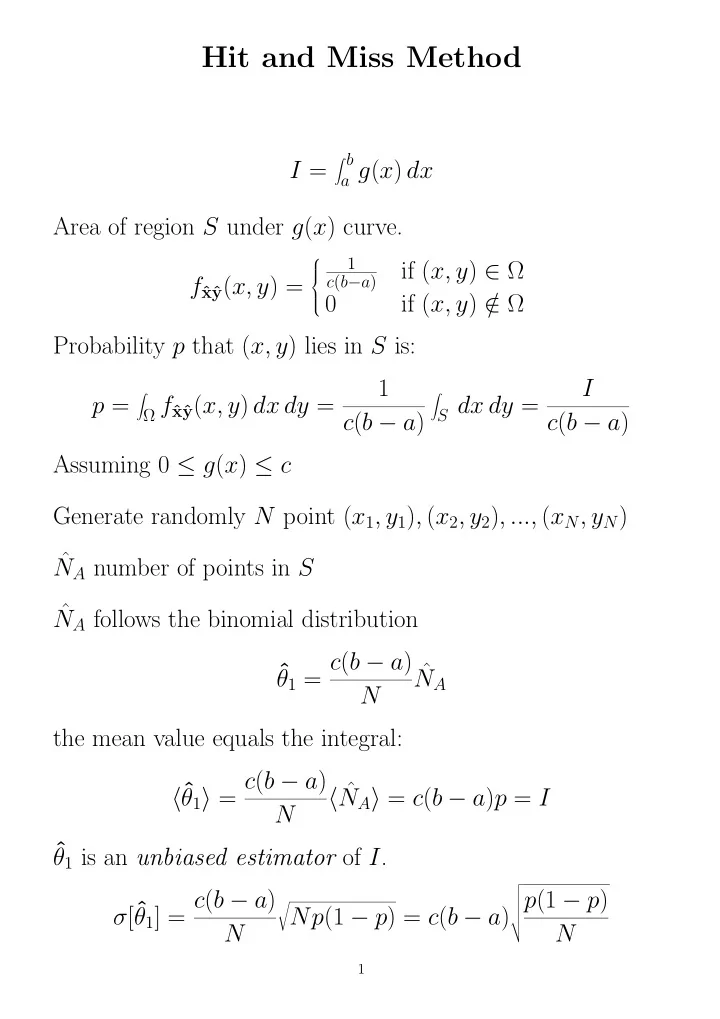

Hit and Miss Method � b I = a g ( x ) dx Area of region S under g ( x ) curve. 1 if ( x, y ) ∈ Ω c ( b − a ) f ˆ y ( x, y ) = xˆ 0 if ( x, y ) / ∈ Ω Probability p that ( x, y ) lies in S is: 1 I � � p = Ω f ˆ y ( x, y ) dx dy = S dx dy = xˆ c ( b − a ) c ( b − a ) Assuming 0 ≤ g ( x ) ≤ c Generate randomly N point ( x 1 , y 1 ) , ( x 2 , y 2 ) , ..., ( x N , y N ) ˆ N A number of points in S ˆ N A follows the binomial distribution θ 1 = c ( b − a ) ˆ ˆ N A N the mean value equals the integral: θ 1 � = c ( b − a ) � ˆ � ˆ N A � = c ( b − a ) p = I N ˆ θ 1 is an unbiased estimator of I . � θ 1 ] = c ( b − a ) � p (1 − p ) � � σ [ ˆ � Np (1 − p ) = c ( b − a ) � � N N 1

p = ˆ Replace p by its sample value: ˆ N A /N . � � ˆ p (1 − ˆ p ) � � I = ˆ θ 1 ± σ [ ˆ θ 1 ] = c ( b − a )ˆ p ± c ( b − a ) � � N Relative error: σ [ ˆ � θ 1 ] 1 − p � � θ 1 > = � � < ˆ � pN � decreases for large p . Take c = max ( g ( x )). 2

subroutine mc1(g,a,b,c,n,r,s) external g na=0 do 1 i=1,n u=ran_u() v=ran_u() if (g(a+(b-a)*u).gt.c*v) na=na+1 1 continue p=real(na)/n r=(b-a)*c*p s=sqrt(p*(1.-p)/n)*c*(b-a) return end 3

Sampling methods: Uniform Sampling � b I = a g ( x ) dx � g ( x ) f ˆ 1 � b I = a ( b − a ) g ( x ) b − a dx ≡ ( b − a ) x ( x ) dx I = ( b − a ) E x [ g ( x )] x es ˆ U ( a, b ): ˆ 1 if a ≤ x ≤ b f ˆ x ( x ) = b − a 0 otherwise Sample mean: µ N [ g ( x )] = 1 N ˆ i =1 g ( x i ) � N r.v. ˆ θ 2 = ( b − a )ˆ µ N from sampling: θ 2 = ( b − a ) 1 N ˆ i =1 g ( x i ) � N x i , i = 1 , 2 , ..., N uniformly distributed in the interval [ a, b ]. � θ 2 � = I θ 2 ± ( b − a ) σ [ g ( x )] I = ˆ θ 2 ± σ [ ˆ θ 2 ] = ˆ √ N 2 1 1 N N i =1 g ( x i ) 2 − σ 2 ˆ N = i =1 g ( x i ) � � N N 4

subroutine mc2(g,a,b,n,r,s) external g r1=0. s1=0. do 1 i=1,n u=ran_u() g0=g(a+(b-a)*u) r1=r1+g0 s1=s1+g0*g0 1 continue r1=r1/n s1=sqrt((s1/n-r1*r1)/n) r=(b-a)*r1 s=(b-a)*s1 return end 5

Method of Importance Sampling � b I = a g ( x ) f ˆ x ( x ) dx I = E x [ g ( x )] x distributed according f ˆ x ( x ). Sample mean: ˆ µ N [ g ( x )] = 1 N ˆ i =1 g ( x i ) � N x i , i = 1 , 2 , ..., N are values of the r.v. ˆ x distributed according to f ˆ x ( x ) Unbiased estimator � ˆ µ N [ g ( x )] � = I . I = ˆ µ N [ g ( x )] ± σ [ˆ µ N [ g ( x )]] µ N [ g ( x )] ± σ [ g ( x )] √ I = ˆ N � f ˆ � f ˆ x g ( x ) 2 dx − ( x g ( x ) dx ) 2 σ 2 [ g ( x )] = σ 2 Use sample variance ˆ N : 2 1 1 N N i =1 g ( x i ) 2 − σ 2 ˆ N = i =1 g ( x i ) � � N N How do we generate ˆ x i ? If u i is a ˆ U (0 , 1) variable, then: x i = F − 1 x ( u i ) ˆ 6

subroutine mc3(g,n,r,s) external g r=0. s=0. do 1 i=1,n x=ran_f() g0=g(x) r=r+g0 s=s+g0*g0 1 continue r=r/n s=sqrt((s/n-r*r)/n) return end 7

Efficiency of an integration method Computer time needed to compute a given integral with a given error ǫ . So far: σ √ ǫ = N t is the computer time needed to add a contribution to the estimator total computer time Nt ∝ tσ 2 Relative efficiency of method 1 and 2: e 12 = t 1 σ 2 1 t 2 σ 2 2 Uniform sampling is more efficient that hit and miss method. Hit and miss: σ 2 1 = c ( b − a ) I − I 2 Uniform sampling: � g ( x ) 2 dx − I 2 σ 2 2 = ( b − a ) � g ( x ) 2 dx σ 2 1 − σ 2 � � 2 = ( b − a ) cI − 8

The condition 0 ≤ g ( x ) ≤ c implies: � g ( x ) 2 dx ≤ c � g ( x ) dx = cI and σ 1 ≥ σ 2 . Furthermore, in general, t 1 > t 2 , and it follows e 12 > 1. Advantages and disadvantages of the Monte-Carlo integration Very singular function n − dimensional integral, with high n � Ω g ( x 1 , x 2 , . . . , x n ) f ˆ x n ( x 1 , x 2 , . . . , x n ) dx 1 dx 2 . . . dx n ≈ x 1 ... ˆ 1 N i =1 g ( x 1 , x 2 , . . . , x n ) � N ( x 1 , x 2 , . . . , x n ) is a random vector distributed according the probability density function f ˆ x n ( x 1 , x 2 , . . . , x n ). x 1 ... ˆ Everything is valid for sums: � g ( x ) f ˆ i g i = x ( x ) dx � by considering a discrete random variable f ˆ x ( x ) = i p i δ ( x − x i ) � 9

and g i = g ( x i ) 10

Recommend

More recommend