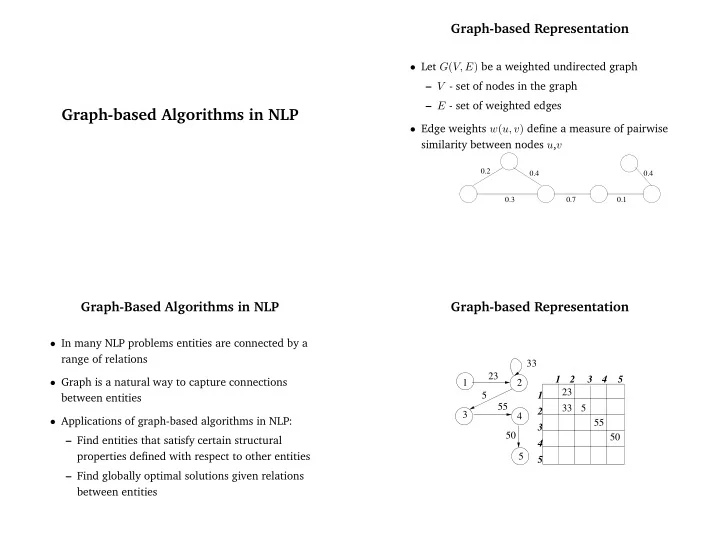

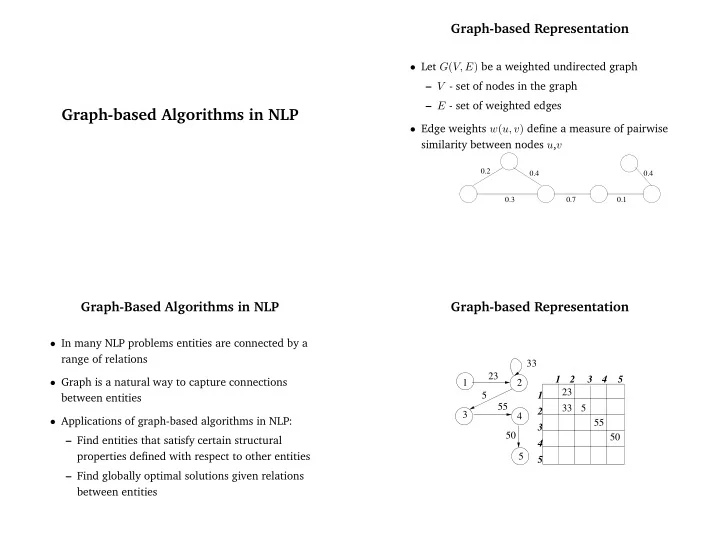

Graph-based Representation • Let G ( V, E ) be a weighted undirected graph – V - set of nodes in the graph – E - set of weighted edges Graph-based Algorithms in NLP • Edge weights w ( u, v ) define a measure of pairwise similarity between nodes u , v 0.2 0.4 0.4 0.3 0.7 0.1 Graph-Based Algorithms in NLP Graph-based Representation • In many NLP problems entities are connected by a range of relations 33 23 1 2 3 4 5 1 2 • Graph is a natural way to capture connections 23 5 1 between entities 55 33 5 2 3 4 55 • Applications of graph-based algorithms in NLP: 3 50 50 – Find entities that satisfy certain structural 4 5 properties defined with respect to other entities 5 – Find globally optimal solutions given relations between entities

Examples of Graph-based Representations Analysis of the Link Structure • Assumption: the creator of page p, by including a link to page q, has in some measure conferred authority in q Data Directed? Node Edge • Issues to consider: Web yes page link – some links are not indicative of authority (e.g., Citation Net yes citation reference relation navigational links) Text no sent semantic connectivity – we need to find an appropriate balance between the criteria of relevance and popularity Hubs and Authorities Algorithm Outline of the Algorithm (Kleinberg, 1998) • Compute focused subgraphs given a query • Iteratively compute hubs and authorities in the • Application context: information retrieval subgraph • Task: retrieve documents relevant to a given query • Naive Solution: text-based search – Some relevant pages omit query terms – Some irrelevant do include query terms We need to take into account the authority of the page! Hubs Authorities

Focused Subgraph Constructing a Focused Subgraph: Algorithm Set S c := R σ For each page p ∈ R σ • Subgraph G [ W ] over W ⊆ V , where edges Let Γ + ( p ) denote the set of all pages p points to correspond to all the links between pages in W Let Γ − ( p ) denote the set of all pages pointing to p Add all pages in Γ + ( p ) to S σ • How to construct G σ for a string σ ? If | Γ − ( p ) | ≤ d then – G σ has to be relatively small Add all pages in | Γ − ( p ) | to S σ – G σ has to be rich in relevant pages Else Add an arbitrary set of d pages from | Γ − ( p ) | to S σ – G σ must contain most of the strongest End authorities Return S σ Constructing a Focused Subgraph: Constructing a Focused Subgraph Notations base Subgraph ( σ, Eng, t, d ) root σ : a query string Eng : a text-based search engine t, d : natural numbers Let R σ denote the top t results of Eng on σ

I operation Computing Hubs and Authorities • Authorities should have considerable overlap in terms of pages pointing to them Given { y ( p ) } , compute: • Hubs are pages that have links to multiple x ( p ) ← � y ( p ) authoritative pages q :( q,p ) ∈ E • Hubs and authorities exhibit a mutually reinforcing q 1 relationship page p x[p]:=sum of y[q] q 2 for all q pointing to p q 3 Hubs Authorities O operation An Iterative Algorithm Given { x ( p ) } , compute: • For each page p , compute authority weight x ( p ) and y ( p ) ← � x ( p ) hub weight y ( p ) q :( p,q ) ∈ E – x ( p ) ≥ 0 , x ( p ) ≥ 0 q 1 p ∈ s σ ( x ( p ) ) 2 = 1 , � p ∈ s σ ( y ( p ) ) 2 = 1 – � q 2 page p • Report top ranking hubs and authorities y[p]:=sum of x[q] for all q pointed to by p q 3

Convergence Algorithm:Iterate Iterate (G,k) G: a collection of n linked paged k: a natural number Theorem: The sequence x 1 , x 2 , x 3 and y 1 , y 2 , y 3 Let z denote the vector (1 , 1 , 1 , . . . , 1) ∈ R n converge. Set x 0 := z Set y 0 := z • Let A be the adjacency matrix of g σ For i = 1 , 2 , . . . , k • Authorities are computed as the principal Apply the I operation to ( x i − 1 , y i − 1 ) , obtaining new x -weights x ′ i eigenvector of A T A Apply the O operation to ( x ′ i , y i − 1 ) , obtaining new y -weights y ′ i Normalize x ′ i , obtaining x i • Hubs are computed as the principal eigenvector of Normalize y ′ AA T i , obtaining y i Return ( x k , y k ) Algorithm: Filter Subgraph obtained from www.honda.com Honda http://www.honda.com Filter (G,k,c) G: a collection of n linked paged Ford Motor Company http://www.ford.com k,c: natural numbers Campaign for Free Speech http://www.eff.org/blueribbon.html ( x k , y k ) := Iterate ( G, k ) Welcome to Magellan! http://www.mckinley.com Report the pages with the c largest coordinates in x k as authorities Welcome to Netscape! http://www.netscape.com Report the pages with the c largest coordinates in y k as hubs LinkExchange — Welcome http://www.linkexchange.com Welcome to Toyota http://www.toyota.com

Authorities obtained from Intuitive Justification www.honda.com From The Anatomy of a Large-Scale Hypertextual Web Search Engine (Brin&Page, 1998) 0.202 Welcome to Toyota http://www.toyota.com PageRank can be thought of as a model of used behaviour. We 0.199 Honda http://www.honda.com assume there is a “random surfer” who is given a web page at 0.192 Ford Motor Company http://www.ford.com random and keeps clicking on links never hitting “back” but 0.173 BMW of North America, Inc. http://www.bmwusa.com eventually get bored and starts on another random page. The 0.162 VOLVO http://www.volvo.com probability that the random surfer visists a page is its PageR- 0.158 Saturn Web Site http://www.saturncars.com ank. And, the d damping factor is the probability at each page 0.155 NISSAN http://www.nissanmotors.com the “random surfer” will get bored and request another ran- dom page. PageRank Algorithm (Brin&Page,1998) PageRank Computation Original Google ranking algorithm • Similar idea to Hubs and Authorities Iterate PR(p) computation: • Key differences: pages q 1 , . . . , q n that point to page p d is a damping factor (typically assigned to 0.85) – Authority of each page is computed off-line C ( p ) is out-degree of p – Query relevance is computed on-line ∗ Anchor text PR ( p ) = (1 − d ) + d ∗ ( PR ( q 1 ) C ( q 1 ) + . . . + PR ( q n ) C ( q n ) ) ∗ Text on the page – The prediction is based on the combination of authority and relevance

Notes on PageRank Text as a Graph S1 S2 S6 • PageRank forms a probability distribution over web pages • PageRank corresponds to the principal eigenvector S3 S5 of the normalized link matrix of the web S4 Extractive Text Summarization Centrality-based Summarization(Radev) • Assumption: The centrality of the node is an Task: Extract important information from a text indication of its importance • Representation: Connectivity matrix based on intra-sentence cosine similarity • Extraction mechanism: – Compute PageRank score for every sentence u PageRank ( u ) = (1 − d ) PageRank ( v ) � + d N deg ( v ) v ∈ adj [ u ] , where N is the number of nodes in the graph – Extract k sentences with the highest PageRanks score

Does it work? Graph-Based Algorithms in NLP • Evaluation: Comparison with human created summary • Rouge Measure: Weighted n-gram overlap (similar • Applications of graph-based algorithms in NLP: to Bleu) – Find entities that satisfy certain structural Method Rouge score properties defined with respect to other entities Random 0.3261 – Find globally optimal solutions given relations between entities Lead 0.3575 Degree 0.3595 PageRank 0.3666 Does it work? Min-Cut: Definitions • Evaluation: Comparison with human created • Graph cut: partitioning of the graph into two summary disjoint sets of nodes A,B • Rouge Measure: Weighted n-gram overlap (similar • Graph cut weight: cut ( A, B ) = � u ∈ A,v ∈ B w ( u, v ) to Bleu) – i.e. sum of crossing edge weights Method Rouge score • Minimum Cut: the cut that minimizes Random 0.3261 cross-partition similarity Lead 0.3575 0.2 0.2 0.4 0.4 0.4 0.4 Degree 0.3595 0.3 0.7 0.1 0.3 0.7 0.1 PageRank 0.3666

Recommend

More recommend