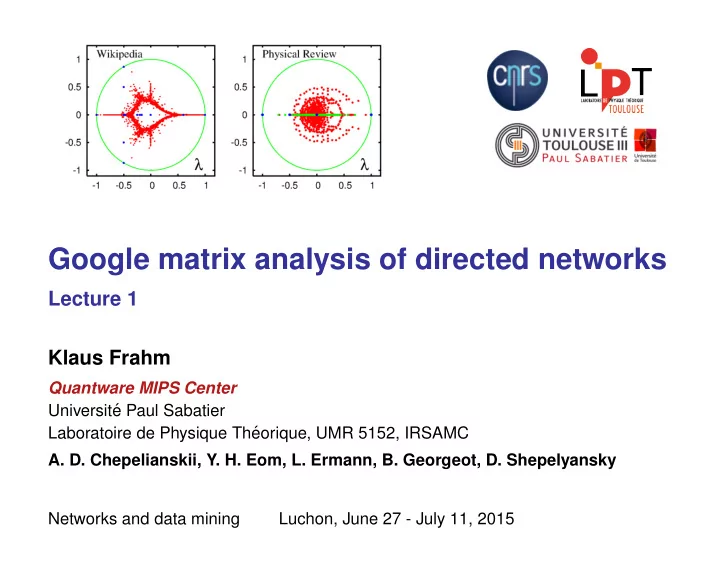

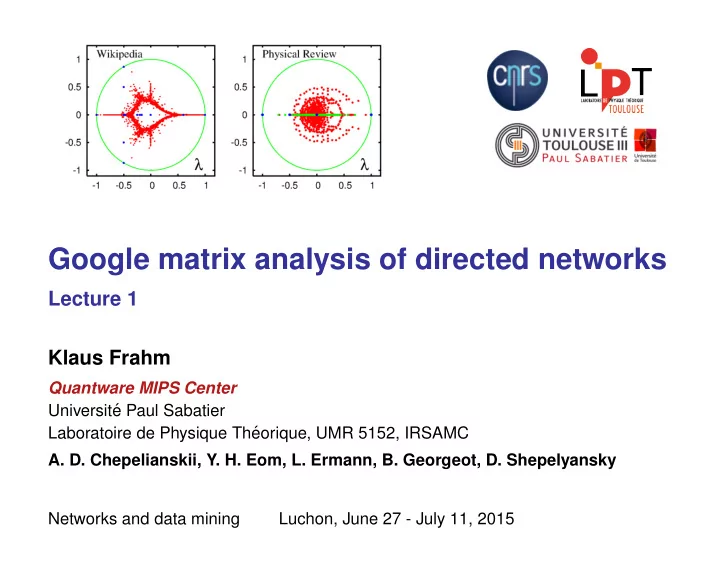

Google matrix analysis of directed networks Lecture 1 Klaus Frahm Quantware MIPS Center Universit´ e Paul Sabatier Laboratoire de Physique Th´ eorique, UMR 5152, IRSAMC A. D. Chepelianskii, Y. H. Eom, L. Ermann, B. Georgeot, D. Shepelyansky Networks and data mining Luchon, June 27 - July 11, 2015

Contents Perron-Frobenius operators . . . . . . . . . . . . . . . . . 3 “Analogy” with hamiltonian quantum systems . . . . . . . . 7 PF Operators for directed networks . . . . . . . . . . . . . . 10 PageRank . . . . . . . . . . . . . . . . . . . . . . . . . . 13 Scale Free properties . . . . . . . . . . . . . . . . . . . . . 14 Numerical diagonalization . . . . . . . . . . . . . . . . . . 15 Arnoldi method . . . . . . . . . . . . . . . . . . . . . . . . 16 Invariant subspaces . . . . . . . . . . . . . . . . . . . . . 18 University Networks . . . . . . . . . . . . . . . . . . . . . 22 Twitter network . . . . . . . . . . . . . . . . . . . . . . . . 28 References . . . . . . . . . . . . . . . . . . . . . . . . . . 31 2

Perron-Frobenius operators Consider a physical system with N states i = 1 , . . . , N and probabilities p i ( t ) ≥ 0 evolving by a discrete Markov process : � p i ( t + 1) = G ij p j ( t ) j The transition probabilities G ij provide a Perron-Frobenius matrix G such that: � G ij = 1 , G ij ≥ 0 . i Conservation of probability: � G v � 1 = � v � 1 if v i ∈ R and v i ≥ 0 ⇒ � p ( t + 1) � 1 = � p ( t ) � 1 = 1 . � G v � 1 ≤ � v � 1 for any other (complex) vector where � v � 1 = � i | v i | is the usual 1-norm. 3

In general G T � = G and eigenvalues λ may be complex. If v is a (right) eigenvector of G : G v = λ v ⇒ | λ | ≤ 1 . The vector e T = (1 , . . . , 1) is left eigenvector with λ = 1 : e T G = 1 e T ⇒ existence of (at least) one right eigenvector P for λ = 1 also called PageRank in the context of Google matrices: G P = 1 P Biorthogonality between left and right eigenvectors: w T v = 0 G v = λ v and w T G = ˜ λ � = ˜ λ w T ⇒ if λ . 4

Expansion in terms of eigenvectors: � � C j v ( j ) C j λ t j v ( j ) p (0) = ⇒ p ( t ) = j j with λ 1 = 1 and v (1) = P . If C 1 � = 0 and | λ j | < 1 for j ≥ 2 ⇒ t →∞ p ( t ) = P . lim ⇒ Powermethod to compute P Rate of convergence: ∼ | λ 2 | t = e t ln(1 − (1 −| λ 2 | )) ≈ e − t (1 −| λ 2 | ) ⇒ Problem if 1 − | λ 2 | ≪ 1 of even if | λ 2 | = 1 . 5

Complications if G is not diagonalizable The eigenvectors do not constitute a full basis and further generalized eigenvectors are required: ( λ j 1 − G ) v ( j, 0) = 0 ( λ j 1 − G ) v ( j, 1) = v ( j, 0) ( λ j 1 − G ) v ( j, 2) = v ( j, 1) . . . ⇒ Contributions ∼ t l λ t j with l = 0 , 1 , . . . in p ( t ) expansion. However, for λ 1 = 1 only l = 0 is possible since otherwise: � p ( t ) � 1 ≈ const . t l → ∞ . 6

“Analogy” with hamiltonian quantum systems i � ∂ ∂t ψ ( t ) = H ψ ( t ) where ψ ( t ) quantum state and H = H † is a hermitian (or real symmetric) operator. Expansion in terms of eigenvectors: H ϕ ( j ) = E j ϕ ( j ) � C j e − i E j t/ � ϕ ( j ) ψ ( t ) = j • H is always diagonalizable with E j ∈ R and ( ϕ ( k ) ) T ϕ ( j ) = δ kj . • Eigenvectors ϕ ( j ) are valid physical states while for PF operators only real vectors with positive entries are physical states and most eigenvectors are complex. 7

Example hamilontian operators: • Disorder Anderson model in 1 dimension: H jk = − ( δ j,k +1 + δ j,k − 1 ) + ε j δ j,k with random on-site energies ε j ∈ [ − W/ 2 , W/ 2] ⇒ localized eigenvectors ϕ l ∼ e −| l − l 0 | /ξ with localization length ξ ∼ W − 2 . General mesure of localization length by inverse participation ratio : � l ϕ 4 1 l ) 2 ∼ 1 l = ( � l ϕ 2 ξ IPR ξ • Gaussian Orthogonal Ensemble (GOE): H jk = H kj ∈ R and H jk independent random gaussian variables with: � H 2 jk � = (1 + δ jk ) σ 2 . � H jk � = 0 , 8

Universal level statistics Distribution of rescaled nearest level spacing s = ( E j +1 − E j ) / ∆ with average level spacing ∆ : • Poisson statistics: P Pois ( s ) = exp( − s ) Anderson model with ξ ≪ L ( L = system size), integrable systems, . . . • Wigner surmise: P Wig = ( πs/ 2) exp( − πs 2 / 4) GOE, Anderson model with ξ � L , generic (classically) chaotic systems, . . . 9

PF Operators for directed networks Consider a directed network with N nodes 1 , . . . , N and N ℓ links. • Define the adjacency matrix by A jk = 1 if there is a link k → j and A jk = 0 otherwise. In certain cases, when explicitely considering multiple links, one may have A jk = m where m = multiplicity of a a link (e. g. Network for integer numbers). • Define a matrix S 0 from A by sum-normalizing each non-zero column to one and keeping zero columns. • Define a matrix S from S 0 by replacing each zero column with 1 /N entries. • Same procedure for inverted network: A ∗ ≡ A T and S ∗ is obtained in the same way from A ∗ . Note: in general: S ∗ � = S T . Leading (right) eigenvector of S ∗ is called CheiRank . 10

Example: 0 1 1 0 0 1 0 1 1 0 A = 0 1 0 1 0 0 0 1 0 0 0 0 0 1 0 0 1 1 0 1 3 0 1 1 3 0 0 2 2 5 1 0 1 1 1 0 1 1 1 3 0 3 3 3 5 0 1 2 0 1 0 1 2 0 1 1 3 0 S 0 = , S = 3 5 0 0 1 0 0 1 3 0 1 3 0 0 5 0 0 0 1 0 0 0 1 1 3 0 3 5 11

The nodes with no out-going links, associated to zero columns in A , are called dangling nodes . On can formally write: S = S 0 + 1 N e d T with d = dangling vector with d j = 1 for dangling nodes and d j = 0 for other nodes and e = uniform unit vector with e j = 1 for all nodes. Damping factor Define for 0 < α < 1 , typically α = 0 . 85 , the matrix: G ( α ) = αS + (1 − α ) 1 N ee T • G is also PF operator with columns sum normalized. • G has the eigenvalue λ 1 = 1 with multiplicity m 1 = 1 and other eigenvalues are αλ j (for j ≥ 2 ) with λ j = eigenvalues of S . The right eigenvectors for λ j � = 1 are not modified (since they are orthogonal to the left eigenvector e T for λ 1 = 1 ). • Similar expression for G ∗ ( α ) using S ∗ . 12

PageRank Example for university networks of Cambridge 2006 and Oxford 2006 ( N ≈ 2 × 10 5 and N ℓ ≈ 2 × 10 6 ). � P ( i ) = G ij P ( j ) j P ( i ) represents the “importance” of “node/page i ” obtained as sum of all other pages j pointing to i with weight P ( j ) . Sorting of P ( i ) ⇒ index K ( i ) for order of appearance of search results in search engines such as Google. 13

Scale Free properties Distribution of number of in- and outgoing links for Wikipedia: 1 w in , out ( k ) ∼ , µ in = 2 . 09 ± 0 . 04 , µ out = 2 . 76 ± 0 . 06 . k µ in , out (Zhirov et al. EPJ B 77 , 523) Small world properties: “Six degrees of separation” (cf. Milgram’s ”small world experiment” 1967) 14

Numerical diagonalization • Powermethod to obtain P : rate of convergence for G ( α ) is better than ∼ α t . • Full “exact” diagonalization: possible for N � 10 4 : memory usage ∼ N 2 and computation time ∼ N 3 . • Arnoldi method to determine largest n A ∼ 10 2 − 10 4 eigenvalues: memory usage ∼ N n A + C 1 N ℓ + C 2 n 2 A and computation time ∼ N n 2 A + C 3 N ℓ n A + C 4 n 3 A . • Strange numerical problems to determine accurately “small” eigenvalues, in particular for (nearly) triangular network structure due to large Jordan-blocks ( ⇒ 3 rd lecture ). 15

Arnoldi method to (partly) diagonalize large sparse non-symmetric N × N matrices G such that the product “ G × vector” can be computed efficiently ( G may contain some constant columns ∼ e ): • choose an initial normalized vector ξ 0 (random or “otherwise”) • determine the Krylov space of dimension n A (typically: 1 ≪ n A ≪ N ) spanned by the vectors: ξ 0 , G ξ 0 , . . . , G n A − 1 ξ 0 • determine by Gram-Schmidt orthogonalization an orthonormal basis { ξ 0 , . . . , ξ n − 1 } and the representation of G in this basis: k +1 � G ξ k = H jk ξ j j =0 Note: if G = G T ⇒ H = tridiagonal symmetric and the Arnoldi method is identical to the Lanczos method . 16

• diagonalize the Arnoldi matrix H which has Hessenberg form: ∗ ∗ · · · ∗ ∗ ∗ ∗ · · · ∗ ∗ 0 ∗ · · · ∗ ∗ H = . . . . ... . . . . . . . . 0 0 · · · ∗ ∗ 0 0 · · · 0 ∗ which provides the Ritz eigenvalues that are very good aproximations to the “largest” eigenvalues of G . 1 1 0.5 (Ritz) | 10 -5 0 | λ j - λ j 10 -10 -0.5 λ 10 -15 -1 0 500 1000 1500 -1 -0.5 0 0.5 1 j Example: PF Operator for Ulam-Map ( ⇒ 2 nd lecture ) N = 16609 , N ℓ = 76058 , n A = 1500 17

Recommend

More recommend