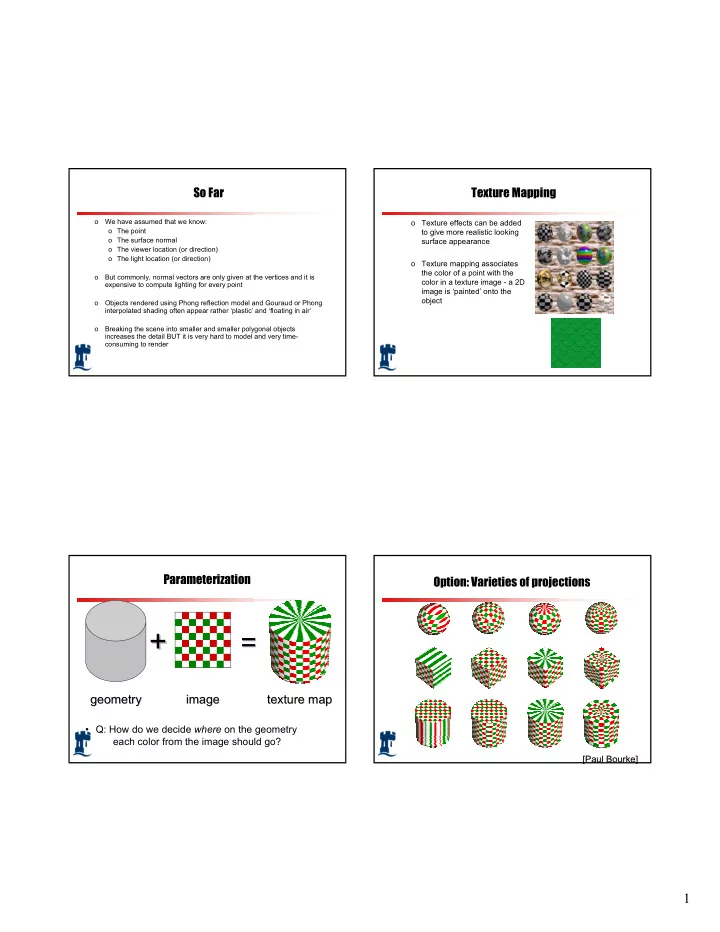

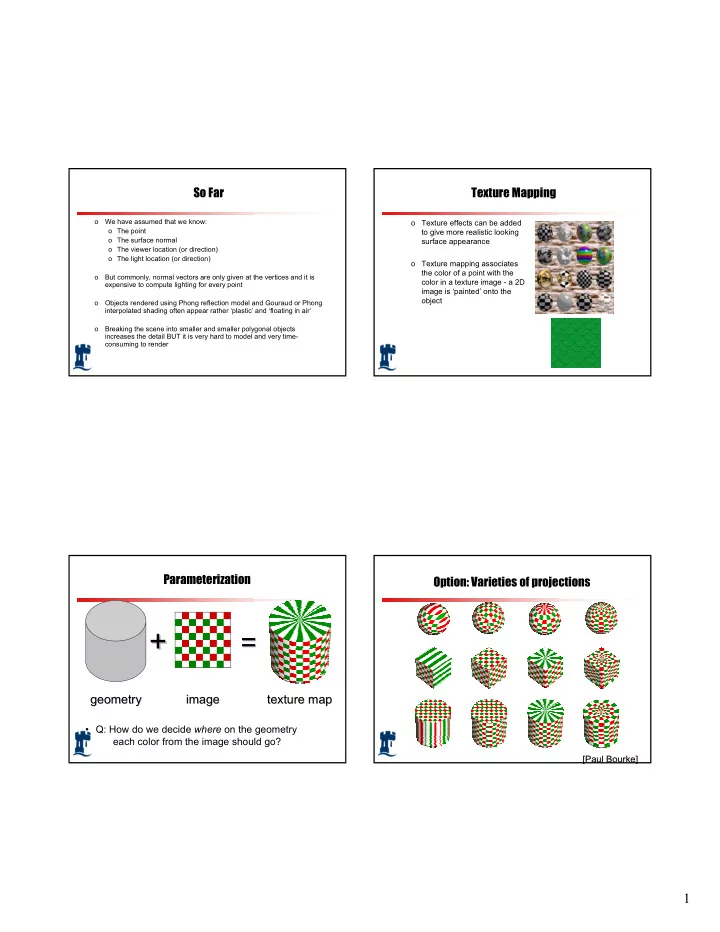

So Far
o We have assumed that we know:
  o The point
  o The surface normal
  o The viewer location (or direction)
  o The light location (or direction)
o But commonly, normal vectors are only given at the vertices, and it is expensive to compute lighting for every point
o Objects rendered using the Phong reflection model and Gouraud or Phong interpolated shading often appear rather 'plastic' and 'floating in air'
o Breaking the scene into smaller and smaller polygonal objects increases the detail, BUT it is very hard to model and very time-consuming to render

Texture Mapping
o Texture effects can be added to give a more realistic-looking surface appearance
o Texture mapping associates the color of a point with the color in a texture image: a 2D image is 'painted' onto the object

Parameterization
[Figure: geometry + image = texture map]
o Q: How do we decide where on the geometry each color from the image should go?

Option: Varieties of projections
[Figures: Paul Bourke]

Option: unfold the surface
[Figure: Piponi2000]

How to map object to texture?
o To each vertex (x,y,z in object coordinates), we must associate 2D texture coordinates (s,t)
o So that the texture fits "nicely" over the object

Idea: Use Map Shape
o Map shapes correspond to various projections
  o Planar, Cylindrical, Spherical
o First, map the (square) texture to a basic map shape
o Then, map the basic map shape to the object
  o Or vice versa: object to map shape, map shape to square
o Usually, this is straightforward
  o Maps from square to cylinder, plane, sphere are well defined
  o Maps from the object to these are simply spherical, cylindrical, and Cartesian coordinate systems

Planar mapping
o Like projections, drop the z coordinate: (s,t) = (x,y)
o Problems: what happens near z = 0?

Cylindrical Mapping
o Cylinder: r, θ, z with (s,t) = (θ/(2π), z)
o Note seams when wrapping around (θ = 0 or 2π)

Spherical Mapping
o Convert to spherical coordinates: use latitude/longitude
o Singularities at north and south poles

Cube Mapping
[Figures: cube mapping, two slides]
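The three map shapes can be sketched as small coordinate conversions. This is a minimal illustration, not a reference implementation: the function names are hypothetical, the cylinder and sphere axes are assumed to lie along z, and the spherical convention (longitude to s, latitude to t, with t = 1 at the north pole) is one assumed choice among several.

```python
import math

def planar_st(x, y, z):
    """Planar map shape: drop z and use (x, y) directly as (s, t)."""
    return (x, y)

def cylindrical_st(x, y, z):
    """Cylindrical map shape: (s, t) = (theta / (2*pi), z), axis along z (assumed)."""
    theta = math.atan2(y, x) % (2.0 * math.pi)  # wrap angle into [0, 2*pi)
    return (theta / (2.0 * math.pi), z)

def spherical_st(x, y, z):
    """Spherical map shape: longitude -> s, latitude -> t (assumed convention)."""
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        raise ValueError("direction is undefined at the origin")
    theta = math.atan2(y, x) % (2.0 * math.pi)   # longitude in [0, 2*pi)
    phi = math.acos(max(-1.0, min(1.0, z / r)))  # polar angle in [0, pi]
    return (theta / (2.0 * math.pi), 1.0 - phi / math.pi)
```

The seam shows up where `theta` jumps from just under 2π back to 0, and the poles are singular because every longitude maps to the same point when x = y = 0.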

Texture Mapping
o Main Issues
  o How do we map between 3D and 2D?
  o How does the texture modify the shading of pixels?
  o How do we sample the texture for a fragment?

Texture Mapping
o Interpolation Texture Mapping (painting)
o We need to define a mapping between the 3D world space (x,y,z) and 2D image space (s,t) ∈ [0,1]²
o Texture coordinates defined at vertices serve this purpose
o Specify (s,t) at each vertex:
[Figure: triangle V0, V1, V2 in texture space with axes u and v]

Texture Mapping
o Interpolate in the interior:
[Figure: object triangle with V0 = (0, 0), V1 = (20, 3), V2 = (10, 10), mapped to texture coordinates (0, 0), (100, 15), (50, 50) on axes u and v]
Mapping the object on the left to the texture on the right.
Consider the y value of 3 in the interpolation across the triangle. The rendering process determines that the object coordinates on this scanline range from (3, 3) to (20, 3). The first coordinate comes from interpolating along the left edge of the triangle at a parametric value t = 0.3.
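The worked example can be reproduced with plain linear interpolation. The sketch below uses the vertex values read from the slide figure; the helper names (`lerp`, `left_edge`, `texture_on_scanline`) are hypothetical, and perspective is ignored here.

```python
def lerp(a, b, t):
    """Linearly interpolate between 2D points a and b at parameter t in [0, 1]."""
    return (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))

# Vertex data as read from the slide figure: object (x, y) and texture (u, v).
OBJ = {"V0": (0.0, 0.0), "V1": (20.0, 3.0), "V2": (10.0, 10.0)}
TEX = {"V0": (0.0, 0.0), "V1": (100.0, 15.0), "V2": (50.0, 50.0)}

def left_edge(t):
    """Object and texture positions on edge V0 -> V2 at the same parameter t."""
    return lerp(OBJ["V0"], OBJ["V2"], t), lerp(TEX["V0"], TEX["V2"], t)

def texture_on_scanline(x, obj_left, obj_right, tex_left, tex_right):
    """Texture location for pixel x on a scanline spanning obj_left..obj_right."""
    a = (x - obj_left[0]) / (obj_right[0] - obj_left[0])
    return lerp(tex_left, tex_right, a)

# Left end of the scanline y = 3: roughly (3, 3) in object space, (15, 15) in texture space.
obj_left, tex_left = left_edge(0.3)
# Halfway along the scanline, which ends at V1 = (20, 3).
mid = texture_on_scanline(11.5, obj_left, OBJ["V1"], tex_left, TEX["V1"])
```

Using the same parameter t for both object and texture coordinates along an edge is what keeps the two spaces in step.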

Texture Mapping
o Interpolate in the interior:
[Figure: the same triangle, with the left-edge point (3, 3) in object space (x, y) matched to (15, 15) in texture space (u, v)]
The texture location for this left-edge location is (15, 15). Why? The same parametric value t = 0.3 is applied to the texture coordinates along that edge, from (0, 0) to (50, 50).
Object locations are interpolated from (3, 3) to (20, 3).
Texture locations are interpolated from (15, 15) to (100, 15).

The texture gives a value that will influence the shading process. Specifically, the value from the texture replaces the diffuse constant in the calculation of the diffuse component in the illumination equation. By doing so, the texture values change the object's appearance, but shape cues that come from the specular highlights stay the same.

$$I_r = K_{ar} I_a + \frac{\mathrm{Texture}(u,v)_{dr}\, I_i\,(L \cdot N) + K_s I_i\,(R \cdot V)^n}{r + k}$$

$$I_g = K_{ag} I_a + \frac{\mathrm{Texture}(u,v)_{dg}\, I_i\,(L \cdot N) + K_s I_i\,(R \cdot V)^n}{r + k}$$

$$I_b = K_{ab} I_a + \frac{\mathrm{Texture}(u,v)_{db}\, I_i\,(L \cdot N) + K_s I_i\,(R \cdot V)^n}{r + k}$$

A texture location can be calculated from the object location directly. In general, mapping an object with x coordinates in the range from x_min to x_max and y coordinates in the range y_min to y_max into the texture coordinate range can be computed as:

$$u = u_{min} + \frac{u_{max} - u_{min}}{x_{max} - x_{min}}\,(x - x_{min}), \qquad v = v_{min} + \frac{v_{max} - v_{min}}{y_{max} - y_{min}}\,(y - y_{min})$$

u_min, u_max, v_min, v_max are determined by the range of texture values assigned to the object vertices.
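Both ideas on this page, the direct object-to-texture range mapping and the texture value standing in for the diffuse constant, can be sketched in a few lines. The function and parameter names are hypothetical. The range mapping adds the lower texture bound so that it also holds when the texture range does not start at zero, and clamping negative dot products to zero is an assumption that is common practice but not stated on the slide.

```python
def object_to_texture(x, x_min, x_max, u_min, u_max):
    """Map x in [x_min, x_max] linearly to a texture coordinate in [u_min, u_max]."""
    return u_min + (u_max - u_min) / (x_max - x_min) * (x - x_min)

def shade_channel(k_a, i_a, tex_d, k_s, i_i, l_dot_n, r_dot_v, n, r, k):
    """One color channel of the illumination equation with a textured diffuse term.

    tex_d is the texture sample for this channel; it replaces the diffuse constant.
    r is the distance to the light and k a constant, as in the r + k denominator.
    """
    diffuse = tex_d * i_i * max(l_dot_n, 0.0)       # clamp is an assumption
    specular = k_s * i_i * max(r_dot_v, 0.0) ** n   # unchanged by the texture
    return k_a * i_a + (diffuse + specular) / (r + k)

# Scanline from the example: x in [3, 20] maps to u in [15, 100].
u_mid = object_to_texture(11.5, 3.0, 20.0, 15.0, 100.0)
```

Because only `tex_d` changes from point to point, the specular term, and with it the highlight shape, is the same as on the untextured surface.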

Texture Mapping
o Interpolate in the interior: painted texture
o Simple
o But can have problems, especially for highly irregular surfaces

Artifacts
o McMillan's demo of this is at http://graphics.lcs.mit.edu/classes/6.837/F98/Lecture21/Slide05.html
o Another example: http://graphics.lcs.mit.edu/classes/6.837/F98/Lecture21/Slide06.html
o What artifacts do you see?
o Why?
o Why not in standard Gouraud shading?
o Hint: problem is in interpolating parameters

Interpolating Parameters
o The problem turns out to be fundamental to interpolating parameters in screen space
o Uniform steps in screen space ≠ uniform steps in world space

Texture Mapping
[Figure: linear interpolation of texture coordinates vs. correct interpolation with perspective divide. Hill, Figure 8.42]

Interpolating Parameters
o Perspective foreshortening is not getting applied to our interpolated parameters
  o Parameters should be compressed with distance
  o Linearly interpolating them in screen space doesn't do this

Perspective-Correct Interpolation
o Skipping a bit of math to make a long story short…
  o Rather than interpolating u and v directly, interpolate u/z and v/z
    o These do interpolate correctly in screen space
    o Also need to interpolate 1/z and divide per pixel to recover u and v
  o Problem: we don't know z anymore
  o Solution: we do know w ∝ 1/z
  o So…interpolate uw, vw, and w, and compute u = uw/w and v = vw/w for each pixel
    o This unfortunately involves a divide per pixel
o http://graphics.lcs.mit.edu/classes/6.837/F98/Lecture21/Slide14.html

Mapping to Curved Surfaces
[Figure: a texture image in (s, t) mapped to a curved image on a surface in (x, y, z)]
Parametric surface: x = x(u,v), y = y(u,v), z = z(u,v)
The task is to map the texture to the surface, or to find a mapping u = as + bt + c, v = ds + et + f

Texture Map Filtering
o Naive texture mapping aliases badly
[Figure: a pixel's footprint in the texture; Moiré pattern]
o Filtering
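The difference between the two interpolation schemes is easy to see numerically. The following sketch compares them along one screen-space span; the function names are hypothetical, and w is taken to be exactly 1/z for simplicity.

```python
def interp_screen_linear(u0, u1, t):
    """Naive scheme: interpolate u directly in screen space."""
    return u0 + t * (u1 - u0)

def interp_perspective_correct(u0, w0, u1, w1, t):
    """Interpolate u*w and w linearly in screen space, then divide per pixel."""
    uw = (1.0 - t) * (u0 * w0) + t * (u1 * w1)
    w = (1.0 - t) * w0 + t * w1
    return uw / w

# A span whose near end is at depth z = 1 and far end at z = 3 (w = 1/z assumed).
z0, z1 = 1.0, 3.0
naive = interp_screen_linear(0.0, 1.0, 0.5)
correct = interp_perspective_correct(0.0, 1.0 / z0, 1.0, 1.0 / z1, 0.5)
```

At the screen-space midpoint the naive scheme lands halfway through the texture, while the corrected value sits closer to the near end, which is the compression with distance that foreshortening requires. When both endpoints share the same depth, the two schemes agree.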

Bump Mapping
o This is another texturing technique
o Aims to simulate a dimpled or wrinkled surface
  o for example, the surface of an orange
o Like Gouraud and Phong shading, it is a trick
  o the surface stays the same
  o but the true normal is perturbed, to give the illusion of surface 'bumps'

How Does It Work?
o Looking at it in 1D:
  o Original surface P(u)
  o Bump map b(u)
  o Add b(u) to P(u) in the surface normal direction, N(u)
  o New surface normal N'(u) for the reflection model

Bump Mapping Example
o Texture = change in surface normal!
[Figure panels: sphere w/ diffuse texture; swirly bump map; sphere w/ diffuse texture and swirly bump map]

Bump Mapping Example
[Figure panels: bump map; result]
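The 1D construction can be sketched numerically: displace the curve along its normal by b(u), then take the normal of the displaced curve and hand only that normal to the reflection model. The helper name `perturbed_normal`, the step size `h`, and the use of central differences are illustrative assumptions; the curve P is a function returning an (x, y) pair.

```python
import math

def perturbed_normal(P, b, u, h=1e-5):
    """Unit normal N'(u) of the displaced curve P'(u) = P(u) + b(u) * N(u)."""
    def normal(curve, u):
        # Central-difference tangent, rotated by +90 degrees and normalized.
        (x0, y0), (x1, y1) = curve(u - h), curve(u + h)
        tx, ty = x1 - x0, y1 - y0
        length = math.hypot(tx, ty)
        return (-ty / length, tx / length)

    def displaced(u):
        nx, ny = normal(P, u)
        px, py = P(u)
        return (px + b(u) * nx, py + b(u) * ny)

    return normal(displaced, u)

# A flat surface along the x axis with a small sinusoidal bump map.
flat = lambda u: (u, 0.0)
bump = lambda u: 0.1 * math.sin(u)
n_bumped = perturbed_normal(flat, bump, 0.0)
```

The geometry that gets rasterized is still `flat`; only the shading normal tilts, which is why silhouettes of bump-mapped objects remain smooth.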

Illumination Maps
o Quake introduced illumination maps, or light maps, to capture lighting effects in video games
[Figure panels: texture map; light map; texture map + light map]

Environment Maps
Images from "Illumination and Reflection Maps: Simulated Objects in Simulated and Real Environments", Gene Miller and C. Robert Hoffman, SIGGRAPH 1984 "Advanced Computer Graphics Animation" Course Notes
