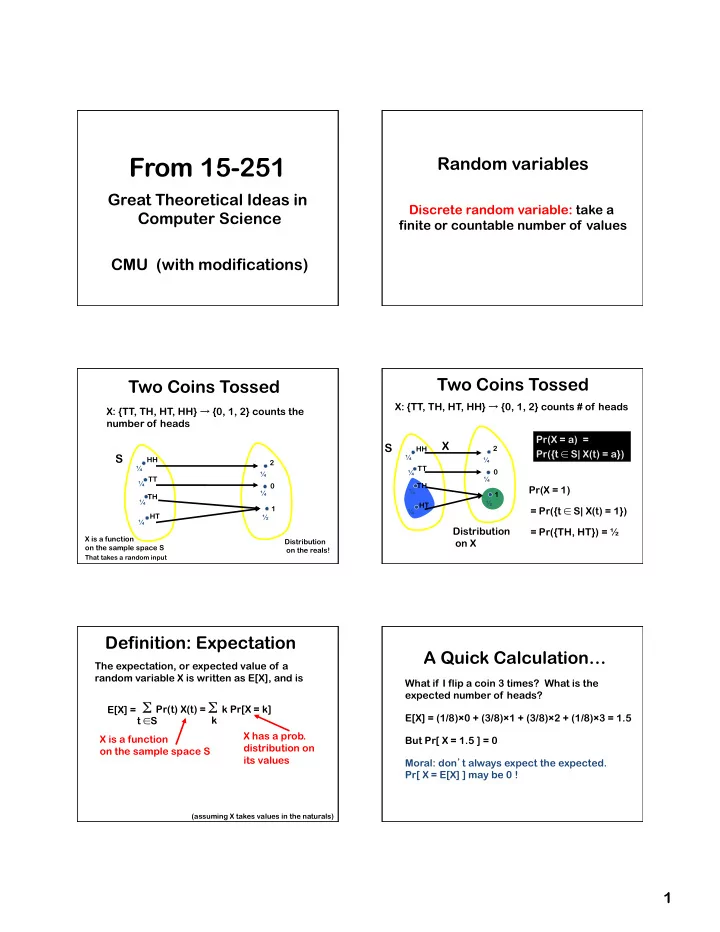

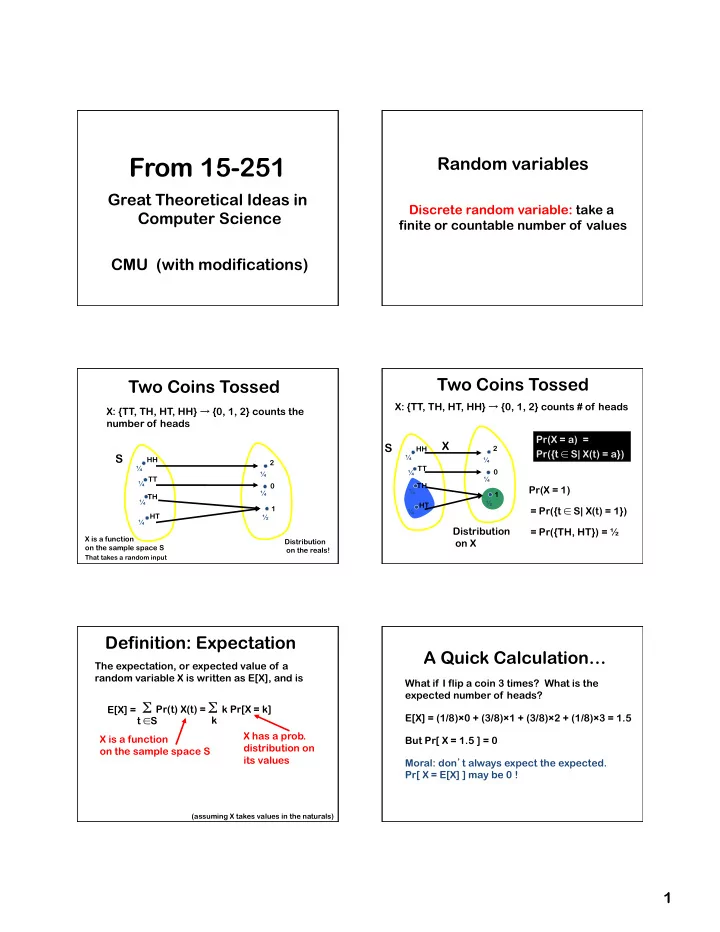

From 15-251 Great Theoretical Ideas in Computer Science, CMU (with modifications)

Random variables

Discrete random variable: takes a finite or countable number of values.

Two Coins Tossed

$X: \{TT, TH, HT, HH\} \to \{0, 1, 2\}$ counts the number of heads.

Each outcome in S has probability ¼: HH ↦ 2, TH ↦ 1, HT ↦ 1, TT ↦ 0. The distribution on X is therefore Pr(X = 0) = ¼, Pr(X = 1) = ½, Pr(X = 2) = ¼.

X is a function on the sample space S that takes a random input. Its distribution is a distribution on the reals!

$$\Pr(X = a) = \Pr(\{t \in S \mid X(t) = a\})$$

$$\Pr(X = 1) = \Pr(\{t \in S \mid X(t) = 1\}) = \Pr(\{TH, HT\}) = \tfrac{1}{2}$$

Definition: Expectation

The expectation, or expected value, of a random variable X is written as E[X], and is

$$E[X] = \sum_{t \in S} \Pr(t)\, X(t) = \sum_{k} k \Pr[X = k]$$

In the first sum, X is a function on the sample space S. In the second, X has a probability distribution on its values (assuming X takes values in the naturals).

A Quick Calculation…

What if I flip a coin 3 times? What is the expected number of heads?

$$E[X] = \tfrac{1}{8} \times 0 + \tfrac{3}{8} \times 1 + \tfrac{3}{8} \times 2 + \tfrac{1}{8} \times 3 = 1.5$$

But Pr[X = 1.5] = 0.

Moral: don't always expect the expected. Pr[X = E[X]] may be 0!
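The two forms of the expectation can be checked by brute force on the three-coin example. The following is a minimal sketch, assuming fair coins; the names `outcomes`, `pr`, `dist`, `e_by_outcomes` and `e_by_values` are illustrative and not from the slides.

```python
from fractions import Fraction
from itertools import product

# Sample space S: all 2^3 equally likely outcomes of three fair coin flips.
outcomes = list(product("HT", repeat=3))
pr = Fraction(1, len(outcomes))  # each outcome has probability 1/8


def X(t):
    """Random variable: number of heads in outcome t."""
    return t.count("H")


# First form: sum over outcomes t in S of Pr(t) * X(t).
e_by_outcomes = sum(pr * X(t) for t in outcomes)

# Second form: build the distribution of X, then sum k * Pr[X = k].
dist = {}
for t in outcomes:
    dist[X(t)] = dist.get(X(t), 0) + pr
e_by_values = sum(k * p for k, p in dist.items())

print(e_by_outcomes, e_by_values)    # the two forms agree
print(dist.get(Fraction(3, 2), 0))   # Pr[X = 1.5]: no outcome has 1.5 heads
```

Exact fractions are used instead of floats so that the two sums can be compared with `==`.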

Expectation of a function of a r.v.

$$E(X) = \sum_{k} k \Pr(X = k) = \sum_{\omega \in S} X(\omega) \Pr(\omega)$$

$$E(g(X)) = \sum_{k \text{ in range of } X} g(k) \Pr(X = k)$$

Functions of a R.V.

Suppose that X is a random variable defined on probability space (S, p(.)). Then Y = g(X) is also a random variable on the same probability space (if defined everywhere).

E.g., $Y = X^2$, $Z = 3X + 2$

Operations on R.V.s

You can define any random variable you want on a probability space.

Given a collection of random variables, you can sum them, take differences, or do most other math operations…

E.g.,
$(X + Y)(t) = X(t) + Y(t)$
$(X \cdot Y)(t) = X(t) \cdot Y(t)$
$(X^Y)(t) = X(t)^{Y(t)}$

Random variables and expectations allow us to give elegant solutions to problems that seem really really messy…

If I randomly put 100 letters into 100 addressed envelopes, on average how many letters will end up in their correct envelopes?

On average, in class of size m, how many pairs of people will have the same birthday?

Pretty messy with direct counting…

The new tool is called “Linearity of Expectation”
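The formula for E(g(X)) can be tried on the two-coin variable with the slide's examples Y = X² and Z = 3X + 2. This is a small sketch, assuming the two-coin distribution (¼, ½, ¼); the helper `expect` is a hypothetical name introduced only for illustration.

```python
from fractions import Fraction

# Distribution of X = number of heads in two fair coin tosses.
dist_X = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}


def expect(g, dist):
    """E[g(X)] = sum over k in the range of X of g(k) * Pr(X = k)."""
    return sum(g(k) * p for k, p in dist.items())


e_x = expect(lambda k: k, dist_X)           # E[X]
e_y = expect(lambda k: k ** 2, dist_X)      # E[Y] with Y = X^2
e_z = expect(lambda k: 3 * k + 2, dist_X)   # E[Z] with Z = 3X + 2

print(e_x, e_y, e_z)
```

Here E[X²] comes out different from (E[X])², so in general g cannot simply be pulled outside the expectation. For the linear function Z = 3X + 2, the result does equal 3·E[X] + 2.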

Linearity of Expectation

If Z = X + Y, then

$$E[Z] = E[X] + E[Y]$$

Without any conditions on X and Y.

By Induction

$$E[X_1 + X_2 + \dots + X_n] = E[X_1] + E[X_2] + \dots + E[X_n]$$

The expectation of the sum = The sum of the expectations

Let's see why Linearity of Expectation is so useful…

If I randomly put 100 letters into 100 addressed envelopes, on average how many letters will end up in their correct envelopes?

Hmm…

$$\sum_{k} k \Pr(\text{exactly } k \text{ letters end up in correct envelopes}) = \sum_{k} k\, (\text{…aargh!!…})$$

Indicator Random Variables

For any event A, can define the “indicator random variable” for event A:

$$X_A(t) = \begin{cases} 1 & \text{if } t \in A \\ 0 & \text{if } t \notin A \end{cases}$$

$$E[X_A] = 1 \times \Pr(X_A = 1) = \Pr(A)$$

[Figure: a sample space with point probabilities 0.05, 0.05, 0.1, 0.3, 0.2, 0.3 and an event A; the two values of the indicator have probabilities 0.45 and 0.55.]
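Linearity needs no independence, which can be seen on two deliberately dependent variables. The sketch below assumes two fair coins; X counts heads and Y is the indicator of the event "first coin is heads" (a choice made here for illustration, not taken from the slides).

```python
from fractions import Fraction
from itertools import product

# Sample space: the four equally likely outcomes of two fair coin tosses.
outcomes = list(product("HT", repeat=2))
pr = Fraction(1, len(outcomes))


def expect(rv):
    """E[rv] = sum over outcomes t of Pr(t) * rv(t)."""
    return sum(pr * rv(t) for t in outcomes)


def X(t):
    """Number of heads."""
    return t.count("H")


def Y(t):
    """Indicator of the event A = 'first coin is heads'."""
    return 1 if t[0] == "H" else 0


pr_A = sum(pr for t in outcomes if t[0] == "H")

lhs = expect(lambda t: X(t) + Y(t))   # E[X + Y]
rhs = expect(X) + expect(Y)           # E[X] + E[Y]

print(lhs, rhs)          # equal, although X and Y are dependent
print(expect(Y), pr_A)   # E[indicator of A] equals Pr(A)
```

X and Y are dependent because knowing Y = 1 rules out X = 0, yet the two sides still agree.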

Use Linearity of Expectation

Let $A_i$ be the event the i-th letter ends up in its correct envelope.

Let $X_i$ be the “indicator” R.V. for $A_i$:

$$X_i = \begin{cases} 1 & \text{if } A_i \text{ occurs} \\ 0 & \text{otherwise} \end{cases}$$

Let $Z = X_1 + \dots + X_{100}$. We are asking for E[Z].

$$E[X_i] = \Pr(A_i) = 1/100$$

So E[Z] = 1.

So, in expectation, 1 letter will be in its correct envelope.

Pretty neat: it doesn't depend on how many letters!

Use Linearity of Expectation

General approach:
View thing you care about as expected value of some RV.
Write this RV as sum of simpler RVs.
Solve for their expectations and add them up!

Ex. #2

We flip n coins of bias p. What is the expected number of heads?

We could do this by summing

$$\sum_{k} k \Pr(X = k) = \sum_{k} k \binom{n}{k} p^k (1 - p)^{n - k}$$

But now we know a better way!

Linearity of Expectation!

Let X = number of heads when n independent coins of bias p are flipped.

Break X into n simpler RVs:

$$X_i = \begin{cases} 1 & \text{if the } i\text{-th coin is heads} \\ 0 & \text{if the } i\text{-th coin is tails} \end{cases}$$

$$E[X] = E\Big[\sum_i X_i\Big] = \sum_i E[X_i] = \sum_i p = np$$
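Both results can be checked exactly for small cases. The sketch below enumerates all assignments of n letters to n envelopes, with small n as an assumption to keep the enumeration cheap, and evaluates the messy binomial sum directly. The function names are hypothetical.

```python
from fractions import Fraction
from itertools import permutations
from math import comb, factorial


def expected_correct_letters(n):
    """Average number of letters in their correct envelope over all n! assignments."""
    total = sum(
        sum(1 for i, envelope in enumerate(perm) if i == envelope)
        for perm in permutations(range(n))
    )
    return Fraction(total, factorial(n))


def expected_heads(n, p):
    """The 'messy' sum: sum over k of k * C(n, k) * p^k * (1 - p)^(n - k)."""
    return sum(k * comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1))


for n in range(1, 7):
    print(n, expected_correct_letters(n))   # the average does not depend on n

p = Fraction(1, 3)                          # an arbitrary bias chosen for the check
print(expected_heads(10, p), 10 * p)        # direct sum versus n * p
```

The enumeration grows as n!, so it only confirms the answer for small n. The indicator argument gives it for every n at once.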

On average, in class of size m, how many pairs of people will have the same birthday?

$$\sum_{k} k \Pr(\text{exactly } k \text{ pairs}) = \sum_{k} k\, (\text{…aargh!!!!…})$$

Use linearity of expectation

Suppose we have m people, each with a uniformly chosen birthday from 1 to 365.

X = number of pairs of people with the same birthday

E[X] = ?

Use m(m-1)/2 indicator variables, one for each pair of people.

$X_{jk}$ = 1 if person j and person k have the same birthday; else 0

$$E[X_{jk}] = (1/365) \cdot 1 + (1 - 1/365) \cdot 0 = 1/365$$

$$E[X] = E\Big[\sum_{1 \le j < k \le m} X_{jk}\Big] = \sum_{1 \le j < k \le m} E[X_{jk}] = \frac{m(m-1)}{2} \times \frac{1}{365} = \binom{m}{2} \frac{1}{365}$$

Type Checking

P(B): B must be an event.
E(X): X must be a R.V.
Cannot do P(R.V.) or E(event).
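The birthday formula can be compared against exhaustive enumeration when the calendar is tiny. This is a rough sketch; the `days` parameter is an assumption added here so that the brute-force check stays small (the slides fix 365 days), and both function names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations, product
from math import comb


def expected_pairs_formula(m, days=365):
    """E[X] = C(m, 2) / days, from one indicator per pair of people."""
    return Fraction(comb(m, 2), days)


def expected_pairs_brute_force(m, days):
    """Average number of same-birthday pairs over all days^m birthday assignments."""
    total = 0
    for birthdays in product(range(days), repeat=m):
        total += sum(
            1 for j, k in combinations(range(m), 2) if birthdays[j] == birthdays[k]
        )
    return Fraction(total, days ** m)


# Exhaustive check on a toy calendar: 4 people, 5 possible birthdays.
print(expected_pairs_brute_force(4, 5), expected_pairs_formula(4, 5))

# The formula for a class of 23 with the usual 365 days.
print(expected_pairs_formula(23))
```

The pair indicators are not independent of one another in general, but only their individual expectations matter for the sum.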
