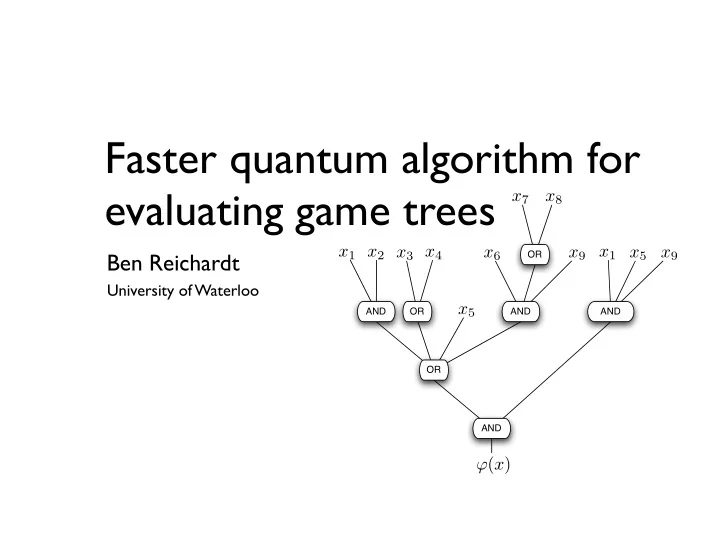

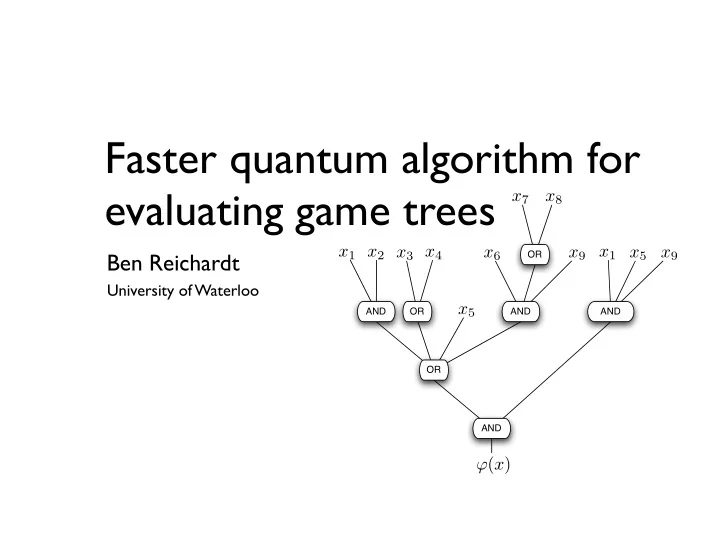

Faster quantum algorithm for evaluating game trees
Ben Reichardt, University of Waterloo
[Figure: an AND-OR formula ϕ(x) drawn as a tree of AND and OR gates over input leaves labelled x1 through x9]


Motivations:
• Two-player games (Chess, Go, …)
  - Nodes ↔ game histories
  - White wins iff ∃ move s.t. ∀ responses, ∃ move s.t. …
• Decision version of min-max tree evaluation
  - inputs are real numbers
  - want to decide if minimax is ≥ 10 or not
• Model for studying effects of composition on complexity
[Figure: the AND-OR formula ϕ(x) from the title slide]
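The decision version of min-max evaluation can be sketched in a few lines. This is only an illustration, assuming a game tree given as nested Python lists with the maximizing player at the root; the names `minimax`, `and_or`, and the threshold parameter `t` are hypothetical and do not come from the talk.

```python
def minimax(tree, maximize=True):
    """Minimax value of a nested-list game tree with numeric leaves."""
    if not isinstance(tree, list):
        return tree
    values = [minimax(child, not maximize) for child in tree]
    return max(values) if maximize else min(values)


def and_or(tree, t, is_or=True):
    """Evaluate the thresholded tree: each leaf becomes the bit (leaf >= t),
    max nodes become OR gates and min nodes become AND gates."""
    if not isinstance(tree, list):
        return tree >= t
    results = [and_or(child, t, not is_or) for child in tree]
    return any(results) if is_or else all(results)


game = [[3, 12], [11, 15], [2, 20]]   # max at the root, min one level below
print(minimax(game) >= 10, and_or(game, 10))
```

Both printed values agree, since "minimax ≥ t" holds exactly when the thresholded AND-OR formula evaluates to true.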

Deterministic decision-tree complexity = N
Any deterministic algorithm for evaluating a read-once AND-OR formula must examine every leaf.
[Figure: balanced binary AND-OR formula ϕ(x) over leaves x1, …, x8]
For balanced, binary formulas: α-β pruning is optimal ⇒ Randomized complexity $N^{0.754}$ [Snir ‘85, Saks & Wigderson ’86, Santha ’95]
$N^{0.51}$ ≤ Randomized complexity ≤ N [Heiman, Wigderson ’91] (see also K. Amano, Session 12B Tuesday)
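The randomized saving on balanced binary formulas comes from short-circuiting: evaluate the two subformulas in random order and skip the second one when the first already decides the gate. Below is a minimal sketch of that idea, assuming the input is a list of booleans whose length is a power of two; the function name `evaluate` and the one-element `counter` list are hypothetical.

```python
import random


def evaluate(bits, lo, hi, is_and, rng, counter):
    """Evaluate a balanced binary AND-OR formula on bits[lo:hi],
    visiting the two subformulas in random order and skipping the
    second one whenever the first already decides the gate."""
    if hi - lo == 1:
        counter[0] += 1            # one leaf query
        return bits[lo]
    mid = (lo + hi) // 2
    halves = [(lo, mid), (mid, hi)]
    rng.shuffle(halves)
    for a, b in halves:
        value = evaluate(bits, a, b, not is_and, rng, counter)
        if value != is_and:        # a False decides AND, a True decides OR
            return value
    return is_and


rng = random.Random(0)
bits = [rng.random() < 0.5 for _ in range(64)]
counter = [0]
result = evaluate(bits, 0, len(bits), True, rng, counter)
print(result, counter[0], "of", len(bits), "leaves queried")
```

The number of leaves queried varies with the random choices; the sketch only illustrates the pruning rule, not the exponent quoted on the slide.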

Deterministic decision-tree complexity = N
$N^{0.51}$ ≤ Randomized complexity ≤ N
Quantum query complexity = √N (very special case of the next talk)
Query model: for an input $x \in \{0,1\}^n$, each query maps $|j\rangle \mapsto (-1)^{x_j}|j\rangle$, e.g. $|1\rangle + |2\rangle \mapsto (-1)^{x_1}|1\rangle + (-1)^{x_2}|2\rangle$.
[Figure: query circuit alternating unitaries $U_0, U_1, \ldots, U_T$ with queries to x, outputting f(x) w/ prob. ≥ 2/3]
This talk: What is the time complexity for quantum algorithms?
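The phase query can be illustrated on a plain list of amplitudes. This is a small sketch with a hypothetical helper name `phase_query`; the state is left unnormalized, as on the slide.

```python
def phase_query(x, amplitudes):
    """Apply |j> -> (-1)^{x_j} |j> to a list of amplitudes."""
    return [(-a if bit else a) for bit, a in zip(x, amplitudes)]


x = [1, 0]
state = [1.0, 1.0]          # the unnormalized state |1> + |2>
print(phase_query(x, state))
```

Applying the query twice returns the original amplitudes, since each phase is ±1.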

Farhi, Goldstone, Gutmann ’07 algorithm
• Theorem ([FGG ’07]): A balanced binary AND-OR formula can be evaluated in time $N^{1/2+o(1)}$.
[Figure: balanced binary AND-OR formula ϕ(x) over leaves x1, …, x8]

• Convert formula to a tree, and attach a line to the root
• Add edges above leaf nodes evaluating to one
[Figure: the resulting graph; the legend distinguishes leaves with input 0 from leaves with input 1]
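The graph construction can be sketched as an edge list. The layout below is an assumption made for illustration: tree nodes use heap indices, the line is joined to the root at its middle node, and each leaf with input 1 receives one extra pendant vertex. The function name `build_graph` and the parameter `half_length` are hypothetical.

```python
def build_graph(bits, half_length):
    """Return the edge set of the graph for a balanced binary formula.

    Assumed layout: tree nodes use heap indices 1..2n-1 (root = 1,
    leaves = n..2n-1, n a power of two); the line has nodes
    -half_length..half_length and its middle node 0 is joined to the
    root; each leaf whose input bit is 1 gets one extra pendant vertex.
    """
    n = len(bits)
    edges = set()
    for child in range(2, 2 * n):                 # tree edges
        edges.add((("tree", child // 2), ("tree", child)))
    for k in range(-half_length, half_length):    # the line
        edges.add((("line", k), ("line", k + 1)))
    edges.add((("line", 0), ("tree", 1)))         # attach the line to the root
    for j, bit in enumerate(bits):                # input-dependent edges
        if bit:
            edges.add((("tree", n + j), ("extra", j)))
    return edges


print(len(build_graph([1, 0, 1, 1], half_length=3)))
```

Only the last loop depends on the input x, so changing one input bit adds or removes a single edge.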


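[Figure: two copies of the graph, one with $x_{11} = 1$ and one with $x_{11} = 0$; panel labels ϕ(x) = 0 and ϕ(x) = 1; the legend distinguishes leaves with input 0 from leaves with input 1]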

$|\psi_t\rangle = e^{iA_G t}|\psi_0\rangle$
[Figure: panels for ϕ(x) = 0 and ϕ(x) = 1]

• ϕ(x) = 0: Wave transmits!
• ϕ(x) = 1: Wave reflects!


What’s going on?
Observe: State inside tree converges to energy-zero eigenstate of the graph (supported on vertices that witness the formula’s value)
[Figure: the graph for ϕ(x) = 0; the legend distinguishes leaves with input 0 from leaves with input 1]

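Energy-zero eigenvectors for AND & OR gadgets
[Figure: the OR gadget and the AND gadget, with amplitudes ±1 on their vertices]
Input adds constraints via dangling edges.
Together in a formula: [Figure: composed graph G with amplitudes +1, −1 and 0 on its vertices]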

Balanced AND-OR formula evaluation in O(√n) time
[Figure: balanced formula graph with amplitudes ±1 on the vertices of a witness]
Squared norm $= 1 + 2 + 2 + 4 + 4 + 8 + 8 + \cdots + 2^{\frac{1}{2}\log_2 n} = O(\sqrt{n})$
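The geometric sum can be checked numerically. The sketch below assumes that the squared norm counts one unit per vertex of a witness subtree in which an AND gate keeps both children and an OR gate keeps one, with an AND gate at the root; that reading of the slide, and the names `witness_level_sizes` and `witness_squared_norm`, are assumptions.

```python
def witness_level_sizes(depth, root_is_and=True):
    """Number of witness vertices on each level of a balanced binary
    AND-OR tree: an AND gate doubles the count, an OR gate keeps it."""
    sizes = [1]
    is_and = root_is_and
    for _ in range(depth):
        sizes.append(sizes[-1] * (2 if is_and else 1))
        is_and = not is_and
    return sizes


def witness_squared_norm(depth, root_is_and=True):
    """Sum of the level sizes, i.e. the squared norm under the
    one-unit-per-vertex assumption."""
    return sum(witness_level_sizes(depth, root_is_and))


for depth in (4, 8, 12):
    n = 2 ** depth
    print(n, witness_squared_norm(depth), 4 * int(n ** 0.5) - 3)
```

For even depth the sum equals 4√n − 3 under these assumptions, which is O(√n) as stated on the slide.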

Effective spectral gap lemma
If $Mu \neq 0$, then $Mu \perp \mathrm{Kernel}(M^\dagger)$, and
$\| \text{projection of } Mu \text{ onto the span of the left singular vectors of } M \text{ with singular values} \le \lambda \| \le \lambda \|u\|$
since, by the SVD $M = \sum_\rho \rho\, |v_\rho\rangle\langle u_\rho|$,
$\|\Pi M u\|^2 = \sum_{\rho \le \lambda} \rho^2\, |\langle u_\rho | u \rangle|^2$
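The inequality can be checked on a small example by building M directly from a chosen singular value decomposition. This is a sketch only: the 2×2 size, the rotation angles, the singular values, and all function names are illustrative assumptions.

```python
import math


def rotation_basis(angle):
    """Two orthonormal vectors in R^2 (the columns of a rotation matrix)."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c, s), (-s, c)]


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def apply_m(rhos, left, right, u):
    """Compute M u for M = sum_rho rho |v_rho><u_rho|."""
    out = [0.0, 0.0]
    for rho, v, w in zip(rhos, left, right):
        coeff = rho * dot(w, u)
        out = [o + coeff * vi for o, vi in zip(out, v)]
    return out


def low_projection_norm(rhos, left, right, u, lam):
    """Norm of the projection of M u onto the left singular vectors
    whose singular value is at most lam."""
    mu = apply_m(rhos, left, right, u)
    parts = [dot(v, mu) for rho, v in zip(rhos, left) if rho <= lam]
    return math.sqrt(sum(p * p for p in parts))


rhos = [0.1, 2.0]                 # singular values (illustrative)
left = rotation_basis(0.3)        # left singular vectors v_rho
right = rotation_basis(1.1)       # right singular vectors u_rho
u = (1.0, 2.0)
lam = 0.5
print(low_projection_norm(rhos, left, right, u, lam) <= lam * math.sqrt(dot(u, u)))
```

With λ = 0.5 only the singular value 0.1 contributes, so the projected norm is at most 0.1·‖u‖, comfortably below λ‖u‖.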

Case φ(x)=1
[Figure: graph with amplitudes ±1 on the witness vertices and labels $n^{1/4}$ and $1/n^{1/4}$]
Squared norm = O(√n)
Constant overlap on root vertex

Case φ(x)=1: Eigenvalue-zero eigenvector with constant overlap on root vertex
Case φ(x)=0: Root vertex has Ω(1/√n) effective spectral gap
[Figure: the weighted graph in each case, with labels $n^{1/4}$ and $1/n^{1/4}$; in the φ(x)=0 case a vector $u$ and its image $Mu$ at the root are marked]
Recall: $\|\Pi M u\| \le \lambda \|u\|$, where Π projects onto the span of the left singular vectors of M with singular values ≤ λ

Quantum algorithm: Run a quantum walk on the graph, for √n steps from the root.
• φ(x)=1 ⇒ walk is stationary
• φ(x)=0 ⇒ walk mixes

Evaluating unbalanced formulas [Ambainis, Childs, Reichardt, Špalek, Zhang ’10]
Proper edge weights on an unbalanced formula give √(n · depth) queries
[Figure: a maximally unbalanced formula: depth n, spectral gap 1/n]
“Rebalancing” Theorem: For any AND-OR formula with n leaves, there is an equivalent formula with $n\,e^{\sqrt{\log n}}$ leaves, and depth $e^{\sqrt{\log n}}$ [Bshouty, Cleve, Eberly ’91, Bonet, Buss ’94]
⇒ $O(\sqrt{n}\,e^{\sqrt{\log n}})$ query algorithm
Today: $O(\sqrt{n}\,\log n)$

[Figure: the OR and AND gadgets under direct-sum composition and under tensor-product composition]

[Figure: direct-sum composition versus tensor-product composition, shown on a formula with ∨ and ∧ gates]

Tensor-product composition
Properties
• Depth from root stays ≤ 2, giving a 1/√n spectral gap
• Graph stays sparse, provided composition is along the maximally unbalanced formula
• Middle vertices ↔ Maximal false inputs
[Figure: composed graph for a formula with ∨ and ∧ gates; vertices labelled by bit strings 010, 0010, 1100, 100, 01010, 10010, 00100]

Final algorithm
• With direct-sum composition, large depth implies small spectral gap
• Tensor-product composition gives √n-query algorithm (optimal), but graph is dense and norm too large for efficient implementation of quantum walk
• Hybrid approach:
  • Decompose the formula into paths, longer in less balanced areas (sort subformulas by size)
  • Along each path, tensor-product
  • Between paths, direct-sum
• Tradeoff gives 1/(√n log n) spectral gap, while maintaining sparsity and small norm
⇒ Quantum walk has efficient implementation (poly-log n after preprocessing)
ACRŠZ ’10: $\sqrt{n}\,e^{\sqrt{\log n}}$; today: $\sqrt{n}\,\log n$
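One way to picture a path decomposition is the heavy-path-style rule sketched below: each path continues into the largest subformula, and every other subformula starts a new path. This rule is an assumption chosen for illustration and is not claimed to be the exact rule used in the talk. Formulas are nested Python lists with gate types omitted, and the names `size` and `decompose` are hypothetical.

```python
def size(node):
    """Number of leaves under a node (leaves are non-list values)."""
    if not isinstance(node, list):
        return 1
    return sum(size(child) for child in node)


def decompose(node, paths=None):
    """Split a formula tree into paths of gates by always continuing
    into the largest subformula; every other subformula that is itself
    a gate starts a new path."""
    if paths is None:
        paths = []
    path = []
    paths.append(path)
    while isinstance(node, list):
        path.append(node)
        children = sorted(node, key=size, reverse=True)  # sort subformulas by size
        for smaller in children[1:]:
            if isinstance(smaller, list):
                decompose(smaller, paths)
        node = children[0]
    return paths


unbalanced = [[[["a", "b"], "c"], "d"], "e"]
balanced = [["a", "b"], ["c", "d"]]
print([len(p) for p in decompose(unbalanced)])
print([len(p) for p in decompose(balanced)])
```

Under this rule the maximally unbalanced formula yields a single long path, while the balanced one splits into shorter paths, matching the slide's remark that paths are longer in less balanced areas.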
