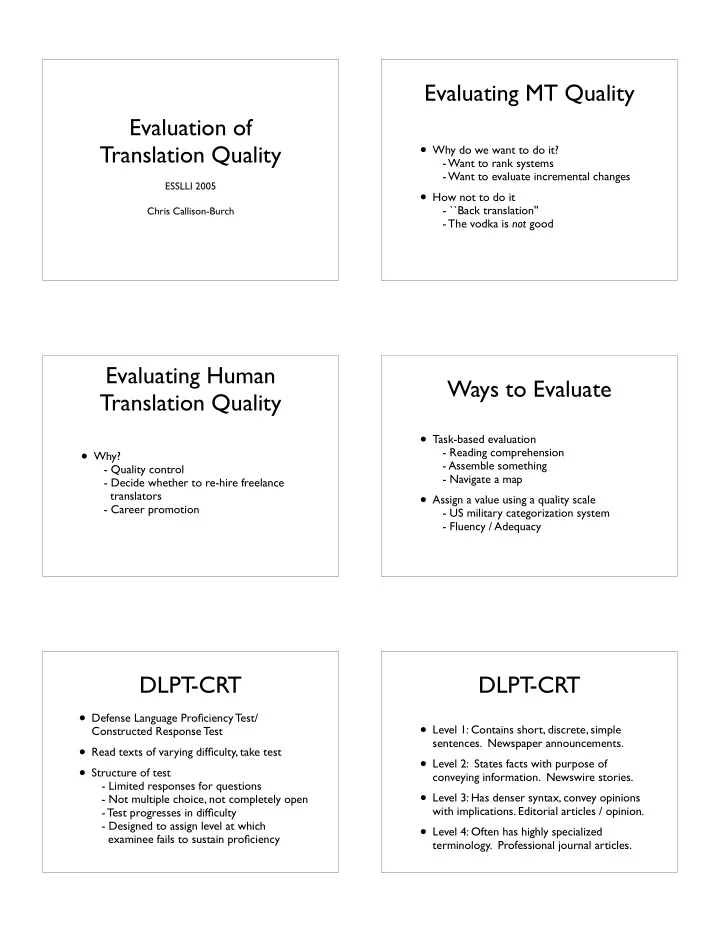

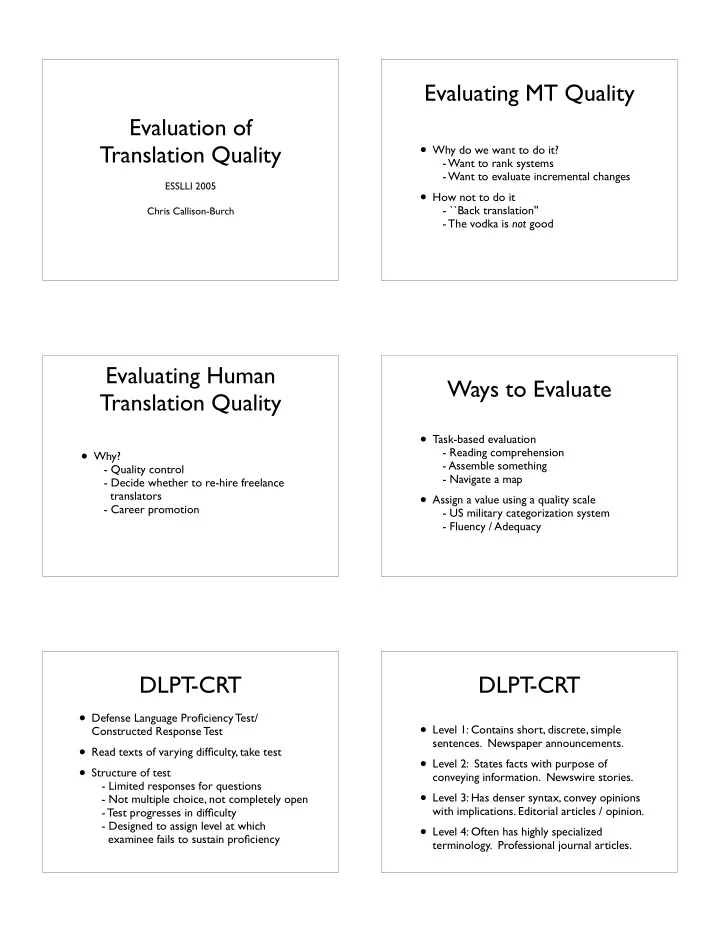

Evaluation of Translation Quality
ESSLLI 2005
Chris Callison-Burch

Evaluating MT Quality
• Why do we want to do it?
  - Want to rank systems
  - Want to evaluate incremental changes
• How not to do it
  - "Back translation"
  - The vodka is not good

Evaluating Human Translation Quality
• Why?
  - Quality control
  - Decide whether to re-hire freelance translators
  - Career promotion

Ways to Evaluate
• Task-based evaluation
  - Reading comprehension
  - Assemble something
  - Navigate a map
• Assign a value using a quality scale
  - US military categorization system
  - Fluency / Adequacy

DLPT-CRT
• Defense Language Proficiency Test / Constructed Response Test
• Read texts of varying difficulty, take test
• Structure of test
  - Limited responses for questions
  - Not multiple choice, not completely open
  - Test progresses in difficulty
  - Designed to assign level at which examinee fails to sustain proficiency

DLPT-CRT
• Level 1: Contains short, discrete, simple sentences. Newspaper announcements.
• Level 2: States facts with purpose of conveying information. Newswire stories.
• Level 3: Has denser syntax, conveys opinions with implications. Editorial articles / opinion.
• Level 4: Often has highly specialized terminology. Professional journal articles.

Human Evaluation of Machine Translation
• One group has tried applying DLPT-CRT to machine translation
  - Translate texts using MT system
  - Have monolingual individuals take test
  - See what level they perform at
• Much more common to have human evaluators simply assign a scale directly using fluency / adequacy scales

Fluency
• 5 point scale
  5) Flawless English
  4) Good English
  3) Non-native English
  2) Disfluent
  1) Incomprehensible

Adequacy
• This text contains how much of the information in the reference translation:
  5) All
  4) Most
  3) Much
  2) Little
  1) None

Human Evaluation of MT v. Automatic Evaluation
• Human evaluation is
  - Ultimately what we're interested in, but
  - Very time consuming
  - Not re-usable
• Automatic evaluation is
  - Cheap and reusable, but
  - Not necessarily reliable

Goals for Automatic Evaluation
• No cost evaluation for incremental changes
• Ability to rank systems
• Ability to identify which sentences we're doing poorly on, and categorize errors
• Correlation with human judgments
• Interpretability of the score

Methodology
• Comparison against reference translations
• Intuition: closer we get to human translations, the better we're doing
• Could use WER like in speech recognition
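The suggestion to use WER as in speech recognition can be made concrete with a small sketch. It assumes whitespace tokenization and the usual normalization by reference length, neither of which is specified in the slides; the function name `word_error_rate` and the example sentences are illustrative only.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length.

    Assumptions (not from the slides): whitespace tokenization and
    unit cost for each insertion, deletion, and substitution.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference prefix
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # delete a reference word
                curr[j - 1] + 1,     # insert a hypothesis word
                prev[j - 1] + cost,  # match or substitute
            ))
        prev = curr
    return prev[-1] / len(ref)


# One inserted word against a four-word reference.
example_wer = word_error_rate("the vodka is good", "the vodka is not good")
```

Because the score is computed against a single reference in a fixed word order, a valid translation that is worded differently from the reference is penalized just like a wrong one.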

Word Error Rate
• Levenshtein Distance (also "edit distance")
• Minimum number of insertions, substitutions, and deletions needed to transform one string into another
• Useful measure in speech recognition
  - Shows how easy it is to recognize speech
  - Shows how easy it is to wreck a nice beach

Problems with WER
• Unlike speech recognition we don't have the assumptions of
  - linearity
  - exact match against the reference
• In machine translation there can be many possible (and equally valid) ways of translating a sentence
• Also, clauses can move around, since we're not doing transcription

Solutions
• Compare against lots of test sentences
• Use multiple reference translations for each test sentence
• Look for phrase / n-gram matches, allow movement

Metrics
• Exact sentence match
• WER
• PI-WER
• Bleu
• Precision / Recall
• Meteor

Bleu
• Use multiple reference translations
• Look for n-grams that occur anywhere in the sentence
• Also has "brevity penalty"
• Goal: Distinguish which system has better quality (correlation with human judgments)

Example Bleu
R1: It is a guide to action that ensures that the military will forever heed Party commands.
R2: It is the Guiding Principle which guarantees the military forces always being under the command of the Party.
R3: It is the practical guide for the army always to heed the directions of the party.
C1: It is to insure the troops forever hearing the activity guidebook that party direct.
C2: It is a guide to action which ensures that the military always obeys the command of the party.
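The Bleu ingredients listed above (multiple references, n-gram matches anywhere in the sentence, a brevity penalty) can be sketched on the example sentences. The slides do not give the formula, so this sketch assumes the standard definition: clipped n-gram precisions for n = 1 to 4, combined by a geometric mean and multiplied by the brevity penalty. The lowercasing tokenizer and all function names are illustrative, and scoring a single sentence is a simplification, since Bleu is normally computed over a whole test set.

```python
import math
import re
from collections import Counter


def tokenize(text):
    # Assumption: lowercase and keep only word characters.
    return re.findall(r"\w+", text.lower())


def ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, references, n):
    """Fraction of candidate n-grams found in a reference, with each
    n-gram's count clipped to its maximum count in any one reference."""
    cand_counts = ngrams(candidate, n)
    if not cand_counts:
        return 0.0
    max_ref = Counter()
    for ref in references:
        for gram, count in ngrams(ref, n).items():
            max_ref[gram] = max(max_ref[gram], count)
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())


def brevity_penalty(candidate, references):
    c = len(candidate)
    if c == 0:
        return 0.0
    # Reference length closest to the candidate length (ties go to the shorter).
    r = min((len(ref) for ref in references), key=lambda length: (abs(length - c), length))
    return 1.0 if c > r else math.exp(1 - r / c)


def bleu(candidate, references, max_n=4):
    cand = tokenize(candidate)
    refs = [tokenize(ref) for ref in references]
    precisions = [modified_precision(cand, refs, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0  # the geometric mean is zero if any precision is zero
    log_mean = sum(math.log(p) for p in precisions) / max_n
    return brevity_penalty(cand, refs) * math.exp(log_mean)


REFS = [
    "It is a guide to action that ensures that the military will forever heed Party commands.",
    "It is the Guiding Principle which guarantees the military forces always being under the command of the Party.",
    "It is the practical guide for the army always to heed the directions of the party.",
]
C1 = "It is to insure the troops forever hearing the activity guidebook that party direct."
C2 = "It is a guide to action which ensures that the military always obeys the command of the party."

score_c1 = bleu(C1, REFS)
score_c2 = bleu(C2, REFS)
```

Under these assumptions C2 scores higher than C1, because it shares more and longer n-grams with the references. Clipping is what stops a candidate from being rewarded for repeating a common word more often than any reference does.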

Automated evaluation
• Because C2 has more n-grams and longer n-grams than C1 it receives a higher score
• Bleu has been shown to correlate with human judgments of translation quality
• Bleu has been adopted by DARPA in its annual machine translation evaluation

Interpretability of the score
• How many errors are we making?
• How much better is one system compared to another?
• How useful is it?
• How much would we have to improve to be useful?

Evaluating an evaluation metric
• How well does it correlate with human judgments?
  - On a system level
  - On a per sentence level
• Data for testing correlation with human judgments of translation quality
• Human judgments for translations produced by each system

NIST MT Evaluation
• Annual Arabic-English and Chinese-English competitions
• 10 systems
• 1000+ sentences each
• Scored by Bleu and human judgments
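The system-level correlation question can be sketched as follows. The slides do not say which correlation coefficient is used, so Pearson's r is an assumption here, and the scores below are made-up placeholders rather than NIST results.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of the same length, at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: one automatic score and one averaged human
# judgment per system. These numbers are invented for illustration.
metric_scores = [0.21, 0.25, 0.30, 0.34]
human_scores = [2.8, 3.1, 3.3, 3.9]

system_level_r = pearson(metric_scores, human_scores)
```

The same function applies at the sentence level by passing one score pair per sentence instead of one per system.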

Tricks with automatic evaluation
• Learning curves: show how increasing training data improves statistical MT
• Euromatrix: create translation systems for every pair of European languages, and give performance scores
• Finite state graphs from multi-reference translations: exact sentence matches possible?

Multi-reference Evaluation
[Figure: word graph combining several reference translations]
• Pang and Knight (2003) suggest using multi-reference evaluation to do exact match
• Combine references into a word graph with hundreds of paths through it
• Most paths correspond to good sentences

Final thoughts on Evaluation

When writing a paper
• If you're writing a paper that claims that
  - one approach to machine translation is better than another, or that
  - some modification you've made to a system has improved translation quality
• Then you need to back up that claim
• Evaluation metrics can help, but good experimental design is also critical

Experimental Design
• Importance of separating out training / test / development sets
• Importance of standardized data sets
• Importance of standardized evaluation metric
• Error analysis
• Statistical significance tests for differences between systems

Invent your own evaluation metric
• If you think that Bleu is inadequate then invent your own automatic evaluation metric
• Can it be applied automatically?
• Does it correlate better with human judgment?
• Does it give a finer grained analysis of mistakes?
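The Experimental Design slide calls for statistical significance tests but does not name one. A minimal sketch of one common choice, paired bootstrap resampling, is given below as an assumption rather than as the method the slides intend. It also assumes that each system has a per-sentence score on the same test sentences; a corpus-level metric such as Bleu would need to be recomputed on each resampled set instead of summed.

```python
import random


def paired_bootstrap(scores_a, scores_b, samples=1000, seed=0):
    """Fraction of resampled test sets on which system A beats system B.

    scores_a[i] and scores_b[i] are the two systems' scores on the
    same test sentence i (hypothetical per-sentence scores).
    """
    if len(scores_a) != len(scores_b) or not scores_a:
        raise ValueError("need two non-empty score lists of equal length")
    rng = random.Random(seed)
    n = len(scores_a)
    wins = 0
    for _ in range(samples):
        # Draw n sentence indices with replacement and compare totals
        # on exactly the same resampled sentences for both systems.
        indices = [rng.randrange(n) for _ in range(n)]
        total_a = sum(scores_a[i] for i in indices)
        total_b = sum(scores_b[i] for i in indices)
        if total_a > total_b:
            wins += 1
    return wins / samples
```

A value close to 1.0 suggests that A's advantage does not depend on which test sentences happened to be sampled; a value near 0.5 suggests the observed difference could easily be noise.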

Evaluation drives MT research
• Metrics can drive the research for the topics that they evaluate
• NIST MT Eval / DARPA Sponsorship
• Bleu has led to a focus on phrase-based translation
• Minimum error rate training
• Other metrics may similarly change the community's focus

Other Uses for Parallel Corpora
Chris Callison-Burch
ESSLLI 2005

Statistical NLP and Training Data
• Most statistical natural language processing applications require training data
  - Statistical parsing requires treebanks
  - WSD requires text labeled w/ word senses
  - NER requires text w/ named entities
  - SMT requires parallel corpora

Cost of Creating Training Data
• Creating this training data is usually time consuming and expensive
• As a result the amount of training data is often limited
• SMT is different
  - Parallel corpora are created by other human industry
  - For some language pairs huge data sets are available

Exploiting Parallel Corpora
• Can we use this abundant resource for tasks other than machine translation?
• Can we use it to alleviate the cost of creating training data?
• Can we use it to port resources to other languages?

Three Applications of Parallel Corpora
• Automatic generation of paraphrases
• Creating training data for WSD
• "Projecting" annotations through parallel corpora so that they can be applied to new languages
