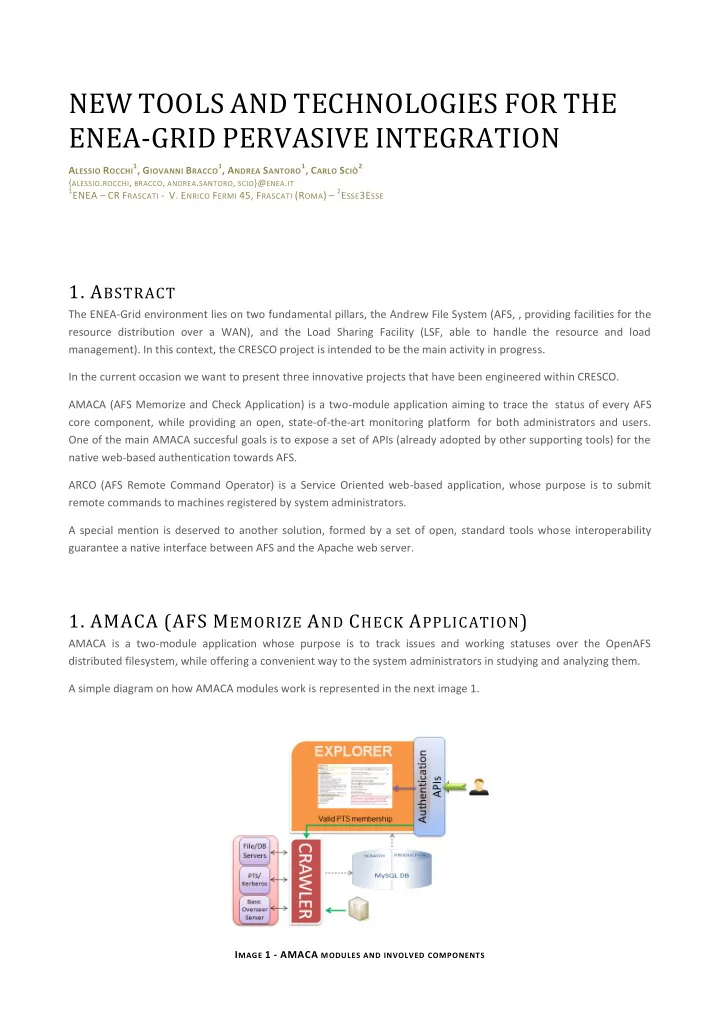

NEW TOOLS AND TECHNOLOGIES FOR THE ENEA-GRID PERVASIVE INTEGRATION

Alessio Rocchi¹, Giovanni Bracco¹, Andrea Santoro¹, Carlo Sciò²
{alessio.rocchi, bracco, andrea.santoro, scio}@enea.it
¹ ENEA – CR Frascati, V. Enrico Fermi 45, Frascati (Roma)
² Esse3Esse

Abstract

The ENEA-Grid environment rests on two fundamental pillars: the Andrew File System (AFS), which provides facilities for distributing resources over a WAN, and the Load Sharing Facility (LSF), which handles resource and load management. In this context, the CRESCO project is the main ongoing activity. Here we present three innovative projects that have been engineered within CRESCO. AMACA (AFS Memorize And Check Application) is a two-module application that traces the status of every AFS core component, while providing an open, state-of-the-art monitoring platform for both administrators and users. One of AMACA's main achievements is a set of APIs (already adopted by other supporting tools) for native web-based authentication against AFS. ARCO (AFS Remote Command Operator) is a service-oriented, web-based application for submitting remote commands to machines registered by system administrators. A third solution deserves special mention: a set of open, standard tools whose interoperability guarantees a native interface between AFS and the Apache web server.

1. AMACA (AFS Memorize And Check Application)

AMACA is a two-module application whose purpose is to track issues and working statuses of the OpenAFS distributed filesystem, while offering system administrators a convenient way to study and analyze them. Image 1 shows how the AMACA modules work.

Image 1 - AMACA modules and involved components

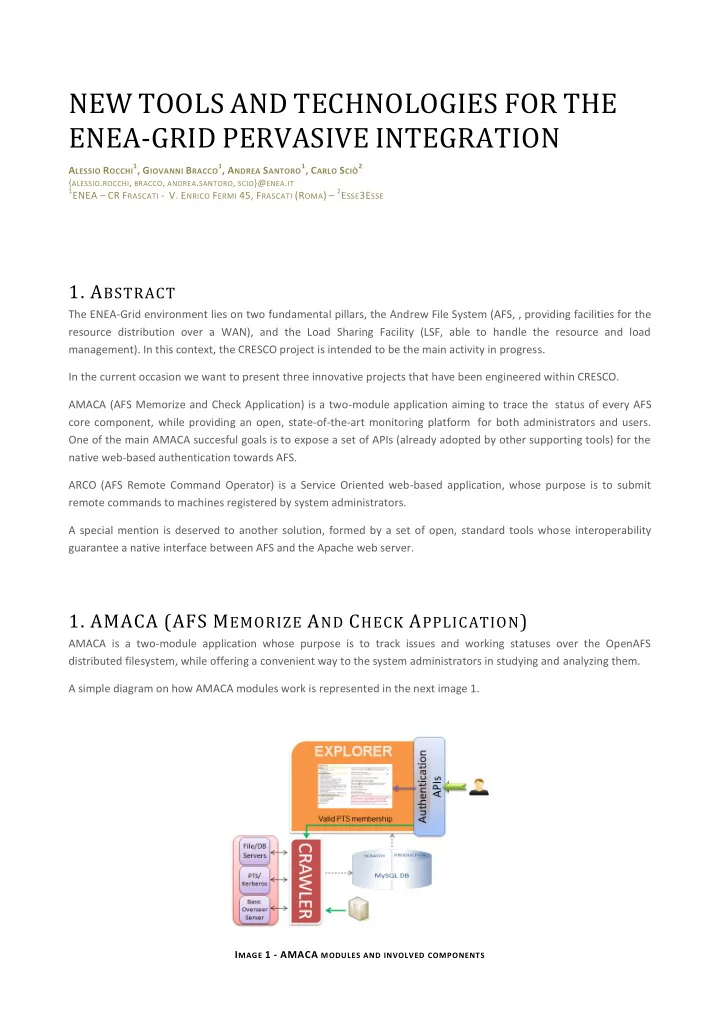

1.1 Crawler

The crawler module of AMACA (based on N. Gruener’s AFS APIs) is a Perl application that checks the status of every AFS core component. The indexing results are handled by a memorization module, which stores the history of file system events in a MySQL backend, making subsequent fine-grained data mining easier. Each crawler invocation is distinguished from the others by a unique ID called a snapshot. Since the crawler can be invoked either directly (e.g. a user belonging to a certain PTS group explicitly runs it via the web Explorer module) or indirectly (e.g. its execution is scheduled in the operating system’s cron), the database is also split in two: a “scratch” portion is dedicated to direct invocations, so that administrators can troubleshoot and immediately see the effect of their intervention without interfering with the global data.

1.2 Explorer

The Explorer module of AMACA is a Web 2.0 application, written in PHP with some AJAX elements, that provides a comfortable interface for analyzing the crawling results. It supports both the visualization of “static data” (e.g. size and number of volumes and partitions, sync site, alarms about critical events) and interactive queries. The Explorer is adaptive: all displayed data can be restricted to a particular snapshot or domain, in order to focus only on situations detected locally.

2. Authentication APIs

AMACA exposes a set of well-structured interfaces for user web authentication over OpenAFS. The API implements an IPC mechanism with the PAG shell, so that a user can access a protected resource simply by providing their ENEA-GRID username and password, in a convenient, integrated way.
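The snapshot mechanism of Section 1.1 and the Explorer’s snapshot/domain filter of Section 1.2 can be illustrated with a minimal Python sketch. It is not AMACA’s implementation (the crawler is written in Perl and stores its data in MySQL): here Python’s built-in sqlite3 stands in for MySQL, and the table names, columns and run kinds (`scheduled`, `manual`) are assumptions made for illustration.

```python
import sqlite3
import uuid
from typing import List, Optional, Tuple

# Hypothetical layout: scheduled (cron) runs go to a "global" table, direct
# runs go to a "scratch" table, so manual checks never pollute the history.
TABLES = {"scheduled": "global_events", "manual": "scratch_events"}

SCHEMA = """
CREATE TABLE IF NOT EXISTS {table} (
    snapshot  TEXT NOT NULL,  -- unique ID of one crawler invocation
    domain    TEXT NOT NULL,  -- site or cell the checked component belongs to
    component TEXT NOT NULL,  -- e.g. a file server or database server
    status    TEXT NOT NULL   -- outcome of the check
)
"""

Row = Tuple[str, str, str]  # (domain, component, status)


class SnapshotStore:
    """Toy memorization module: every crawler run becomes one snapshot."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        for table in TABLES.values():
            conn.execute(SCHEMA.format(table=table))

    def record_run(self, kind: str, results: List[Row]) -> str:
        """Store the results of one run and return its new snapshot ID."""
        table = TABLES[kind]  # raises KeyError for an unknown run kind
        snapshot = uuid.uuid4().hex
        with self.conn:  # commits all rows of the run together, or none
            self.conn.executemany(
                f"INSERT INTO {table} VALUES (?, ?, ?, ?)",
                [(snapshot, domain, comp, status) for domain, comp, status in results],
            )
        return snapshot

    def query(self, kind: str, snapshot: Optional[str] = None,
              domain: Optional[str] = None) -> List[Row]:
        """Explorer-style view, optionally restricted to a snapshot and/or domain."""
        sql = f"SELECT domain, component, status FROM {TABLES[kind]} WHERE 1 = 1"
        params: List[str] = []
        if snapshot is not None:
            sql += " AND snapshot = ?"
            params.append(snapshot)
        if domain is not None:
            sql += " AND domain = ?"
            params.append(domain)
        return self.conn.execute(sql + " ORDER BY component", params).fetchall()
```

Rows recorded with `record_run("manual", ...)` land only in the scratch table, so `query("scheduled", ...)` keeps returning the unaltered history of cron-driven runs.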
The authentication APIs work atomically, because the PAG shell provides a user-aware isolation level for the token, an object that would otherwise exist in only one instance at a time and thus cause harmful race conditions. They also work securely, thanks to two cryptographic layers: SSL, and RSA via mcrypt-lib.

Image 2 - Authentication APIs business diagram

3. ARCO (AFS Remote Command Operator)

ARCO is an application engineered to execute commands on remote machines. It was originally meant to focus entirely on LSF MultiCluster, but it soon became capable of handling any remote tool.
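The ARCO model described in this section (administrator-registered machine/service associations, a PTS group check before execution, and a log entry for every task) can be sketched as follows. It is a hypothetical illustration rather than ARCO’s code: the registry file format, class, method and group names are invented, in-memory structures stand in for the MySQL backend, and ssh appears only as an example transport. The default runner performs a dry run.

```python
import shlex
from typing import Callable, Dict, Iterable, List, Optional, Set, Tuple

Runner = Callable[[List[str]], str]


class RemoteCommandOperator:
    """Toy ARCO-like dispatcher; all names and formats are hypothetical."""

    def __init__(self, allowed_groups: Iterable[str],
                 runner: Optional[Runner] = None) -> None:
        self.allowed_groups: Set[str] = set(allowed_groups)
        self.services: Dict[str, Set[str]] = {}         # machine -> services
        self.log: List[Tuple[str, str, str, str]] = []  # (user, machine, command, outcome)
        # Default transport only renders the command line (dry run).
        self.runner: Runner = runner or (lambda argv: "dry-run: " + shlex.join(argv))

    def load_registry(self, text: str) -> None:
        """Parse assumed 'machine service [service ...]' lines; '#' starts a comment."""
        for raw in text.splitlines():
            line = raw.split("#", 1)[0].strip()
            if not line:
                continue
            machine, *services = line.split()
            self.services.setdefault(machine, set()).update(services)

    def execute(self, user: str, user_groups: Iterable[str],
                machine: str, service: str, command: str) -> str:
        """Run a command for a registered machine/service pair; log every attempt."""
        if not self.allowed_groups & set(user_groups):
            outcome = "denied: user is not in an authorized PTS group"
        elif service not in self.services.get(machine, set()):
            outcome = "rejected: service not registered on this machine"
        else:
            # ssh is an illustrative transport, not necessarily what ARCO uses.
            outcome = self.runner(["ssh", machine, *shlex.split(command)])
        self.log.append((user, machine, command, outcome))
        return outcome
```

For example, after `load_registry("node02 lsf\n")`, a member of an authorized group calling `execute(..., "node02", "lsf", "badmin reconfig")` gets the dry-run string `dry-run: ssh node02 badmin reconfig`, and the attempt is logged whether it succeeds, is denied or is rejected.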

Image 3 - Pseudo-UML use case diagram

With ARCO (Image 3), an administrator can register the services to be managed and the machines hosting them (simply by loading files in LSF/text format; support for other file types is planned), and then execute commands in a visual, convenient and secure way. Every task ARCO performs is logged (Image 4) in a MySQL backend, which also stores the machine/service associations.

Image 4 - ARCO working model

For authentication, ARCO uses the same APIs as AMACA, extended to better support checks against multiple PTS groups.

4. Apache and AFS integration

Integrating the Apache web server with OpenAFS involved several tasks, addressing three main scenarios:

1. Publishing the pages of users, projects and software simply by linking them from the document root to the AFS shared space where they are stored. This is accomplished by a set of scripts that populate the web root incrementally.

Image 5 - Common scenario

2. Resources accessible only by selected users or groups (Image 6). This need cannot be met with the authentication APIs (they always require an index file for cookie processing, whereas users simply want to share a plain list of resources), so it has to be handled at the Apache level.

Image 6 - Scenario with protected resources

We engineered a patch for Jan Wolter’s mod_authnz_external Apache module (which bypasses Apache’s built-in authentication mechanism so that authentication can be tailored to users’ needs), enabling it to export the physical path of the current URI. The Apache environment is passed to two other software modules, which check the requestor’s group membership and map the result onto the current directory’s rights.

Image 7 - Working model of the entire system

Issue (partially solved): a slight increase in response time. The interpreter is called at every step of the directory walk, which introduces a small overhead.

3. Security: the “symlink-aware” structure could be harmful, since a careless user could create links to sensitive system files without any control. We therefore had to:

• Force Apache to follow a link only if its owner matches the owner of the link’s target (system files are usually owned by root);

• Populate the passwd file with the AFS user names, so that every linked page can be chowned to its AFS owner (while allowing only root to log in when a remote access request arrives via ssh).
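The two security measures above can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors’ configuration: the function names are hypothetical, the owner check mirrors the rule in the first bullet (and resembles what Apache’s `Options SymLinksIfOwnerMatch` does), and the chown helper assumes it runs as root on a Unix system where the AFS user names have already been added to the local passwd file.

```python
import os
import pwd
import tempfile


def symlink_owner_matches(path: str) -> bool:
    """Return True if `path` may be served under an owner-match policy.

    Regular files pass. A symbolic link passes only when the link itself and
    the file it finally resolves to have the same owner, so a user-owned link
    pointing at a root-owned system file is refused.
    """
    if not os.path.islink(path):
        return True
    link_uid = os.lstat(path).st_uid  # owner of the link itself
    try:
        target_uid = os.stat(path).st_uid  # owner of the resolved target
    except OSError:
        return False  # dangling or looping link: refuse it
    return link_uid == target_uid


def chown_link_to_afs_owner(link: str, afs_user: str) -> None:
    """Give a web-root link the uid of its AFS owner (hypothetical helper, needs root).

    pwd.getpwnam only finds the user if the AFS user name has been added
    to the local passwd file; otherwise it raises KeyError.
    """
    uid = pwd.getpwnam(afs_user).pw_uid
    os.lchown(link, uid, -1)  # change the link, not its target; keep the group


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as webroot:
        page = os.path.join(webroot, "index.html")
        open(page, "w").close()
        link = os.path.join(webroot, "home")
        os.symlink(page, link)
        print(symlink_owner_matches(link))  # True: both created by this process
```

The helper changes the owner of the link rather than the target because, under an owner-match policy, it is the link created by the web-root scripts that must end up owned by the same user as the AFS page it points to.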
