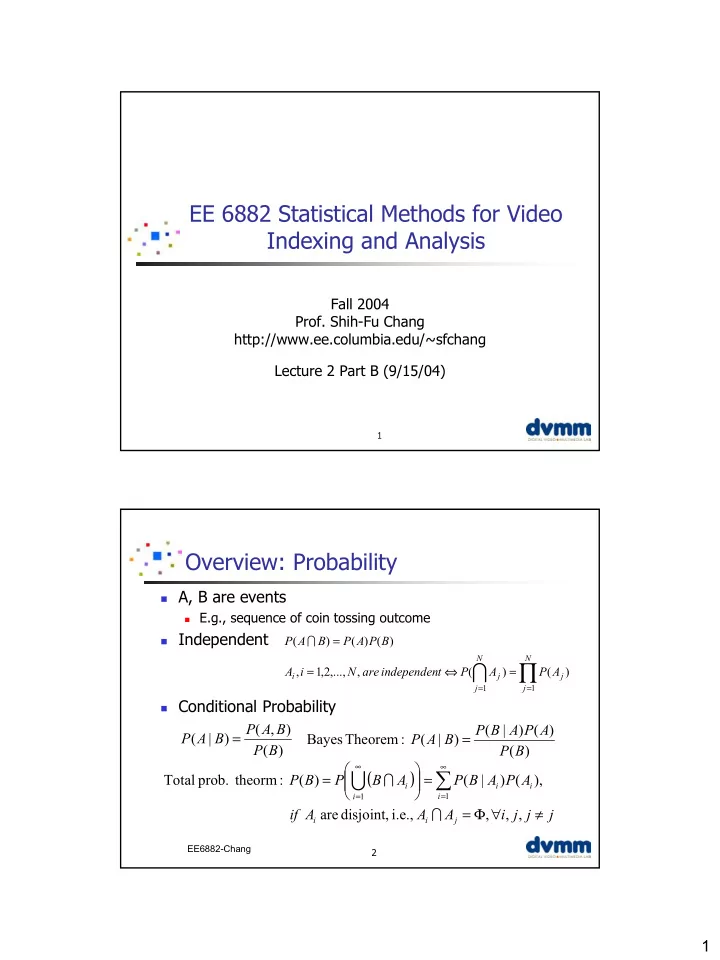

EE 6882 Statistical Methods for Video Indexing and Analysis, Fall 2004
Prof. Shih-Fu Chang, http://www.ee.columbia.edu/~sfchang
Lecture 2 Part B (9/15/04)

Overview: Probability

- A, B are events, e.g., a sequence of coin-tossing outcomes.
- Independent: $P(A \cap B) = P(A)\,P(B)$
  - $A_i,\ i = 1, 2, \dots, N$, are independent $\Leftrightarrow P\left(\bigcap_{j=1}^{N} A_j\right) = \prod_{j=1}^{N} P(A_j)$
- Conditional probability: $P(A \mid B) = \dfrac{P(A, B)}{P(B)}$
  - Bayes theorem: $P(A \mid B) = \dfrac{P(B \mid A)\,P(A)}{P(B)}$
  - Total probability theorem: $P(B) = P\left(\bigcup_{i=1}^{\infty} (B \cap A_i)\right) = \sum_{i=1}^{\infty} P(B \mid A_i)\,P(A_i)$, if the $A_i$ are disjoint, i.e., $A_i \cap A_j = \Phi,\ \forall i, j,\ i \neq j$
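As a small numeric illustration of the Bayes and total probability theorems above, the following sketch uses made-up numbers. The three events, their priors, and the likelihoods are hypothetical, and the events are assumed to be disjoint and to cover the sample space.

```python
# Hypothetical numbers: three disjoint events A_1..A_3 assumed to cover the sample space.
prior = [0.5, 0.3, 0.2]          # P(A_i), sums to 1
likelihood = [0.10, 0.40, 0.80]  # P(B | A_i)

# Total probability theorem: P(B) = sum_i P(B | A_i) P(A_i)
p_b = sum(l * p for l, p in zip(likelihood, prior))

# Bayes theorem: P(A_i | B) = P(B | A_i) P(A_i) / P(B)
posterior = [l * p / p_b for l, p in zip(likelihood, prior)]

print("P(B) =", p_b)
print("P(A_i | B) =", posterior)
```

The posteriors sum to 1 because the denominator P(B) is exactly the sum of the numerators.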

Probability

- Independence and mutual exclusion (uncorrelated) are different:
  - $P(A \cap B) = 0$ with $P(A), P(B) \neq 0$ $\Rightarrow$ A and B are mutually exclusive
  - But then $P(A \cap B) \neq P(A)\,P(B)$ $\Rightarrow$ A and B are not independent
- Probability of a random variable
  - Cumulative distribution function (cdf): $F_X(x) = \text{Prob}\{X \in (-\infty, x]\}$
  - Probability density function (pdf): $P_X(x) = \left.\dfrac{dF_X(x')}{dx'}\right|_{x' = x}$; for a discrete variable, $P_X(x) = \text{Prob}\{X = x\}$
- $x_1, \dots, x_N$ are independent iff
  $P(x_{i_1} \le x_1; \dots; x_{i_n} \le x_n) = \prod_{k=1}^{n} P(x_{i_k} \le x_k),\ \forall i,\ n \le N$

Probability

- Joint distribution: $F_{X,Y}(x, y) = \text{Prob}\{X \le x,\ Y \le y\}$
- Joint density: $f_{X,Y}(x, y) = \left.\dfrac{\partial^2}{\partial x'\,\partial y'} F_{X,Y}(x', y')\right|_{x' = x,\ y' = y}$
- Marginal distribution: $f_X(x) = \int_{R} f_{X,Y}(x, y)\,dy$
- Conditional probability: $f_X(x \mid y) = \dfrac{f_{X,Y}(x, y)}{f_Y(y)}$ (note: a function of both x and y)
  - Bayes theorem: $f_X(x \mid y) = \dfrac{f_Y(y \mid x)\,f_X(x)}{f_Y(y)}$
  - Total probability theorem: $f_X(x) = \int f_X(x \mid y)\,f_Y(y)\,dy$
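The marginal, conditional, and total probability relations above can be checked on a discrete analogue, where the integrals become sums. This is only a sketch: the joint table below is hypothetical, and the dictionary names are chosen for illustration.

```python
# Hypothetical joint probability table P(X = x, Y = y) for binary X and Y.
joint = {(0, 0): 0.1, (0, 1): 0.3, (1, 0): 0.2, (1, 1): 0.4}
xs = sorted({x for x, _ in joint})
ys = sorted({y for _, y in joint})

# Marginals: sum the joint over the other variable.
p_x = {x: sum(joint[(x, y)] for y in ys) for x in xs}
p_y = {y: sum(joint[(x, y)] for x in xs) for y in ys}

# Conditional P(x | y) = P(x, y) / P(y); it depends on both x and y.
p_x_given_y = {(x, y): joint[(x, y)] / p_y[y] for x in xs for y in ys}

# Total probability: P(x) = sum_y P(x | y) P(y) recovers the marginal.
p_x_total = {x: sum(p_x_given_y[(x, y)] * p_y[y] for y in ys) for x in xs}

# Independence would require P(x, y) = P(x) P(y) for every cell.
independent = all(
    abs(joint[(x, y)] - p_x[x] * p_y[y]) < 1e-12 for x in xs for y in ys
)

print(p_x, p_y, independent)
```

In this table the cells do not factor into the product of the marginals, so X and Y are dependent.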

Probability

- Expectation (expected value):
  - $E(x) = \int x\,f_X(x)\,dx$
  - $E(g(x)) = \int g(x)\,f_X(x)\,dx$
- E.g., mean, variance, moments, central moments
- The integral becomes a summation if x is discrete

Entropy

- Entropy (bits): $H = -\sum_{i=1}^{m} p_i \log_2 p_i$ (discrete), $H = -\int_{-\infty}^{\infty} p(x) \log_2 p(x)\,dx$ (continuous)
- Given the same mean and variance, the Gaussian has the maximum entropy: $H_{gau} = \tfrac{1}{2}\log_2(2\pi e\,\sigma^2)$ bits, equivalently $0.5 + \ln(\sqrt{2\pi}\,\sigma)$ nats
- The Dirac delta distribution has the lowest entropy:
  $\delta(x - a) = 0$ if $x \neq a$, $\infty$ if $x = a$ $\Rightarrow H_d = -\infty$
- For discrete x and an arbitrary function f(.): $H(f(x)) \le H(x)$
  - Processing never increases entropy for discrete variables
  - Because a probability cannot be split between two different values after processing
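The discrete entropy formula and the claim that processing never increases entropy can be illustrated with a short sketch. The distribution and the function `f` below are hypothetical examples, not taken from the lecture.

```python
import math

def entropy_bits(probs):
    """Discrete entropy H = -sum p_i log2 p_i, skipping zero-probability terms."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical uniform distribution over four values.
p_x = {1: 0.25, 2: 0.25, 3: 0.25, 4: 0.25}

def f(x):
    """An arbitrary deterministic processing step (here: parity)."""
    return x % 2

# Distribution of f(x): probabilities of inputs that map to the same output merge.
p_fx = {}
for x, p in p_x.items():
    p_fx[f(x)] = p_fx.get(f(x), 0.0) + p

h_x = entropy_bits(p_x.values())
h_fx = entropy_bits(p_fx.values())
print(h_x, h_fx)
```

Merging probabilities can only keep or lower the entropy, which is why `h_fx` never exceeds `h_x` for any choice of `f`.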

Relative Entropy

- Also called the Kullback-Leibler (K-L) distance
- A measure of 'distance' between 2 distributions:
  - $D_{KL}(p(x), q(x)) = \sum_{x} q(x) \log \dfrac{q(x)}{p(x)}$
  - or $D_{KL}(p(x), q(x)) = \int_{-\infty}^{\infty} q(x) \log \dfrac{q(x)}{p(x)}\,dx$
- $D_{KL}(\cdot) \ge 0$, and $D_{KL}(\cdot) = 0$ iff $p = q$
- Not necessarily symmetric, and may not satisfy the triangle inequality

Mutual Information

- Reduction in uncertainty about one variable due to knowledge of the other one, e.g., p(x) and q(y). These can be different variables and different distributions.
  - $I(p; q) = H(p) - H(p \mid q) = \sum_{x}\sum_{y} r(x, y) \log \dfrac{r(x, y)}{p(x)\,q(y)}$, where r(x, y) is the joint probability
  - With the definition of $D_{KL}$ above, this is $D_{KL}(p(x)\,q(y),\ r(x, y))$, the K-L distance between the joint r(x, y) and the product p(x) q(y)
- I(p; q) = 0 iff p, q are independent
- Symmetric: I(p; q) = I(q; p)
- But I(p; q) is not a metric
  - E.g., if p(x) = q(y), I(p; q) may not be 0
- H(p, q) = H(p) + H(q|p) = H(q) + H(p|q)

[Figure: Venn diagram relating H(p), H(q), H(p|q), H(q|p), H(p, q), and I(p; q)]
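The relative entropy and mutual information formulas above can be evaluated on small tables. The sketch below follows the slide's convention, where the second argument of `kl_distance` is the weighting distribution. All the numbers are hypothetical.

```python
import math

def entropy_bits(probs):
    return -sum(p * math.log2(p) for p in probs if p > 0)

def kl_distance(p, q):
    """Slide convention: D_KL(p, q) = sum_x q(x) log2(q(x) / p(x))."""
    return sum(qi * math.log2(qi / pi) for pi, qi in zip(p, q) if qi > 0)

# K-L distance is not symmetric in general (hypothetical distributions).
a, b = [0.5, 0.5], [0.9, 0.1]
d_ab, d_ba = kl_distance(a, b), kl_distance(b, a)

# Hypothetical joint r(x, y) with marginals p(x) and q(y).
r = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}
p = {x: sum(v for (xx, _), v in r.items() if xx == x) for x in (0, 1)}
q = {y: sum(v for (_, yy), v in r.items() if yy == y) for y in (0, 1)}

# I(p; q) = sum_x sum_y r(x, y) log2( r(x, y) / (p(x) q(y)) )
mutual_info = sum(v * math.log2(v / (p[x] * q[y])) for (x, y), v in r.items() if v > 0)

# Cross-check with H(p, q) = H(p) + H(q|p), i.e. I = H(p) + H(q) - H(p, q).
mutual_info_check = (
    entropy_bits(p.values()) + entropy_bits(q.values()) - entropy_bits(r.values())
)
print(d_ab, d_ba, mutual_info, mutual_info_check)
```

The two mutual information values agree, which is the identity that the Venn diagram of entropies expresses.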

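Random Number Generation

[Figure: densities P(u) and P(v) linked by a transform T from u to v, with the corresponding CDFs F(u) and F(v) and matched points u', v']

- What is the relationship between u and v? (See the hist equal demo.)
  - $F_V(v') = F_U(u')$
  - $v' = F_V^{-1}\left(F_U(u')\right)$
- Note that all random number generators in Matlab use rand() or randn() (i.e., the 'uniform' or 'normal' distribution)
- Remember to change the initial state of rand() and randn()
  - E.g., for a chosen initial state `ini_sta`: `rand('state', ini_sta); randn('state', ini_sta);`
- If there is no analytical form for the CDF, how do we generate samples?

Gaussian Distribution

- Gaussian distribution: $p(x) = \dfrac{1}{\sqrt{2\pi}\,\sigma}\, e^{-\frac{1}{2}\left(\frac{x - \mu}{\sigma}\right)^2}$
  - $\Pr[\,|x - \mu| \le \sigma\,] \cong 0.68$
  - $\Pr[\,|x - \mu| \le 2\sigma\,] \cong 0.95$
  - $\Pr[\,|x - \mu| \le 3\sigma\,] \cong 0.997$
  - Mahalanobis distance from x to $\mu$: $r = |x - \mu| / \sigma$
- Multivariate Gaussian: $p(\mathbf{x}) = \dfrac{1}{(2\pi)^{D/2}\,|\Sigma|^{1/2}}\, e^{-\frac{1}{2}(\mathbf{x} - \boldsymbol{\mu})^T \Sigma^{-1} (\mathbf{x} - \boldsymbol{\mu})}$
  - where $\mathbf{x}, \boldsymbol{\mu}$ are D-dimensional vectors and $\Sigma$ is a $D \times D$ matrix

[Figure: density contours in the (x1, x2) plane for a diagonal covariance $\Sigma = \begin{pmatrix} \sigma_1^2 & 0 \\ 0 & \sigma_2^2 \end{pmatrix}$ and for a general $\Sigma$]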
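The relation $v' = F_V^{-1}(F_U(u'))$ from the random number generation slide can be sketched for a case where the target CDF has an analytical inverse. The exponential target and its rate `lam` are assumptions made for this example. The fixed seed plays the role of the generator's initial state.

```python
import math
import random

rng = random.Random(2004)  # fixed seed: the analogue of setting the generator state

lam = 2.0  # hypothetical rate of the target exponential distribution

def inverse_cdf_exponential(u):
    """F_V(v) = 1 - exp(-lam * v), so F_V^{-1}(u) = -ln(1 - u) / lam."""
    return -math.log(1.0 - u) / lam

# u ~ uniform(0, 1) has F_U(u) = u, so v = F_V^{-1}(F_U(u)) = F_V^{-1}(u).
samples = [inverse_cdf_exponential(rng.random()) for _ in range(20000)]

sample_mean = sum(samples) / len(samples)
print(sample_mean, "expected about", 1.0 / lam)
```

When the CDF has no analytical form, one common option is to tabulate it numerically and invert it by interpolation. That variant is not shown here.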

Generate Samples Of Gaussian

- Standard normal: $x \sim N(x \mid 0, 1)$, $\mu = 0$, $\sigma = 1$ $\rightarrow$ $p(x) = \dfrac{1}{\sqrt{2\pi}}\, e^{-\frac{1}{2}x^2}$
- $F(x) = \int_{-\infty}^{x} p(t)\,dt = \dfrac{1}{2} + \dfrac{1}{2}\,\text{erf}\left(\dfrac{x}{\sqrt{2}}\right)$, where $\text{erf}(x) = \dfrac{2}{\sqrt{\pi}} \int_{0}^{x} e^{-t^2}\,dt$ (error function)
- Step 1: Generate uniform samples $u \sim \text{uniform}(0, 1)$, for which $F_U(u) = u$
- Step 2: $F_U(u) = u = F_X(x) = \dfrac{1}{2} + \dfrac{1}{2}\,\text{erf}\left(\dfrac{x}{\sqrt{2}}\right)$ $\rightarrow$ $x = F_X^{-1}(u) = \sqrt{2}\,\text{erfinv}(2u - 1)$
- Step 3: Generate $\mathbf{x} \sim N(\mathbf{x} \mid 0, I)$, with $p(\mathbf{x}) = \prod_{d=1}^{D} \dfrac{1}{\sqrt{2\pi}}\, e^{-\frac{1}{2}x_d^2}$
- Step 4: Scale and shift: $\mathbf{z} = \Sigma^{1/2}\,\mathbf{x} + \boldsymbol{\mu} \sim N(\boldsymbol{\mu}, \Sigma)$
- See the GauNorm demo

Random Generators In Matlab

| Function name | Purpose |
| --- | --- |
| rand | Generate uniformly distributed samples in the interval [0,1], 1-D |
| randn | Create samples with the standard normal distribution, N(0, 1), 1-D |
| random | Generate samples with a specified distribution, 1-D |
| normrnd | Generate normally distributed samples, 1-D. Other similar generators include betarnd, binornd, chi2rnd, exprnd, evrnd, frnd, gamrnd, geornd, hygernd, iwishrnd, lognrnd, nbinrnd, ncfrnd, nctrnd, ncx2rnd, poissrnd, raylrnd, trnd, unidrnd, unifrnd, wblrnd, wishrnd |
| mvnrnd | Generate multivariate normally distributed samples. A similar generator is mvtrnd, which creates a multivariate t distribution |

NOTE: All of these generators, except rand and randn, are included in the Statistics Toolbox. See the 'randtool' and 'disttool' demos in Matlab.
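Steps 1 to 4 of the Gaussian sampling slide can be sketched in Python with only the standard library. Two substitutions are assumptions of this sketch rather than the lecture's method. `statistics.NormalDist().inv_cdf(u)` stands in for $\sqrt{2}\,\text{erfinv}(2u - 1)$, and a hand-written 2x2 Cholesky factor stands in for $\Sigma^{1/2}$ (any matrix $L$ with $L L^T = \Sigma$ gives the same distribution). The mean and covariance values are hypothetical.

```python
import math
import random
from statistics import NormalDist

rng = random.Random(0)
std_normal = NormalDist(0.0, 1.0)

def standard_normal_sample():
    """Steps 1-2: draw u ~ uniform(0, 1), then x = F_X^{-1}(u)."""
    u = rng.random()
    while u == 0.0:  # inv_cdf needs 0 < u < 1
        u = rng.random()
    return std_normal.inv_cdf(u)

# Hypothetical target mean and covariance (2-D).
mu = [1.0, -2.0]
cov = [[4.0, 1.2], [1.2, 1.0]]

# Lower-triangular Cholesky factor L with L L^T = cov, used in place of Sigma^{1/2}.
l11 = math.sqrt(cov[0][0])
l21 = cov[1][0] / l11
l22 = math.sqrt(cov[1][1] - l21 * l21)

def sample_z():
    """Step 3: x ~ N(0, I); step 4: z = L x + mu."""
    x1, x2 = standard_normal_sample(), standard_normal_sample()
    return (mu[0] + l11 * x1, mu[1] + l21 * x1 + l22 * x2)

samples = [sample_z() for _ in range(20000)]
n = len(samples)
mean_est = [sum(s[d] for s in samples) / n for d in range(2)]
cov01_est = sum((s[0] - mean_est[0]) * (s[1] - mean_est[1]) for s in samples) / n
print(mean_est, cov01_est)
```

The estimated mean and off-diagonal covariance should land close to `mu` and `cov[0][1]`.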

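Gaussian Used In Regression

- Model the joint distribution: 2-D Gaussian
  $p(x, y) = \dfrac{1}{(2\pi)^{D/2}\,|\Sigma|^{1/2}} \exp\left( -\dfrac{1}{2} \begin{pmatrix} x - \mu_x \\ y - \mu_y \end{pmatrix}^T \begin{pmatrix} \Sigma_{xx} & \Sigma_{xy} \\ \Sigma_{yx} & \Sigma_{yy} \end{pmatrix}^{-1} \begin{pmatrix} x - \mu_x \\ y - \mu_y \end{pmatrix} \right)$
- Conditional distribution of y given x:
  $p(y \mid x) = N\left( y \,\middle|\, \mu_y + \Sigma_{yx}\Sigma_{xx}^{-1}(x - \mu_x),\ \Sigma_{yy} - \Sigma_{yx}\Sigma_{xx}^{-1}\Sigma_{xy} \right)$

[Figure: joint Gaussian over (x, y) with the conditional density p(y|x) at a given x]

Discriminant Analysis

- Given a feature vector $\mathbf{x}$
- Discriminant functions $g_k(\mathbf{x}),\ k = 1, 2$
- Assign $\mathbf{x}$ to class k if $g_k(\mathbf{x}) > g_i(\mathbf{x}),\ \forall i \neq k$
- Discriminant function: closeness
  - $g_k = 1 / \text{distance}(\mathbf{x}, C_k)$, where $C_k$ is the centroid of class k
- Discriminant function: likelihood
  - $g_k = \text{prob}(\mathbf{x} \mid C_k)$
  - $p(x \mid C_1) = N(\mu, \sigma^2)$, or a Gaussian mixture $\alpha N_1 + (1 - \alpha) N_2$

[Figure: two classes C1 and C2 in the (x(1), x(2)) feature plane, and the class likelihoods P(x|C1) and P(x|C2) plotted over x]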
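For scalar x and y, the conditional distribution on the regression slide reduces to a few arithmetic operations. The sketch below assumes hypothetical means and covariance entries. In the scalar case $\Sigma_{yx} = \Sigma_{xy}$, so a single variable `s_xy` is used for both.

```python
# Hypothetical parameters of a joint Gaussian over scalar x and y.
mu_x, mu_y = 1.0, 2.0
s_xx, s_xy, s_yy = 2.0, 1.5, 3.0  # variance of x, covariance, variance of y

def conditional_y_given_x(x):
    """Mean and variance of p(y | x) for the joint Gaussian above."""
    mean = mu_y + (s_xy / s_xx) * (x - mu_x)  # mu_y + S_yx S_xx^{-1} (x - mu_x)
    var = s_yy - s_xy * s_xy / s_xx           # S_yy - S_yx S_xx^{-1} S_xy
    return mean, var

cond_mean, cond_var = conditional_y_given_x(3.0)
print(cond_mean, cond_var)
```

The conditional mean is linear in x, which is what makes the joint Gaussian usable as a regression model. The conditional variance does not depend on x.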

Discriminant Analysis

- Example of discriminant functions: linear and quadratic discriminants
  - Linear: $g(\mathbf{x}) = a x_1 + b x_2$
  - Quadratic: $g(\mathbf{x}) = a x_1^2 + b x_2^2 + c x_1 x_2$
- The decision boundary separates the region $g(\mathbf{x}) > 0$ from the region $g(\mathbf{x}) < 0$

[Figure: two scatter plots of '+' and 'o' samples in the (x(1), x(2)) plane, one with a linear decision boundary and one with a quadratic decision boundary]

Decision Tree: Non-parametric

- Find the most opportunistic dimension in each step
- Selection criterion
  - Entropy
  - Variance before / after
- Stop criterion
  - Avoid overfitting
- See the 'classification' demo in the Statistics Toolbox

[Figure: '+' and 'o' samples in the (x(1), x(2)) plane split by thresholds TH1 and TH2, with a third axis x(3); the tree first tests x(2) > TH1 (yes: class C+), otherwise tests x(1) > TH2, with leaves Co and C+]
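One step of the decision-tree procedure, choosing a dimension and threshold by entropy, can be sketched as follows. The data points, the labels, and the midpoint rule for candidate thresholds are assumptions of this sketch. The lecture's own demo may choose splits differently.

```python
import math

def entropy_bits(labels):
    """Entropy of the class labels in one node."""
    n = len(labels)
    counts = {}
    for lab in labels:
        counts[lab] = counts.get(lab, 0) + 1
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

def best_split(points, labels):
    """Return (entropy_after, dimension, threshold) with the lowest weighted entropy."""
    n, best = len(points), None
    for d in range(len(points[0])):
        values = sorted({p[d] for p in points})
        for lo, hi in zip(values, values[1:]):
            th = (lo + hi) / 2.0  # candidate threshold between neighboring values
            left = [lab for p, lab in zip(points, labels) if p[d] <= th]
            right = [lab for p, lab in zip(points, labels) if p[d] > th]
            after = (len(left) * entropy_bits(left) + len(right) * entropy_bits(right)) / n
            if best is None or after < best[0]:
                best = (after, d, th)
    return best

# Hypothetical 2-D samples: '+' has large x(2), 'o' has small x(2).
points = [(1.0, 5.0), (2.0, 6.0), (3.0, 5.5), (1.5, 1.0), (2.5, 2.0), (3.5, 1.5)]
labels = ["+", "+", "+", "o", "o", "o"]

entropy_before = entropy_bits(labels)
entropy_after, dim, threshold = best_split(points, labels)
print(entropy_before, entropy_after, dim, threshold)
```

Here the second coordinate separates the classes perfectly, so the entropy drops from 1 bit to 0. A stop criterion, such as a minimum drop in entropy, would decide whether to keep splitting the resulting nodes.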
