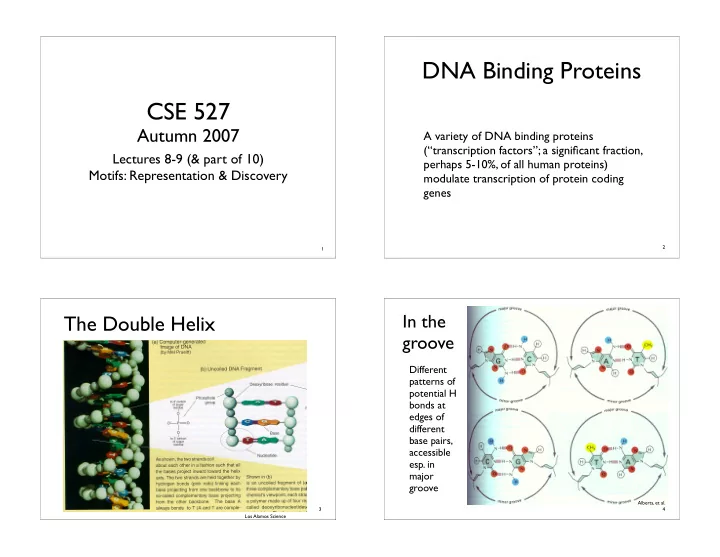

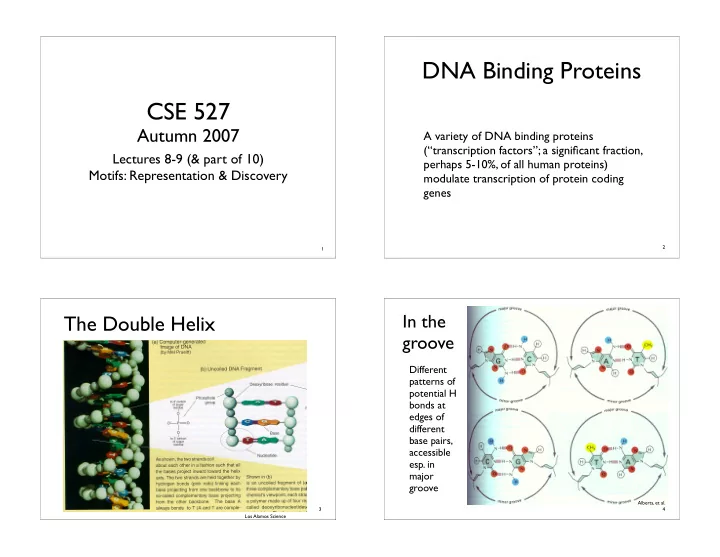

CSE 527, Autumn 2007. Lectures 8-9 (& part of 10). Motifs: Representation & Discovery

DNA Binding Proteins

A variety of DNA binding proteins (“transcription factors”; a significant fraction, perhaps 5-10%, of all human proteins) modulate transcription of protein coding genes.

The Double Helix

Figure credit: Los Alamos Science

In the groove

Different patterns of potential H bonds at edges of different base pairs, accessible esp. in major groove.

Figure credit: Alberts, et al.

Helix-Turn-Helix DNA Binding Motif

Figure credit: Alberts, et al.

H-T-H Dimers

• Bind 2 DNA patches, ~1 turn apart
• Increases both specificity and affinity

Figure credit: Alberts, et al.

Leucine Zipper Motif

Homo-/hetero-dimers and combinatorial control

Figure credit: Alberts, et al.

Zinc Finger Motif

Figure credit: Alberts, et al.

Some Protein/DNA interactions well-understood

But the overall DNA binding “code” still defies prediction

Bacterial Met Repressor: a beta-sheet DNA binding domain

Negative feedback loop: high Met level ⇒ repress Met synthesis genes

Figure label: SAM (Met derivative). Figure credit: Alberts, et al.

DNA binding site summary

• complex “code”
• short patches (6-8 bp)
• often near each other (1 turn = 10 bp)
• often reverse-complements
• not perfect matches

Sequence Motifs

Last few slides described structural motifs in proteins.

Equally interesting are the DNA sequence motifs to which these proteins bind - e.g., one leucine zipper dimer might bind (with varying affinities) to dozens or hundreds of similar sequences.

E. coli Promoters

“TATA Box” ~10bp upstream of transcription start

Example sites: TACGAT, TAAAAT, TATACT, GATAAT, TATGAT, TATGTT

How to define it?

• Consensus is TATAAT, BUT all differ from it
• Allow k mismatches?
• Equally weighted?
• Wildcards like R, Y? ({A,G}, {C,T}, resp.)

E. coli Promoters

• “TATA Box” - consensus TATAAT ~10bp upstream of transcription start
• Not exact: of 168 studied (mid 80’s)
  - nearly all had 2/3 of TAxyzT
  - 80-90% had all 3
  - 50% agreed in each of x,y,z
  - no perfect match
• Other common features at -35, etc.

TATA Box Frequencies (percent of sites; each column sums to about 100)

| base \ pos | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| A | 2 | 95 | 26 | 59 | 51 | 1 |
| C | 9 | 2 | 14 | 13 | 20 | 3 |
| G | 10 | 1 | 16 | 15 | 13 | 0 |
| T | 79 | 3 | 44 | 13 | 17 | 96 |
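To make the "allow k mismatches?" question concrete, here is a minimal Python sketch that counts mismatches between the consensus TATAAT and the six example sites listed on the promoter slide. The function names `mismatches` and `matches_within` are illustrative choices, not part of the lecture.

```python
CONSENSUS = "TATAAT"
# The six example sites shown next to the consensus on the E. coli promoter slide.
VARIANTS = ["TACGAT", "TAAAAT", "TATACT", "GATAAT", "TATGAT", "TATGTT"]

def mismatches(site, consensus=CONSENSUS):
    """Number of positions where site differs from the consensus (Hamming distance)."""
    return sum(a != b for a, b in zip(site, consensus))

def matches_within(site, k, consensus=CONSENSUS):
    """True if site has the consensus length and at most k mismatches."""
    return len(site) == len(consensus) and mismatches(site, consensus) <= k

# Mismatch count for every example site.
distances = {site: mismatches(site) for site in VARIANTS}
# Sites accepted when only one mismatch is allowed.
accepted_k1 = [site for site in VARIANTS if matches_within(site, 1)]
```

With k = 1 two of the six sites are rejected, and with k = 2 all are accepted, but every position then counts equally, which is the weakness the frequency table points at: positions 2 and 6 are far more conserved than positions 3-5.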

TATA Scores

| base \ pos | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| A | -36 | 19 | 1 | 12 | 10 | -46 |
| C | -15 | -36 | -8 | -9 | -3 | -31 |
| G | -13 | -46 | -6 | -7 | -9 | -46 (?) |
| T | 17 | -31 | 8 | -9 | -6 | 19 |

Scanning for TATA

[Figure: the score matrix slid along a DNA sequence, one window at a time]

Stormo, Ann. Rev. Biophys. Biophys Chem, 17, 1988, 241-263

Score Distribution (Simulated)

[Figure: histogram of simulated scores; x-axis from -150 to 90, y-axis counts from 0 to 3500]

Weight Matrices: Statistics

Assume:

• $f_{b,i}$ = frequency of base b in position i in TATA
• $f_b$ = frequency of base b in all sequences

Log likelihood ratio, given $S = B_1 B_2 \ldots B_6$:

$$\log \frac{P(S \mid \text{"promoter"})}{P(S \mid \text{"nonpromoter"})} = \log \frac{\prod_{i=1}^{6} f_{B_i,i}}{\prod_{i=1}^{6} f_{B_i}} = \sum_{i=1}^{6} \log \frac{f_{B_i,i}}{f_{B_i}}$$

Assumes independence
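Scanning with the score table amounts to summing one table entry per position for every length-6 window. The sketch below uses the TATA score table as given; the input sequence `ACGTATAATGC` and the names `window_score` and `scan` are made up for illustration.

```python
# Scores per base and position, copied from the TATA Scores table.
TATA_SCORES = {
    "A": [-36, 19, 1, 12, 10, -46],
    "C": [-15, -36, -8, -9, -3, -31],
    "G": [-13, -46, -6, -7, -9, -46],  # the last entry is marked "(?)" in the source
    "T": [17, -31, 8, -9, -6, 19],
}

def window_score(window):
    """Sum the per-position scores of one window (columns treated as independent)."""
    return sum(TATA_SCORES[base][i] for i, base in enumerate(window))

def scan(seq, width=6):
    """Return (offset, score) for every window of the given width in seq."""
    return [(i, window_score(seq[i:i + width])) for i in range(len(seq) - width + 1)]

# Hypothetical input sequence containing one exact copy of the consensus.
hits = scan("ACGTATAATGC")
best = max(hits, key=lambda hit: hit[1])
```

Because each column has a single highest-scoring base, the consensus TATAAT is the only window that reaches the maximum score of 85; in practice a window would be reported when its score exceeds some chosen threshold.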

Score Distribution (Simulated)

[Figure: histogram of simulated scores; x-axis from -150 to 90, y-axis counts from 0 to 3500]

Neyman-Pearson

Given a sample $x_1, x_2, \ldots, x_n$ from a distribution $f(\ldots \mid \theta)$ with parameter $\theta$, want to test hypothesis $\theta = \theta_1$ vs $\theta = \theta_2$.

Might as well look at likelihood ratio:

$$\frac{f(x_1, x_2, \ldots, x_n \mid \theta_1)}{f(x_1, x_2, \ldots, x_n \mid \theta_2)} > \tau$$

(or log likelihood ratio)

Weight Matrices: Chemistry

Experiments show ~80% correlation of log likelihood weight matrix scores to measured binding energy of RNA polymerase to variations on TATAAT consensus [Stormo & Fields]

What’s best WMM?

Given 20 sequences $s_1, s_2, \ldots, s_k$ of length 8, assumed to be generated at random according to a WMM defined by 8 x (4-1) parameters $\theta$, what’s the best $\theta$?

E.g., what’s MLE for $\theta$ given data $s_1, s_2, \ldots, s_k$?

Answer: count frequencies per position.
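"Count frequencies per position" can be written in a few lines. The sketch below is illustrative only: it applies the counting rule to the six length-6 TATA variants from the E. coli promoter slide rather than to 20 sequences of length 8, and `wmm_mle` is a hypothetical helper name.

```python
from collections import Counter

def wmm_mle(sites, alphabet="ACGT"):
    """Maximum likelihood WMM: per-position relative frequency of each base."""
    n = len(sites)
    model = []
    for column in zip(*sites):  # one tuple of bases per motif position
        counts = Counter(column)
        model.append({base: counts[base] / n for base in alphabet})
    return model

# Aligned example sites (the TATA variants from the promoter slide).
sites = ["TACGAT", "TAAAAT", "TATACT", "GATAAT", "TATGAT", "TATGTT"]
freqs = wmm_mle(sites)
```

Each column holds four frequencies that sum to 1, which is why a column contributes only 4-1 = 3 free parameters.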

Another WMM example

8 Sequences: ATG, ATG, ATG, ATG, ATG, GTG, GTG, TTG

| Freq. | Col 1 | Col 2 | Col 3 |
|---|---|---|---|
| A | .625 | 0 | 0 |
| C | 0 | 0 | 0 |
| G | .250 | 0 | 1 |
| T | .125 | 1 | 0 |

Log-Likelihood Ratio:

$$\log_2 \frac{f_{x_i,i}}{f_{x_i}}, \qquad f_{x_i} = \frac{1}{4}$$

| LLR | Col 1 | Col 2 | Col 3 |
|---|---|---|---|
| A | 1.32 | -∞ | -∞ |
| C | -∞ | -∞ | -∞ |
| G | 0 | -∞ | 2.00 |
| T | -1.00 | 2.00 | -∞ |

Non-uniform Background

• E. coli - DNA approximately 25% A, C, G, T
• M. jannaschii - 68% A-T, 32% G-C

LLR from previous example, assuming $f_A = f_T = 3/8$, $f_C = f_G = 1/8$:

| LLR | Col 1 | Col 2 | Col 3 |
|---|---|---|---|
| A | .74 | -∞ | -∞ |
| C | -∞ | -∞ | -∞ |
| G | 1.00 | -∞ | 3.00 |
| T | -1.58 | 1.42 | -∞ |

e.g., G in col 3 is 8 x more likely via WMM than background, so ($\log_2$) score = 3 (bits).

WMM: How “Informative”? Mean score of site vs bkg?

For any fixed length sequence x, let

• P(x) = Prob. of x according to WMM
• Q(x) = Prob. of x according to background

Relative Entropy:

$$H(P \| Q) = \sum_{x \in \Omega} P(x) \log_2 \frac{P(x)}{Q(x)}$$

H(P||Q) is expected log likelihood score of a sequence randomly chosen from WMM; -H(Q||P) is expected score of Background.

[Figure: score axis with -H(Q||P) and H(P||Q) marked]

Relative Entropy

AKA Kullback-Leibler Distance/Divergence, AKA Information Content

Given distributions P, Q:

$$H(P \| Q) = \sum_{x \in \Omega} P(x) \log \frac{P(x)}{Q(x)} \ge 0$$

Notes:

• Let $P(x) \log \frac{P(x)}{Q(x)} = 0$ if $P(x) = 0$ [since $\lim_{y \to 0} y \log y = 0$]
• Undefined if $0 = Q(x) < P(x)$
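The numbers in this example can be checked with a short Python sketch. It rebuilds the frequency table from the 8 sequences, computes the log2 likelihood ratios against both backgrounds, and evaluates H(P||Q) directly from the definition by summing over all 4^3 length-3 sequences, using the 0 log 0 = 0 convention. All function and variable names are illustrative.

```python
import math
from itertools import product

SITES = ["ATG"] * 5 + ["GTG"] * 2 + ["TTG"]
UNIFORM = {b: 0.25 for b in "ACGT"}
AT_RICH = {"A": 3 / 8, "C": 1 / 8, "G": 1 / 8, "T": 3 / 8}

def frequencies(sites):
    """Per-position base frequencies of the aligned sites."""
    n = len(sites)
    return [{b: col.count(b) / n for b in "ACGT"} for col in zip(*sites)]

def llr_table(freqs, bg):
    """log2(f_{b,i} / f_b) per position; -inf where the base was never observed."""
    return [{b: math.log2(f[b] / bg[b]) if f[b] > 0 else -math.inf for b in "ACGT"}
            for f in freqs]

def relative_entropy(freqs, bg):
    """H(P||Q) in bits, summed over every sequence x of the motif length."""
    total = 0.0
    for x in product("ACGT", repeat=len(freqs)):
        p = math.prod(f[b] for f, b in zip(freqs, x))  # P(x) under the WMM
        q = math.prod(bg[b] for b in x)                # Q(x) under the background
        if p > 0:                                      # 0 log 0 is taken as 0
            total += p * math.log2(p / q)
    return total

freqs = frequencies(SITES)
llr_uniform = llr_table(freqs, UNIFORM)
llr_at_rich = llr_table(freqs, AT_RICH)
h_uniform = relative_entropy(freqs, UNIFORM)
h_at_rich = relative_entropy(freqs, AT_RICH)
```

Enumerating all sequences is only practical for short motifs; it is used here because it follows the definition literally.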

WMM Scores vs Relative Entropy

[Figure: histogram of simulated scores; x-axis from -150 to 90, y-axis counts from 0 to 3500; annotated with H(P||Q) = 5.0 and -H(Q||P) = -6.8]

For WMM, you can show (based on the assumption of independence between columns), that:

$$H(P \| Q) = \sum_i H(P_i \| Q_i)$$

where $P_i$ and $Q_i$ are the WMM/background distributions for column i.

WMM Example, cont.

| Freq. | Col 1 | Col 2 | Col 3 |
|---|---|---|---|
| A | .625 | 0 | 0 |
| C | 0 | 0 | 0 |
| G | .250 | 0 | 1 |
| T | .125 | 1 | 0 |

Uniform

| LLR | Col 1 | Col 2 | Col 3 | Total |
|---|---|---|---|---|
| A | 1.32 | -∞ | -∞ | |
| C | -∞ | -∞ | -∞ | |
| G | 0 | -∞ | 2.00 | |
| T | -1.00 | 2.00 | -∞ | |
| RelEnt | .70 | 2.00 | 2.00 | 4.70 |

Non-uniform

| LLR | Col 1 | Col 2 | Col 3 | Total |
|---|---|---|---|---|
| A | .74 | -∞ | -∞ | |
| C | -∞ | -∞ | -∞ | |
| G | 1.00 | -∞ | 3.00 | |
| T | -1.58 | 1.42 | -∞ | |
| RelEnt | .51 | 1.42 | 3.00 | 4.93 |

Pseudocounts

Are the -∞’s a problem?

• Certain that a given residue never occurs in a given position? Then -∞ just right
• Else, it may be a small-sample artifact

Typical fix: add a pseudocount to each observed count: a small constant (e.g., .5, 1)

Sounds ad hoc; there is a Bayesian justification
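A minimal sketch of the pseudocount fix, applied to the same 8 sequences with a uniform background. The helper name `smoothed_llr` and the choice of adding the same pseudocount to all four bases (so the column total grows by 4 times the pseudocount) are assumptions made for illustration.

```python
import math

SITES = ["ATG"] * 5 + ["GTG"] * 2 + ["TTG"]

def smoothed_llr(sites, pseudocount=1.0, bg=None):
    """log2 likelihood ratios after adding a pseudocount to every observed count."""
    bg = bg or {b: 0.25 for b in "ACGT"}
    n = len(sites)
    denom = n + 4 * pseudocount  # each of the 4 bases receives one pseudocount
    table = []
    for col in zip(*sites):
        table.append({
            b: math.log2(((col.count(b) + pseudocount) / denom) / bg[b])
            for b in "ACGT"
        })
    return table

llr = smoothed_llr(SITES, pseudocount=1.0)
# True when no entry of the table is -inf any more.
all_finite = all(math.isfinite(v) for col in llr for v in col.values())
```

With a pseudocount of 1, the frequency of A in column 1 becomes (5+1)/(8+4) = 0.5, and the never-observed C gets 1/12 instead of 0, so its score is strongly negative but finite.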

WMM Summary

• Weight Matrix Model (aka Position Specific Scoring Matrix, PSSM, “possum”, 0th order Markov models)
• Simple statistical model assuming independence between adjacent positions
• To build: count (+ pseudocount) letter frequency per position, log likelihood ratio to background
• To scan: add LLRs per position, compare to threshold
• Generalizations to higher order models (i.e., letter frequency per position, conditional on neighbor) also possible, with enough training data

How-to Questions

Given aligned motif instances, build model?
- Frequency counts (above, maybe with pseudocounts)

Given a model, find (probable) instances
- Scanning, as above

Given unaligned strings thought to contain a motif, find it? (e.g., upstream regions for co-expressed genes from a microarray experiment)
- Hard... rest of lecture.

Motif Discovery

Unfortunately, finding a site of max relative entropy in a set of unaligned sequences is NP-hard [Akutsu]

Motif Discovery: 4 example approaches

• Brute Force
• Greedy search
• Expectation Maximization
• Gibbs sampler

Brute Force

Input:
• Seqs $s_1, s_2, \ldots, s_k$ (len ~n, say), each w/ one instance of an unknown length l motif

Algorithm:
• create singleton set with each length l subsequence of each $s_1, s_2, \ldots, s_k$ (~$nk$ sets)
• for each set, add each possible length l subsequence not already present (~$n^2 k(k-1)$ sets)
• repeat until all have k sequences (~$n^k k!$ sets)
• compute relative entropy of each; pick best

problem: astronomically sloooow

Greedy Best-First Approach [Hertz & Stormo]

Input:
• Sequence $s_1, s_2, \ldots, s_k$; motif length l; “breadth” d

Algorithm:
• create singleton set with each length l subsequence of each $s_1, s_2, \ldots, s_k$
• for each set, add each possible length l subsequence not already present
• compute relative entropy of each
• discard all but d best
• repeat until all have k sequences

usual “greedy” problems

Expectation Maximization [MEME, Bailey & Elkan, 1995]

Input (as above):
Sequence $s_1, s_2, \ldots, s_k$; motif length l; background model; again assume one instance per sequence (variants possible)

Algorithm: EM
• Visible data: the sequences
• Hidden data: where’s the motif

$$Y_{i,j} = \begin{cases} 1 & \text{if motif in sequence } i \text{ begins at position } j \\ 0 & \text{otherwise} \end{cases}$$

• Parameters $\theta$: The WMM

MEME Outline

Typical EM algorithm:
• Parameters $\theta^t$ at $t$-th iteration, used to estimate where the motif instances are (the hidden variables)
• Use those estimates to re-estimate the parameters $\theta$ to maximize likelihood of observed data, giving $\theta^{t+1}$
• Repeat

Key: given a few good matches to best motif, expect to pick out more
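The greedy best-first steps can be sketched as a small beam search in Python. This is a sketch under stated assumptions, not the Hertz & Stormo implementation: it assumes a uniform background, at most one site per sequence in each set, no pseudocounts, and arbitrary tie-breaking among equally scored sets. The three input sequences are made up, each with one planted copy of TATAAT.

```python
import math
from collections import Counter

def relative_entropy(sites, background=0.25):
    """Relative entropy (bits) of the WMM built from aligned sites, uniform background."""
    total = 0.0
    for column in zip(*sites):
        for count in Counter(column).values():  # only observed bases, so no 0 log 0 terms
            p = count / len(sites)
            total += p * math.log2(p / background)
    return total

def greedy_motif_search(seqs, l, d):
    """Keep the d best site sets while growing them by one sequence per round."""
    def score(cand):
        return relative_entropy([seqs[i][j:j + l] for i, j in cand])

    # A candidate is a frozenset of (sequence index, offset) pairs.
    candidates = {frozenset([(i, j)])
                  for i, s in enumerate(seqs) for j in range(len(s) - l + 1)}
    for _round in range(len(seqs) - 1):
        extended = set()
        for cand in candidates:
            used = {i for i, _offset in cand}
            for i, s in enumerate(seqs):
                if i in used:
                    continue  # one instance per sequence
                for j in range(len(s) - l + 1):
                    extended.add(cand | {(i, j)})
        # Discard all but the d best-scoring sets.
        candidates = set(sorted(extended, key=score, reverse=True)[:d])
    best = max(candidates, key=score)
    return sorted(best), score(best)

# Hypothetical input: TATAAT planted at offsets 3, 0 and 6.
seqs = ["GCGTATAATCCA", "TATAATGGCAGC", "CAGGCTTATAAT"]
sites, entropy = greedy_motif_search(seqs, l=6, d=10)
```

On this toy input the planted sites are recovered with the maximum possible relative entropy of 12 bits (2 bits per perfectly conserved column). With a small d or noisy sites the correct set can be discarded early, which is the usual greedy failure mode the slide alludes to.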
