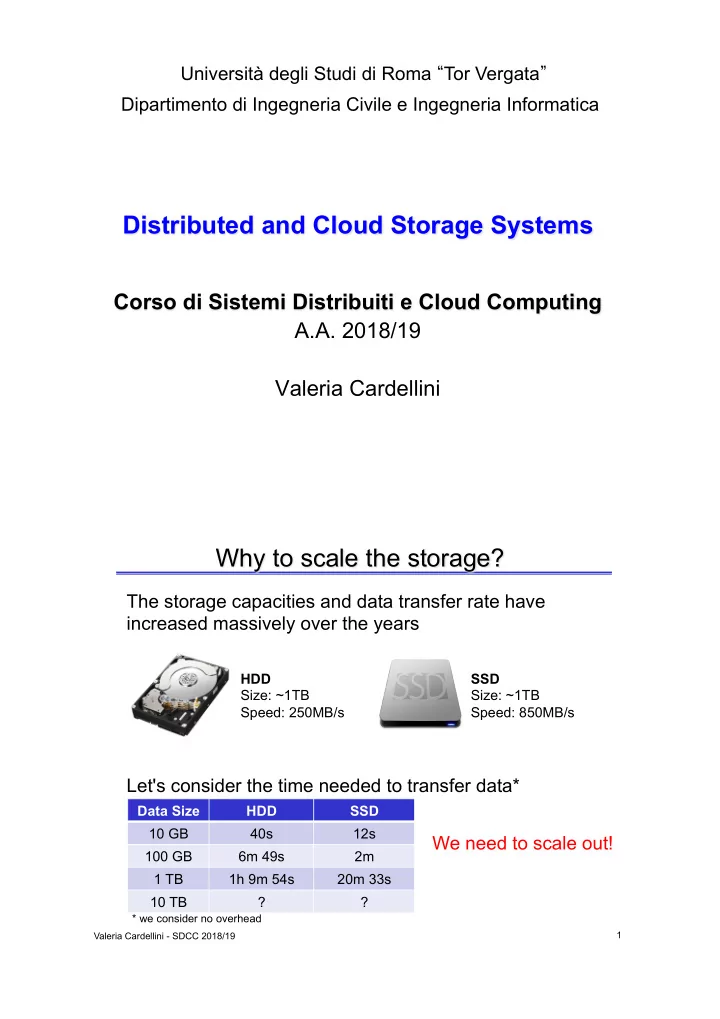

Università degli Studi di Roma "Tor Vergata"
Department of Civil Engineering and Computer Science Engineering

Distributed and Cloud Storage Systems
Distributed Systems and Cloud Computing course (Sistemi Distribuiti e Cloud Computing), academic year 2018/19
Valeria Cardellini

Why scale the storage?
• Storage capacities and data transfer rates have increased massively over the years

|       | HDD      | SSD      |
|-------|----------|----------|
| Size  | ~1 TB    | ~1 TB    |
| Speed | 250 MB/s | 850 MB/s |

• Let's consider the time needed to transfer data (assuming no overhead)

| Data size | HDD       | SSD     |
|-----------|-----------|---------|
| 10 GB     | 40s       | 12s     |
| 100 GB    | 6m 49s    | 2m      |
| 1 TB      | 1h 9m 54s | 20m 33s |
| 10 TB     | ?         | ?       |

• We need to scale out!

Valeria Cardellini - SDCC 2018/19
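The transfer times above can be reproduced with a few lines of arithmetic. The sketch below assumes binary units (1 GB = 1024 MB, 1 TB = 1024 GB), which is what the table values appear to use, and truncates to whole seconds; the function names are illustrative.

```python
def transfer_time_seconds(size_mb: float, rate_mb_per_s: float) -> float:
    """Time to move size_mb megabytes at a constant rate, with no overhead."""
    return size_mb / rate_mb_per_s


def format_duration(seconds: float) -> str:
    """Render a duration as e.g. '1h 9m 54s', truncating fractional seconds."""
    total = int(seconds)
    hours, rem = divmod(total, 3600)
    minutes, secs = divmod(rem, 60)
    parts = []
    if hours:
        parts.append(f"{hours}h")
    if hours or minutes:
        parts.append(f"{minutes}m")
    parts.append(f"{secs}s")
    return " ".join(parts)


GB = 1024          # MB per GB (assumed binary units)
TB = 1024 * GB     # MB per TB

if __name__ == "__main__":
    for label, size in [("10 GB", 10 * GB), ("100 GB", 100 * GB),
                        ("1 TB", TB), ("10 TB", 10 * TB)]:
        hdd = format_duration(transfer_time_seconds(size, 250))
        ssd = format_duration(transfer_time_seconds(size, 850))
        print(f"{label:>7}  HDD {hdd:<12} SSD {ssd}")
```

Under the same assumptions, a 10 TB transfer takes more than 11 hours on the HDD and more than 3 hours on the SSD, which is the motivation for spreading data over many nodes.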

General principles for scalable data storage
• Scalability and high performance
  – To cope with the continuous growth of the data to store
  – Use multiple storage nodes
• Ability to run on commodity hardware
  – Hardware failures are the norm rather than the exception
• Reliability and fault tolerance
  – Transparent data replication
• Availability
  – Data should be available when needed
  – CAP theorem: trade-off with consistency

Solutions for scalable data storage
Various forms of scalable data storage:
• Distributed file systems
  – Manage (large) files on multiple nodes
  – Examples: Google File System, Hadoop Distributed File System
• NoSQL databases (more generally, NoSQL data stores)
  – Simple and flexible non-relational data models
  – Horizontal scalability and fault tolerance
  – Key-value, column family, document, and graph stores
  – Examples: BigTable, Cassandra, MongoDB, HBase, DynamoDB
  – Existing time series databases are built on top of NoSQL databases (examples: InfluxDB, KairosDB)
• NewSQL databases
  – Add horizontal scalability and fault tolerance to the relational model
  – Examples: VoltDB, Google Spanner

Scalable data storage solutions
[Figure: the whole picture of the different solutions]

Data storage in the Cloud
• Main goals:
  – Massive scaling "on demand" (elasticity)
  – Data availability
  – Simplified application development and deployment
• Some storage systems are offered only as Cloud services
  – Either directly (e.g., Amazon DynamoDB, Google Bigtable, Google Cloud Storage) or as part of a programming environment
• Other proprietary systems are used only internally (e.g., Dynamo, GFS)

Distributed file systems
• Represent the primary support for data management
• Manage data storage across a network of machines
• Provide an interface for storing information as files and later accessing those files for read and write operations
  – Using the traditional file system interface
• Several solutions with different design choices
  – GFS, Apache HDFS (open-source clone of GFS): designed for batch applications with large files
  – Alluxio: in-memory (high-throughput) storage system
  – Lustre, Ceph: designed for high performance

Where to store data?
• Memory I/O vs. disk I/O
• See "Latency numbers every programmer should know" http://bit.ly/2pZXIU9

Case study: Google File System
• Distributed fault-tolerant file system implemented in user space
• Manages (very) large files: usually multi-GB
• Divide et impera (divide and conquer): each file is divided into fixed-size chunks
• Chunks:
  – Have a fixed size
  – Are transparent to users
  – Are each stored as a plain file
• Files follow the write-once, read-many-times pattern
  – Efficient append operation: appends data at the end of a file atomically at least once, even in the presence of concurrent operations (minimal synchronization overhead)
• Fault tolerance and high availability through chunk replication

S. Ghemawat, H. Gobioff, S.-T. Leung, "The Google File System", ACM SOSP '03.

GFS operation environment
[Figure: GFS operation environment]
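A minimal sketch of the fixed-size chunking idea: a byte range in a file is translated into per-chunk pieces. The 64 MB chunk size is an assumption taken from the chunk-size figures quoted for GFS, and `split_range` is an illustrative helper, not part of any GFS API.

```python
CHUNK_SIZE = 64 * 1024 * 1024  # assumed chunk size: 64 MB


def split_range(offset: int, length: int):
    """Split the file byte range [offset, offset + length) into per-chunk pieces.

    Returns a list of (chunk_index, offset_within_chunk, num_bytes) tuples.
    """
    pieces = []
    end = offset + length
    while offset < end:
        index = offset // CHUNK_SIZE          # which chunk holds this byte
        start_in_chunk = offset % CHUNK_SIZE  # position inside that chunk
        n = min(CHUNK_SIZE - start_in_chunk, end - offset)
        pieces.append((index, start_in_chunk, n))
        offset += n
    return pieces
```

A read that straddles a chunk boundary becomes two pieces, one per chunk; this is the translation a client library has to perform before it can ask for a specific chunk.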

GFS: architecture
• Master
  – Single, centralized entity (this simplifies the design)
  – Manages file metadata (stored in memory)
    • Metadata: access control information, mapping from files to chunks, chunk locations
  – Does not store data (i.e., chunks)
  – Manages chunks: creation, replication, load balancing, deletion

GFS: architecture
• Chunkservers (100s to 1000s)
  – Store chunks as files
  – Spread across cluster racks
• Clients
  – Issue control (metadata) requests to the GFS master
  – Issue data requests directly to GFS chunkservers
  – Cache metadata but not data (this simplifies the design)
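The division of labor between master, chunkservers, and clients can be sketched with three small in-process classes. This is a toy model under stated assumptions: all class and method names are hypothetical, there is no networking, and "closest replica" is approximated by taking the first location in the list.

```python
from dataclasses import dataclass, field


@dataclass
class Master:
    # (file name, chunk index) -> (chunk ID, list of chunkserver names)
    chunk_table: dict
    lookups: int = 0  # counts metadata requests, for illustration only

    def locate(self, filename: str, index: int):
        self.lookups += 1
        return self.chunk_table[(filename, index)]


@dataclass
class Chunkserver:
    chunks: dict  # chunk ID -> chunk contents (bytes)

    def read(self, chunk_id: str, start: int, length: int) -> bytes:
        return self.chunks[chunk_id][start:start + length]


@dataclass
class Client:
    master: Master
    servers: dict                               # chunkserver name -> Chunkserver
    cache: dict = field(default_factory=dict)   # metadata cache only, no data

    def read(self, filename: str, index: int, start: int, length: int) -> bytes:
        key = (filename, index)
        if key not in self.cache:               # control request to the master
            self.cache[key] = self.master.locate(filename, index)
        chunk_id, locations = self.cache[key]
        server = self.servers[locations[0]]     # stand-in for "closest" replica
        return server.read(chunk_id, start, length)  # data request
```

Repeated reads of the same chunk hit the client's metadata cache, so the master sees one lookup per chunk rather than one per read, while the data bytes never pass through the master.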

GFS: metadata
• The master stores three major types of metadata:
  – File and chunk namespace (directory hierarchy)
  – Mapping from files to chunks
  – Current locations of chunks
• Metadata are stored in memory (less than 64 B per chunk)
  – Pro: fast; easy and efficient to scan the entire state
  – Con: the number of chunks is limited by the amount of memory of the master
  – "The cost of adding extra memory to the master is a small price to pay for the simplicity, reliability, performance, and flexibility gained"
• The master also keeps an operation log with a historical record of metadata changes
  – Persistent on local disk
  – Replicated
  – Checkpointed for fast recovery

GFS: chunk size
• Chunk size is either 64 MB or 128 MB
  – Much larger than typical file system block sizes
• Why? A large chunk size reduces:
  – Number of interactions between client and master
  – Size of metadata stored on the master
  – Network overhead (a client can keep a persistent TCP connection to the chunkserver over an extended period of time)
• Potential disadvantage
  – Chunks of small files may become hot spots
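A back-of-the-envelope estimate shows why a large chunk size keeps the master's metadata small. The sketch assumes 64 MB chunks and takes 64 B per chunk as an upper bound; it counts only per-chunk metadata (not the namespace) and ignores replication. The function names are illustrative.

```python
CHUNK_SIZE_BYTES = 64 * 2**20      # assumed chunk size: 64 MB
METADATA_PER_CHUNK_BYTES = 64      # per-chunk metadata, taken as an upper bound


def num_chunks(total_bytes: int, chunk_size: int = CHUNK_SIZE_BYTES) -> int:
    """Number of chunks needed to hold total_bytes (ceiling division)."""
    return -(-total_bytes // chunk_size)


def master_metadata_bytes(total_bytes: int, chunk_size: int = CHUNK_SIZE_BYTES) -> int:
    """Upper bound on the master memory used for per-chunk metadata."""
    return num_chunks(total_bytes, chunk_size) * METADATA_PER_CHUNK_BYTES


if __name__ == "__main__":
    one_pib = 2**50
    print(master_metadata_bytes(one_pib) / 2**30, "GiB with 64 MB chunks")
    print(master_metadata_bytes(one_pib, 4096) / 2**40, "TiB with 4 KB blocks")
```

With these numbers, 1 PiB of file data needs about 1 GiB of per-chunk metadata, which fits in the memory of a single machine; with 4 KB blocks the same bookkeeping would need 16 TiB.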

GFS: fault-tolerance and replication
• The master replicates each chunk on several chunkservers and maintains the replication level over time
  – At least 3 replicas on different chunkservers
  – Replication based on a primary-backup scheme
  – Replication degree > 3 for highly requested chunks
• Multi-level placement of replicas
  – Different machines, local rack: reliability and availability
  – Different machines, different racks: aggregated bandwidth
• Data integrity
  – Each chunk is divided into 64 KB blocks, with a 32-bit checksum for each block
  – Checksums are kept in memory
  – Checksums are verified every time a client reads data

GFS: master operations
• Stores metadata
• Manages and locks the namespace
  – Namespace represented as a lookup table
• Periodic communication with each chunkserver
  – Sends instructions and collects chunkserver state (heartbeat messages)
• Creates, re-replicates, and rebalances chunks
  – Balances disk space utilization and load
  – Distributes replicas among racks to increase fault tolerance
  – Re-replicates a chunk as soon as the number of available replicas falls below a user-specified goal
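The per-block integrity check on the data integrity slide can be sketched as follows. The 64 KB block size comes from the slides; the use of CRC-32 via `zlib.crc32` (which yields a 32-bit value) is an assumption made for illustration, since the checksum algorithm is not specified here, and the function names are hypothetical.

```python
import zlib

BLOCK_SIZE = 64 * 1024  # 64 KB blocks


def block_checksums(chunk: bytes) -> list:
    """Compute one 32-bit checksum per 64 KB block of a chunk."""
    return [zlib.crc32(chunk[i:i + BLOCK_SIZE])
            for i in range(0, len(chunk), BLOCK_SIZE)]


def verified_read(chunk: bytes, checksums: list, offset: int, length: int) -> bytes:
    """Return chunk[offset:offset+length] after verifying every block it touches."""
    first = offset // BLOCK_SIZE
    last = (offset + length - 1) // BLOCK_SIZE
    for b in range(first, last + 1):
        block = chunk[b * BLOCK_SIZE:(b + 1) * BLOCK_SIZE]
        if zlib.crc32(block) != checksums[b]:
            raise IOError(f"checksum mismatch in block {b}")
    return chunk[offset:offset + length]
```

Only the blocks overlapping the requested range are verified, so corruption in one block does not prevent reads that touch only intact blocks; a failed check is the signal to read from another replica.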

GFS: master operations (2)
• Garbage collection
  – File deletion is logged by the master
  – The file is renamed to a hidden name that includes the deletion timestamp: its actual deletion is postponed
  – Deleted files can be easily recovered within a limited timespan
• Stale replica detection
  – Chunk replicas may become stale if a chunkserver fails or misses updates to the chunk
  – For each chunk, the master keeps a chunk version number
  – The chunk version number is increased whenever the master grants a new lease on the chunk, i.e., before new mutations are applied
  – The master removes stale replicas in its regular garbage collection

GFS: system interactions
• Files are hierarchically organized in directories
  – There is no data structure that represents a directory
• A file is identified by its pathname
  – GFS does not support aliases
• GFS supports traditional file system operations (but not the POSIX API)
  – create, delete, open, close, read, write
• It also supports two special operations:
  – snapshot: makes a copy of a file or a directory tree almost instantaneously (based on copy-on-write techniques)
  – record append: atomically appends data to a file; multiple clients can append to the same file concurrently without fear of overwriting one another's data
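Stale replica detection with chunk version numbers can be sketched as a comparison between the master's version table and the versions that chunkservers report. The data layout and the function name `find_stale_replicas` are assumptions for illustration only.

```python
def find_stale_replicas(master_versions: dict, reported: dict) -> list:
    """Return sorted (chunkserver, chunk_id) pairs whose version is out of date.

    master_versions: chunk_id -> latest version number known to the master
    reported:        chunkserver name -> {chunk_id: version held by that server}
    """
    stale = []
    for server, chunks in reported.items():
        for chunk_id, version in chunks.items():
            # A replica that missed mutations holds an older version number.
            if version < master_versions.get(chunk_id, 0):
                stale.append((server, chunk_id))
    return sorted(stale)
```

In this sketch a chunkserver that was down while a chunk was mutated reports a lower version number when it comes back, so the master can exclude that replica from client replies and reclaim it during garbage collection.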

GFS: system interactions
• Read operation
  – Data flow is decoupled from control flow
  (1) Client sends master: read(file name, chunk index)
  (2) Master's reply: chunk ID, chunk version number, locations of replicas
  (3) Client sends "closest" chunkserver w/replica: read(chunk ID, byte range)
  (4) Chunkserver replies with data

GFS: mutations
• Mutations are write or append
  – Mutations are performed at all the chunk's replicas in the same order
• Based on lease mechanism:
  – Goal: minimize management overhead at the master
  – Master grants chunk lease to primary replica
  – Primary picks a serial order for all the mutations to the chunk
  – All replicas follow this order when applying mutations
  – Primary replies to client, see (7)
  – Leases renewed using periodic heartbeat messages between master and chunkservers
• (3): Client sends data to closest replica first
• Data flow is decoupled from control flow
• To fully utilize network bandwidth, data are pushed linearly along a chain of chunkservers
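The ordering rule for mutations (the primary picks a serial order and every replica applies mutations in that order) can be sketched as below. This is a deliberately simplified model under stated assumptions: only appends are modeled, leases, data pushing, and failures are left out, and the class names are hypothetical.

```python
class Replica:
    """Applies mutations strictly in serial-number order."""

    def __init__(self):
        self.data = bytearray()
        self.next_serial = 0
        self.pending = {}  # serial number -> payload received out of order

    def apply(self, serial: int, payload: bytes) -> None:
        self.pending[serial] = payload
        # Apply every mutation that is next in the serial order.
        while self.next_serial in self.pending:
            self.data += self.pending.pop(self.next_serial)
            self.next_serial += 1


class Primary(Replica):
    """The lease holder: assigns serial numbers and forwards them to secondaries."""

    def __init__(self, secondaries):
        super().__init__()
        self.secondaries = secondaries
        self.counter = 0

    def mutate(self, payload: bytes) -> int:
        serial = self.counter
        self.counter += 1
        self.apply(serial, payload)
        for secondary in self.secondaries:
            secondary.apply(serial, payload)
        return serial
```

Because each replica buffers mutations that arrive early and applies them only when their serial number comes up, all replicas end with the same contents even if requests reach them in different orders.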
