CS 744: MAPREDUCE Shivaram Venkataraman Fall 2020


ANNOUNCEMENTS
• Assignment 1 deliverables
  – Code (comments, formatting)
  – Report (similar to evaluation sections in papers)
    • Partitioning analysis (graphs, tables, figures etc.)
    • Persistence analysis (graphs, tables, figures etc.)
    • Fault-tolerance analysis (graphs, tables, figures etc.)
• See Piazza for Spark installation

- Applications: Machine Learning, SQL, Streaming, Graph
- Computational Engines (annotation: MapReduce)
- Scalable Storage Systems (annotation: GFS)
- Resource Management
- Datacenter Architecture

BACKGROUND: PTHREADS

    #include <pthread.h>
    #include <stdio.h>
    #include <stdlib.h>
    #include <unistd.h>

    /* Body executed by each thread, in parallel. */
    void *myThreadFun(void *vargp)
    {
        sleep(1);
        printf("Hello World\n");
        return NULL;
    }

    int main()
    {
        pthread_t thread_id_1, thread_id_2;
        /* Create two threads. */
        pthread_create(&thread_id_1, NULL, myThreadFun, NULL);
        pthread_create(&thread_id_2, NULL, myThreadFun, NULL);
        /* Wait for both of them. */
        pthread_join(thread_id_1, NULL);
        pthread_join(thread_id_2, NULL);
        exit(0);
    }

Annotations (partly legible):
- Threads execute in parallel and share memory (heap)
- Synchronization across threads: locks, CVs
- Limited to a single machine

BACKGROUND: MPI

    mpirun -n 4 -f host_file ./mpi_hello_world

    #include <mpi.h>
    #include <stdio.h>

    int main(int argc, char** argv) {
        MPI_Init(NULL, NULL);

        // Get the number of processes
        int world_size;
        MPI_Comm_size(MPI_COMM_WORLD, &world_size);

        // Get the rank of the process
        int world_rank;
        MPI_Comm_rank(MPI_COMM_WORLD, &world_rank);

        // Print off a hello world message
        printf("Hello world from rank %d out of %d processors\n",
               world_rank, world_size);

        // Finalize the MPI environment.
        MPI_Finalize();
    }

Annotations (partly legible):
- Comes from supercomputing
- mpirun starts n processes with ranks 0, 1, 2, ..., n-1
- host_file lists the hosts for this job
- Processes send messages to one another; fine-grained synchronization

MOTIVATION
Build Google Web Search
- Crawl documents, build inverted indexes etc.
Need for
- automatic parallelization
- network, disk optimization
- handling of machine failures
Annotation: programmers didn't want to worry about these (for example, crashes under mpirun / MPI).

OUTLINE
- Programming Model
- Execution Overview
- Fault Tolerance
- Optimizations

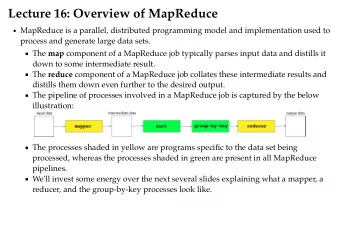

PROGRAMMING MODEL
Data type: Each record is (key, value)
Map function: (K_in, V_in) → list(K_inter, V_inter)
Reduce function: (K_inter, list(V_inter)) → list(K_out, V_out)

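Example: Word Count

    def mapper(line):
        # Emit one intermediate (word, 1) pair per word in the line.
        for word in line.split():
            output(word, 1)

    def reducer(key, values):
        # values is the list of intermediate values for key,
        # so this emits e.g. (cow, 2).
        output(key, sum(values))

Here output stands for the framework call that emits a (key, value) pair.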
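To make the pseudocode concrete, here is a minimal single-process sketch of the same Word Count. It is not how a real MapReduce framework executes a job: `run_mapreduce` is a hypothetical local driver introduced only for illustration, and `yield` stands in for the slide's `output(...)` call.

```python
from collections import defaultdict

def mapper(line):
    # Map: one input record -> list of intermediate (key, value) pairs.
    for word in line.split():
        yield (word, 1)

def reducer(key, values):
    # Reduce: (key, list of intermediate values) -> list of output pairs.
    yield (key, sum(values))

def run_mapreduce(records, mapper, reducer):
    # Hypothetical local driver: group intermediate values by key
    # (the "shuffle & sort" step), then call the reducer once per key.
    groups = defaultdict(list)
    for record in records:
        for key, value in mapper(record):
            groups[key].append(value)
    output = []
    for key in sorted(groups):
        output.extend(reducer(key, groups[key]))
    return output

lines = ["the quick brown fox", "the fox ate the mouse", "how now brown cow"]
counts = dict(run_mapreduce(lines, mapper, reducer))
print(counts)
```

The three input lines are the ones used in the Word Count Execution slides, so the counts can be checked against that diagram.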

Word Count Execution
Input → Map → Shuffle & Sort → Reduce → Output
- Input data is chunked (GFS); each Map task reads one chunk: "the quick brown fox", "the fox ate the mouse", "how now brown cow"
- Map function: emits intermediate (key, value) pairs such as (the, 1), (brown, 1)
- Partition function: decides which Reduce task receives each intermediate key (annotation suggests a hash of the key modulo the number of reducers, e.g. % 2)
- Reduce function: receives (key, values) for each of its keys
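The partitioning step can be sketched as follows. This is an illustration under assumptions, not the lecture's code: `partition` and `shuffle` are hypothetical helper names, and `zlib.crc32` is used as the hash only because Python's built-in `hash()` for strings changes between runs.

```python
import zlib

def partition(key, num_reducers):
    # Stable hash of the key, modulo the number of reduce tasks.
    return zlib.crc32(key.encode("utf-8")) % num_reducers

def shuffle(map_outputs, num_reducers):
    # One bucket per reducer; each bucket maps key -> list of values.
    buckets = [dict() for _ in range(num_reducers)]
    for pairs in map_outputs:
        for key, value in pairs:
            bucket = buckets[partition(key, num_reducers)]
            bucket.setdefault(key, []).append(value)
    return buckets

lines = ["the quick brown fox", "the fox ate the mouse", "how now brown cow"]
map_outputs = [[(word, 1) for word in line.split()] for line in lines]
buckets = shuffle(map_outputs, num_reducers=2)
for reducer_id, bucket in enumerate(buckets):
    print(reducer_id, sorted(bucket.items()))
```

Because the partition depends only on the key, every occurrence of a word lands at the same reducer no matter which map task emitted it.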

Word Count Execution
Input → Map → Shuffle & Sort → Reduce → Output

Map outputs:
- "the quick brown fox" → the, 1; quick, 1; brown, 1; fox, 1
- "the fox ate the mouse" → the, 1; fox, 1; the, 1; ate, 1; mouse, 1
- "how now brown cow" → how, 1; now, 1; brown, 1; cow, 1

Reduce outputs:
- Reduce 1: brown, 2; fox, 2; how, 1; now, 1; the, 3
- Reduce 2: ate, 1; cow, 1; mouse, 1; quick, 1

Annotations (partly legible):
- Combiner: (the, 1), (the, 1) ⇒ (the, 2) on the map side
- Map output goes to numbered files, which the reducers fetch
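The combiner annotation can be illustrated with a small sketch. It assumes the combiner is simply the reduce logic (a sum) applied to one map task's output before the shuffle; `map_with_combiner` is a hypothetical name used only here.

```python
from collections import Counter

def map_with_combiner(line):
    # Pre-aggregate counts inside the map task so that fewer
    # intermediate pairs need to be shuffled to the reducers.
    combined = Counter()
    for word in line.split():
        combined[word] += 1
    return sorted(combined.items())

pairs = map_with_combiner("the fox ate the mouse")
print(pairs)  # [('ate', 1), ('fox', 1), ('mouse', 1), ('the', 2)]
```

For this input the map task would send four pairs instead of five. This works because addition is associative and commutative, so summing partial sums gives the same result as summing the individual 1s.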

ASSUMPTIONS
1. Failures are the norm
   - Only have tasks: no message passing or shared memory
   - No intermediate state other than KV pairs
2. Local storage (disk) is cheap and abundant
3. Applications can be written in this model
4. Input is splittable (a collection of records)

ASSUMPTIONS
1. Commodity networking, less bisection bandwidth
2. Failures are common
3. Local storage is cheap
4. Replicated FS

Word Count Execution
Submit a Job → JobTracker (annotation: Master) → Map tasks on workers
JobTracker:
- Automatically split work
- Schedule tasks with locality
Map inputs: "the quick brown fox", "the fox ate the mouse", "how now brown cow"
Annotation: users submit MR jobs; the Master launches the Map and Reduce tasks.

Fault Recovery
If a task crashes:
– Retry on another node (the input can still be read, thanks to replication)
– If the same task repeatedly fails, end the job
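The retry policy for a crashed task can be sketched as below. This is only an illustration: `run_with_retries`, the node names, and the limit of four attempts are assumptions made for the example, not values taken from the lecture.

```python
def run_with_retries(task, nodes, max_attempts=4):
    # Try the task on a different node each time; give up (and fail
    # the whole job) if it keeps crashing. max_attempts is hypothetical.
    last_error = None
    for attempt in range(max_attempts):
        node = nodes[attempt % len(nodes)]
        try:
            return task(node)
        except RuntimeError as err:
            last_error = err
    raise RuntimeError(
        f"job failed: task did not succeed in {max_attempts} attempts"
    ) from last_error

def count_words(node):
    # Simulated task: crashes on node-a, succeeds anywhere else.
    if node == "node-a":
        raise RuntimeError("simulated crash on " + node)
    return len("the quick brown fox".split())

result = run_with_retries(count_words, ["node-a", "node-b", "node-c"])
print(result)  # 4
```

Retrying is safe here because the task only reads its replicated input and produces output; it has no other side effects to undo.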

Fault Recovery
If a node crashes:
– Relaunch its current tasks on other nodes
What about task inputs? File system replication

Fault Recovery
If a task is going slowly (straggler):
– Launch second copy of task on another node
– Take the output of whichever finishes first
Annotations (partly legible):
- Straggler: a task that runs much slower than the other mappers
- Assumption: something about the node is making it slow
- Tasks are deterministic
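Straggler mitigation can be sketched with threads standing in for nodes. Everything here is an assumption for illustration: `run_speculatively` is a hypothetical helper, and the sleep delays simulate one slow node and one fast node.

```python
import time
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait

def word_count_task(chunk, delay_seconds):
    # Deterministic task; the delay simulates a slow or fast node.
    time.sleep(delay_seconds)
    counts = {}
    for word in chunk.split():
        counts[word] = counts.get(word, 0) + 1
    return counts

def run_speculatively(chunk, delays):
    # Launch one copy of the task per simulated node and take the
    # output of whichever copy finishes first.
    pool = ThreadPoolExecutor(max_workers=len(delays))
    futures = [pool.submit(word_count_task, chunk, d) for d in delays]
    done, _pending = wait(futures, return_when=FIRST_COMPLETED)
    result = next(iter(done)).result()
    pool.shutdown(wait=False)  # do not block on the straggler
    return result

result = run_speculatively("the quick brown fox", delays=[0.3, 0.01])
print(result)
```

Since the task is deterministic, it does not matter which copy wins: both produce the same output, which is why taking the first finisher is safe.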

MORE DESIGN
- Master failure → retry the job (annotations mention checkpoints and the probability of the master failing)
- Locality → metadata says where the GFS chunks are, which guides where Map tasks run
- Task Granularity

MAPREDUCE: SUMMARY
- Simplify programming on large clusters with frequent failures
- Limited but general functional API
  - Map, Reduce, Sort
  - No other synchronization / communication
- Fault recovery, straggler mitigation through retries

DISCUSSION https://forms.gle/mAHD4QuMXko7vnjB6

DISCUSSION
List one similarity and one difference between MPI and MapReduce
Annotations (partly legible):
- Similarity: both are for parallel computing
- Difference, MapReduce: limited programming model, fault tolerance, intermediate data stored as KV pairs
- Difference, MPI: expressive, performance, fine-grained messages (network)

DISCUSSION
Indexing pipeline where you start with HTML documents. You want to index the documents after removing the most commonly occurring words.
1. Compute most common words (the, a, ...).
2. Remove them and build the index.
What are the main shortcomings of using MapReduce to do this?
① How to access the list of common words?
② Both MR ops read the same inputs: repeated disk I/O. Need to compose the ops to avoid it.
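The two-step pipeline can be sketched as two chained jobs, which makes both shortcomings visible. This is a single-process illustration under assumptions: `run_job`, the tiny `docs` collection, and picking only the single most common word are all hypothetical choices made for the example.

```python
from collections import defaultdict

def run_job(records, mapper, reducer):
    # Hypothetical local stand-in for one MapReduce job.
    groups = defaultdict(list)
    for record in records:
        for key, value in mapper(record):
            groups[key].append(value)
    return {key: reducer(key, values) for key, values in sorted(groups.items())}

docs = {
    "doc1": "the quick brown fox",
    "doc2": "the fox ate the mouse",
    "doc3": "how now brown cow",
}

# Job 1: word count over all documents, then pick the most common word.
def count_mapper(record):
    _doc_id, text = record
    return [(word, 1) for word in text.split()]

def count_reducer(_key, values):
    return sum(values)

word_counts = run_job(docs.items(), count_mapper, count_reducer)
ranked = sorted(word_counts, key=lambda w: (-word_counts[w], w))
common_words = set(ranked[:1])

# Job 2: re-reads the same documents. The mapper needs common_words as
# side data, which the basic (key, value) model does not provide.
def index_mapper(record):
    doc_id, text = record
    return [(word, doc_id) for word in text.split() if word not in common_words]

def index_reducer(_key, doc_ids):
    return sorted(set(doc_ids))

index = run_job(docs.items(), index_mapper, index_reducer)
print(common_words, index["fox"])
```

Note that `docs.items()` is scanned once per job, and that `common_words` reaches the second mapper only through a Python closure. In a cluster, the first corresponds to repeated disk I/O and the second to an extra mechanism for distributing the word list.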


Jeff Dean, LADIS 2009

NEXT STEPS
• Next lecture: Spark
• Assignment 1: Use Piazza!
