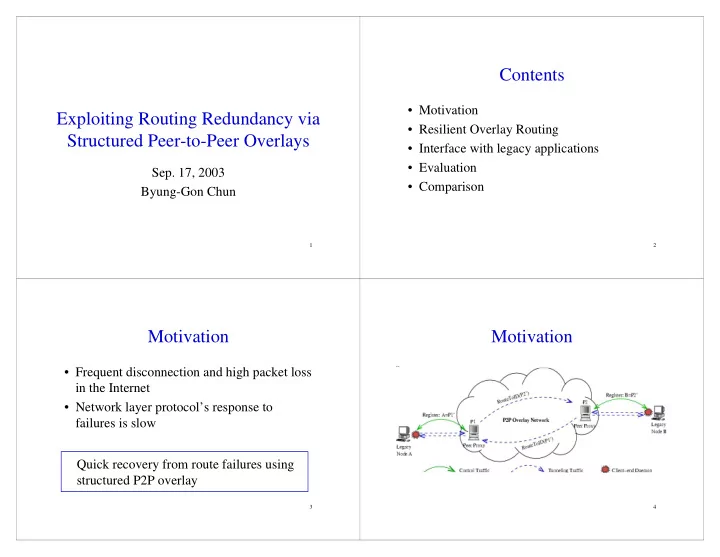

Contents • Motivation Exploiting Routing Redundancy via • Resilient Overlay Routing Structured Peer-to-Peer Overlays • Interface with legacy applications • Evaluation Sep. 17, 2003 • Comparison Byung-Gon Chun 1 2 Motivation Motivation • Frequent disconnection and high packet loss in the Internet • Network layer protocol’s response to failures is slow Quick recovery from route failures using structured P2P overlay 3 4

Resilient Overlay Routing Basics • Basics • Use the KBR of structured P2P overlays [API] • Route failure detection • Backup links maintained for fast failover • Route failure recovery • Proximity-based neighbor selection • Routing redundancy maintenance • Proximity routing with constraints • Note that all packets go through multiple overlay hops. 5 6 Failure Detection Failure Detection • Failure recovery time ~ failure detection time • Link quality estimation using loss rate when backup paths are precomputed – L n = (1-alpha) L n-1 + alpha L p • Periodic beaconing • TBC - metric to capture the impact on the – Backup link probe interval = Primary link probe interval*2 physical network • Number of beacons per period per node - log(N) – TBC = beacons/sec * bytes/beacon * IP hops vs. O(<D>) for unstructured overlay • PNS incurs a lower TBC • Routing state updates – log 2 N vs. O(E) for link state protocol Structured overlays can do frequent beaconing for fast failure detection ? 7 8

How many paths? Failure Recovery • Recall the geometry paper • Exploit backup links – Ring - (log N)! Tree – 1 • Two polices presented in [Bayeux] • Tree with backup links • First reachable link selection (FRLS) – First route whose link quality is above a defined threshold 9 10 Failure Recovery • Constrained multicast (CM) – Duplicate messages to multiple outgoing links – Complementary to FRLS. Triggered when no link meets the threshold – Duplicate message drop at the path-converged nodes • Path convergence ! 11 12

Routing Redundancy Interface with legacy applications Maintenance • Replace the failed route and restore the pre-failure • Transparent tunneling via structured level of path redundancy overlays • Find additional nodes with a prefix constraint • When to repair? – After certain number of probes failed – Compare with the lazy repair in Pastry • Thermodynamics analogy – active entropy reduction [K03] 13 14 Tunneling Redundant Proxy Management • Legacy node A, B, Proxy P • Register with multiple proxies • Registration • Iterative routing between the source proxy – Register an ID - P(A) (e.g. P-1) and a set of destination proxies – Establish a mapping from A’s IP to P(A) • Path diversity • Name resolution and Routing – DNS query – Source daemon diverts traffic with destination IP reachable by overlay – Source proxy locates the destination overlay ID – Route through overlay – Destination proxy forwards to the destination daemon 15 16

Deployment Simulation Result Summary • 2 backup links • What’s the incentive of ISPs? • PNS reduces TBC (up to 50%) – Resilient routing as a value-added service • Latency cost of backup paths is small (mostly less • Cross-domain deployment than 20%) – Merge overlays • Bandwidth overhead of constrained multicast is low (mostly less than 20%) – Peering points between ISP’s overlays • Failures close to destination are costly. • Hierarchy - Brocade • Tapestry finds different routes when the physical link fails. 17 18 Microbenchmark Summary • 200 nodes on PlanetLab Small gap with 2 backup links • Alpha ~ between 0.2 and 0.4 ? • Route switch time – Around 600ms when the beaconing period is 300ms • Latency cost ~ 0 – Sometimes reduced latency in the backup paths – artifacts of small network • CM – Bandwidth*Delay increases less than 30% • Beaconing overhead 19 20 – Less than 7KB/s for beacon period of 300ms

Self Repair Comparison • RON • Tapestry ( L=3 ) – Use one overlay hop (IP) for – Use (multiple) overlay hops for all normal op. and one indirect hop packet routing for failover – Endpoints choose routes – Prefixed routes – O(<D>) probes D=O(N) – O(logN) probes – O(E) messages E=O(N 2 ) – O(log 2 N) messages – Average of k samples – EWMA – Probe interval 12s – Probe interval 300ms – Failure detection 19s – Failure detection 600ms – 33Kbps probe overhead for 50 – < 56Kbps probe overhead for 200 nodes (extrapolation: 56kbps nodes around 70 nodes) 21 22

Recommend

More recommend