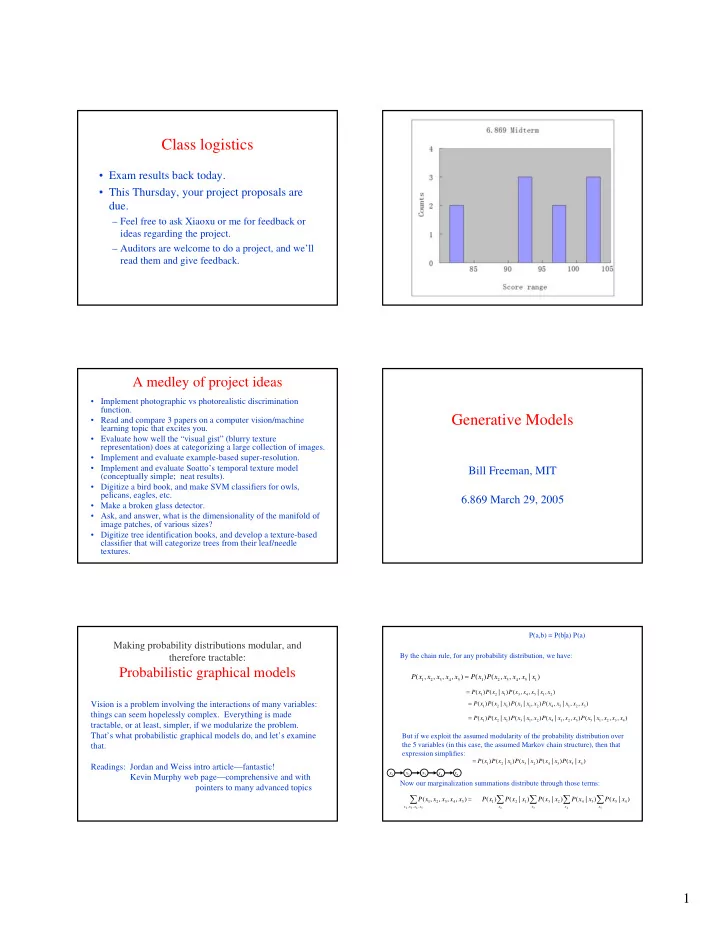

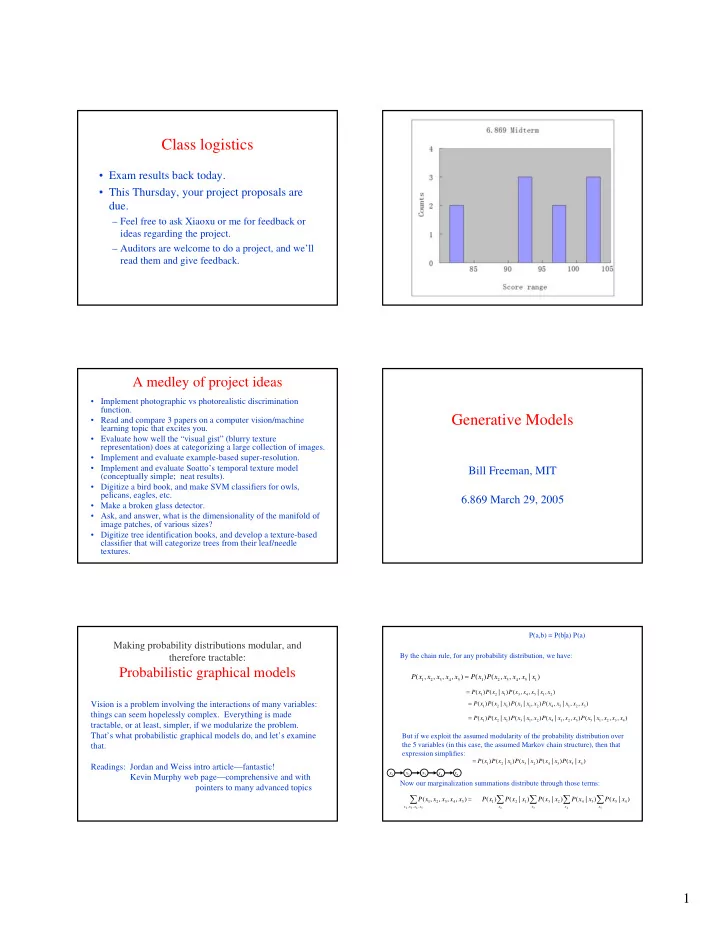

Class logistics • Exam results back today. • This Thursday, your project proposals are due. – Feel free to ask Xiaoxu or me for feedback or ideas regarding the project. – Auditors are welcome to do a project, and we’ll read them and give feedback. A medley of project ideas • Implement photographic vs photorealistic discrimination function. Generative Models • Read and compare 3 papers on a computer vision/machine learning topic that excites you. • Evaluate how well the “visual gist” (blurry texture representation) does at categorizing a large collection of images. • Implement and evaluate example-based super-resolution. • Implement and evaluate Soatto’s temporal texture model Bill Freeman, MIT (conceptually simple; neat results). • Digitize a bird book, and make SVM classifiers for owls, pelicans, eagles, etc. 6.869 March 29, 2005 • Make a broken glass detector. • Ask, and answer, what is the dimensionality of the manifold of image patches, of various sizes? • Digitize tree identification books, and develop a texture-based classifier that will categorize trees from their leaf/needle textures. P(a,b) = P(b|a) P(a) Making probability distributions modular, and By the chain rule, for any probability distribution, we have: therefore tractable: Probabilistic graphical models = P ( x , x , x , x , x ) P ( x ) P ( x , x , x , x | x ) 1 2 3 4 5 1 2 3 4 5 1 = ( ) ( | ) ( , , | , ) P x P x x P x x x x x 1 2 1 3 4 5 1 2 = Vision is a problem involving the interactions of many variables: ( ) ( | ) ( | , ) ( , | , , ) P x P x x P x x x P x x x x x 1 2 1 3 1 2 4 5 1 2 3 things can seem hopelessly complex. Everything is made = ( ) ( | ) ( | , ) ( | , , ) ( | , , , ) P x P x x P x x x P x x x x P x x x x x 1 2 1 3 1 2 4 1 2 3 5 1 2 3 4 tractable, or at least, simpler, if we modularize the problem. That’s what probabilistic graphical models do, and let’s examine But if we exploit the assumed modularity of the probability distribution over that. the 5 variables (in this case, the assumed Markov chain structure), then that expression simplifies: = P ( x ) P ( x | x ) P ( x | x ) P ( x | x ) P ( x | x ) 1 2 1 3 2 4 3 5 4 Readings: Jordan and Weiss intro article—fantastic! x x x x x Kevin Murphy web page—comprehensive and with 1 2 3 4 5 Now our marginalization summations distribute through those terms: pointers to many advanced topics ∑ ∑ ∑ ∑ ∑ ∑ = ( , , , , ) ( ) ( | ) ( | ) ( | ) ( | ) P x x x x x P x P x x P x x P x x P x x 1 2 3 4 5 1 2 1 3 2 4 3 5 4 , , , x x x x x x x x x 2 3 4 5 1 2 3 4 5 1

Belief propagation Performing the marginalization by doing the partial sums is called “belief propagation”. Another modular probabilistic structure, more common in vision problems, is an undirected graph: ∑ ∑ ∑ ∑ ∑ ∑ = P ( x , x , x , x , x ) P ( x ) P ( x | x ) P ( x | x ) P ( x | x ) P ( x | x ) 1 2 3 4 5 1 2 1 3 2 4 3 5 4 x , x , x , x x x x x x x x x x x 2 3 4 5 1 2 3 4 5 1 2 3 4 5 In this example, it has saved us a lot of computation. Suppose each variable has 10 discrete states. Then, not knowing the special structure The joint probability for this graph is given by: of P, we would have to perform 10000 additions (10^4) to marginalize = Φ Φ Φ Φ P ( x , x , x , x , x ) ( x , x ) ( x , x ) ( x , x ) ( x , x ) over the four variables. 1 2 3 4 5 1 2 2 3 3 4 4 5 But doing the partial sums on the right hand side, we only need 40 additions (10*4) to perform the same marginalization! Φ Where is called a “compatibility function”. We can ( x 1 x , ) 2 define compatibility functions we result in the same joint probability as for the directed graph described in the previous slides; for that example, we could use either form. Markov Random Fields MRF nodes as pixels • Allows rich probabilistic models for images. • But built in a local, modular way. Learn local relationships, get global effects out. Winkler, 1995, p. 32 MRF nodes as patches Network joint probability image patches 1 ∏ ∏ = Ψ Φ ( , ) ( , ) ( , ) P x y x x x y i j i i Z scene patches , i j i image scene Scene-scene Image-scene Φ ( x i , y i ) compatibility compatibility image function function neighboring local Ψ ( x i , x j ) scene nodes observations scene 2

In order to use MRFs: Outline of MRF section • Inference in MRF’s. • Given observations y, and the parameters of the MRF, how infer the hidden variables, x? – Gibbs sampling, simulated annealing – Iterated condtional modes (ICM) • How learn the parameters of the MRF? – Variational methods – Belief propagation – Graph cuts • Vision applications of inference in MRF’s. • Learning MRF parameters. – Iterative proportional fitting (IPF) Belief propagation messages Beliefs A message: can be thought of as a set of weights on each of your possible states To find a node’s beliefs: Multiply together all the messages coming in to that node. To send a message: Multiply together all the incoming messages, except from the node you’re sending to, then multiply by the compatibility matrix and marginalize ∏ over the sender’s states. = k ( ) ( ) b x M x j j j j j ∑ ∏ ∈ = ψ ( ) j k k N j ( ) ( , ) ( ) M x x x M x i i ij i j j j ∈ x k N ( j ) \ i j i j j i = Optimal solution in a chain or tree: Belief, and message updates Belief Propagation ∏ = k ( ) ( ) b x M x j • “Do the right thing” Bayesian algorithm. j j j j ∈ k N ( j ) • For Gaussian random variables over time: Kalman filter. • For hidden Markov models: forward/backward algorithm (and MAP ∑ ∏ = ψ j k ( ) ( , ) ( ) M x x x M x i i ij i j j j variant is Viterbi). ∈ x k N ( j ) \ i j i j i = 3

Justification for running belief propagation No factorization with loops! in networks with loops • Experimental results: = Φ x mean ( x , y ) 1 MMSE 1 1 x – Error-correcting codes Kschischang and Frey, 1998; 1 Φ Ψ sum ( x , y ) ( x , x ) McEliece et al., 1998 2 2 1 2 x 2 Freeman and Pasztor, 1999; Φ Ψ Ψ sum ( , ) ( , ) – Vision applications x y x x ( , ) x x 3 3 2 3 Frey, 2000 1 3 x 3 • Theoretical results: y 2 – For Gaussian processes, means are correct. Weiss and Freeman, 1999 – Large neighborhood local maximum for MAP. y 3 y 1 Weiss and Freeman, 2000 x 2 – Equivalent to Bethe approx. in statistical physics. Yedidia, Freeman, and Weiss, 2000 x 3 x 1 – Tree-weighted reparameterization Wainwright, Willsky, Jaakkola, 2001 Statistical mechanics interpretation Free energy formulation U - TS = Free energy Defining − − Ψ = ( , ) / Φ = E x x T E ( x ) / T ( x , x ) e i j ( x ) e i ij i j i i ∑ P ( x 1 x , ,...) U = avg. energy = then the probability distribution p ( x , x ,...) E ( x , x ,...) 2 1 2 1 2 states T = temperature that minimizes the F.E. is precisely ∑ − p ( x , x ,...) ln p ( x , x ,...) S = entropy = the true probability of the Markov network, 1 2 1 2 states ∏ ∏ = Ψ Φ ( , ,...) ( , ) ( ) P x x x x x 1 2 ij i j i i ij i Mean field approximation to free energy Approximating the Free Energy [ ( , ,..., )] F p x x x Exact : 1 2 N F [ b i x ( )] Mean Field Theory : i U - TS = Free energy [ ( ), ( , )] F b x b x x Bethe Approximation : i i ij i j ∑∑ ∑∑ = + ( ) ( ) ( ) ( , ) ( ) ln ( ) F b b x b x E x x b x T b x Kikuchi Approximations : MeanField i i i j j ij i j i i i i ( ) , ij x x i x F [ b ( x ), b ( x , x ), b ( x x , x ),....] i j i , i i ij i j ijk i j k The variational free energy is, up to an additive constant, equal to the Kllback-Leibler divergence between b(x) and the true probability, P(x). ∏ KL divergence: b ( x ) i ∑ ∏ = ( || ) ( ) ln i D b P b x KL i ( ) P x x , 2 x ,... i 1 4

Recommend

More recommend