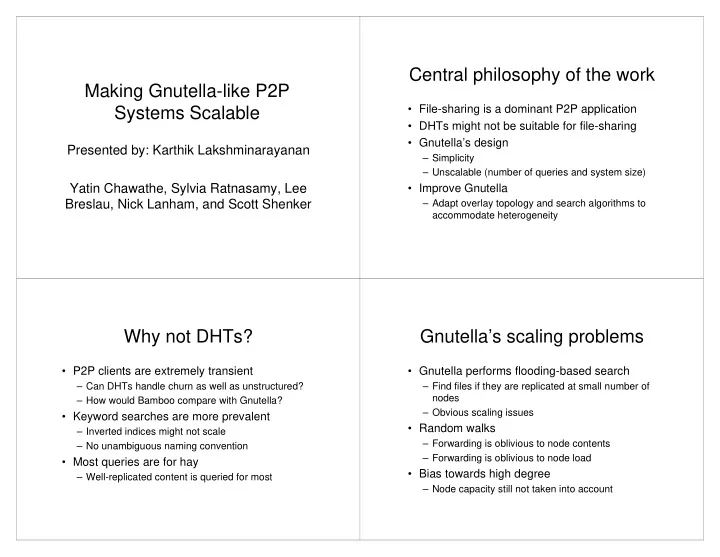

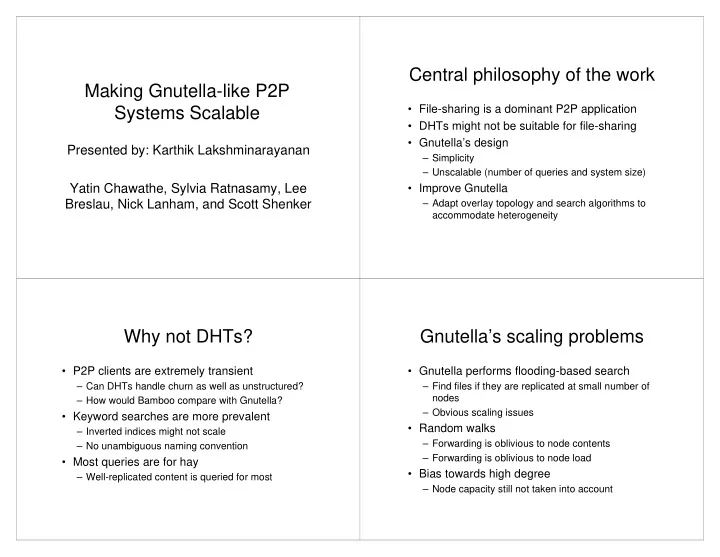

Making Gnutella-like P2P Systems Scalable
Yatin Chawathe, Sylvia Ratnasamy, Lee Breslau, Nick Lanham, and Scott Shenker
Presented by: Karthik Lakshminarayanan

Central philosophy of the work
• File-sharing is a dominant P2P application
• DHTs might not be suitable for file-sharing
• Gnutella's design
  – Simplicity
  – Does not scale with the number of queries or the system size
• Improve Gnutella
  – Adapt the overlay topology and search algorithms to accommodate heterogeneity

Why not DHTs?
• P2P clients are extremely transient
  – Can DHTs handle churn as well as unstructured systems?
  – How would Bamboo compare with Gnutella?
• Keyword searches are more prevalent
  – Inverted indices might not scale
  – There is no unambiguous naming convention
• Most queries are for hay
  – Most queries target well-replicated content

Gnutella's scaling problems
• Gnutella performs flooding-based search
  – Finds files even if they are replicated at only a small number of nodes
  – Obvious scaling issues
• Random walks
  – Forwarding is oblivious to node contents
  – Forwarding is oblivious to node load
• Bias towards high-degree nodes
  – Node capacity is still not taken into account
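
To make the "obvious scaling issues" of flooding concrete, here is a back-of-the-envelope sketch in Python. It assumes every node has the same degree d and that no node is reached twice (a tree-like neighborhood), so it gives an upper bound on the messages a TTL-scoped flood sends; a single random walk, by contrast, sends one message per hop. The function names are hypothetical.

```python
def flood_messages(degree: int, ttl: int) -> int:
    """Upper bound on messages sent by a TTL-scoped flood.

    Assumes every node has `degree` neighbors and no node is reached twice:
    the source sends to all `degree` neighbors, and every node that receives
    the query forwards it to its other `degree - 1` neighbors.
    """
    total = 0
    for hop in range(1, ttl + 1):
        # Messages sent at this hop: degree * (degree - 1) ** (hop - 1)
        total += degree * (degree - 1) ** (hop - 1)
    return total


def random_walk_messages(ttl: int) -> int:
    """A single random walk forwards the query to one neighbor per hop."""
    return ttl


if __name__ == "__main__":
    for ttl in range(1, 6):
        print(ttl, flood_messages(4, ttl), random_walk_messages(ttl))
```

With degree 4 and TTL 3 the flood sends up to 4 + 12 + 36 = 52 messages versus 3 for the walk, and the gap grows exponentially with the TTL.
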

GIA design
1. Dynamic topology adaptation
   – Keeps nodes close to high-capacity nodes
2. Active flow control scheme
   – Avoids overloaded hot-spots
   – Explicitly handles heterogeneity
3. One-hop replication of pointers to content
   – Allows high-capacity nodes to answer more queries
4. Search protocol
   – Based on random walks towards high-capacity nodes
Exploit heterogeneity

1. Topology adaptation
• Goal: make high-capacity nodes have high degree (i.e., more neighbors)
• Each node has a level of satisfaction, S
  – S = 0 if the node has no neighbors (dissatisfied)
  – S = 1 if it has enough good neighbors (fully satisfied)
  – S is a function of the node's own capacity and of its neighbors' capacities, degrees, and ages
  – A node keeps improving its neighbor set as long as S < 1

1. Topology adaptation (continued)
• Improving the neighbor set
  – Pick a new neighbor
  – Decide whether to preempt an existing neighbor
    • Depends on the degrees and capacities of the neighbors
• Asymmetric links?
• Issues
  – Avoid oscillations: use hysteresis
  – Converge to a stable state

2. Proactive flow control
• Allocate tokens to neighbors based on their processing capability
  – Queries cannot be dropped arbitrarily, because of GIA's random-walk mechanism
• Allocation is proportional to neighbors' capacities
  – Gives nodes an incentive to announce their true capacities
• Token assignment is based on Start-time Fair Queuing (SFQ)
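
The topology-adaptation slides name the ingredients of S and the preemption decision but not their exact form. The Python sketch below is one plausible reading under stated assumptions: S is taken to be the sum, over neighbors, of each neighbor's capacity divided by its degree, normalized by the node's own capacity; neighbor age is ignored; and `min_nbrs`, `max_nbrs`, and the hysteresis margin are hypothetical parameters. `Peer`, `satisfaction`, and `consider_candidate` are illustrative names, not GIA's API.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Peer:
    name: str
    capacity: float  # e.g. queries/second the peer can process (assumed unit)
    degree: int      # number of neighbors the peer currently has (>= 1 once linked to us)


def satisfaction(own_capacity: float, neighbors: List[Peer],
                 min_nbrs: int = 1, max_nbrs: int = 128) -> float:
    """Hypothetical satisfaction level S in [0, 1].

    Each neighbor contributes the share of its capacity it can devote to us
    (capacity / degree); S is the sum of those shares relative to our own
    capacity. Neighbor age is ignored in this sketch.
    """
    if len(neighbors) < min_nbrs:
        return 0.0  # no (or too few) neighbors: dissatisfied
    if len(neighbors) >= max_nbrs:
        return 1.0  # cannot take more neighbors anyway
    total = sum(n.capacity / n.degree for n in neighbors)
    return min(1.0, total / own_capacity)


def consider_candidate(neighbors: List[Peer], candidate: Peer, max_nbrs: int,
                       hysteresis: int) -> Tuple[bool, Optional[Peer]]:
    """Decide whether to accept `candidate` and which neighbor (if any) to preempt."""
    if len(neighbors) < max_nbrs:
        return True, None  # room left: accept without preempting anyone
    # Only neighbors no more capable than the candidate may be preempted.
    weaker = [n for n in neighbors if n.capacity <= candidate.capacity]
    if not weaker:
        return False, None
    # Prefer dropping the best-connected weaker neighbor: it loses the least.
    victim = max(weaker, key=lambda n: n.degree)
    # Hysteresis: swap only when the degree gap is large, to avoid oscillations.
    if victim.degree > candidate.degree + hysteresis:
        return True, victim
    return False, None
```

For example, a node of capacity 10 with neighbors of (capacity, degree) (20, 4) and (10, 5) gets S = (5 + 2) / 10 = 0.7 and keeps adapting. The hysteresis margin is what keeps two nodes from repeatedly swapping the same neighbor.
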

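The flow-control slide says tokens are handed out in proportion to neighbors' capacities using SFQ-based assignment. A minimal Python sketch of that idea follows, assuming every neighbor always wants another token (is permanently backlogged) and that one token costs 1/capacity units of virtual time. `allocate_tokens` is a hypothetical name; real GIA token handling is not shown on the slides.

```python
from fractions import Fraction
from typing import Dict


def allocate_tokens(capacities: Dict[str, float], num_tokens: int) -> Dict[str, int]:
    """Hand out `num_tokens` tokens among neighbors in proportion to capacity.

    SFQ-style sketch: each grant gets a start tag max(virtual_time, the
    neighbor's last finish tag) and a finish tag start + 1/capacity. The
    neighbor with the smallest start tag is served next, and virtual time
    advances to that start tag. Fractions keep the tag arithmetic exact.
    """
    finish = {n: Fraction(0) for n in capacities}
    virtual_time = Fraction(0)
    granted = {n: 0 for n in capacities}
    for _ in range(num_tokens):
        start = {n: max(virtual_time, finish[n]) for n in capacities}
        # Smallest start tag wins; ties are broken by neighbor name for determinism.
        chosen = min(capacities, key=lambda n: (start[n], n))
        virtual_time = start[chosen]
        finish[chosen] = start[chosen] + 1 / Fraction(capacities[chosen])
        granted[chosen] += 1
    return granted
```

With capacities {"a": 1, "b": 3}, eight tokens split 2 to "a" and 6 to "b", matching the 1:3 capacity ratio.

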
3. One-hop replication
• Each GIA node maintains an index of the contents of all its neighbors
  – Exchanged during neighbor setup
  – Updated periodically and incrementally
  – Flushed on node failures

4. Search protocol
• Biased random walk
  – Forward to the highest-capacity neighbor for which the node holds tokens
  – If it holds no tokens, queue the query until tokens arrive
• TTLs bound the duration of random walks
• Book-keeping
  – Maintain a list of the neighbors to which a query (identified by a unique GUID) has been forwarded

Simulation results
• Compare four systems
  – FLOOD: TTL-scoped flooding, random topologies
  – RWRT: random walks, random topologies
  – SUPER: supernode-based search
  – GIA: search using the GIA protocol suite
• Metrics:
  – Success rate, delay, hop count
  – Knee/collapse point at a particular query rate
  – Collapse point:
    • Per-node query rate at the knee
    • Aggregate throughput that the system can sustain

System model
• Node capacities based on the UW study
  – Spread across 4 orders of magnitude
• Query generation rate for each node
  – Limited by node capacity
• Keyword queries are performed
  – Files are randomly replicated
• Control traffic consumes resources
• Use uniformly random graphs
  – Prevents bias against FLOOD and RWRT
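
The one-hop replication and search-protocol slides combine into a single query step: answer from your own files plus your neighbors' indices, otherwise forward to the highest-capacity neighbor you hold a token for, and record the forwarding by GUID. The Python sketch below assumes a simplified token model (a per-neighbor counter) and stops instead of queuing when no tokens are available. All names (`Node`, `connect`, `drop_neighbor`, `biased_walk`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass(eq=False)  # identity equality: nodes reference each other
class Node:
    name: str
    capacity: float
    files: Set[str]
    neighbors: List["Node"] = field(default_factory=list)
    tokens: Dict[str, int] = field(default_factory=dict)              # neighbor -> tokens it granted us
    one_hop_index: Dict[str, Set[str]] = field(default_factory=dict)  # neighbor -> its files
    forwarded: Dict[str, Set[str]] = field(default_factory=dict)      # query GUID -> neighbors tried


def connect(a: Node, b: Node) -> None:
    """Link two nodes and exchange content indices (one-hop replication)."""
    a.neighbors.append(b)
    b.neighbors.append(a)
    a.one_hop_index[b.name] = set(b.files)
    b.one_hop_index[a.name] = set(a.files)


def drop_neighbor(node: Node, failed: Node) -> None:
    """On neighbor failure, flush its content pointers and tokens."""
    node.neighbors = [n for n in node.neighbors if n is not failed]
    node.one_hop_index.pop(failed.name, None)
    node.tokens.pop(failed.name, None)


def local_matches(node: Node, keyword: str) -> List[str]:
    """Answer from the node's own files plus its one-hop index."""
    hits = [node.name] if keyword in node.files else []
    hits += [nbr for nbr, files in node.one_hop_index.items() if keyword in files]
    return hits


def biased_walk(start: Node, keyword: str, guid: str, ttl: int) -> Tuple[List[str], List[str]]:
    """Return (nodes holding a match, path walked)."""
    node, path = start, [start.name]
    for hops_left in range(ttl, -1, -1):
        hits = local_matches(node, keyword)
        if hits or hops_left == 0:
            return hits, path
        tried = node.forwarded.setdefault(guid, set())
        candidates = [n for n in node.neighbors
                      if n.name not in tried and node.tokens.get(n.name, 0) > 0]
        if not candidates:
            # GIA would queue the query until tokens arrive; this sketch just stops.
            return [], path
        nxt = max(candidates, key=lambda n: n.capacity)  # bias towards capacity
        node.tokens[nxt.name] -= 1  # spend one token for this neighbor
        tried.add(nxt.name)         # book-keeping by GUID
        node, path = nxt, path + [nxt.name]
    return [], path
```

In a four-node example where only a low-degree node D holds the file, a query from A reaches high-capacity B, which answers on D's behalf from its one-hop index without forwarding further.
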

Questions addressed by simulations
• What is the relative performance of the four algorithms?
• Which of the GIA components matters the most?
• What is the impact of heterogeneity?
• How does the system behave in the face of transient nodes?

Single search response
[Figure: collapse point (qps/node, log scale) vs. replication rate (percentage) for GIA, SUPER, RWRT, and FLOOD, each with N = 10,000]
• GIA outperforms SUPER, RWRT, and FLOOD by many orders of magnitude in terms of the aggregate query load it can sustain
• It also scales to very large networks, since the replication factor determines scalability

Factor analysis
RWRT+X adds component X to RWRT; GIA – X removes it from GIA (OHR = one-hop replication, BIAS = biased random walk, TADAPT = topology adaptation, FLWCTL = flow control). Both tables: 10,000 nodes, 0.1% replication.

| Algorithm | Collapse point (qps/node) |
|---|---|
| RWRT | 0.0005 |
| RWRT+OHR | 0.005 |
| RWRT+BIAS | 0.0015 |
| RWRT+TADAPT | 0.001 |
| RWRT+FLWCTL | 0.0006 |

| Algorithm | Collapse point (qps/node) |
|---|---|
| GIA | 7 |
| GIA – OHR | 0.004 |
| GIA – BIAS | 6 |
| GIA – TADAPT | 0.2 |
| GIA – FLWCTL | 2 |

• No single component is useful by itself; the combination of all of them is what makes GIA scalable

Impact of heterogeneity
• GIA improves under heterogeneity
• Large CP-HC (hop count at the collapse point) for GIA under uniform capacities, as queries are directed towards high-capacity nodes
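
The factor-analysis numbers are easier to compare as ratios. The Python sketch below recomputes them from the two tables. It assumes that "aggregate throughput" means the per-node collapse point multiplied by the number of nodes, with every node querying at that rate. The helper names are illustrative.

```python
import math

# Collapse points (qps/node) from the factor-analysis tables (10,000 nodes, 0.1% replication).
RWRT_PLUS = {"RWRT": 0.0005, "RWRT+OHR": 0.005, "RWRT+BIAS": 0.0015,
             "RWRT+TADAPT": 0.001, "RWRT+FLWCTL": 0.0006}
GIA_MINUS = {"GIA": 7.0, "GIA-OHR": 0.004, "GIA-BIAS": 6.0,
             "GIA-TADAPT": 0.2, "GIA-FLWCTL": 2.0}
NUM_NODES = 10_000


def gain(collapse_point: float, baseline: float) -> float:
    """How many times higher a collapse point is than the baseline."""
    return collapse_point / baseline


def orders_of_magnitude(collapse_point: float, baseline: float) -> float:
    return math.log10(gain(collapse_point, baseline))


def aggregate_qps(per_node_qps: float, num_nodes: int = NUM_NODES) -> float:
    """Aggregate throughput if every node queries at the collapse-point rate."""
    return per_node_qps * num_nodes


if __name__ == "__main__":
    for name, cp in RWRT_PLUS.items():
        print(f"{name:12s} {gain(cp, RWRT_PLUS['RWRT']):8.1f}x vs RWRT")
    for name, cp in GIA_MINUS.items():
        print(f"{name:12s} {gain(cp, GIA_MINUS['GIA']):8.4f}x vs GIA")
    print(f"GIA vs RWRT: {orders_of_magnitude(7.0, 0.0005):.2f} orders of magnitude")
```

GIA's collapse point is about 14,000 times RWRT's (roughly 4.1 orders of magnitude). Adding any single component to RWRT gains at most 10x (OHR), while removing OHR or TADAPT from GIA costs more than an order of magnitude. Under the stated assumption, GIA's 7 qps/node corresponds to about 70,000 qps in aggregate.
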

Node failures
[Figure: collapse point (qps/node, log scale) vs. per-node max-lifetime (seconds) for a 10,000-node GIA system, at replication rates of 1.0%, 0.5%, and 0.1%]
• Even under heavy churn, GIA outperforms the other algorithms (running with no churn) by many orders of magnitude

Implementation
• Capacity settings
  – Bandwidth, CPU, disk access
  – Configured by the user
• Satisfaction level
  – Based on the node's own capacity and on its neighbors' capacities, degrees, and ages
  – Adaptation interval $I = T \cdot K^{-(1-S)}$, where T is the interval at full satisfaction (S = 1) and K = degree of aggressiveness
• Query resilience
  – Keep-alive messages sent periodically
  – Optimizations to the adaptation process to avoid dropping queries

Deployment
• Ran GIA on 83 nodes of PlanetLab for 15 min
• Artificially imposed capacities on nodes
• Progress of topology adaptation shown

Conclusions
• GIA: scalable Gnutella
  – 3–5 orders of magnitude improvement in system capacity
• Unstructured approach is good enough!
  – DHTs may be overkill
  – Incremental changes to deployed systems
• Can DHTs be used for file-sharing at all?
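
The implementation slide's adaptation interval $I = T \cdot K^{-(1-S)}$ can be evaluated directly. In the Python sketch below, the check that K > 1 is an assumption: it ensures that dissatisfied nodes adapt more often than satisfied ones. The values T = 10 s and K = 256 are hypothetical.

```python
def adaptation_interval(T: float, K: float, S: float) -> float:
    """I = T * K**-(1 - S).

    S is the satisfaction level in [0, 1]; K > 1 (assumed) sets how aggressively
    dissatisfied nodes adapt. At S = 1 the interval is T; at S = 0 it shrinks to T / K.
    """
    if not 0.0 <= S <= 1.0:
        raise ValueError("satisfaction level S must lie in [0, 1]")
    if K <= 1.0:
        raise ValueError("aggressiveness K must exceed 1")
    return T * K ** -(1.0 - S)


if __name__ == "__main__":
    # Hypothetical parameters: T = 10 s, K = 256.
    for S in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"S = {S:.2f} -> I = {adaptation_interval(10.0, 256.0, S):.4f} s")
```

With T = 10 s and K = 256, a fully dissatisfied node (S = 0) re-evaluates its neighbors every 10/256 ≈ 0.04 s. At S = 0.5 the interval is 0.625 s, and at S = 1 it is the full 10 s. The exponential form lets a single knob K control how strongly dissatisfaction speeds up adaptation.
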
