Caching / Performance

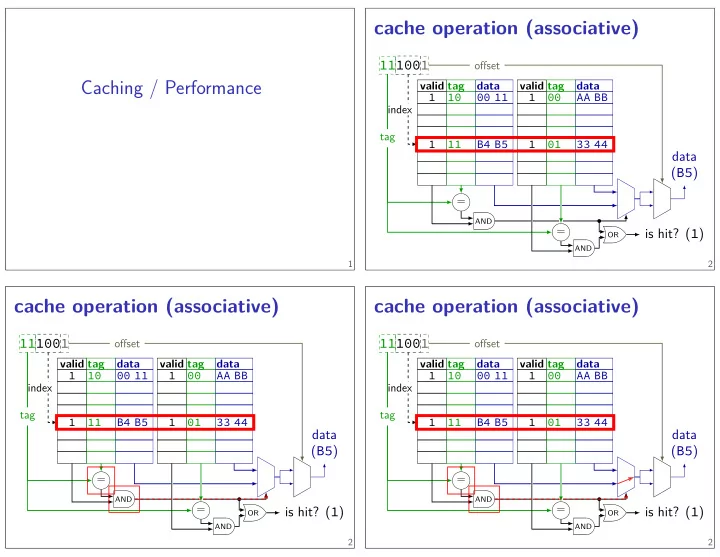

cache operation (associative)

Each set holds two blocks (ways), and each way has a valid bit, a tag, and data. To look up an address, the index selects a set. In each way, the stored tag is compared (=) with the address's tag and the result is ANDed with the valid bit. The per-way results are then ORed to answer "is hit?". On a hit, the offset selects the byte within the matching block's data. In the slide's example the lookup hits (is hit? = 1), and offset 1 selects B5 from the block holding B4 B5.

writing to caches

Example: the CPU writes 10 to address 0xABCD, and the cache now holds ABCD: 10.

- option 1: write-through: always send the write on to memory as well (write 10 to 0xABCD in RAM right away)
- option 2: write-back: update only the cache and mark the block (dirty); send the value to memory later, when the block is replaced. For example, a later read from 0x11CD that conflicts with 0xABCD evicts the dirty block, and only then is 10 written to 0xABCD in RAM.

allocate on write?

The processor writes less than a whole cache block, and the block is not yet in the cache. Two options:

- write-allocate: fetch the rest of the cache block, then replace the written part
- write-no-allocate: send the write through to memory (guess: the value will not be read soon?)

writeback policy

2-way set associative, 2-byte blocks, 2 sets, LRU, write-back. dirty = 1 means the cached value is different from memory, so the block needs to be written to memory if it is evicted. * marks a changed value.

| index | way 0: valid | tag | value | dirty | way 1: valid | tag | value | dirty | LRU |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 000000 | mem[0x00], mem[0x01] | 0 | 1 | 011000 | mem[0x60]*, mem[0x61]* | 1 | 1 |
| 1 | 1 | 011000 | mem[0x62], mem[0x63] | 0 | 0 | | | | 0 |

writing 0xFF into address 0x04? Address 0x04 has index 0 and tag 000001. That tag matches neither block in set 0, so this is a write miss.

write-allocate (2-way set associative, LRU, writeback): writing 0xFF into address 0x04? (index 0, tag 000001)

- step 1: find the least recently used block in set 0: LRU = 1, the block with tag 011000 holding mem[0x60]*, mem[0x61]*
- step 2: possibly write back the old block: it is dirty, so its changed values are written to memory first
- step 3a: read in the new block, to get mem[0x05]
- step 3b: update the LRU information
- result: way 1 of set 0 now holds valid 1, tag 000001, values 0xFF and mem[0x05], and dirty 1 (changed value!); set 0's LRU becomes 0

write-no-allocate: writing 0xFF into address 0x04?

- step 1: is it in the cache yet?
- step 2: no, so just send it to memory

fast writes

The CPU's write (e.g., write 10 to 0xABCD) goes into a write buffer (0xABCD: 10) instead of going straight to RAM. The buffer sends it on to RAM later, so the CPU does not have to wait for the memory write to finish.

matrix sum

```c
int sum1(int matrix[4][8]) {
    int sum = 0;
    for (int i = 0; i < 4; ++i) {
        for (int j = 0; j < 8; ++j) {
            sum += matrix[i][j];
        }
    }
    return sum;
}
```

access pattern: matrix[0][0], [0][1], [0][2], …, [1][0], …

matrix sum: spatial locality

The matrix is stored row by row in memory (4-byte ints). With 8-byte cache blocks, each block therefore holds two neighboring elements of a row:

- iter. 0 [0][0]: miss
- iter. 1 [0][1]: hit (same block as before)
- iter. 2 [0][2]: miss
- iter. 3 [0][3]: hit (same block as before)
- iter. 4 [0][4]: miss
- iter. 5 [0][5]: hit (same block as before)
- iter. 6 [0][6]: miss
- iter. 7 [0][7]: hit (same block as before)
- iter. 8 [1][0]: miss
- iter. 9 [1][1]: hit (same block as before)
- …

alternate matrix sum

```c
int sum2(int matrix[4][8]) {
    int sum = 0;
    // swapped loop order
    for (int j = 0; j < 8; ++j) {
        for (int i = 0; i < 4; ++i) {
            sum += matrix[i][j];
        }
    }
    return sum;
}
```

access pattern: matrix[0][0], [1][0], [2][0], …, [0][1], …

matrix sum: bad spatial locality

- iter. 0 [0][0], iter. 4 [0][1], iter. 8 [0][2], iter. 12 [0][3], iter. 16 [0][4], iter. 20 [0][5], iter. 24 [0][6], iter. 28 [0][7]
- iter. 1 [1][0], iter. 5 [1][1], …

[0][1] shares an 8-byte cache block with [0][0], but it is used 4 iterations later. It is therefore a miss unless that block was not evicted during those 4 iterations.

block size and spatial locality

Larger blocks exploit spatial locality … but larger blocks mean fewer blocks for the same cache size, so the cache is less good at exploiting temporal locality.

conflict misses?

Suppose the cache has 8 total sets of 8-byte blocks. Which set index does each block of the matrix use? Assume the matrix starts at a 64-byte-aligned address. Then [0][0] and [2][0] (64 bytes apart) both map to set index 0, and [1][0] and [3][0] both map to set index 4. sum2's column order uses these blocks in turn. In a direct-mapped cache, each one therefore evicts the other before the next element of its block ([0][1], [2][1], …) is read.

associativity: avoiding conflicts

More associativity makes conflict problems less likely; it is really hard to avoid cache conflicts with matrices, etc.

cache organization and miss rate

The miss rate depends on the program. One example: SPEC CPU2000 benchmarks, 64B block size, LRU replacement, data cache miss rates:

| Cache size | direct-mapped | 2-way | 8-way | fully assoc. |
|---|---|---|---|---|
| 1KB | 8.63% | 6.97% | 5.63% | 5.34% |
| 2KB | 5.71% | 4.23% | 3.30% | 3.05% |
| 4KB | 3.70% | 2.60% | 2.03% | 1.90% |
| 16KB | 1.59% | 0.86% | 0.56% | 0.50% |
| 64KB | 0.66% | 0.37% | 0.10% | 0.001% |
| 128KB | 0.27% | 0.001% | 0.0006% | 0.0006% |

Data: Cantin and Hill, "Cache Performance for SPEC CPU2000 Benchmarks", http://research.cs.wisc.edu/multifacet/misc/spec2000cache-data/

is LRU always better?

Least recently used (LRU) exploits temporal locality.

making LRU look bad

Consider a two-block cache with one value per block (* = least recently used):

| access | direct-mapped (2 sets): result | contents (set 0; set 1) | fully-associative (1 set): result | contents |
|---|---|---|---|---|
| read 0 | miss | mem[0]; (empty) | miss | mem[0], (empty)* |
| read 1 | miss | mem[0]; mem[1] | miss | mem[0]*, mem[1] |
| read 3 | miss | mem[0]; mem[3] | miss | mem[3], mem[1]* |
| read 0 | hit | mem[0]; mem[3] | miss | mem[3]*, mem[0] |
| read 2 | miss | mem[2]; mem[3] | miss | mem[2], mem[0]* |
| read 3 | hit | mem[2]; mem[3] | miss | mem[2]*, mem[3] |
| read 1 | miss | mem[2]; mem[1] | miss | mem[1], mem[3]* |
| read 2 | hit | mem[2]; mem[1] | miss | mem[1]*, mem[2] |

The direct-mapped cache misses 5 times. The fully-associative LRU cache misses on every access.

constructing bad access patterns in general

- step 1: fill the cache
- step 2: keep accessing the thing just replaced

real question: what do typical programs do?

- typically: locality (spatial and temporal)
- typically: some conflicts in low-order bits

The SPEC CPU2000 data on cache organization and miss rate (Cantin and Hill) is one answer. At every cache size in that data, more associativity with LRU replacement never gave a higher miss rate.
