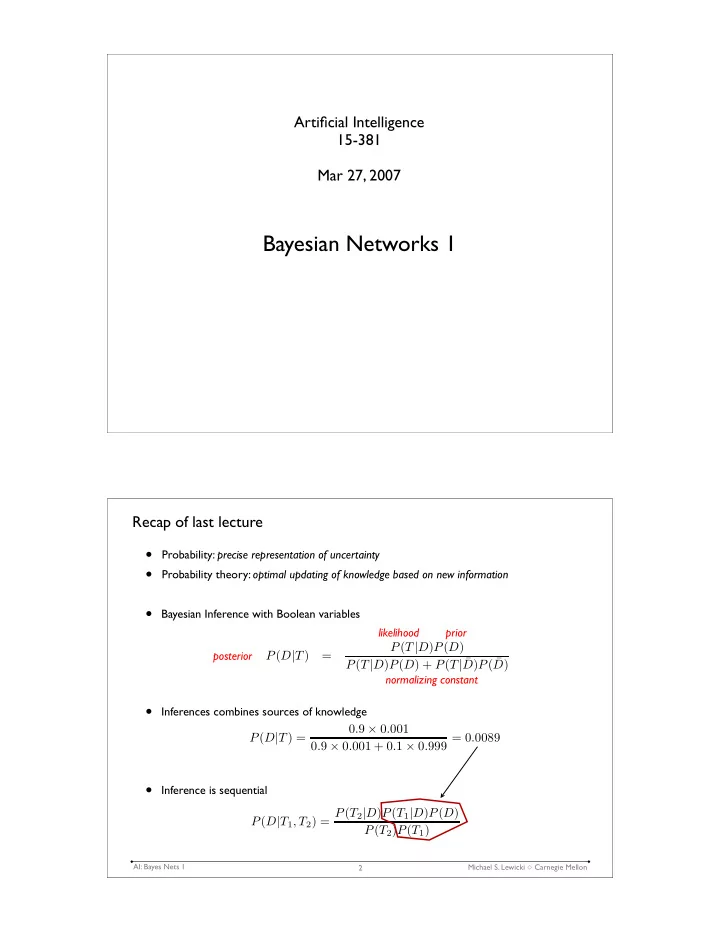

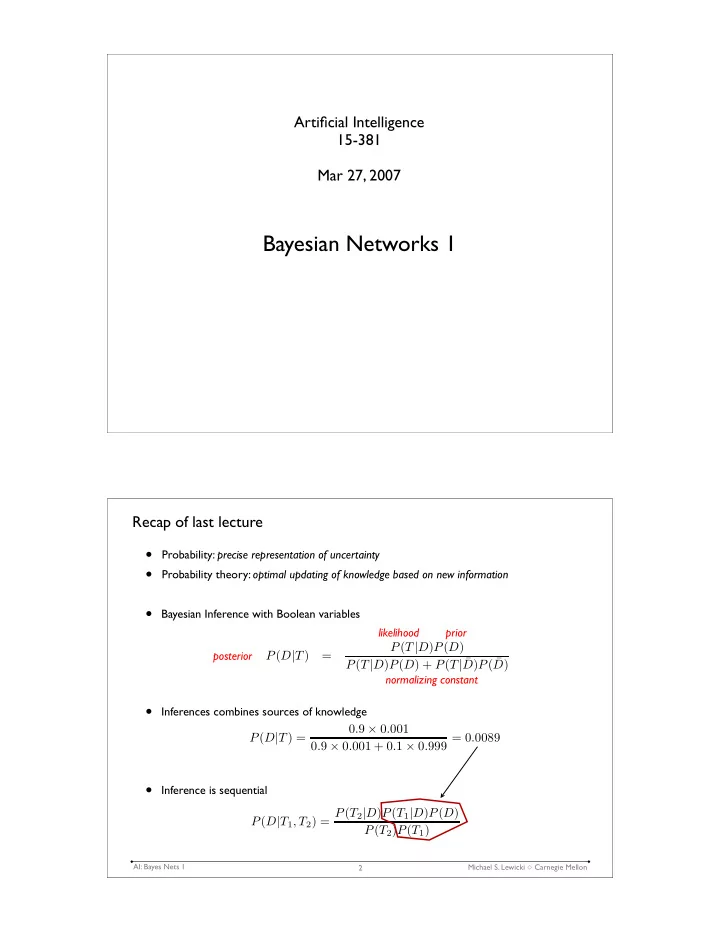

Artificial Intelligence 15-381, Mar 27, 2007
Bayesian Networks 1
AI: Bayes Nets 1, Michael S. Lewicki © Carnegie Mellon

Recap of last lecture

• Probability: a precise representation of uncertainty.
• Probability theory: optimal updating of knowledge based on new information.
• Bayesian inference with Boolean variables:

$$
P(D \mid T) = \frac{P(T \mid D)\,P(D)}{P(T \mid D)\,P(D) + P(T \mid \bar{D})\,P(\bar{D})}
$$

  Here P(D | T) is the posterior, P(T | D) the likelihood, P(D) the prior, and the denominator the normalizing constant.
• Inference combines sources of knowledge:

$$
P(D \mid T) = \frac{0.9 \times 0.001}{0.9 \times 0.001 + 0.1 \times 0.999} = 0.0089
$$

• Inference is sequential. If the tests T_1 and T_2 are conditionally independent given D, the posterior after T_1 acts as the prior for T_2:

$$
P(D \mid T_1, T_2) = \frac{P(T_2 \mid D)\,P(T_1 \mid D)\,P(D)}{P(T_2 \mid T_1)\,P(T_1)} = \frac{P(T_2 \mid D)\,P(D \mid T_1)}{P(T_2 \mid T_1)}
$$
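A minimal Python sketch of the Boolean update above. The function name `posterior` is hypothetical, and the second step assumes a second test with the same error rates (P(T | D) = 0.9, P(T | ¬D) = 0.1) that is conditionally independent of the first given D.

```python
def posterior(likelihood, false_pos_rate, prior):
    """P(D | T) for a Boolean D and a positive test T, via Bayes' rule.

    likelihood     = P(T | D)
    false_pos_rate = P(T | not D)
    prior          = P(D)
    """
    numerator = likelihood * prior
    normalizer = numerator + false_pos_rate * (1.0 - prior)  # P(T)
    return numerator / normalizer

p1 = posterior(0.9, 0.1, 0.001)
print(round(p1, 4))  # 0.0089

# Sequential inference: the posterior after T1 becomes the prior for T2.
# Assumes T2 has the same error rates and is conditionally independent of T1 given D.
p2 = posterior(0.9, 0.1, p1)
print(round(p2, 3))  # 0.075

# Same answer in one step, multiplying the two likelihoods together.
p_batch = posterior(0.9 * 0.9, 0.1 * 0.1, 0.001)
print(abs(p2 - p_batch) < 1e-12)  # True
```

Updating one test at a time and updating on both at once agree, which is what "inference is sequential" means here.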

Bayesian inference with continuous variables (recap)

$$
p(\theta \mid y, n) = \frac{p(y \mid \theta, n)\,p(\theta \mid n)}{p(y \mid n)}, \qquad p(y \mid n) = \int p(y \mid \theta, n)\,p(\theta \mid n)\,d\theta
$$

Here p(θ | y, n) is the posterior, p(y | θ, n) the likelihood, p(θ | n) the prior, and p(y | n) the normalizing constant. With the binomial likelihood

$$
p(y \mid \theta, n) = \binom{n}{y}\,\theta^{y}(1-\theta)^{n-y}
$$

and a uniform prior, the posterior is

$$
p(\theta \mid y, n) \propto \theta^{y}(1-\theta)^{n-y},
$$

which is a beta distribution.

[Figure: binomial likelihoods p(y | θ, n = 5) for θ = 0.05, 0.2, 0.35, 0.5; the uniform prior p(θ | y = 0, n = 0) and the beta posterior p(θ | y = 1, n = 5), plotted over θ from 0 to 1.]

Today: Inference with more complex dependencies

• How do we represent (model) more complex probabilistic relationships?
• How do we use these models to draw inferences?
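As a sketch of the continuous case, the posterior can be approximated on a grid of θ values, with the normalizing integral replaced by the trapezoid rule. The helper names and the grid size are illustrative choices, not from the lecture. Under a uniform prior the exact posterior is Beta(y + 1, n − y + 1), so for y = 1, n = 5 its mode is 0.2 and p(y | n) = 1/(n + 1) = 1/6, which gives something to check the grid against.

```python
from math import comb

def binomial_likelihood(theta, y, n):
    """p(y | theta, n) = C(n, y) * theta**y * (1 - theta)**(n - y)."""
    return comb(n, y) * theta**y * (1.0 - theta) ** (n - y)

def grid_posterior(y, n, num_points=1001):
    """Approximate p(theta | y, n) under a uniform prior on an evenly spaced grid."""
    h = 1.0 / (num_points - 1)
    thetas = [i / (num_points - 1) for i in range(num_points)]
    # Uniform prior: p(theta | n) = 1 on [0, 1], so likelihood * prior = likelihood
    unnormalized = [binomial_likelihood(t, y, n) for t in thetas]
    # Trapezoid rule for the normalizing constant p(y | n) = ∫ p(y | θ, n) p(θ | n) dθ
    evidence = h * (sum(unnormalized) - 0.5 * (unnormalized[0] + unnormalized[-1]))
    density = [u / evidence for u in unnormalized]
    return thetas, density, evidence

thetas, density, evidence = grid_posterior(y=1, n=5)
mode = thetas[max(range(len(density)), key=density.__getitem__)]
print(round(evidence, 4))  # 0.1667, close to the exact 1/(n + 1) = 1/6
print(round(mode, 3))      # 0.2, the mode y/n of Beta(2, 5)
```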

Probabilistic reasoning

• Suppose we go to my house and see that the door is open.
  - What's the cause? Is it a burglar? Should we go in? Call the police?
  - Then again, it could just be my wife. Maybe she came home early.
• How should we represent these relationships?

Belief networks

• In belief networks, causal relationships are represented in directed acyclic graphs.
• Arrows indicate causal relationships between the nodes.

[Figure: belief network with arrows wife → open door and burglar → open door.]
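The "directed acyclic" requirement can be made concrete with a small sketch: store each node's parents, then order the nodes so that every parent comes before its children (Kahn's algorithm). The dictionary encoding and the `topological_order` helper are assumptions for illustration; if the graph has a cycle, no such ordering exists, which is how the sketch rejects an invalid network.

```python
from collections import deque

def topological_order(parents):
    """Return the nodes so that each one appears after all of its parents.

    `parents` maps every node to a list of its parent nodes.
    Raises ValueError if the graph has a directed cycle.
    """
    children = {node: [] for node in parents}
    indegree = {node: len(ps) for node, ps in parents.items()}
    for node, ps in parents.items():
        for p in ps:
            children[p].append(node)
    queue = deque(node for node, d in indegree.items() if d == 0)
    order = []
    while queue:
        node = queue.popleft()
        order.append(node)
        for child in children[node]:
            indegree[child] -= 1
            if indegree[child] == 0:
                queue.append(child)
    if len(order) != len(parents):
        raise ValueError("graph contains a directed cycle")
    return order

door_network = {"wife": [], "burglar": [], "open door": ["wife", "burglar"]}
print(topological_order(door_network))  # ['wife', 'burglar', 'open door']
```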

Types of probabilistic relationships

• How do we represent these relationships?
  - Direct cause (A → B): specified by P(B|A).
  - Indirect cause (A → B → C): specified by P(B|A) and P(C|B). C is independent of A given B.
  - Common cause (B ← A → C): specified by P(B|A) and P(C|A). Are B and C independent?
  - Common effect (A → C ← B): specified by P(C|A,B). Are A and B independent?

Belief networks (continued)

[Figure: belief network with arrows wife → open door and burglar → open door.]

• How can we determine what is happening before we go in? We need more information. What else can we observe?
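The independence questions on this slide can be checked numerically by enumerating a three-variable joint distribution. The CPT values below are hypothetical, chosen only for illustration; what matters is which conditional probabilities change when more is observed and which do not.

```python
from itertools import product

def bern(p, x):
    """P(X = x) for a binary variable with P(X = 1) = p."""
    return p if x == 1 else 1.0 - p

# Hypothetical CPTs for three structures; each joint is a function of (a, b, c).
def indirect_cause(a, b, c):   # A -> B -> C
    return bern(0.3, a) * bern(0.9 if a else 0.2, b) * bern(0.7 if b else 0.1, c)

def common_cause(a, b, c):     # B <- A -> C
    return bern(0.3, a) * bern(0.9 if a else 0.2, b) * bern(0.8 if a else 0.1, c)

def common_effect(a, b, c):    # A -> C <- B
    return bern(0.3, a) * bern(0.4, b) * bern(0.9 if (a or b) else 0.05, c)

def prob(joint, query, evidence):
    """P(query | evidence); both are dicts mapping 'a', 'b', 'c' to 0 or 1."""
    num = den = 0.0
    for a, b, c in product((0, 1), repeat=3):
        x = {"a": a, "b": b, "c": c}
        if all(x[k] == v for k, v in evidence.items()):
            p = joint(a, b, c)
            den += p
            if all(x[k] == v for k, v in query.items()):
                num += p
    return num / den

def show(label, p1, p2):
    print(f"{label}: {p1:.2f} vs {p2:.2f}")

# Indirect cause: C depends on A, but not once B is known.
show("P(c|a) vs P(c|~a)", prob(indirect_cause, {"c": 1}, {"a": 1}),
     prob(indirect_cause, {"c": 1}, {"a": 0}))                    # 0.64 vs 0.22
show("P(c|a,b) vs P(c|~a,b)", prob(indirect_cause, {"c": 1}, {"a": 1, "b": 1}),
     prob(indirect_cause, {"c": 1}, {"a": 0, "b": 1}))            # 0.70 vs 0.70

# Common cause: B and C are dependent, but independent given A.
show("P(c|b) vs P(c|~b)", prob(common_cause, {"c": 1}, {"b": 1}),
     prob(common_cause, {"c": 1}, {"b": 0}))                      # 0.56 vs 0.14
show("P(c|a,b) vs P(c|a,~b)", prob(common_cause, {"c": 1}, {"a": 1, "b": 1}),
     prob(common_cause, {"c": 1}, {"a": 1, "b": 0}))              # 0.80 vs 0.80

# Common effect: A and B are independent, but become dependent once C is observed.
show("P(a|b) vs P(a|~b)", prob(common_effect, {"a": 1}, {"b": 1}),
     prob(common_effect, {"a": 1}, {"b": 0}))                     # 0.30 vs 0.30
show("P(a|c) vs P(a|c,b)", prob(common_effect, {"a": 1}, {"c": 1}),
     prob(common_effect, {"a": 1}, {"c": 1, "b": 1}))             # 0.50 vs 0.30
```

The last line is a small instance of "explaining away": once the effect C is observed, learning that B is true lowers the probability of the other cause A.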

Explaining away

• Suppose we notice that the car is in the garage.
• Now we infer that it's probably my wife, and not a burglar.
• This fact "explains away" the hypothesis of a burglar.
• We could also notice that the door was damaged, in which case we reach the opposite conclusion.

[Figure: belief network with arrows wife → car in garage, wife → open door, burglar → open door, and burglar → damaged door.]

Note that there is no direct causal link between "burglar" and "car in garage". Yet seeing the car changes our beliefs about the burglar.

How do we make this inference process more precise? Let's start by writing down the conditional probabilities.

Defining the belief network

• Each link in the graph represents a conditional relationship between nodes.
• To compute the inference, we must specify the conditional probabilities.
• Let's start with the open door. What do we specify?

| W | B | P(O \| W, B) |
|---|---|---|
| F | F | 0.01 |
| F | T | 0.25 |
| T | F | 0.05 |
| T | T | 0.75 |

• Check: does this column have to sum to one? No! Only the full joint distribution does. This is a conditional distribution. But note that P(¬O|W,B) = 1 − P(O|W,B).
• What else do we need to specify? The prior probabilities.

[Figure: belief network over wife, burglar, car in garage, open door, and damaged door.]
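A short sketch of the point about columns and rows, using the P(O|W,B) values from the table. Encoding the table as a Python dict keyed by (W, B) is an illustrative choice.

```python
# P(O = true | W, B) from the slide, keyed by (W, B)
p_open = {
    (False, False): 0.01,
    (False, True): 0.25,
    (True, False): 0.05,
    (True, True): 0.75,
}
# For each parent setting, O is either open or not, so P(¬O | W, B) = 1 - P(O | W, B)
p_closed = {wb: 1.0 - p for wb, p in p_open.items()}

for wb in p_open:
    assert abs(p_open[wb] + p_closed[wb] - 1.0) < 1e-12  # each row sums to one

print(round(sum(p_open.values()), 2))  # 1.06: the column itself need not sum to one
```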

Defining the belief network (continued)

• What else do we need to specify? The prior probabilities of the nodes that have no parents:

| P(W) | P(B) |
|---|---|
| 0.05 | 0.001 |

Defining the belief network (continued)

• Finally, we specify the remaining conditionals:

| W | P(C \| W) |
|---|---|
| F | 0.01 |
| T | 0.95 |

| B | P(D \| B) |
|---|---|
| F | 0.001 |
| T | 0.5 |

[Figure: belief network with arrows wife → car in garage, wife → open door, burglar → open door, and burglar → damaged door.]

Now what?
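Counting the numbers the slides ask us to specify shows how compact this is: each Boolean node needs one value of P(node = true | parents) per setting of its parents, whereas an explicit joint table over five Boolean variables needs one value per assignment, minus one for normalization. The dictionary encoding below is hypothetical.

```python
# Parents of each Boolean node in the lecture's network
parents = {
    "wife": [],
    "burglar": [],
    "car in garage": ["wife"],
    "open door": ["wife", "burglar"],
    "damaged door": ["burglar"],
}
# One number P(X = true | parent values) per configuration of a node's parents
num_cpt_params = sum(2 ** len(ps) for ps in parents.values())
# Full joint table: one number per assignment, minus one because they sum to one
num_joint_params = 2 ** len(parents) - 1
print(num_cpt_params, num_joint_params)  # 10 31
```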

Calculating probabilities using the joint distribution

• What is the probability that the door is open, it is my wife and not a burglar, we see the car in the garage, and the door is not damaged?
• Mathematically, we want to compute P(o, w, ¬b, c, ¬d).
• We can just repeatedly apply the rule relating joint and conditional probabilities,
  - P(x, y) = P(x | y) P(y),
  and drop conditioning variables the graph makes irrelevant: each node is independent of its non-descendants given its parents.

$$
\begin{aligned}
P(o, w, \neg b, c, \neg d) &= P(o \mid w, \neg b, c, \neg d)\,P(w, \neg b, c, \neg d) \\
&= P(o \mid w, \neg b)\,P(w, \neg b, c, \neg d) \\
&= P(o \mid w, \neg b)\,P(c \mid w, \neg b, \neg d)\,P(w, \neg b, \neg d) \\
&= P(o \mid w, \neg b)\,P(c \mid w)\,P(w, \neg b, \neg d) \\
&= P(o \mid w, \neg b)\,P(c \mid w)\,P(\neg d \mid w, \neg b)\,P(w, \neg b) \\
&= P(o \mid w, \neg b)\,P(c \mid w)\,P(\neg d \mid \neg b)\,P(w, \neg b) \\
&= P(o \mid w, \neg b)\,P(c \mid w)\,P(\neg d \mid \neg b)\,P(w)\,P(\neg b)
\end{aligned}
$$

[Figure: belief network over wife, burglar, car in garage, open door, and damaged door.]
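A sketch that multiplies the network's conditional probabilities (the values from the tables on the preceding slides) to obtain the full joint, checks that its 32 entries sum to one, and evaluates the query above. The helper names `bern` and `joint` are hypothetical.

```python
from itertools import product

P_W = 0.05                       # P(W = true)
P_B = 0.001                      # P(B = true)
P_O = {(False, False): 0.01, (False, True): 0.25,   # P(O = true | W, B)
       (True, False): 0.05, (True, True): 0.75}
P_C = {False: 0.01, True: 0.95}  # P(C = true | W)
P_D = {False: 0.001, True: 0.5}  # P(D = true | B)

def bern(p_true, value):
    """Probability of a Boolean value given P(value = True) = p_true."""
    return p_true if value else 1.0 - p_true

def joint(o, w, b, c, d):
    """P(o, w, b, c, d) = P(o|w,b) P(c|w) P(d|b) P(w) P(b)."""
    return (bern(P_O[(w, b)], o) * bern(P_C[w], c) * bern(P_D[b], d)
            * bern(P_W, w) * bern(P_B, b))

# The 2**5 = 32 entries of the joint defined this way sum to one.
total = sum(joint(*values) for values in product((False, True), repeat=5))
print(round(total, 10))                                  # 1.0

# P(o, w, ¬b, c, ¬d): door open, wife, no burglar, car in garage, door not damaged
print(round(joint(True, True, False, True, False), 5))   # 0.00237
```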

Calculating probabilities using the joint distribution (continued)

$$
P(o, w, \neg b, c, \neg d) = P(o \mid w, \neg b)\,P(c \mid w)\,P(\neg d \mid \neg b)\,P(w)\,P(\neg b) = 0.05 \times 0.95 \times 0.999 \times 0.05 \times 0.999 \approx 0.0024
$$

• This is essentially the probability that my wife is home and leaves the door open.

Calculating probabilities in a general Bayesian belief network

[Figure: directed graph over nodes A, B, C, D, E with arrows A → C, A → E, C → B, C → D, C → E, E → D.]

• Note that by specifying all the conditional probabilities, we have also specified the joint probability. For the directed graph above:

$$
P(A, B, C, D, E) = P(A)\,P(B \mid C)\,P(C \mid A)\,P(D \mid C, E)\,P(E \mid A, C)
$$

• The general expression is:

$$
P(x_1, \ldots, x_n) \equiv P(X_1 = x_1 \wedge \ldots \wedge X_n = x_n) = \prod_{i=1}^{n} P(x_i \mid \mathrm{parents}(X_i))
$$

  - With this we can calculate (in principle) any joint probability.
  - This implies that we can also calculate any conditional probability.
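A minimal sketch of inference by enumeration: the joint is computed with the general product formula, and a conditional probability is obtained by summing the joint over the unobserved variables and normalizing. The `NETWORK` encoding and the function names are assumptions for illustration; the numbers are the lecture's conditional probabilities. The output reproduces the explaining-away pattern: seeing the car lowers the probability of a burglar, while a damaged door raises it sharply.

```python
from itertools import product

# Hypothetical encoding: node -> (parent names, P(node = True | parent values))
NETWORK = {
    "W": ((), {(): 0.05}),
    "B": ((), {(): 0.001}),
    "O": (("W", "B"), {(False, False): 0.01, (False, True): 0.25,
                       (True, False): 0.05, (True, True): 0.75}),
    "C": (("W",), {(False,): 0.01, (True,): 0.95}),
    "D": (("B",), {(False,): 0.001, (True,): 0.5}),
}

def joint_prob(assignment):
    """P(x_1, ..., x_n) = product over i of P(x_i | parents(X_i))."""
    p = 1.0
    for node, (parents, table) in NETWORK.items():
        p_true = table[tuple(assignment[q] for q in parents)]
        p *= p_true if assignment[node] else 1.0 - p_true
    return p

def query(var, evidence):
    """P(var = True | evidence), summing the joint over all unobserved variables."""
    hidden = [n for n in NETWORK if n != var and n not in evidence]
    totals = {True: 0.0, False: 0.0}
    for value in (True, False):
        for combo in product((True, False), repeat=len(hidden)):
            assignment = {**dict(zip(hidden, combo)), **evidence, var: value}
            totals[value] += joint_prob(assignment)
    return totals[True] / (totals[True] + totals[False])

print(round(query("B", {"O": True}), 4))             # 0.0224  open door only
print(round(query("B", {"O": True, "C": True}), 4))  # 0.0152  plus car in garage
print(round(query("B", {"O": True, "D": True}), 4))  # 0.9198  plus damaged door
print(round(query("W", {"O": True, "C": True}), 4))  # 0.9612  the wife explains it
```

Enumeration sums over every assignment of the hidden variables, so its cost grows exponentially with their number; it is meant here only to make the "in principle" claim concrete.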

