
Austere Flash Caching with Deduplication and Compression

Qiuping Wang*, Jinhong Li*, Wen Xia#, Erik Kruus^, Biplob Debnath^, Patrick P. C. Lee*
* The Chinese University of Hong Kong (CUHK)
# Harbin Institute of Technology, Shenzhen
^ NEC Labs


Flash Caching
• Flash-based solid-state drives (SSDs)
  ✓ Faster than hard disk drives (HDDs)
  ✓ Better reliability
  ✗ Limited capacity and endurance
• Flash caching
  - Accelerates HDD storage by caching frequently accessed blocks in flash

Deduplication and Compression
• Goal: reduce storage and I/O overheads
• Deduplication (coarse-grained)
  - Works in units of chunks (fixed- or variable-size)
  - Computes a fingerprint (FP, e.g., SHA-1) from each chunk's content
  - Maps logical chunks with identical FPs to a single physical copy
• Compression (fine-grained)
  - Works in units of bytes
  - Transforms each chunk into fewer bytes
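To make the two techniques concrete, here is a minimal Python sketch (not the authors' code) that deduplicates fixed-size chunks by their SHA-1 fingerprints and compresses only the unique ones with zlib. The 4-KiB chunk size and the names `chunk_fixed`, `dedup_and_compress`, and `restore` are hypothetical; zlib simply stands in for whatever compressor a real cache would use.

```python
from __future__ import annotations

import hashlib
import zlib

CHUNK_SIZE = 4096  # hypothetical fixed chunk size for this sketch


def chunk_fixed(data: bytes, size: int = CHUNK_SIZE) -> list[bytes]:
    """Split data into fixed-size chunks (the last one may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def dedup_and_compress(data: bytes) -> tuple[list[bytes], dict[bytes, bytes]]:
    """Return (logical chunk -> FP references, FP -> one compressed physical copy)."""
    store: dict[bytes, bytes] = {}  # FP -> compressed chunk, stored once
    refs: list[bytes] = []          # i-th logical chunk -> its FP
    for chunk in chunk_fixed(data):
        fp = hashlib.sha1(chunk).digest()  # 20-byte fingerprint of the content
        if fp not in store:                # only unique content is compressed and stored
            store[fp] = zlib.compress(chunk)
        refs.append(fp)
    return refs, store


def restore(refs: list[bytes], store: dict[bytes, bytes]) -> bytes:
    """Rebuild the original byte stream from references and unique copies."""
    return b"".join(zlib.decompress(store[fp]) for fp in refs)
```

Deduplication removes whole repeated chunks, and compression then shrinks each remaining unique chunk, so the two reductions compose.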

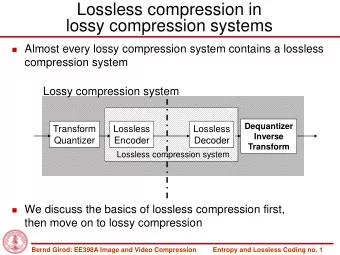

Deduplicated and Compressed Flash Cache
• LBA: chunk address in the HDD; FP: chunk fingerprint
• CA: chunk address in the flash cache (after deduplication and compression)
[Figure: read/write requests are split into fixed-size HDD chunks; in RAM, the LBA-index maps LBA → FP and the FP-index maps FP → (CA, length), alongside a dirty list; after deduplication and compression, the SSD stores variable-size compressed chunks.]
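The two-level mapping can be sketched as follows, assuming Python dictionaries stand in for the RAM indexes and that flash space is handed out append-style. The class `DedupCompressedCacheIndex`, its methods, and the allocation scheme are hypothetical. A duplicate write adds only an LBA-index entry, whereas a new fingerprint also consumes flash space equal to its compressed length.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DedupCompressedCacheIndex:
    """Hypothetical in-memory indexes of a deduplicated, compressed flash cache."""
    lba_index: dict[int, bytes] = field(default_factory=dict)             # LBA -> FP
    fp_index: dict[bytes, tuple[int, int]] = field(default_factory=dict)  # FP -> (CA, length)
    dirty: set[int] = field(default_factory=set)  # LBAs not yet written back to the HDD
    next_ca: int = 0  # next free byte offset in flash (append-style allocation, an assumption)

    def write(self, lba: int, fp: bytes, compressed_len: int) -> int:
        """Record a write and return the CA holding the chunk's content."""
        self.lba_index[lba] = fp
        self.dirty.add(lba)
        if fp not in self.fp_index:  # new unique chunk: allocate flash space
            self.fp_index[fp] = (self.next_ca, compressed_len)
            self.next_ca += compressed_len
        return self.fp_index[fp][0]

    def lookup(self, lba: int) -> tuple[int, int] | None:
        """Resolve LBA -> FP -> (CA, length); None on a cache miss."""
        fp = self.lba_index.get(lba)
        return None if fp is None else self.fp_index.get(fp)
```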

Memory Amplification for Indexing
• Example: 512-GiB flash cache with a 4-TiB HDD working set
• Conventional flash cache
  - Memory overhead: 256 MiB, for LBA (8 B) → CA (8 B)
• Deduplicated and compressed flash cache
  - LBA-index: 3.5 GiB, for LBA (8 B) → FP (20 B)
  - FP-index: 512 MiB, for FP (20 B) → CA (8 B) + length (4 B)
  - Memory amplification: 16x, since (3.5 GiB + 512 MiB) / 256 MiB = 4 GiB / 256 MiB
  - The amplification can be even higher
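The slide's numbers can be reproduced with simple arithmetic. The sketch below assumes 32-KiB chunks (the chunk size used for the latency measurements later in the deck), one LBA-index entry per chunk of the HDD working set, and one FP-index entry per 32 KiB of flash; these are inferences that make the slide's figures consistent, not parameters stated on the slide.

```python
MiB = 1 << 20
GiB = 1 << 30
TiB = 1 << 40

CHUNK = 32 * 1024       # assumed chunk size (32 KiB)
CACHE = 512 * GiB       # flash cache capacity
WORKING_SET = 4 * TiB   # HDD working set

cache_chunks = CACHE // CHUNK      # chunk-sized units of flash
ws_chunks = WORKING_SET // CHUNK   # chunks in the HDD working set

conventional = cache_chunks * (8 + 8)   # LBA (8 B) -> CA (8 B)
lba_index = ws_chunks * (8 + 20)        # LBA (8 B) -> FP (20 B)
fp_index = cache_chunks * (20 + 8 + 4)  # FP (20 B) -> CA (8 B) + length (4 B)

amplification = (lba_index + fp_index) / conventional
print(conventional / MiB, lba_index / GiB, fp_index / MiB, amplification)
# prints: 256.0 3.5 512.0 16.0
```

If compression lets more than one chunk fit into each 32 KiB of flash, the FP-index needs more entries than assumed here, which is one way the amplification can exceed 16x.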

Related Work
• Nitro [Li et al., ATC’14]
  - First work to study deduplication and compression in flash caching
  - Manages compressed data in Write-Evict Units (WEUs)
• CacheDedup [Li et al., FAST’16]
  - Proposes dedup-aware algorithms for flash caching to improve hit ratios
• Both suffer from memory amplification!

Our Contribution
• AustereCache: a deduplicated and compressed flash cache with austere, memory-efficient management
  - Bucketization
    ◦ No overhead for address mappings
    ◦ Hashes chunks to storage locations
  - Fixed-size compressed data management
    ◦ No in-memory tracking of chunks' compressed lengths
  - Bucket-based cache replacement
    ◦ Cache replacement per bucket
    ◦ Count-Min Sketch [Cormode 2005] for low-memory reference counting
• Extensive trace-driven evaluation and prototype experiments

Bucketization
• Main idea
  - Use hashing to partition the index and cache space
    ◦ (RAM) LBA-index and FP-index
    ◦ (SSD) metadata region and data region
  - Store partial keys (prefixes) in memory
    ◦ Memory savings
• Layout
  - Hash entries into equal-sized buckets
  - Each bucket has a fixed number of slots, each holding a mapping (in an index) or data (in flash)
[Figure: an index or region divided into buckets, each divided into slots]
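Below is a minimal Python sketch of the bucketized layout, assuming a hash of the key selects the bucket and that a slot's flash location is computed directly from its bucket and slot numbers. `NUM_BUCKETS`, `SLOTS_PER_BUCKET`, `SLOT_SIZE`, and the SHA-1-based `key_hash` are hypothetical parameters, not values taken from AustereCache.

```python
import hashlib

NUM_BUCKETS = 1024      # hypothetical number of buckets
SLOTS_PER_BUCKET = 32   # hypothetical fixed number of slots per bucket
SLOT_SIZE = 8 * 1024    # hypothetical slot size in the SSD data region (bytes)


def key_hash(key: bytes) -> int:
    """64-bit hash of a key (an LBA or a fingerprint)."""
    return int.from_bytes(hashlib.sha1(key).digest()[:8], "little")


def bucket_of(key: bytes) -> int:
    """Hashing partitions keys across equal-sized buckets."""
    return key_hash(key) % NUM_BUCKETS


def flash_offset(bucket: int, slot: int) -> int:
    """A slot's flash location follows from its (bucket, slot) position,
    so no per-chunk address mapping needs to be kept in memory."""
    assert 0 <= bucket < NUM_BUCKETS and 0 <= slot < SLOTS_PER_BUCKET
    return (bucket * SLOTS_PER_BUCKET + slot) * SLOT_SIZE
```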

(RAM) LBA-index and FP-index
[Figure: each LBA-index slot holds an LBA-hash prefix, an FP hash, and a flag; each FP-index slot holds an FP-hash prefix and a flag]
• Locate buckets with hash suffixes
• Match slots with hash prefixes
• Each slot in the FP-index corresponds to a storage location in flash
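The suffix/prefix split might look like this in Python. It assumes a 64-bit key hash whose low `BUCKET_BITS` bits (the suffix) pick the bucket and whose high `PREFIX_BITS` bits (the prefix) are stored in a slot next to a valid flag. All names and bit widths are hypothetical, and the FP hash that LBA-index slots additionally carry is left out.

```python
from __future__ import annotations

BUCKET_BITS = 10  # hypothetical: 2**10 buckets, selected by the hash suffix (low bits)
PREFIX_BITS = 16  # hypothetical: 16-bit prefix (high bits) stored in each slot
HASH_BITS = 64


def split_hash(h: int) -> tuple[int, int]:
    """Return (bucket index from the suffix, prefix kept in memory)."""
    suffix = h & ((1 << BUCKET_BITS) - 1)
    prefix = h >> (HASH_BITS - PREFIX_BITS)
    return suffix, prefix


class BucketIndex:
    """Bucketized index sketch: each slot stores (prefix, valid flag)."""

    def __init__(self, slots_per_bucket: int = 8):
        self.buckets = [[(0, False)] * slots_per_bucket
                        for _ in range(1 << BUCKET_BITS)]

    def insert(self, h: int) -> tuple[int, int] | None:
        b, p = split_hash(h)
        for s, (_, valid) in enumerate(self.buckets[b]):
            if not valid:
                self.buckets[b][s] = (p, True)
                return b, s
        return None  # bucket full: cache replacement would choose a victim here

    def lookup(self, h: int) -> tuple[int, int] | None:
        b, p = split_hash(h)
        for s, (prefix, valid) in enumerate(self.buckets[b]):
            if valid and prefix == p:
                return b, s  # only a candidate: different keys may share a prefix
        return None
```

Because only a short prefix is kept in memory, two different keys can match the same slot, so a prefix hit must still be confirmed against the full key.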

(SSD) Metadata and Data Regions
[Figure: the SSD metadata region and data region are both organized into buckets of slots; a metadata slot holds an FP and a list of LBAs, and a data slot holds a chunk]
• Each slot in the metadata region holds the full FP and the list of full LBAs
  - For validation against prefix collisions
• Cached chunks are stored in the data region
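A prefix match in RAM is only a candidate, so a lookup has to confirm it against the full keys kept on flash. The Python sketch below models the metadata region as an in-memory list; `MetadataSlot`, `find_fp_slot`, and `lba_maps_to` are hypothetical names chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class MetadataSlot:
    """Hypothetical metadata-region record for one slot: full FP and full LBAs."""
    fp: bytes = b""
    lbas: list[int] = field(default_factory=list)


def find_fp_slot(prefixes: list[int | None], metadata: list[MetadataSlot],
                 fp_prefix: int, fp: bytes) -> int | None:
    """Match the in-memory prefix first, then confirm with the full FP."""
    for slot, p in enumerate(prefixes):
        if p == fp_prefix and metadata[slot].fp == fp:
            return slot
    return None  # no candidate, or only prefix collisions


def lba_maps_to(metadata: MetadataSlot, lba: int) -> bool:
    """Confirm that a prefix-matched LBA really references this slot's chunk."""
    return lba in metadata.lbas
```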

Fixed-size Compressed Data Management
• Main idea
  - Slice and pad a compressed chunk into fixed-size subchunks
  - Example: a 32-KiB chunk compresses to 20 KiB, which is sliced and padded into three 8-KiB subchunks
• Advantages
  - Compatible with bucketization: each subchunk is stored in one slot
  - Allows per-chunk management for cache replacement
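Slicing and padding can be sketched in a few lines of Python, assuming zero bytes as padding and 8-KiB subchunks as in the slide's example. `slice_and_pad`, `reassemble`, and `SUBCHUNK_SIZE` are hypothetical names. The compressed length still has to be stored somewhere so that reads can strip the padding.

```python
from __future__ import annotations

import math
import zlib

SUBCHUNK_SIZE = 8 * 1024  # 8-KiB subchunks, as in the slide's example


def slice_and_pad(compressed: bytes, sub: int = SUBCHUNK_SIZE) -> list[bytes]:
    """Cut a compressed chunk into fixed-size subchunks, zero-padding the last one."""
    n = max(1, math.ceil(len(compressed) / sub))  # number of slots the chunk needs
    padded = compressed.ljust(n * sub, b"\0")
    return [padded[i * sub:(i + 1) * sub] for i in range(n)]


def reassemble(subchunks: list[bytes], length: int) -> bytes:
    """Concatenate subchunks and strip the padding using the stored compressed length."""
    return b"".join(subchunks)[:length]


if __name__ == "__main__":
    chunk = bytes(range(256)) * 128  # a 32-KiB chunk
    compressed = zlib.compress(chunk)
    parts = slice_and_pad(compressed)
    assert zlib.decompress(reassemble(parts, len(compressed))) == chunk
    print(f"{len(compressed)} B compressed -> {len(parts)} subchunk(s)")
```

For the slide's 20-KiB compressed chunk, `slice_and_pad` returns three 8-KiB subchunks, the last carrying 4 KiB of padding; that padding is the flash space traded for fixed-size management.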

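Fixed-size Compressed Data Management
• Layout
  - One chunk occupies multiple consecutive slots
  - No additional memory for the compressed length: it is stored with the FP and the list of LBAs in the SSD metadata region
[Figure: in RAM, an FP-index slot holds an FP-hash prefix and a flag; on SSD, the metadata region stores the FP, list of LBAs, and length, and the data region stores the chunk's subchunks]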
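One possible way to place a chunk into consecutive slots of a bucket is a first-fit scan over the slots' occupancy flags, sketched below in Python. The occupancy list, `allocate_consecutive`, and `store_chunk` are hypothetical and not taken from AustereCache; the sketch keeps no compressed length in memory, assuming it is read from the metadata region when the chunk is accessed.

```python
from __future__ import annotations


def allocate_consecutive(occupied: list[bool], n: int) -> int | None:
    """Return the first slot index that starts a run of n free slots, or None."""
    if n < 1:
        raise ValueError("a chunk needs at least one subchunk")
    run = 0
    for i, used in enumerate(occupied):
        run = 0 if used else run + 1  # length of the current free run ending at slot i
        if run == n:
            return i - n + 1
    return None  # no room: the bucket's replacement policy would evict first


def store_chunk(occupied: list[bool], num_subchunks: int) -> int | None:
    """Mark num_subchunks consecutive slots as used; return the first slot."""
    start = allocate_consecutive(occupied, num_subchunks)
    if start is not None:
        for s in range(start, start + num_subchunks):
            occupied[s] = True
    return start
```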

Bucket-based Cache Replacement
• Main idea
  - Perform cache replacement in each bucket independently
  - Eliminate priority-based structures for cache decisions
• Combine recency and deduplication
  - LBA-index: least-recently-used policy
  - FP-index: least-referenced policy
  - Weighted reference counting based on the recency of the referencing LBAs
[Figure: LBA-index slots ordered from recent to old; FP-index slots, each with a reference counter]
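The per-bucket policies can be sketched in Python as follows, assuming each LBA-index bucket is a small list kept in recency order and each FP-index bucket exposes one reference count per slot. `LBABucket` and `fp_victim` are hypothetical names; the slide's recency-based weighting of reference counts is not modeled, so plain counts are used.

```python
from __future__ import annotations


class LBABucket:
    """One LBA-index bucket kept in recency order (index 0 = most recent)."""

    def __init__(self, slots: int):
        self.slots = slots
        self.entries: list[int] = []  # LBA-hash prefixes, most recent first

    def access(self, lba_prefix: int) -> int | None:
        """Touch an entry; return the evicted prefix if the bucket overflowed."""
        if lba_prefix in self.entries:
            self.entries.remove(lba_prefix)
        self.entries.insert(0, lba_prefix)
        if len(self.entries) > self.slots:
            return self.entries.pop()  # LRU victim within this bucket only
        return None


def fp_victim(ref_counts: list[int]) -> int:
    """Least-referenced policy: the FP-index slot with the smallest count."""
    return min(range(len(ref_counts)), key=ref_counts.__getitem__)
```

Since each bucket holds only a small, fixed number of slots, these scans stay cheap and no global LRU list or priority queue has to be kept in memory.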

Sketch-based Reference Counting
• Complete reference counting has high memory overhead
  - One counter for every FP-hash
• Count-Min Sketch [Cormode 2005]
  - Fixed memory usage with provable error bounds
  - h rows of w counters; each FP-hash increments (+1) the counter indexed by (i, H_i(FP-hash)) in every row i
  - Count = minimum of those h counters
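A Count-Min Sketch with h rows of w counters can be written as below. This is the textbook structure from Cormode and Muthukrishnan, not AustereCache's implementation; the row hashes H_i are derived here by salting SHA-256 with the row number, and the defaults h = 4 and w = 1024 are arbitrary.

```python
import hashlib


class CountMinSketch:
    """Count-Min Sketch: h rows of w counters, fixed memory regardless of key count."""

    def __init__(self, h: int = 4, w: int = 1024):
        self.h, self.w = h, w
        self.table = [[0] * w for _ in range(h)]

    def _index(self, i: int, key: bytes) -> int:
        # H_i: row-specific hash obtained by prefixing the key with the row number
        digest = hashlib.sha256(i.to_bytes(4, "little") + key).digest()
        return int.from_bytes(digest[:8], "little") % self.w

    def add(self, key: bytes, delta: int = 1) -> None:
        """Increment counter (i, H_i(key)) in every row i."""
        for i in range(self.h):
            self.table[i][self._index(i, key)] += delta

    def estimate(self, key: bytes) -> int:
        # Minimum over rows; with increments only, it never underestimates the true count
        return min(self.table[i][self._index(i, key)] for i in range(self.h))
```

With w = ⌈e/ε⌉ and h = ⌈ln(1/δ)⌉, the estimate exceeds the true count by at most εN with probability at least 1 - δ, where N is the sum of all increments.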

Evaluation
• Implement AustereCache as a user-space block device
  - ~4.5K lines of C++ code in Linux
• Traces
  - FIU traces: WebVM, Homes, Mail
  - Synthetic traces: varying I/O dedup ratio and write-to-read ratio
    ◦ I/O dedup ratio: fraction of duplicate written chunks among all written chunks
• Schemes (-D: deduplication only; -DC: deduplication and compression)
  - AustereCache: AC-D, AC-DC
  - CacheDedup: CD-LRU-D, CD-ARC-D, CD-ARC-DC
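Under one reading of the definition, where a written chunk counts as a duplicate if identical content was written earlier in the trace, the I/O dedup ratio can be computed as below. The function name `io_dedup_ratio` and the use of SHA-1 fingerprints are assumptions for illustration.

```python
from __future__ import annotations

import hashlib


def io_dedup_ratio(written_chunks: list[bytes]) -> float:
    """Fraction of written chunks whose content was already written earlier."""
    seen: set[bytes] = set()
    duplicates = 0
    for chunk in written_chunks:
        fp = hashlib.sha1(chunk).digest()
        if fp in seen:
            duplicates += 1  # identical content seen before: a duplicate write
        else:
            seen.add(fp)     # first occurrence counts as unique
    return duplicates / len(written_chunks) if written_chunks else 0.0
```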

Memory Overhead
[Figure: memory (MiB, log scale) vs. cache capacity (%) for AC-D, AC-DC, CD-LRU-D, CD-ARC-D, and CD-ARC-DC; panels (a) WebVM, (b) Homes, (c) Mail]
• Across all traces, AC-D uses 69.9-94.9% less memory than CD-LRU-D and 70.4-94.7% less than CD-ARC-D.
• AC-DC uses 87.0-97.0% less memory than CD-ARC-DC.

Read Hit Ratios
[Figure: read hit ratio (%) vs. cache capacity (%) for AC-D, AC-DC, CD-LRU-D, CD-ARC-D, and CD-ARC-DC; panels (a) WebVM, (b) Homes, (c) Mail]
• AC-D has up to 39.2% higher read hit ratio than CD-LRU-D, and a read hit ratio similar to CD-ARC-D
• AC-DC has up to 30.7% higher read hit ratio than CD-ARC-DC

Write Reduction Ratios
[Figure: write reduction ratio (%) vs. cache capacity (%) for AC-D, AC-DC, CD-LRU-D, CD-ARC-D, and CD-ARC-DC; panels (a) WebVM, (b) Homes, (c) Mail]
• AC-D is comparable to CD-LRU-D and CD-ARC-D
• AC-DC is slightly lower (by 7.7-14.5%) than CD-ARC-DC
  - Due to padding in fixed-size compressed data management

Throughput
[Figure: throughput (MiB/s) of AC-D, AC-DC, CD-LRU-D, CD-ARC-D, and CD-ARC-DC; (a) vs. I/O dedup ratio (20-80%) at write-to-read ratio 7:3; (b) vs. write-to-read ratio (9:1 to 1:9) at I/O dedup ratio 50%]
• AC-DC has the highest throughput
  - Due to its high write reduction ratio and high read hit ratio
• AC-D has slightly lower throughput than CD-ARC-D
  - AC-D needs to access the metadata region during indexing

CPU Overhead and Multi-threading
[Figure: latency (µs) of a 32-KiB chunk write broken down into fingerprint, compression, lookup, and update, compared with SSD and HDD; throughput (MiB/s) vs. number of threads (1, 2, 4, 6, 8) at 50% and 80% I/O dedup ratios]
• Latency (32-KiB chunk write)
  - HDD: 5,997 µs; SSD: 85 µs
  - AustereCache: 31.2 µs, of which fingerprinting takes 15.5 µs
  - The latency is hidden via multi-threading
• Multi-threading (write-to-read ratio 7:3), throughput improvement
  - 50% I/O dedup ratio: 2.08x
  - 80% I/O dedup ratio: 2.51x
  - A higher I/O dedup ratio implies less I/O to flash → more computation savings via multi-threading

Conclusion
• AustereCache: memory efficiency in deduplicated and compressed flash caching via
  - Bucketization
  - Fixed-size compressed data management
  - Bucket-based cache replacement
• Source code: http://adslab.cse.cuhk.edu.hk/software/austerecache

Thank You! Q & A
