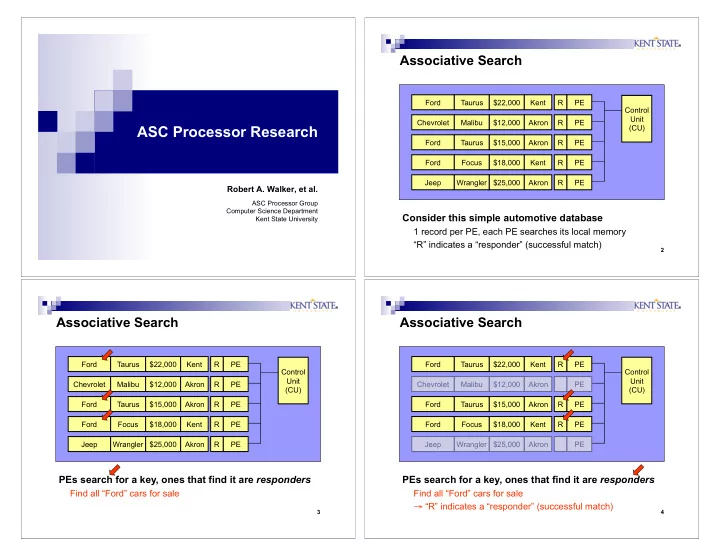

Associative Search Ford Taurus $22,000 Kent R PE Control Unit Chevrolet Malibu $12,000 Akron R PE ASC Processor Research (CU) Ford Taurus $15,000 Akron R PE Ford Focus $18,000 Kent R PE Jeep Wrangler $25,000 Akron R PE Robert A. Walker, et al. ASC Processor Group Computer Science Department Consider this simple automotive database Kent State University 1 record per PE, each PE searches its local memory “R” indicates a “responder” (successful match) 2 Associative Search Associative Search Ford Taurus $22,000 Kent R PE Ford Taurus $22,000 Kent R PE Control Control Unit Unit Chevrolet Malibu $12,000 Akron R PE Chevrolet Malibu $12,000 Akron PE (CU) (CU) Ford Taurus $15,000 Akron R PE Ford Taurus $15,000 Akron R PE Ford Focus $18,000 Kent R PE Ford Focus $18,000 Kent R PE Jeep Wrangler $25,000 Akron R PE Jeep Wrangler $25,000 Akron PE PEs search for a key, ones that find it are responders PEs search for a key, ones that find it are responders Find all “Ford” cars for sale Find all “Ford” cars for sale � “R” indicates a “responder” (successful match) 3 4

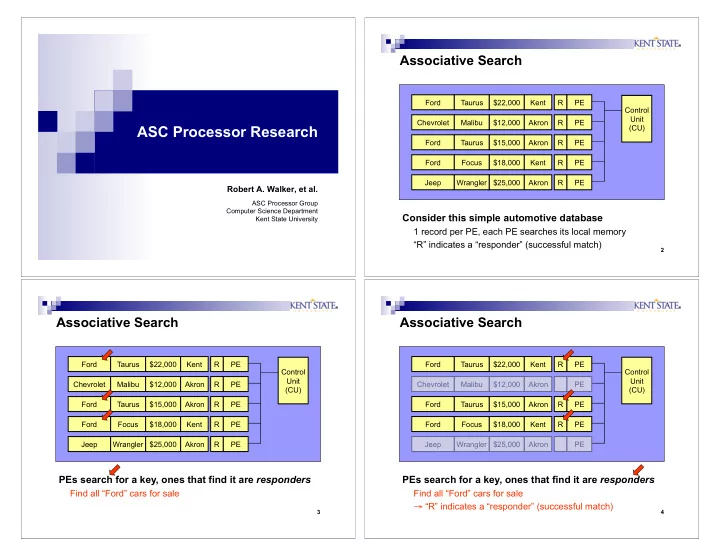

Associative Search Associative Search Ford Taurus $22,000 Kent R PE Ford Taurus $22,000 Kent PE Control Control Unit Unit Chevrolet Malibu $12,000 Akron PE Chevrolet Malibu $12,000 Akron PE (CU) (CU) Ford Taurus $15,000 Akron R PE Ford Taurus $15,000 Akron R PE Ford Focus $18,000 Kent R PE Ford Focus $14,000 Kent PE Jeep Wrangler $25,000 Akron PE Jeep Wrangler $25,000 Akron PE PEs perform a global minimum search PE with the minimum value is now the only responder Find the “Ford” car with the lowest price Find the “Ford” car with the lowest price 5 6 Development of an ASC Processor Associative Memory PE Search � � 2001-02 — First 4-PE prototype w/ associative search, Broadcast responder resolution, max/min search, not implemented Memory PE Broadcast/Reduction Network PE Interconnection Network � � 2003 — Scalable ASC Processor w/50 PEs, implemented on Responder Memory PE APEX 20K1000E Processing � � 2004 — Scalable ASC Processor w/ 1-D and 2-D network, AnyResponders Memory PE demonstrated on VLDC string-matching example & image Control PickOne processing example (edge detection using convolution) Unit Memory PE Global Reduction � � 2005 — Scalable ASC Processor w/ pipelined PEs and reconfigurable network, to be demonstrated Memory PE Maximum/ � � 2005 — Scalable ASC Processor w/ augmented reconfigurable minimum network and row/column broadcast, demonstrated on exact Memory PE and approximate match LCS example Memory PE � � 2006 — MASC Processor � � 2008 — Multithreaded ASC Processor Associative SIMD Array 7 8

Scalable ASC Processor 1-D and 2-D PE Interconnection Network This version of ASC processor supports both a 1-D and 2-D PE interconnection network for those applications that require a network 9 10 ASC Processor’s Pipelined Architecture Edge Detection Using Convolution � � Five single-clock-cycle pipeline stages are split between the SIMD Control Unit (CU) and the PEs � � In the Control Unit � � Instruction Fetch (IF) � � Part of Instruction Decode (ID) � � In the Scalar PE (SPE), in each Parallel PE (PPE) � � Rest of Instruction Decode (ID) � � Execute (EX) � � Memory Access (MEM) � � Data Write Back (WB) 11 12

Pipelined ASC Processor Processing Element (PE) Mask Control Unit (CU) Parallel PE (PPE) Array Comparator Instruction Memory IF/ID Latch Data Memory MEM/WB Latch EX/MEM Latch Data Switch ID/EX Latch Register File Decoder Immediate MUX Data Register File Broadcast Register ID/EX Latch Data � � Comparator implements associative search, pushes EX/MEM Latch ‘1’ onto top of stack for responders, ‘0’ otherwise Data Memory MEM/WB Latch � � Top of mask of ‘0’ disables ID/EX Latch Sequential PE (SPE) 13 14 Reconfigurable PE Network Reconfigurable PE Network � � Our pipelined ASC Processor also has a reconfigurable PE Control Unit (CU) Parallel PE (PPE) Array interconnection network Instruction Memory � � Reconfigurable PE network supports associative computing by IF/ID Latch allowing arbitrary PEs in the PE Array to be connected via � � Linear array (currently implemented), or Decoder Immediate Data � � 2D mesh (shown in the next chapter) without the restriction of physical adjacency Register File Broadcast Register � � Each PE in the PE Array can choose its own connectivity ID/EX Latch Data � � Responders choose to stay in the PE interconnection network, and EX/MEM Latch � � Non-Responders choose to stay out of the PE interconnection network, so that they are bypassed by any inter-PE Data Memory communication MEM/WB Latch Sequential PE (SPE) 15 16

Reconfigurable Network Implementation Overview of LCS Algorithm Register Immediate Register Data Data � � Given two strings, find the LCS common to both File (from SPE) (from CU) strings Left Right Neighbor Neighbor Data Switch � � Example: Top of Mask Stack � � String 1: AGACTGAGGTA � � String 2: ACTGAG Comparator & ID/EX Latch � � AGACTGAGGTA � � Data switch � � - -ACTGAG - - - list of possible alignments � � Passes register, broadcast, and immediate data to the PE � � - -ACTGA - G- - and to its two neighbors � � A- -CTGA - G- - � � Routes data from the PE’s neighbors to its EX stage � � A- -CTGAG - - - � � Reconfigurable network — supports Bypass Mode to � � The time complexity of this algorithm is clearly remove the PE non-responders from the network O(nm) � � Will be needed by MASC Processor 17 18 Overview of LCS Algorithm PE’s Form Coteries A G A C T G A G G T A 0 0 0 0 0 0 0 0 0 0 0 0 A 0 1 1 1 1 1 1 1 1 1 1 1 C 0 1 1 1 2 2 2 2 2 2 2 2 T 0 1 1 1 2 3 3 3 3 3 3 3 G 0 1 2 2 2 3 4 4 4 4 4 4 1 2 3 3 3 4 5 5 5 5 5 A 0 5 x 5 coterie network with switches shown in “arbitrary” G 0 1 2 3 3 3 4 5 6 6 6 6 settings. Shaded areas denotes coterie (the set of PEs Sharing same circuit) 19 20

Reconfigurable 2D Network LCS on Reconfigurable 2D Network � � Key to reconfigurability is the Data Switch inside each PE: A G A C T G A C T G A � � The Data Switch is A 1,1 1,2 1,3 1,4 1,5 1,6 1,7 1,8 1,9 1,10 1,11 N expanded to connect to its four neighbors (N-E-S-W) 0 0 0 0 0 0 0 0 to form a 2D 2,1 2,4 2,8 C Reconfigurable Network � � Data switch has bypass 0 0 0 0 0 0 0 0 3,1 3,5 3,9 W E T mode to allow PE communication to skip non- 0 0 0 0 0 0 0 4,1 4,2 4,6 4,10 G responders, so as to support associative 0 0 0 0 0 0 0 5,1 5,3 5,7 5,11 A computing S 0 0 0 0 0 0 0 0 6,1 6,8 DATA Communication 6,4 C 21 22 MASC Architecture Multiple Instruction stream MASC is an MSIMD TM POOL IS POOL (multiple SIMD) version TM0 TM1 IS0 IS1 IS2 of ASC that supports TM2 multiple Instruction Streams (ISs) Task Allocation Control and In our dynamic MASC Control Signal Instructions Processor, tasks are assigned to available ISs from a common PE PE pool as those ISs PE0 PE1 (n-1) (n) become available Task Manager (TM) and Instruction Stream (IS) Pools in the MASC Processor 23 24

Broadcast/Reduction Bottleneck Instruction Types � � Time to perform a broadcast � � Scalar instructions or reduction increases as � � Execute entirely within the control unit the number of PEs increases � � Broadcast/Parallel instructions � � Even for a moderate number of PEs, this time can � � Execute within the PE array dominate the machine cycle � � Use the broadcast network to transfer instruction and data time � � Reduction instructions � � Pipelining reduces the cycle time but increases the � � Execute within the PE array latency � � Use the broadcast network to transfer instruction and data � � Additional latency causes � � Use the reduction network to combine data from PEs pipeline hazards 25 26 Scalar Pipeline Hazards in a Scalar Pipeline � � Instruction Fetch (IF) � � Instruction Decode (ID) � � Execute (EX) � � Memory Access (M) � � Write Back (W) 27 28

Pipeline Organization Hazards � � Separate paths for each instruction type so instructions only go through stages that they use � � Stalls less often than a unified pipeline organization 29 30 Effect of Hazards on Pipeline Utilization Multithreading � � Pipelining alone cannot eliminate hazards caused by broadcast and reduction latencies � � Solution: use instructions from multiple threads to keep the pipeline full � � Instructions from different threads are independent so they cannot generate stalls due to data dependencies � � As long as there are a sufficient number of threads, it is possible to fill any number of stall cycles 31 32

Recommend

More recommend