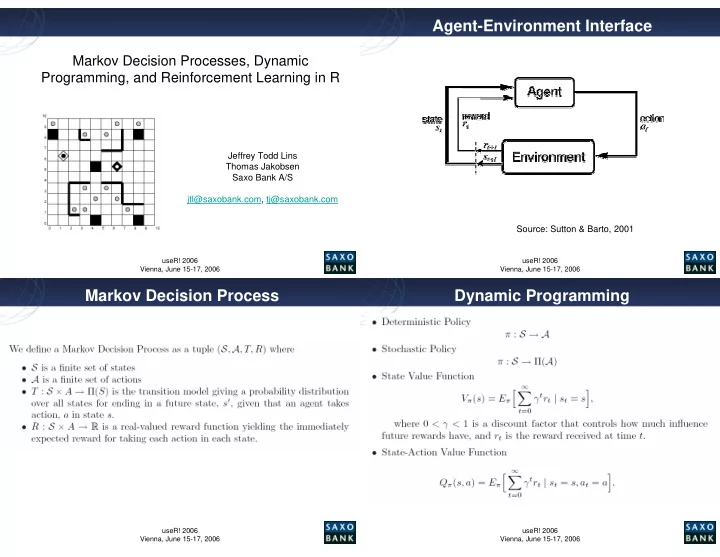

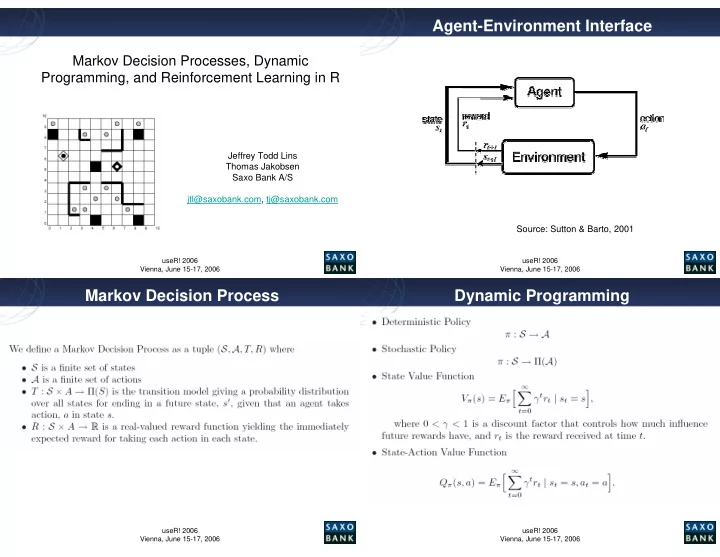

Markov Decision Processes, Dynamic Programming, and Reinforcement Learning in R

Jeffrey Todd Lins, Thomas Jakobsen

Saxo Bank A/S

jtl@saxobank.com, tj@saxobank.com

useR! 2006, Vienna, June 15-17, 2006

Agent-Environment Interface (Source: Sutton & Barto, 2001)

Markov Decision Process

Dynamic Programming

Bellman Equation

Bellman Optimality Equation

Value Iteration

Policy Iteration

Reinforcement Learning

Temporal Difference Learning

Q-Learning

Linear Architectures

Least Squares TD Learning

Examples of RL in Finance

• Performance Functions and Reinforcement Learning for Trading Systems and Portfolios. John Moody, Lizhong Wu, Yuansong Liao & Matthew Saffell. Journal of Forecasting, Volume 17, Pages 441-470, 1998.

• Intraday FX trading: Reinforcement learning vs evolutionary learning. M. A. H. Dempster, T. W. Payne & V. S. Romahi. Working Paper No. 23/01, Judge Institute of Management, University of Cambridge, 2001.

Advantages of RL in R

• Vectorized Programming

• Flexible, Interactive Simulation Environment

• Wide Range of Possibilities for Linear Basis Functions

• Interface to Existing Packages: HMMs, SVMs, GAs, Neural Networks

References

• Richard Sutton and Andrew Barto. Reinforcement Learning: An Introduction. The MIT Press, Cambridge, Massachusetts, 1998.

• Michail G. Lagoudakis and Ronald Parr. "Least-Squares Policy Iteration," Journal of Machine Learning Research, 4, 2003, pp. 1107-1149.
