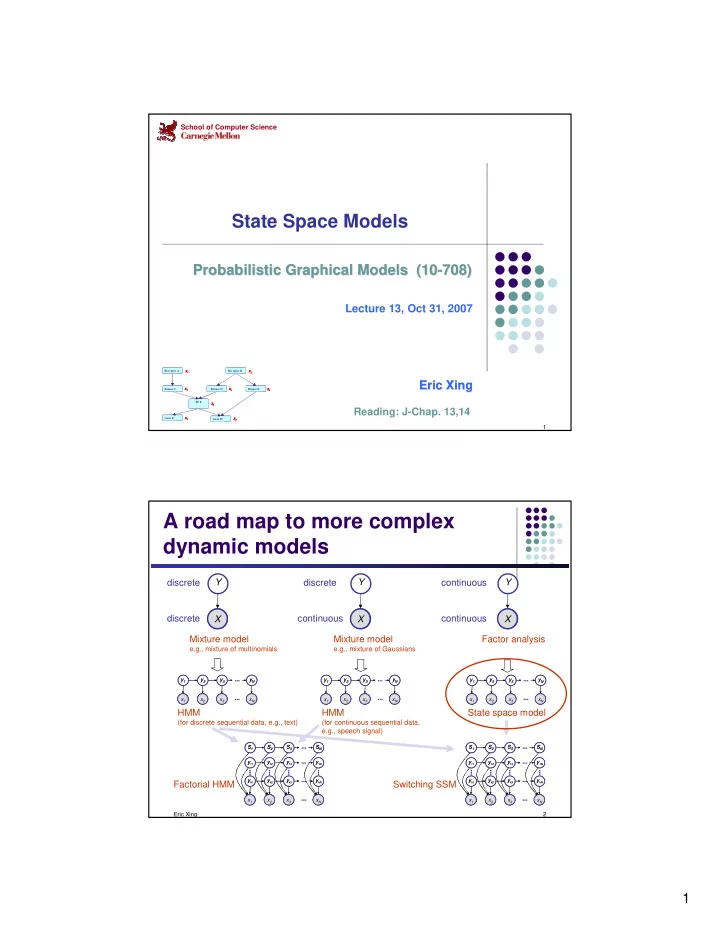

School of Computer Science

State Space Models

Probabilistic Graphical Models (10-708)
Lecture 13, Oct 31, 2007

Eric Xing

Reading: J-Chap. 13, 14

[Figure: example network over X1 to X8: Receptor A (X1), Receptor B (X2), Kinase C (X3), Kinase D (X4), Kinase E (X5), TF F (X6), Gene G (X7), Gene H (X8)]

A road map to more complex dynamic models

- Y discrete, X discrete: mixture model, e.g., mixture of multinomials
- Y discrete, X continuous: mixture model, e.g., mixture of Gaussians
- Y continuous, X continuous: factor analysis
- Sequential versions, each a chain y_1, ..., y_N over x_1, ..., x_N: HMM (for discrete sequential data, e.g., text) and state space model (for continuous sequential data, e.g., speech signal)
- Further extensions: factorial HMM and switching SSM (figure nodes: S_1, ..., S_N; y_11, ..., y_kN; x_1, ..., x_N)

[Figure: graphical models for each of the above]

State space models (SSM)

- A sequential FA or a continuous state HMM:

$$x_t = A x_{t-1} + G w_t, \qquad y_t = C x_t + v_t,$$
$$w_t \sim \mathcal{N}(0; Q), \quad v_t \sim \mathcal{N}(0; R), \quad x_0 \sim \mathcal{N}(0; \Sigma_0)$$

[Figure: chain X_1 → X_2 → X_3 → ... → X_N, each X_t emitting Y_t]

- This is a linear dynamic system.
- In general,

$$x_t = f(x_{t-1}) + G w_t, \qquad y_t = g(x_t) + v_t,$$

where f is an (arbitrary) dynamic model and g is an (arbitrary) observation model.

LDS for 2D tracking

- Dynamics: new position = old position + Δ × velocity + noise (constant velocity model, Gaussian noise):

$$\begin{pmatrix} x^1_t \\ x^2_t \\ \dot{x}^1_t \\ \dot{x}^2_t \end{pmatrix} = \begin{pmatrix} 1 & 0 & \Delta & 0 \\ 0 & 1 & 0 & \Delta \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{pmatrix} \begin{pmatrix} x^1_{t-1} \\ x^2_{t-1} \\ \dot{x}^1_{t-1} \\ \dot{x}^2_{t-1} \end{pmatrix} + \text{noise}$$

- Observation: project out the first two components (we observe the Cartesian position of the object, so the observation model is linear):

$$\begin{pmatrix} y^1_t \\ y^2_t \end{pmatrix} = \begin{pmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \end{pmatrix} \begin{pmatrix} x^1_t \\ x^2_t \\ \dot{x}^1_t \\ \dot{x}^2_t \end{pmatrix} + \text{noise}$$
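A minimal sketch of the constant-velocity tracking model, written in plain Python so it needs no third-party packages. The function names, the state ordering [x¹, x², ẋ¹, ẋ²], and the isotropic noise (G = I with diagonal Q and R) are assumptions made for illustration; they are not part of the lecture.

```python
import random

def make_cv_model(dt):
    """Return (A, C) for the 2D constant-velocity LDS.

    Assumed state ordering: [x1, x2, v1, v2] (positions, then velocities).
    """
    A = [[1.0, 0.0, dt, 0.0],
         [0.0, 1.0, 0.0, dt],
         [0.0, 0.0, 1.0, 0.0],
         [0.0, 0.0, 0.0, 1.0]]
    C = [[1.0, 0.0, 0.0, 0.0],   # observe position x1
         [0.0, 1.0, 0.0, 0.0]]   # observe position x2
    return A, C

def matvec(M, v):
    """Matrix-vector product for lists of lists."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def simulate(x0, steps, dt, q_std, r_std, seed=0):
    """Sample x_t = A x_{t-1} + w_t and y_t = C x_t + v_t.

    Assumption: G = I, w_t ~ N(0, q_std^2 I), v_t ~ N(0, r_std^2 I).
    """
    rng = random.Random(seed)
    A, C = make_cv_model(dt)
    x = list(x0)
    xs, ys = [], []
    for _ in range(steps):
        x = [xi + rng.gauss(0.0, q_std) for xi in matvec(A, x)]  # dynamics + noise
        y = [yi + rng.gauss(0.0, r_std) for yi in matvec(C, x)]  # projection + noise
        xs.append(x)
        ys.append(y)
    return xs, ys
```

With both noise levels set to zero the sampler reduces to the deterministic dynamics: starting at position (0, 0) with velocity (1, 2) and Δ = 0.5, two steps land at position (1, 2), which is also what the observation reports.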

The inference problem 1

- Filtering: given y_1, …, y_t, estimate x_t: $P(x_t \mid y_{1:t})$.
- The Kalman filter is a way to perform exact online inference (sequential Bayesian updating) in an LDS. It is the Gaussian analog of the forward algorithm for HMMs:

$$p(X_t = i \mid y_{1:t}) = \alpha_t^i \propto p(y_t \mid X_t = i) \sum_j \alpha_{t-1}^j \, p(X_t = i \mid X_{t-1} = j)$$

[Figure: chain X_1, X_2, X_3, ..., X_t with observations Y_1, ..., Y_t; forward messages α_0, α_1, α_2, ..., α_t]

The inference problem 2

- Smoothing: given y_1, …, y_T, estimate x_t (t < T): $P(x_t \mid y_{1:T})$.
- The Rauch-Tung-Striebel smoother is a way to perform exact off-line inference in an LDS. It is the Gaussian analog of the forwards-backwards (alpha-gamma) algorithm:

$$p(X_t = i \mid y_{1:T}) = \gamma_t^i = \sum_j \frac{\alpha_t^i \, P(X_{t+1} = j \mid X_t = i)}{\sum_k \alpha_t^k \, P(X_{t+1} = j \mid X_t = k)} \, \gamma_{t+1}^j$$

[Figure: the same chain, with forward messages α_0, α_1, α_2, ..., α_t and backward messages γ_0, γ_1, γ_2, ..., γ_t]
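For a scalar LDS, the filtering and smoothing passes fit in a few lines. This is a minimal sketch assuming A = a, C = c, G = 1, Q = q, R = r are scalars and that (m0, p0) is the Gaussian belief on x_1 before any observation. The backward pass uses the standard RTS recursion with smoother gain J_t = P_{t|t} a / P_{t+1|t}, which the slides name but do not write out; all function names are hypothetical.

```python
def kalman_filter_1d(ys, a, c, q, r, m0, p0):
    """Forward (filtering) pass for x_t = a x_{t-1} + w_t, y_t = c x_t + v_t.

    Returns filtered means/variances P_{t|t} and one-step predicted
    means/variances P_{t|t-1} for every time step.
    """
    mf, pf, mp, pp = [], [], [], []
    m_pred, p_pred = m0, p0
    for y in ys:
        mp.append(m_pred)
        pp.append(p_pred)
        k = p_pred * c / (c * p_pred * c + r)   # Kalman gain
        m = m_pred + k * (y - c * m_pred)       # measurement update (mean)
        p = p_pred - k * c * p_pred             # measurement update (variance)
        mf.append(m)
        pf.append(p)
        m_pred, p_pred = a * m, a * p * a + q   # time update for the next step
    return mf, pf, mp, pp

def rts_smoother_1d(mf, pf, mp, pp, a):
    """Backward (RTS) pass: fold information from later steps into each belief."""
    T = len(mf)
    ms, ps = mf[:], pf[:]  # at t = T the smoothed belief equals the filtered one
    for t in range(T - 2, -1, -1):
        j = pf[t] * a / pp[t + 1]               # smoother gain J_t
        ms[t] = mf[t] + j * (ms[t + 1] - mp[t + 1])
        ps[t] = pf[t] + j * (ps[t + 1] - pp[t + 1]) * j
    return ms, ps
```

As in the alpha-gamma algorithm, the backward pass reuses only quantities stored by the forward pass and never revisits the observations; the smoothed variance is never larger than the filtered one.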

2D tracking filtered

[Figure: 2D tracking, filtered]

Kalman filtering in the brain?

[Figure: Kalman filtering in the brain?]

Kalman filtering derivation

- Since all CPDs are linear Gaussian, the system defines a large multivariate Gaussian.
- Hence all marginals are Gaussian, and we can represent the belief state $p(X_t \mid y_{1:t})$ as a Gaussian with

$$\hat{x}_{t|t} \equiv E(X_t \mid y_1, \dots, y_t) \quad \text{(mean)}$$
$$P_{t|t} \equiv E\big((X_t - \hat{x}_{t|t})(X_t - \hat{x}_{t|t})^T \mid y_1, \dots, y_t\big) \quad \text{(covariance)}$$

- It is common to work with the inverse covariance (precision) matrix $P_{t|t}^{-1}$; this is called information form.

Kalman filtering derivation

- Kalman filtering is a recursive procedure to update the belief state:
- Predict step (time update): compute $p(X_{t+1} \mid y_{1:t})$ from the prior belief $p(X_t \mid y_{1:t})$ and the dynamical model $p(X_{t+1} \mid X_t)$.
- Update step (measurement update): compute the new belief $p(X_{t+1} \mid y_{1:t+1})$ from the prediction $p(X_{t+1} \mid y_{1:t})$, the observation $y_{t+1}$, and the observation model $p(y_{t+1} \mid X_{t+1})$.

[Figure: chain X_1, ..., X_t, X_{t+1} with observations Y_1, ..., Y_t for the predict step and Y_1, ..., Y_{t+1} for the update step]
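To make the two parameterizations concrete, here is a small sketch that converts a 2D Gaussian belief between moment form (x̂, P) and information form. Pairing the precision P⁻¹ with the information vector P⁻¹x̂ is the usual convention and is assumed here; the helper names are hypothetical, and the 2x2 inverse is written out by hand to stay dependency-free.

```python
def inv2(M):
    """Inverse of a 2x2 matrix [[a, b], [c, d]] via the adjugate formula."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]

def matvec(M, v):
    """Matrix-vector product for lists of lists."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def to_information(mean, cov):
    """Moment form (x_hat, P) -> information form (P^{-1} x_hat, P^{-1})."""
    precision = inv2(cov)
    return matvec(precision, mean), precision

def to_moment(info_vec, precision):
    """Information form -> moment form, by inverting the precision matrix."""
    cov = inv2(precision)
    return matvec(cov, info_vec), cov
```

One reason the information form is used (a standard fact, not stated on the slide): the measurement update becomes additive in the precision, $P_{t+1|t+1}^{-1} = P_{t+1|t}^{-1} + C^T R^{-1} C$.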

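Predict step

- Dynamical model: $x_{t+1} = A x_t + G w_t$, $w_t \sim \mathcal{N}(0; Q)$
- One step ahead prediction of state:

$$\hat{x}_{t+1|t} = E(X_{t+1} \mid y_1, \dots, y_t) = A \hat{x}_{t|t}$$

$$\begin{aligned}
P_{t+1|t} &= E\big((X_{t+1} - \hat{x}_{t+1|t})(X_{t+1} - \hat{x}_{t+1|t})^T \mid y_1, \dots, y_t\big) \\
&= E\big((A X_t + G w_t - A\hat{x}_{t|t})(A X_t + G w_t - A\hat{x}_{t|t})^T \mid y_1, \dots, y_t\big) \\
&= A P_{t|t} A^T + G Q G^T
\end{aligned}$$

- Observation model: $y_t = C x_t + v_t$, $v_t \sim \mathcal{N}(0; R)$
- One step ahead prediction of observation:

$$\hat{y}_{t+1|t} = E(Y_{t+1} \mid y_1, \dots, y_t) = E(C X_{t+1} + v_{t+1} \mid y_1, \dots, y_t) = C \hat{x}_{t+1|t}$$
$$E\big((Y_{t+1} - \hat{y}_{t+1|t})(Y_{t+1} - \hat{y}_{t+1|t})^T \mid y_1, \dots, y_t\big) = C P_{t+1|t} C^T + R$$
$$E\big((Y_{t+1} - \hat{y}_{t+1|t})(X_{t+1} - \hat{x}_{t+1|t})^T \mid y_1, \dots, y_t\big) = C P_{t+1|t}$$

[Figure: chain X_1, ..., X_t, X_{t+1} with observations Y_1, ..., Y_t, Y_{t+1}]

Update step

- Summarizing results from the previous slide, we have $p(X_{t+1}, Y_{t+1} \mid y_{1:t}) \sim \mathcal{N}(m_{t+1}, V_{t+1})$, where

$$m_{t+1} = \begin{pmatrix} \hat{x}_{t+1|t} \\ C \hat{x}_{t+1|t} \end{pmatrix}, \qquad V_{t+1} = \begin{pmatrix} P_{t+1|t} & P_{t+1|t} C^T \\ C P_{t+1|t} & C P_{t+1|t} C^T + R \end{pmatrix}$$

- Remember the formulas for conditional Gaussian distributions:

$$p\left(\begin{bmatrix} x_1 \\ x_2 \end{bmatrix} \,\middle|\, \mu, \Sigma\right) = \mathcal{N}\left(\begin{bmatrix} x_1 \\ x_2 \end{bmatrix} \,\middle|\, \begin{bmatrix} \mu_1 \\ \mu_2 \end{bmatrix}, \begin{bmatrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{bmatrix}\right)$$

$$p(x_2) = \mathcal{N}(x_2 \mid m_2, V_2), \quad m_2 = \mu_2, \quad V_2 = \Sigma_{22}$$
$$p(x_1 \mid x_2) = \mathcal{N}(x_1 \mid m_{1|2}, V_{1|2}), \quad m_{1|2} = \mu_1 + \Sigma_{12}\Sigma_{22}^{-1}(x_2 - \mu_2), \quad V_{1|2} = \Sigma_{11} - \Sigma_{12}\Sigma_{22}^{-1}\Sigma_{21}$$

- Applying these formulas to the joint above with $x_1 = X_{t+1}$ and $x_2 = Y_{t+1} = y_{t+1}$ yields the measurement update of the Kalman filter.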
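The update step can be checked numerically: build the joint Gaussian of (X_{t+1}, Y_{t+1}) from the predict step, condition on the observation using the conditional Gaussian formula, and compare with the Kalman measurement update. A scalar sketch, where every block is 1x1 and the function names are hypothetical:

```python
def predict(m, p, a, g, q):
    """Time update: x_hat_{t+1|t} = A x_hat_{t|t}, P_{t+1|t} = A P A^T + G Q G^T."""
    return a * m, a * p * a + g * q * g

def joint_state_obs(m_pred, p_pred, c, r):
    """Mean and covariance of (X_{t+1}, Y_{t+1}) given y_{1:t}."""
    mean = (m_pred, c * m_pred)
    cov = ((p_pred, p_pred * c),
           (c * p_pred, c * p_pred * c + r))
    return mean, cov

def condition_first_on_second(mean, cov, x2):
    """p(x1 | x2) for a bivariate Gaussian:
    m = mu1 + S12 S22^{-1} (x2 - mu2),  V = S11 - S12 S22^{-1} S21."""
    (mu1, mu2), ((s11, s12), (s21, s22)) = mean, cov
    return mu1 + s12 / s22 * (x2 - mu2), s11 - s12 / s22 * s21

# Example: condition the predicted joint on an observed y_{t+1} = 1.2
m_pred, p_pred = predict(0.5, 2.0, a=0.9, g=1.0, q=0.3)
mean, cov = joint_state_obs(m_pred, p_pred, c=2.0, r=0.7)
m_post, p_post = condition_first_on_second(mean, cov, x2=1.2)
```

Here the factor $\Sigma_{12}\Sigma_{22}^{-1} = P_{t+1|t} c / (c P_{t+1|t} c + r)$ is exactly the Kalman gain, which is why conditioning reproduces the measurement update.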

Kalman Filter

- Measurement updates:

$$\hat{x}_{t+1|t+1} = \hat{x}_{t+1|t} + K_{t+1}(y_{t+1} - C\hat{x}_{t+1|t})$$
$$P_{t+1|t+1} = P_{t+1|t} - K_{t+1} C P_{t+1|t}$$

where $K_{t+1}$ is the Kalman gain matrix

$$K_{t+1} = P_{t+1|t} C^T (C P_{t+1|t} C^T + R)^{-1}$$

- Time updates:

$$\hat{x}_{t+1|t} = A \hat{x}_{t|t}$$
$$P_{t+1|t} = A P_{t|t} A^T + G Q G^T$$

- $K_t$ can be pre-computed, since it is independent of the data: the covariance recursion involves only A, C, G, Q, and R, never the observations.

Example of KF in 1D

- Consider noisy observations of a 1D particle doing a random walk:

$$x_t = x_{t-1} + w, \quad w \sim \mathcal{N}(0, \sigma_x); \qquad z_t = x_t + v, \quad v \sim \mathcal{N}(0, \sigma_z)$$

Here $\sigma_x$ and $\sigma_z$ denote the noise variances, and $\sigma_t \equiv P_{t|t}$.

- KF equations (with $A = G = C = 1$, $Q = \sigma_x$, $R = \sigma_z$):

$$P_{t+1|t} = A P_{t|t} A^T + G Q G^T = \sigma_t + \sigma_x, \qquad \hat{x}_{t+1|t} = A\hat{x}_{t|t} = \hat{x}_{t|t}$$
$$K_{t+1} = P_{t+1|t} C^T (C P_{t+1|t} C^T + R)^{-1} = (\sigma_t + \sigma_x)(\sigma_t + \sigma_x + \sigma_z)^{-1}$$
$$\hat{x}_{t+1|t+1} = \hat{x}_{t+1|t} + K_{t+1}(z_{t+1} - C\hat{x}_{t+1|t}) = \frac{(\sigma_t + \sigma_x)\, z_{t+1} + \sigma_z \hat{x}_{t|t}}{\sigma_t + \sigma_x + \sigma_z}$$
$$P_{t+1|t+1} = P_{t+1|t} - K_{t+1} C P_{t+1|t} = \frac{(\sigma_t + \sigma_x)\,\sigma_z}{\sigma_t + \sigma_x + \sigma_z}$$
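The 1D example also shows concretely why $K_t$ can be pre-computed: the variance recursion $\sigma_t \mapsto (\sigma_t + \sigma_x)\sigma_z / (\sigma_t + \sigma_x + \sigma_z)$ never looks at the data. A minimal sketch with hypothetical function names, passing $\sigma_x$ and $\sigma_z$ as variances:

```python
def random_walk_gains(p0, var_x, var_z, steps):
    """Pre-compute gains K_{t+1} and variances P_{t+1|t+1} without any data."""
    gains, variances = [], []
    p = p0                                     # sigma_t = P_{t|t}
    for _ in range(steps):
        p_pred = p + var_x                     # P_{t+1|t} = sigma_t + sigma_x
        k = p_pred / (p_pred + var_z)          # Kalman gain
        p = p_pred * var_z / (p_pred + var_z)  # P_{t+1|t+1}
        gains.append(k)
        variances.append(p)
    return gains, variances

def random_walk_filter(zs, x0, p0, var_x, var_z):
    """Filter z_1..z_T with the closed-form 1D update and pre-computed gains."""
    gains, variances = random_walk_gains(p0, var_x, var_z, len(zs))
    x, means = x0, []
    for z, k in zip(zs, gains):
        # x + k (z - x) equals ((s_t + s_x) z + s_z x) / (s_t + s_x + s_z)
        x = x + k * (z - x)
        means.append(x)
    return means, variances
```

With $\sigma_0 = \sigma_x = \sigma_z = 1$ the first gain is 2/3, so an observation $z_1 = 3$ moves the estimate from 0 to 2; iterating the recursion, the gain quickly settles to a constant steady-state value.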
