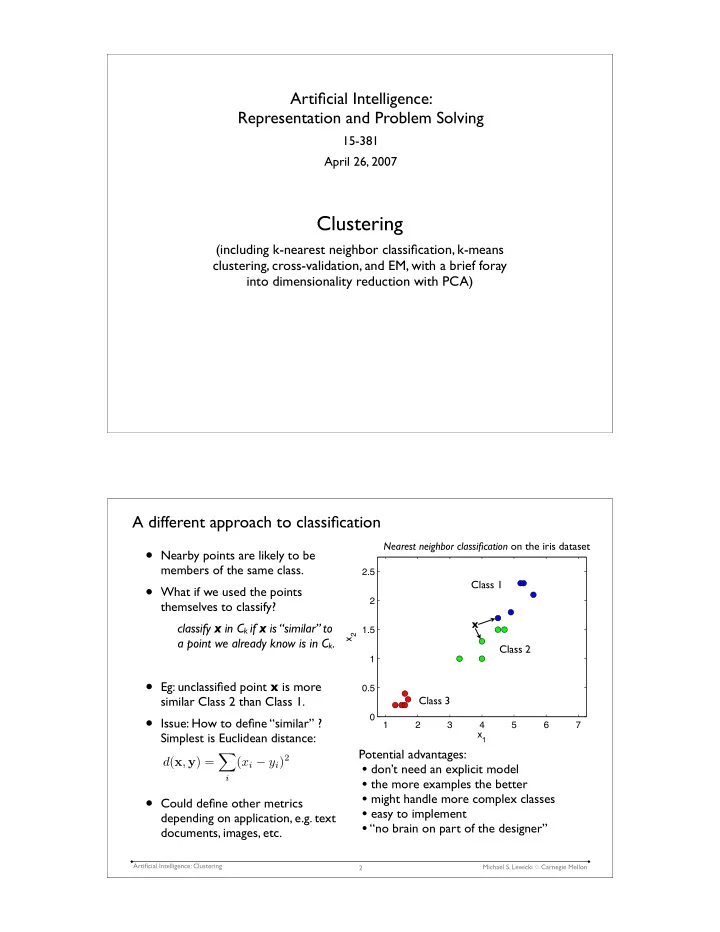

Artificial Intelligence: Representation and Problem Solving 15-381 April 26, 2007 Clustering (including k-nearest neighbor classification, k-means clustering, cross-validation, and EM, with a brief foray into dimensionality reduction with PCA) A different approach to classification Nearest neighbor classification on the iris dataset • Nearby points are likely to be members of the same class. 2.5 Class 1 • What if we used the points 2 themselves to classify? x classify x in C k if x is “similar” to 1.5 x 2 a point we already know is in C k . Class 2 1 • Eg: unclassified point x is more 0.5 similar Class 2 than Class 1. Class 3 • Issue: How to define “similar” ? 0 1 2 3 4 5 6 7 Simplest is Euclidean distance: x 1 Potential advantages: � ( x i − y i ) 2 d ( x , y ) = • don’t need an explicit model i • the more examples the better • might handle more complex classes • Could define other metrics • easy to implement depending on application, e.g. text • “no brain on part of the designer” documents, images, etc. Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 2

A complex, non-parametric decision boundary • How do we control the complexity of this model? - difficult • How many parameters? - every data point is a parameter! • This is an example of a non-parametric model, ie where the model is defined by the data. (Also, called, instance based) • Can get very complex decision boundaries example from Martial Herbert Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 3 Example: Handwritten digits • Use Euclidean distance to see which known digit is closest to each class. • But not all neighbors are the same: example nearest neighbors example from Sam Roweis • “k-nearest neighbors”: look at k-nearest neighbors and from LeCun etal, 1998 choose most frequent. digit data available at: http://yann.lecun.com/exdb/mnist/ • Cautions: can get expensive to find neighbors Digits are just represented as a vector. Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 4

The problem of using templates (ie Euclidean distance) • Which of these is more like the performance results of various classifiers (from http://yann.lecun.com/exdb/mnist/) example? A or B? error rate on example A B Classifier test data (%) linear 12.0 k=3 nearest neighbor 5.0 from Simard etal, 1998 (Euclidean distance) 2-layer neural network 4.7 • Euclidean distance only cares about (300 hidden units) nearest neighbor how many pixels overlap. 3.1 (Euclidean distance) • Could try to define a distance metric k-nearest neighbor 1.1 that is insensitive to small deviations (improved distance metric) in position, scale, rotation, etc. convolutional neural net 0.95 • Digit example: - 60,000 training images, best (the conv. net with 0.4 elastic distortions) - 10,000 test images - no “preprocessing” humans 0.2 - 2.5 Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 5 Clustering

Clustering: Classification without labels: • In many situations we don’t have labeled training data, only unlabeled data. • Eg, in the iris data set, what if we were just starting and didn’t know any classes? 2.5 2 petal width (cm) 1.5 1 0.5 0 1 2 3 4 5 6 7 petal length (cm) Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 7 Types of learning supervised reinforcement unsupervised reinforcement desired output { y 1 , . . . , y n } model output model model model { θ 1 , . . . , θ n } { θ 1 , . . . , θ n } { θ 1 , . . . , θ n } next week world world world (or data) (or data) (or data) Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 8

A real example: clustering electrical signals from neurons amplifier filters software A/D analysis electrode oscilloscope Basic problem: only information is signal. The true classes are always unknown. Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 9 An extracellular waveform with many different neural “spikes” 0 5 10 15 20 25 msec How do we sort the di ff erent spikes? Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 10

Sorting with level detection � 0.5 0 0.5 1 1.5 � 0.5 0 0.5 1 1.5 msec msec Level detection doesn’t always work. Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 11 Why level detection doesn’t work � 0.5 0 0.5 1 1.5 � 0.5 0 0.5 1 1.5 msec msec peak amplitude: neuron 2 background peak amplitude: amplitude neuron 1 amplitude A B One dimension is not su ffi cient to separate the spikes. Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 12

Idea: try more features max amplitude min amplitude � 0.5 0 0.5 1 1.5 msec What other features could we use? Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 13 Maximum vs minimum 250 200 spike maximum ( µ V) 150 100 50 0 � 200 � 150 � 100 � 50 0 spike minimum ( µ V) This allows better discrimination than max alone, but is it optimal? Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 14

Try different features width height � 0.5 0 0.5 1 1.5 msec What other features could we use? Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 15 Height vs width 400 350 300 spike height ( µ V) 250 200 150 100 50 0 0 0.5 1 1.5 spike width (msec) This allows allows better discrimination. How can we choose more objectively? Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 16

Brief foray: dimensionality reduction (in this modeling data with a normal distribution) Can we model the signal with a better set of features? • Idea: model the distribution of the data. This is density estimation • We’ve done this before: - Bernoulli distribution of coin flips: unsupervised learning � n � p ( y | θ , n ) = θ y (1 − θ ) n − y y 1 2 3 4 5 6 7 - Gaussian distribution of iris data: model { θ 1 , . . . , θ n } 2.5 2 petal width (cm) 1.5 1 data 0.5 (neural signals) 0 1 2 3 4 5 6 7 petal length (cm) 1 � − 1 � 2 σ 2 ( x − µ ) 2 p ( x | µ, σ ) = exp √ 2 πσ Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 18

“Modeling” data with a Gaussian • a Gaussian (or normal) distribution is “fit” to data with things you’re already familiar with. 1 � − 1 � 2 σ 2 ( x − µ ) 2 p ( x | µ, σ ) = exp √ 2 πσ µ = 1 � x n mean N n σ 2 = 1 � ( x n − µ ) 2 variance N n • A multivariate normal is the same but in d-dimensions � − 1 � N ( µ , Σ ) = (2 π ) − d/ 2 | Σ | − 1 / 2 exp 2( x − µ ) T Σ − 1 ( x − µ ) µ = 1 � ˆ x n N n 1 ˆ � Σ ij = ( x i,n − ˆ µ )( x j,n − ˆ µ ) N − 1 n Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 19 Recall the example from the probability theory lecture #$C;'(;2D4,E2+F(+($2 GHI%)054()*+J,K L.M7N0.O//P " '()$*+ ! %&& " '()$*+ ! %&& " "#$ ! " "#$ ! ! ! Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 20

The correlational structure is described by the covariance #$C;'(;2D4,E2+F(+($2 9'(2D(%;< G()42C4D+$' $R, # GHI%)054()*+J,K L.M7N0.O//P " '()$*+ ! %&& " '()$*+ ! %&& " "#$ ! " "#$ ! ! ! What about distributions in higher dimensions? Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 21 Multivariate covariance matrices and principal components Head measurements on two college-age groups (Bryce and Barker): 1) football players (30 subjects) 2) non-football players (30 subjects) Use six di ff erent measurements: variable measurement WDMI head width at widest dimention CIRCUM head circumference FBEYE front to back at eye level EYEHD eye to top of head EARHD ear to top of head JAW jaw width Are these measures independent? Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 22

The covariance matrix . 370 . 602 . 149 . 044 . 107 . 209 . 602 2 . 629 . 801 . 666 . 103 . 377 . 149 . 801 . 458 . 012 − . 013 . 120 S = . 044 . 666 . 011 1 . 474 . 252 − . 054 . 107 . 103 − . 013 . 252 . 488 − . 036 . 209 . 377 . 120 − . 054 − . 036 . 324 N 1 � S ij = ( x i,n − ¯ x i )( x j,n − ¯ x j ) N − 1 n =1 Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 23 The eigenvalues Proportion Cumulative Eigenvalue of Variance Proportion 3.323 .579 .579 1.374 .239 .818 .476 .083 .901 .325 .057 .957 .157 .027 .985 .088 .015 1.000 How many PCs should we select? Artificial Intelligence: Clustering Michael S. Lewicki � Carnegie Mellon 24

Recommend

More recommend