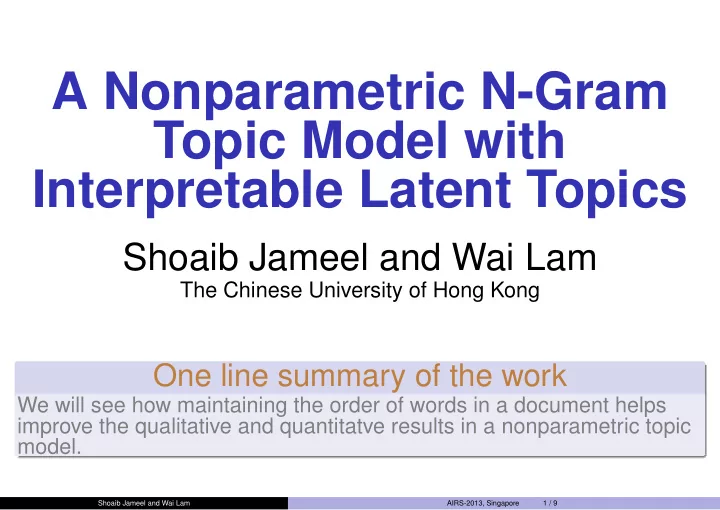

A Nonparametric N-Gram Topic Model with Interpretable Latent Topics


Shoaib Jameel and Wai Lam, The Chinese University of Hong Kong. AIRS-2013, Singapore.

One-line summary of the work: we will see how maintaining the order of words in a document helps improve the qualitative and quantitative results of a nonparametric topic model.

Introduction and Motivation

Popular nonparametric topic models such as Hierarchical Dirichlet Processes (HDP) assume a bag-of-words paradigm, so they lose important collocation information in the document. For example, they cannot capture a compound word such as "neural network" in a topic, and this bag-of-words assumption makes the discovered latent topics less interpretable. Parametric n-gram topic models also exist, but they require the user to supply the number of topics.

Related Work

Our work extends Hierarchical Dirichlet Processes (HDP) (Teh et al., JASA-2006). Goldwater et al. (ACL-2006) presented nonparametric word segmentation models in which the order of words in the document is maintained. Deane (ACL-2005) presented a nonparametric approach to extracting phrasal terms. Parametric n-gram topic models such as the Bigram Topic Model (Wallach, ICML-2006), the LDA-Collocation Model (Griffiths et al., Psych. Rev.-2007), and the Topical N-gram Model (Wang et al., ICDM-2007) all need the number of topics to be specified by the user.

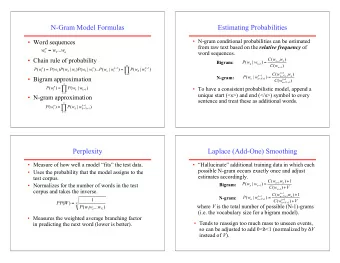

Background: HDP Model

The Hierarchical Dirichlet Processes (HDP) model, when used as a topic model, discovers the latent topics in a collection without requiring the user to specify the number of topics. It can be regarded as a nonparametric version of Latent Dirichlet Allocation (LDA) (Blei et al., JMLR-2003).

Generative process:

$$
\begin{aligned}
G_0 \mid \gamma, H &\sim \mathrm{DP}(\gamma, H) \\
G_d \mid \alpha, G_0 &\sim \mathrm{DP}(\alpha, G_0) \\
z_{di} \mid G_d &\sim G_d \\
w_{di} \mid z_{di} &\sim \mathrm{Multinomial}(z_{di})
\end{aligned}
$$

$\gamma$ and $\alpha$ are the concentration parameters. The base probability measure $H$ provides the prior distribution for the factors, or topics, $z_{di}$.

[Figure: HDP graphical model in plate notation, with plates over the $N_d$ words of each document and the $D$ documents.]
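One common way to simulate the HDP generative process above is a truncated stick-breaking approximation. The sketch below is not the authors' code: it assumes $H$ is a symmetric Dirichlet with a hypothetical parameter `eta` over $V$ word ids, truncates $G_0$ to `K` atoms, and all function names and default values are illustrative.

```python
import random


def dirichlet(alphas, rng):
    """Draw from Dirichlet(alphas) by normalising independent Gamma(a, 1) draws."""
    # Floor tiny shapes, since gammavariate rejects a <= 0.
    draws = [rng.gammavariate(max(a, 1e-12), 1.0) for a in alphas]
    total = sum(draws)
    if total == 0.0:  # every draw underflowed; fall back to uniform weights
        return [1.0 / len(alphas)] * len(alphas)
    return [d / total for d in draws]


def stick_breaking(gamma, K, rng):
    """Truncated GEM(gamma) weights for the K atoms of G_0."""
    weights, remaining = [], 1.0
    for _ in range(K - 1):
        b = rng.betavariate(1.0, gamma)
        weights.append(remaining * b)
        remaining *= 1.0 - b
    weights.append(remaining)  # the last atom absorbs the leftover stick
    return weights


def generate_hdp_corpus(num_docs, doc_len, V, gamma=1.0, alpha=1.0,
                        eta=0.5, K=20, seed=0):
    """Return documents as lists of (topic z_di, word id w_di) pairs."""
    rng = random.Random(seed)
    beta = stick_breaking(gamma, K, rng)                    # G_0 ~ DP(gamma, H), truncated
    topics = [dirichlet([eta] * V, rng) for _ in range(K)]  # atoms of G_0 drawn from H
    corpus = []
    for _ in range(num_docs):
        # G_d ~ DP(alpha, G_0): with K atoms, its weights are Dirichlet(alpha * beta)
        pi_d = dirichlet([alpha * b for b in beta], rng)
        doc = []
        for _ in range(doc_len):
            z = rng.choices(range(K), weights=pi_d)[0]       # z_di ~ G_d
            w = rng.choices(range(V), weights=topics[z])[0]  # w_di ~ Multinomial(z_di)
            doc.append((z, w))
        corpus.append(doc)
    return corpus


corpus = generate_hdp_corpus(num_docs=3, doc_len=8, V=50, K=10, seed=1)
```

Because $G_0$ is discrete with `K` atoms after truncation, each document-level draw $G_d \sim \mathrm{DP}(\alpha, G_0)$ reduces to a finite Dirichlet over those same atoms, which is why documents share topics.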

N-gram Hierarchical Dirichlet Processes Model

This is our proposed model, called NHDP. We introduce a set of binary indicator variables between consecutive words in the HDP model. An indicator is set to 1 if a word and its predecessor form a bigram, and 0 otherwise. If two words in sequence form a bigram, the second word is generated solely from the previous word; unigram words are generated from the topic. This framework lets the model capture word order in the document.
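To make the role of the indicators concrete, the hypothetical helper below reads a document back as a sequence of phrases given its indicator values. It assumes that a run of consecutive 1s chains bigrams into a longer n-gram; the slides only spell out the bigram case.

```python
def group_ngrams(words, x):
    """Merge words into phrases using per-word bigram indicators.

    x[i] == 1 means words[i] forms a bigram with words[i - 1]; a run of
    consecutive 1s is assumed to chain bigrams into a longer n-gram.
    """
    if len(words) != len(x):
        raise ValueError("need exactly one indicator per word")
    phrases = []
    for word, flag in zip(words, x):
        if flag == 1 and phrases:
            phrases[-1].append(word)  # continue the current phrase
        else:
            phrases.append([word])    # x == 0 (or first word): start a new phrase
    return [" ".join(p) for p in phrases]


print(group_ngrams(["training", "a", "neural", "network"], [0, 0, 0, 1]))
# ['training', 'a', 'neural network']
```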

N-gram Hierarchical Dirichlet Processes Model: Generative Process

$$
\begin{aligned}
G_0 \mid \gamma, H &\sim \mathrm{DP}(\gamma, H) \\
G_d \mid \alpha, G_0 &\sim \mathrm{DP}(\alpha, G_0) \\
z_{di} \mid G_d &\sim G_d \\
x_{di} \mid w_{d,i-1} &\sim \mathrm{Bernoulli}(\psi_{w_{d,i-1}}) \\
\text{if } x_{di} = 1: \quad w_{di} \mid w_{d,i-1} &\sim \mathrm{Multinomial}(\sigma_{w_{d,i-1}}) \\
\text{else:} \quad w_{di} \mid z_{di} &\sim F(z_{di})
\end{aligned}
$$

[Figure: NHDP graphical model in plate notation, showing consecutive words $w_{d,i-1}, w_{di}, w_{d,i+1}$ with their topics and indicators $x_{di}, x_{d,i+1}$; $\psi$ and $\sigma$ sit in plates of size $V$, alongside hyperparameters $\epsilon$ and $\delta$.]

Through the indicator $x_{di}$, our model can capture dependencies between adjacent words in the text data.
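The sketch below extends a truncated HDP simulation to the NHDP generative process. The roles of $\psi$ and $\sigma$ follow the slide, but several details are assumptions not stated in the slides: the priors on them (here Beta(1, 1) and a symmetric Dirichlet with a hypothetical parameter `sigma_prior`), the truncation level `K`, and treating the first word of each document as $x = 0$. All names are illustrative.

```python
import random


def dirichlet(alphas, rng):
    """Draw from Dirichlet(alphas) by normalising independent Gamma(a, 1) draws."""
    draws = [rng.gammavariate(max(a, 1e-12), 1.0) for a in alphas]
    total = sum(draws)
    if total == 0.0:  # every draw underflowed; fall back to uniform weights
        return [1.0 / len(alphas)] * len(alphas)
    return [d / total for d in draws]


def stick_breaking(gamma, K, rng):
    """Truncated GEM(gamma) weights for the K atoms of G_0."""
    weights, remaining = [], 1.0
    for _ in range(K - 1):
        b = rng.betavariate(1.0, gamma)
        weights.append(remaining * b)
        remaining *= 1.0 - b
    weights.append(remaining)
    return weights


def generate_nhdp_corpus(num_docs, doc_len, V, gamma=1.0, alpha=1.0,
                         eta=0.5, sigma_prior=0.5, K=20, seed=0):
    """Return documents as lists of (z_di, x_di, w_di) triples."""
    rng = random.Random(seed)
    beta = stick_breaking(gamma, K, rng)
    topics = [dirichlet([eta] * V, rng) for _ in range(K)]  # F(z): word distribution per topic
    # Hypothetical priors: psi_v ~ Beta(1, 1), sigma_v ~ Dirichlet(sigma_prior).
    psi = [rng.betavariate(1.0, 1.0) for _ in range(V)]            # P(x_di = 1 | previous word v)
    sigma = [dirichlet([sigma_prior] * V, rng) for _ in range(V)]  # next-word distribution after v
    corpus = []
    for _ in range(num_docs):
        pi_d = dirichlet([alpha * b for b in beta], rng)
        doc, prev = [], None
        for _ in range(doc_len):
            z = rng.choices(range(K), weights=pi_d)[0]  # z_di ~ G_d
            # x_di ~ Bernoulli(psi_{w_{d,i-1}}); assumed 0 for the first word of a document
            x = 1 if prev is not None and rng.random() < psi[prev] else 0
            if x == 1:
                w = rng.choices(range(V), weights=sigma[prev])[0]  # bigram: previous word only
            else:
                w = rng.choices(range(V), weights=topics[z])[0]    # unigram: from topic z_di
            doc.append((z, x, w))
            prev = w
        corpus.append(doc)
    return corpus


corpus = generate_nhdp_corpus(num_docs=4, doc_len=12, V=30, K=8, seed=3)
```

Note that, as in the generative process on the slide, a topic $z_{di}$ is drawn for every word, but it only influences the word when $x_{di} = 0$.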

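Posterior Inference using Gibbs Sampling

First condition, $x_{di} = 0$: sampling is the same as in the HDP model.

Second condition, $x_{di} = 1$: the probability of a topic in a document is

$$
P(k_{dt} = k \mid \mathbf{t}, \mathbf{k}^{\neg dt}) \propto
\begin{cases}
m_{\cdot k}^{\neg dt}\, f_k^{\neg w_{dt}}(w_{dt}) & \text{if } k \text{ is already used} \\
\gamma\, f_{\hat{k}}^{\neg w_{dt}}(w_{dt}) & \text{if } k = \hat{k}
\end{cases}
$$

where

$$
f_k^{\neg w_{dt}}(w_{dt}) = \frac{\Gamma\left(n_{\cdot\cdot k}^{\neg w_{dt}} + V\eta\right)}{\Gamma\left(n_{\cdot\cdot k}^{\neg w_{dt}} + n^{w_{dt}} + V\eta\right)} \times \frac{\prod_{\vartheta} \Gamma\left(n_{\cdot\cdot k,\vartheta}^{\neg w_{dt}} + n_{\vartheta}^{w_{dt}} + \eta\right)}{\prod_{\vartheta} \Gamma\left(n_{\cdot\cdot k,\vartheta}^{\neg w_{dt}} + \eta\right)}
$$

Here $k_{dt}$ is the topic index variable for each table $t$ in document $d$, and $\hat{k}$ denotes a new topic not yet used. We write $\mathbf{w} = (w_{di} : \forall d, i)$, $w_{dt} = (w_{di} : \forall i \text{ with } t_{di} = t)$, $\mathbf{t} = (t_{di} : \forall d, i)$, $\mathbf{k} = (k_{dt} : \forall d, t)$, and $\mathbf{x} = (x_{di} : \forall d, i)$. A superscript such as $\neg dt$ or $\neg di$ (as in $\mathbf{k}^{\neg dt}$ or $\mathbf{t}^{\neg di}$) means that the variables corresponding to that index are removed from the set or from the calculation of the count. $V$ is the vocabulary size. $n_{\cdot\cdot k}^{\neg w_{dt}}$ is the number of words with $x_{di} = 0$ assigned to topic $k$ in the corpus, excluding $w_{dt}$, and $n_{\cdot\cdot k,\vartheta}^{\neg w_{dt}}$ is the same count restricted to vocabulary word $\vartheta$. $n^{w_{dt}}$ is the total number of words at table $t$ whose $x_{di} = 0$, and $n_{\vartheta}^{w_{dt}}$ is how many of them are the word $\vartheta$. $m_{\cdot k}^{\neg dt}$ is the number of tables assigned to topic $k$, excluding table $t$ of document $d$, and $\eta$ is the parameter of the symmetric Dirichlet base measure $H$.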
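The log-space sketch below evaluates $f_k^{\neg w_{dt}}(w_{dt})$ and samples $k_{dt}$ from the case expression above. It is a hypothetical illustration, not the authors' sampler: the `Counter`-based bookkeeping, the `None` label standing for a new topic $\hat{k}$, and the convention that callers pass only the $x = 0$ words of a table are illustrative choices based on the definitions on the slide.

```python
import math
import random
from collections import Counter


def log_f(topic_counts, table_counts, V, eta):
    """log f_k^{-w_dt}(w_dt) for one table.

    topic_counts: Counter of x=0 word counts for topic k, excluding table dt.
    table_counts: Counter of x=0 word counts at table dt.
    """
    n_k = sum(topic_counts.values())
    n_t = sum(table_counts.values())
    out = math.lgamma(n_k + V * eta) - math.lgamma(n_k + n_t + V * eta)
    # Words absent from the table contribute Gamma(n + eta) / Gamma(n + eta) = 1,
    # so the product over the vocabulary only needs the table's own words.
    for word, c in table_counts.items():
        n_kw = topic_counts.get(word, 0)
        out += math.lgamma(n_kw + c + eta) - math.lgamma(n_kw + eta)
    return out


def sample_table_topic(table_words, topic_counts, tables_per_topic, gamma, V, eta, rng):
    """Sample k_dt; returns an existing topic id, or None for a new topic k-hat."""
    table_counts = Counter(table_words)
    labels, log_w = [], []
    for k, m_k in tables_per_topic.items():
        if m_k == 0:
            continue  # topic k is unused once table dt is removed
        labels.append(k)
        log_w.append(math.log(m_k) + log_f(topic_counts.get(k, Counter()), table_counts, V, eta))
    labels.append(None)
    log_w.append(math.log(gamma) + log_f(Counter(), table_counts, V, eta))
    top = max(log_w)  # subtract the max before exponentiating, for numerical stability
    weights = [math.exp(lw - top) for lw in log_w]
    return rng.choices(labels, weights=weights)[0]
```

Working in log space matters here because the Gamma ratios overflow quickly for realistic counts; `math.lgamma` keeps every term finite.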

Experimental Results

Qualitative results

[Table: example topics discovered by HDP and NHDP on the Comp, NIPS, and AP datasets. HDP topics contain only single words, whereas NHDP topics also contain multi-word terms such as "neural network", "sun microsystems", "anonymous ftp", "summer olympics", and "world news tonight".]

Quantitative results: perplexity analysis

[Figure: perplexity on the AP dataset with 30%, 50%, 70%, and 90% of the data used for training, comparing NHDP with baseline models.]
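The slides do not spell out how perplexity is computed. A common definition is the exponentiated negative average held-out log-likelihood per token, where lower values are better. The sketch below assumes the per-document log-likelihoods have already been estimated by the model (the hard part, not shown); the function name is hypothetical.

```python
import math


def perplexity(doc_log_likelihoods, doc_lengths):
    """exp(-sum_d log p(w_d) / sum_d N_d) over held-out documents."""
    total_tokens = sum(doc_lengths)
    if total_tokens == 0:
        raise ValueError("no held-out tokens")
    return math.exp(-sum(doc_log_likelihoods) / total_tokens)


# A model that is uniform over 4 words assigns log(1/4) to each of 10 tokens,
# so its perplexity is 4: it is as uncertain as a fair 4-way choice.
print(perplexity([10 * math.log(0.25)], [10]))
```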

Conclusion

We proposed an n-gram nonparametric topic model that discovers more interpretable latent topics. Our model introduces a new set of binary random variables into the HDP model and extends the HDP posterior inference scheme accordingly. Results show that our model outperforms state-of-the-art models.

References

Blei, D. M., Ng, A. Y., and Jordan, M. I. (2003). Latent Dirichlet allocation. JMLR, 3, 993-1022.

Wallach, H. M. (2006). Topic modeling: Beyond bag-of-words. In Proc. of ICML (pp. 977-984).

Griffiths, T. L., Steyvers, M., and Tenenbaum, J. B. (2007). Topics in semantic representation. Psychological Review, 114(2), 211.

Wang, X., McCallum, A., and Wei, X. (2007). Topical n-grams: Phrase and topic discovery, with an application to information retrieval. In Proc. of ICDM (pp. 697-702).

Teh, Y. W., Jordan, M. I., Beal, M. J., and Blei, D. M. (2006). Hierarchical Dirichlet processes. Journal of the American Statistical Association, 101, 1566-1581.

Deane, P. (2005). A nonparametric method for extraction of candidate phrasal terms. In Proc. of ACL (pp. 605-613).

Goldwater, S., Griffiths, T. L., and Johnson, M. (2006). Contextual dependencies in unsupervised word segmentation. In Proc. of ACL (pp. 673-680).
