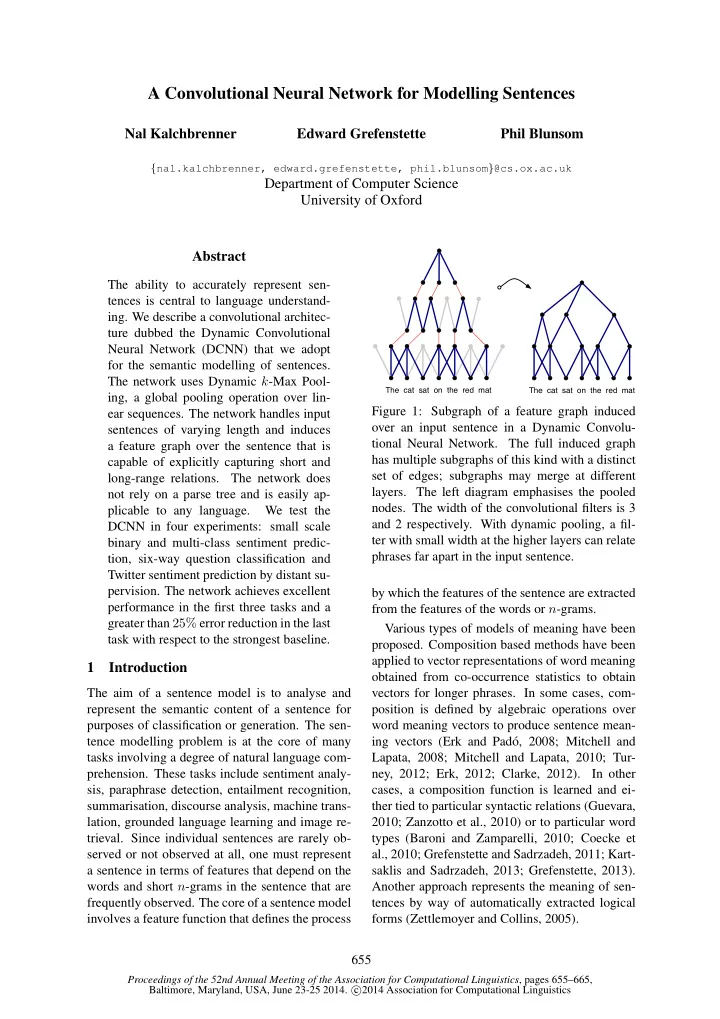

A Convolutional Neural Network for Modelling Sentences

Nal Kalchbrenner, Edward Grefenstette, Phil Blunsom
{nal.kalchbrenner, edward.grefenstette, phil.blunsom}@cs.ox.ac.uk
Department of Computer Science, University of Oxford

Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics, pages 655–665, Baltimore, Maryland, USA, June 23-25 2014. © 2014 Association for Computational Linguistics

Abstract

The ability to accurately represent sentences is central to language understanding. We describe a convolutional architecture dubbed the Dynamic Convolutional Neural Network (DCNN) that we adopt for the semantic modelling of sentences. The network uses Dynamic k-Max Pooling, a global pooling operation over linear sequences. The network handles input sentences of varying length and induces a feature graph over the sentence that is capable of explicitly capturing short and long-range relations. The network does not rely on a parse tree and is easily applicable to any language. We test the DCNN in four experiments: small scale binary and multi-class sentiment prediction, six-way question classification and Twitter sentiment prediction by distant supervision. The network achieves excellent performance in the first three tasks and a greater than 25% error reduction in the last task with respect to the strongest baseline.

Figure 1: Subgraph of a feature graph induced over an input sentence in a Dynamic Convolutional Neural Network. The full induced graph has multiple subgraphs of this kind with a distinct set of edges; subgraphs may merge at different layers. The left diagram emphasises the pooled nodes. The width of the convolutional filters is 3 and 2 respectively. With dynamic pooling, a filter with small width at the higher layers can relate phrases far apart in the input sentence.

1 Introduction

The aim of a sentence model is to analyse and represent the semantic content of a sentence for purposes of classification or generation. The sentence modelling problem is at the core of many tasks involving a degree of natural language comprehension. These tasks include sentiment analysis, paraphrase detection, entailment recognition, summarisation, discourse analysis, machine translation, grounded language learning and image retrieval. Since individual sentences are rarely observed or not observed at all, one must represent a sentence in terms of features that depend on the words and short n-grams in the sentence that are frequently observed. The core of a sentence model involves a feature function that defines the process by which the features of the sentence are extracted from the features of the words or n-grams.

Various types of models of meaning have been proposed. Composition based methods have been applied to vector representations of word meaning obtained from co-occurrence statistics to obtain vectors for longer phrases. In some cases, composition is defined by algebraic operations over word meaning vectors to produce sentence meaning vectors (Erk and Padó, 2008; Mitchell and Lapata, 2008; Mitchell and Lapata, 2010; Turney, 2012; Erk, 2012; Clarke, 2012). In other cases, a composition function is learned and either tied to particular syntactic relations (Guevara, 2010; Zanzotto et al., 2010) or to particular word types (Baroni and Zamparelli, 2010; Coecke et al., 2010; Grefenstette and Sadrzadeh, 2011; Kartsaklis and Sadrzadeh, 2013; Grefenstette, 2013). Another approach represents the meaning of sentences by way of automatically extracted logical forms (Zettlemoyer and Collins, 2005).

A central class of models are those based on neural networks. These range from basic neural bag-of-words or bag-of-n-grams models to the more structured recursive neural networks and to time-delay neural networks based on convolutional operations (Collobert and Weston, 2008; Socher et al., 2011; Kalchbrenner and Blunsom, 2013b). Neural sentence models have a number of advantages. They can be trained to obtain generic vectors for words and phrases by predicting, for instance, the contexts in which the words and phrases occur. Through supervised training, neural sentence models can fine-tune these vectors to information that is specific to a certain task. Besides comprising powerful classifiers as part of their architecture, neural sentence models can be used to condition a neural language model to generate sentences word by word (Schwenk, 2012; Mikolov and Zweig, 2012; Kalchbrenner and Blunsom, 2013a).

We define a convolutional neural network architecture and apply it to the semantic modelling of sentences. The network handles input sequences of varying length. The layers in the network interleave one-dimensional convolutional layers and dynamic k-max pooling layers. Dynamic k-max pooling is a generalisation of the max pooling operator. The max pooling operator is a non-linear subsampling function that returns the maximum of a set of values (LeCun et al., 1998). The operator is generalised in two respects. First, k-max pooling over a linear sequence of values returns the subsequence of k maximum values in the sequence, instead of the single maximum value. Secondly, the pooling parameter k can be dynamically chosen by making k a function of other aspects of the network or the input.

The convolutional layers apply one-dimensional filters across each row of features in the sentence matrix. Convolving the same filter with the n-gram at every position in the sentence allows the features to be extracted independently of their position in the sentence. A convolutional layer followed by a dynamic pooling layer and a non-linearity form a feature map. Like in the convolutional networks for object recognition (LeCun et al., 1998), we enrich the representation in the first layer by computing multiple feature maps with different filters applied to the input sentence. Subsequent layers also have multiple feature maps computed by convolving filters with all the maps from the layer below. The weights at these layers form an order-4 tensor. The resulting architecture is dubbed a Dynamic Convolutional Neural Network.

Multiple layers of convolutional and dynamic pooling operations induce a structured feature graph over the input sentence. Figure 1 illustrates such a graph. Small filters at higher layers can capture syntactic or semantic relations between non-continuous phrases that are far apart in the input sentence. The feature graph induces a hierarchical structure somewhat akin to that in a syntactic parse tree. The structure is not tied to purely syntactic relations and is internal to the neural network.

We experiment with the network in four settings. The first two experiments involve predicting the sentiment of movie reviews (Socher et al., 2013b). The network outperforms other approaches in both the binary and the multi-class experiments. The third experiment involves the categorisation of questions in six question types in the TREC dataset (Li and Roth, 2002). The network matches the accuracy of other state-of-the-art methods that are based on large sets of engineered features and hand-coded knowledge resources. The fourth experiment involves predicting the sentiment of Twitter posts using distant supervision (Go et al., 2009). The network is trained on 1.6 million tweets labelled automatically according to the emoticon that occurs in them. On the hand-labelled test set, the network achieves a greater than 25% reduction in the prediction error with respect to the strongest unigram and bigram baseline reported in Go et al. (2009).

The outline of the paper is as follows. Section 2 describes the background to the DCNN including central concepts and related neural sentence models. Section 3 defines the relevant operators and the layers of the network. Section 4 treats of the induced feature graph and other properties of the network. Section 5 discusses the experiments and inspects the learnt feature detectors.¹

2 Background

The layers of the DCNN are formed by a convolution operation followed by a pooling operation. We begin with a review of related neural sentence models. Then we describe the operation of one-dimensional convolution and the classical Time-Delay Neural Network (TDNN) (Hinton, 1989; Waibel et al., 1990). By adding a max pooling

¹ Code available at www.nal.co
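To make the operators named in the Introduction concrete, the following is a minimal sketch in plain Python of a one-dimensional filter applied along a single row of features, followed by k-max pooling that keeps the k largest values in their original order. The function names `conv1d_row`, `k_max_pool` and `dynamic_k` are hypothetical, the convolution is a simple narrow sliding dot product without padding, and the linear schedule used to pick k from the sentence length and layer depth is only one assumed way of making k "a function of other aspects of the network or the input". None of this is taken from the released code.

```python
import math


def conv1d_row(row, filt):
    """Slide `filt` along `row`, taking a dot product at each position.

    Assumption: narrow convolution (no padding, no filter flip), so the
    output has len(row) - len(filt) + 1 entries.
    """
    m = len(filt)
    return [sum(w * x for w, x in zip(filt, row[i:i + m]))
            for i in range(len(row) - m + 1)]


def k_max_pool(values, k):
    """Return the k largest entries of `values`, kept in sequence order."""
    if k <= 0:
        return []
    if k >= len(values):
        return list(values)
    # Rank positions by value, largest first; ties go to the earlier position.
    ranked = sorted(range(len(values)), key=lambda i: (-values[i], i))
    # Re-sort the k selected positions so the original order is preserved.
    keep = sorted(ranked[:k])
    return [values[i] for i in keep]


def dynamic_k(sentence_length, layer, total_layers, k_top):
    """Hypothetical schedule: k shrinks linearly with depth, never below k_top."""
    scaled = (total_layers - layer) * sentence_length / total_layers
    return max(k_top, math.ceil(scaled))


row = [0.1, 0.9, 0.3, 0.7, 0.2, 0.8]
print(k_max_pool(row, 3))  # [0.9, 0.7, 0.8]

# One feature row of a 4-word sentence, a width-2 filter, then pooling.
features = conv1d_row([1, 2, 3, 4], [1, 1])
k = dynamic_k(sentence_length=4, layer=1, total_layers=2, k_top=1)
print(k_max_pool(features, k))
```

Unlike ordinary max pooling, the pooled output keeps the relative order of the selected values, which is what allows a later small filter to relate features that were far apart in the input sentence.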
