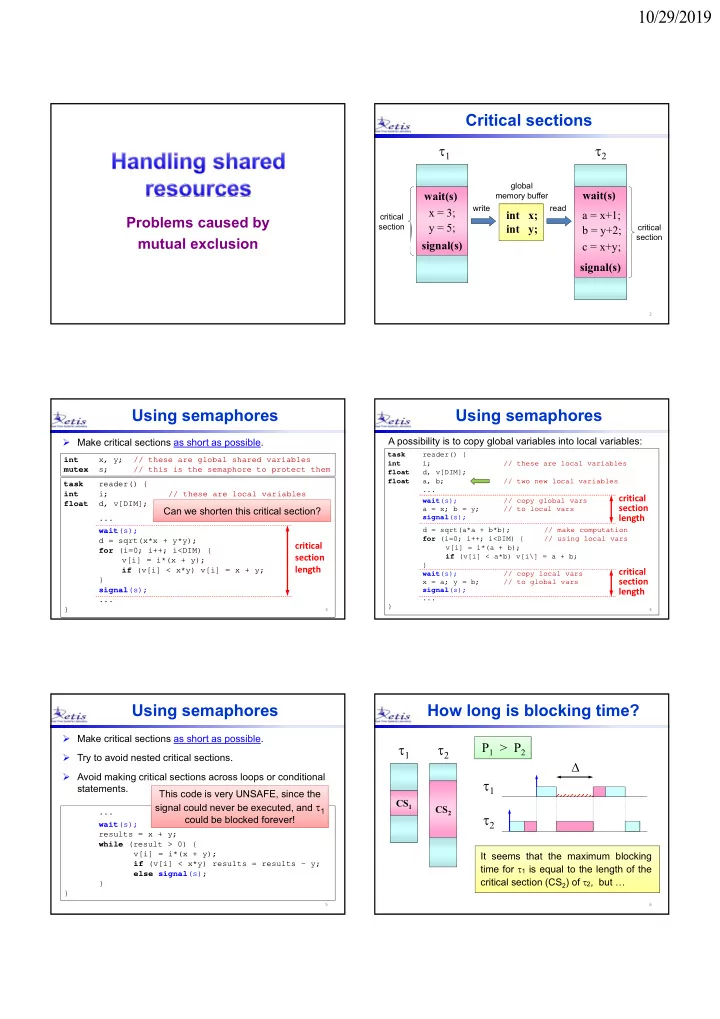

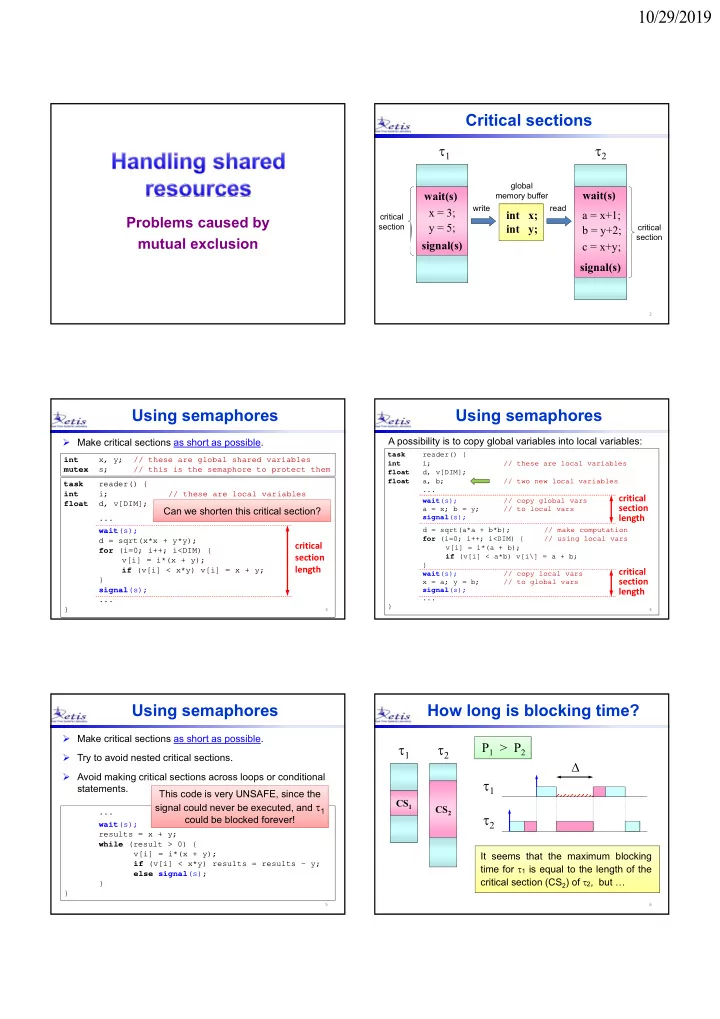

10/29/2019

Critical sections

[Figure: tasks $\tau_1$ and $\tau_2$ share the global variables `int x; int y;` held in a global memory buffer. The writing task executes `wait(s); x = 3; y = 5; signal(s);` and the reading task executes `wait(s); a = x+1; b = y+2; c = x+y; signal(s);`. The code between `wait(s)` and `signal(s)` is each task's critical section.]

Problems caused by mutual exclusion

Using semaphores

Make critical sections as short as possible.

```c
int   x, y;          // these are global shared variables
mutex s;             // this is the semaphore to protect them

task reader() {
    int   i;         // these are local variables
    float d, v[DIM];
    ...
    wait(s);                          // critical section starts here
    d = sqrt(x*x + y*y);
    for (i = 0; i < DIM; i++) {
        v[i] = i*(x + y);
        if (v[i] < x*y) v[i] = x + y;
    }
    signal(s);                        // critical section ends here
    ...
}
```

Can we shorten this critical section?

Using semaphores

A possibility is to copy global variables into local variables:

```c
task reader() {
    int   i;         // these are local variables
    float d, v[DIM];
    float a, b;      // two new local variables
    ...
    wait(s);                          // copy global vars
    a = x; b = y;                     // to local vars
    signal(s);

    d = sqrt(a*a + b*b);              // make computation
    for (i = 0; i < DIM; i++) {       // using local vars
        v[i] = i*(a + b);
        if (v[i] < a*b) v[i] = a + b;
    }

    wait(s);                          // copy local vars
    x = a; y = b;                     // to global vars
    signal(s);
    ...
}
```

The single long critical section is replaced by two short ones, each containing only the copy statements.

Using semaphores

- Make critical sections as short as possible.
- Try to avoid nested critical sections.
- Avoid making critical sections across loops or conditional statements.

```c
...
wait(s);
result = x + y;
while (result > 0) {
    v[i] = i*(x + y);
    if (v[i] < x*y) result = result - y;
    else signal(s);
}
...
```

This code is very UNSAFE, since the signal could never be executed, and a task waiting on `s` could be blocked forever!

How long is blocking time?

[Figure: schedule of two tasks $\tau_1$ and $\tau_2$ with priorities $P_1 > P_2$, showing their critical sections CS1 and CS2.]

It seems that the maximum blocking time for $\tau_1$ is equal to the length of the critical section (CS2) of $\tau_2$, but …
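The copy-in idea from the slides can be sketched with a POSIX mutex standing in for the semaphore `s`. This is a minimal sketch under assumptions: the function name `reader_step`, the initial values of `x` and `y`, and `DIM = 8` are illustrative and not from the slides, the squared length is computed instead of `sqrt` so that no math library is needed, and the write-back step is omitted.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define DIM 8   /* illustrative size, not from the slides */

/* Shared variables and the lock that protects them (illustrative values). */
static double x = 3.0, y = 4.0;
static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;

/* Fills v[] from a private snapshot of x and y and returns x*x + y*y.
 * The lock is held only while the two shared variables are copied. */
double reader_step(double v[DIM])
{
    double a, b;

    assert(v != NULL);

    pthread_mutex_lock(&lock);      /* short critical section: copy in */
    a = x;
    b = y;
    pthread_mutex_unlock(&lock);

    /* Long computation on local copies; no lock is held here. */
    for (int i = 0; i < DIM; i++) {
        v[i] = i * (a + b);
        if (v[i] < a * b)
            v[i] = a + b;
    }
    return a * a + b * b;
}
```

The critical section now contains two assignments regardless of `DIM`, so the time another task can spend waiting for the lock no longer grows with the loop length.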

Schedule with no conflicts

[Figure: schedule of tasks $\tau_1$, $\tau_2$, $\tau_3$ ordered by priority, with no conflicts.]

Conflict on a critical section

[Figure: schedule of tasks $\tau_1$, $\tau_2$, $\tau_3$ ordered by priority, with a blocking interval B marked.]

Conflict on a critical section

[Figure: schedule of tasks $\tau_1$, $\tau_2$, $\tau_3$ ordered by priority, with a blocking interval B marked.]

Priority Inversion

A high priority task is blocked by a lower priority task for an unbounded interval of time.

Solution: introduce a concurrency control protocol for accessing critical sections.

Protocol key aspects

- Access Rule: decides whether to block and when.
- Progress Rule: decides how to execute inside a critical section.
- Release Rule: decides how to order the pending requests of the blocked tasks.

Other aspects

- Analysis: estimates the worst-case blocking times.
- Implementation: finds the simplest way to encode the protocol rules.

Rules for classical semaphores

The following rules are normally used for classical semaphores:

- Access Rule (decides whether to block and when): enter a critical section if the resource is free, block if the resource is locked.
- Progress Rule (decides how to execute in a critical section): execute the critical section with the nominal priority.
- Release Rule (decides how to order pending requests), one of:
  - wake up the blocked task in FIFO order;
  - wake up the blocked task with the highest priority.
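The two release rules listed for classical semaphores differ only in which blocked task is chosen at `signal`. A minimal sketch of both choices follows; the `struct waiter` type, the function names, and the convention that a larger number means a higher priority are assumptions made for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* One blocked task; a larger prio means a higher priority (assumption). */
struct waiter {
    int id;
    int prio;
};

/* FIFO release: the queue is kept in arrival order, so wake index 0. */
size_t pick_fifo(const struct waiter *q, size_t n)
{
    assert(n > 0);
    (void)q;
    return 0;
}

/* Priority release: wake the highest-priority waiter,
 * choosing the earliest arrival when priorities are equal. */
size_t pick_highest(const struct waiter *q, size_t n)
{
    assert(n > 0);
    size_t best = 0;
    for (size_t i = 1; i < n; i++)
        if (q[i].prio > q[best].prio)
            best = i;
    return best;
}
```

With waiters of priorities 2, 5, 5, 1 in arrival order, the FIFO rule wakes the first task while the priority rule wakes the second.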

Resource Access Protocols

- Classical semaphores (No protocol)
- Non Preemptive Protocol (NPP)
- Highest Locker Priority (HLP)
- Priority Inheritance Protocol (PIP)
- Priority Ceiling Protocol (PCP)
- Stack Resource Policy (SRP)

Assumption

Critical sections are correctly accessed by tasks:

[Figure: two access patterns on semaphores $S_A$ and $S_B$. One is `wait(S_A); wait(S_B); signal(S_B); signal(S_A);` and the other is `wait(S_A); wait(S_B); signal(S_A); signal(S_B);`.]

Conflict on a critical section (using classical semaphores)

[Figure: schedule of tasks $\tau_1$, $\tau_2$, $\tau_3$ ordered by priority, with a blocking interval B marked.]

Non Preemptive Protocol

- Access Rule: a task never blocks at the entrance of a critical section, but at its activation time.
- Progress Rule: disable preemption when executing inside a critical section.
- Release Rule: at exit, enable preemption so that the resource is assigned to the pending task with the highest priority.

NPP: example

[Figure: schedule of tasks $\tau_1$, $\tau_2$, $\tau_3$ ordered by priority under NPP, with a blocking interval B marked.]

NPP: implementation notes

Each task $\tau_i$ must be assigned two priorities:

- a nominal priority $P_i$ (fixed) assigned by the application developer;
- a dynamic priority $p_i$ (initialized to $P_i$) used to schedule the task and affected by the protocol.

Then, the protocol can be implemented by changing the behavior of the wait and signal primitives:

- wait(s): $p_i = \max(P_1, \ldots, P_n)$
- signal(s): $p_i = P_i$
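The NPP implementation notes translate almost directly into code. The following is a minimal sketch, assuming a hypothetical `struct task` that stores the nominal priority $P_i$ and the dynamic priority $p_i$, with larger numbers meaning higher priority; the scheduler itself is not modeled.

```c
#include <assert.h>
#include <stddef.h>

/* Nominal priority P_i and dynamic priority p_i of one task
 * (hypothetical layout; a larger number means a higher priority). */
struct task {
    int nominal;
    int dynamic;
};

/* max(P_1, ..., P_n) over all tasks in the system. */
int max_nominal(const struct task *all, size_t n)
{
    assert(n > 0);
    int m = all[0].nominal;
    for (size_t i = 1; i < n; i++)
        if (all[i].nominal > m)
            m = all[i].nominal;
    return m;
}

/* wait(s) under NPP: run at the highest priority, so nobody can preempt. */
void npp_wait(struct task *self, const struct task *all, size_t n)
{
    self->dynamic = max_nominal(all, n);
}

/* signal(s) under NPP: return to the nominal priority. */
void npp_signal(struct task *self)
{
    self->dynamic = self->nominal;
}
```

Since $\max(P_1, \ldots, P_n)$ does not depend on the semaphore, a real kernel could compute it once at start-up, or simply disable preemption inside critical sections.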

NPP: pro & cons

ADVANTAGES: simplicity and efficiency.

- Semaphore queues are not needed, because tasks never block on a wait(s).
- Each task can block at most on a single critical section.
- It prevents deadlocks and allows stack sharing.
- It is transparent to the programmer.

PROBLEMS:

1. Tasks may block even if they do not use resources.
2. Since tasks are blocked at activation, blocking could be unnecessary (pessimistic assumption).

NPP: problem 1

Long critical sections delay all high priority tasks:

[Figure: schedule of tasks $\tau_1$, $\tau_2$, $\tau_3$ ordered by priority, with blocking intervals $B_1$ and $B_2$. Annotation: $B_1$ is useless: $\tau_1$ cannot preempt, although it could!]

Priority assigned to $\tau_i$ inside critical sections: $p_i = P_{\max} = \max(P_1, \ldots, P_n)$

NPP: problem 2

A task could block even if not accessing a critical section:

[Figure: schedule of $\tau_1$ and $\tau_2$, where $\tau_2$ performs a test that selects between two critical sections (CS) and its dynamic priority $p_2$ moves between $P_2$ and $P_{\max}$. Annotation: $\tau_1$ blocks just in case ...]

Highest Locker Priority

- Access Rule: a task never blocks at the entrance of a critical section, but at its activation time.
- Progress Rule: inside resource R, a task executes at the highest priority of the tasks that use R.
- Release Rule: at exit, the dynamic priority of the task is reset to its nominal priority $P_i$.

HLP: example

[Figure: schedule of tasks $\tau_1$, $\tau_2$, $\tau_3$ ordered by priority, with blocking interval $B_2$ and the dynamic priority $p_3$ moving between $P_3$ and $P_2$. Annotation: $\tau_2$ is blocked, but $\tau_1$ can preempt.]

Priority assigned to $\tau_i$ inside a resource R: $p_i(R) = \max \{ P_j \mid \tau_j \text{ uses } R \}$

HLP: implementation notes

- Each task $\tau_i$ is assigned a nominal priority $P_i$ and a dynamic priority $p_i$.
- Each semaphore S is assigned a resource ceiling C(S): $C(S) = \max \{ P_i \mid \tau_i \text{ uses } S \}$

Then, the protocol can be implemented by changing the behavior of the wait and signal primitives:

- wait(S): $p_i = C(S)$
- signal(S): $p_i = P_i$

Note: HLP is also known as Immediate Priority Ceiling (IPC).
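The resource ceiling $C(S)$ and the modified HLP primitives can be sketched as follows. This is an illustration under assumptions: the `struct task` layout, the `uses` table, `N_SEMS`, and the function names are hypothetical, larger numbers mean higher priority, and every semaphore is assumed to have at least one user.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define N_SEMS 2   /* illustrative number of semaphores */

/* Nominal priority P_i, dynamic priority p_i, and the set of
 * semaphores the task uses (hypothetical layout). */
struct task {
    int  nominal;
    int  dynamic;
    bool uses[N_SEMS];
};

/* C(S) = max { P_i | task i uses S }, computed offline from the task set. */
int resource_ceiling(const struct task *all, size_t n, size_t s)
{
    bool found = false;
    int  c = 0;
    assert(s < N_SEMS);
    for (size_t i = 0; i < n; i++) {
        if (all[i].uses[s] && (!found || all[i].nominal > c)) {
            c = all[i].nominal;
            found = true;
        }
    }
    assert(found);   /* assumption: at least one task uses S */
    return c;
}

/* wait(S) under HLP: raise the dynamic priority to the ceiling of S. */
void hlp_wait(struct task *self, int ceiling_of_s)
{
    self->dynamic = ceiling_of_s;
}

/* signal(S) under HLP: return to the nominal priority. */
void hlp_signal(struct task *self)
{
    self->dynamic = self->nominal;
}
```

In the test data used to check this sketch, the lowest-priority task shares semaphore 0 only with the middle-priority task, so inside that resource it runs at priority 2 and the highest-priority task can still preempt it, which is the improvement over NPP shown in the HLP example.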

HLP: pro & cons

ADVANTAGES: simplicity and efficiency.

- Semaphore queues are not needed, because tasks never block on a wait(s).
- Each task can block at most on a single critical section.
- It prevents deadlocks.
- It allows stack sharing.

PROBLEMS:

- Since tasks are blocked at activation, blocking could be unnecessary (same pessimism as for NPP).
- It is not transparent to programmers (due to ceilings).

Priority Inheritance Protocol

- Access Rule: a task blocks at the entrance of a critical section if the resource is locked.
- Progress Rule: inside resource R, a task executes with the highest priority of the tasks blocked on R.
- Release Rule: at exit, the dynamic priority of the task is reset to its nominal priority $P_i$.

PIP: types of blocking

- Direct blocking: a task blocks on a locked semaphore.
- Indirect blocking (push-through blocking): a task blocks because a lower priority task inherited a higher priority.

BLOCKING: a delay caused by lower priority tasks.

PIP: example

[Figure: schedule of tasks $\tau_1$, $\tau_2$, $\tau_3$ ordered by priority, showing direct blocking and push-through blocking. $\tau_3$ inherits the priority of $\tau_1$, so its dynamic priority $p_3$ moves between $P_3$ and $P_1$.]

PIP: implementation notes

Inside a resource R the dynamic priority $p_i$ is set as $p_i(R) = \max \{ P_h \mid \tau_h \text{ blocked on } R \}$

```
wait(s):
    if (s == 0) {
        <suspend the calling task τ_exe in the semaphore queue>
        <find the task τ_k that is locking the semaphore s>
        p_k = P_exe        // priority inheritance
        <call the scheduler>
    }
    else s = 0;

signal(s):
    if (there are blocked tasks) {
        <awake the highest priority task in the semaphore queue>
        p_exe = P_exe
        <call the scheduler>
    }
    else s = 1;
```

Identifying blocking resources

Under PIP, a task $\tau_i$ can be blocked on a semaphore $S_k$ only if:

1. $S_k$ is directly shared between $\tau_i$ and lower priority tasks (direct blocking), OR
2. $S_k$ is shared between tasks with priority lower than $\tau_i$ and tasks having priority higher than $\tau_i$ (push-through blocking).
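The PIP `wait`/`signal` pseudocode can be turned into a small bookkeeping model. The sketch below is an assumption-laden illustration, not a kernel implementation: `struct task`, `struct pip_sem`, `MAX_WAITERS`, and the function names are hypothetical, larger numbers mean higher priority, only a single non-nested semaphore is modeled (so no transitive inheritance), and suspending tasks or calling the scheduler is left out.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MAX_WAITERS 8   /* illustrative queue capacity */

struct task {
    int nominal;   /* P_i */
    int dynamic;   /* p_i */
};

struct pip_sem {
    struct task *owner;                  /* NULL when the semaphore is free */
    struct task *waiters[MAX_WAITERS];   /* tasks blocked on the semaphore */
    size_t       n_waiters;
};

/* Returns true if t entered the critical section, false if it blocked. */
bool pip_wait(struct pip_sem *s, struct task *t)
{
    if (s->owner == NULL) {
        s->owner = t;
        return true;
    }
    assert(s->n_waiters < MAX_WAITERS);
    s->waiters[s->n_waiters++] = t;
    if (t->dynamic > s->owner->dynamic)
        s->owner->dynamic = t->dynamic;      /* priority inheritance */
    return false;
}

/* Releases the semaphore. Returns the task that now holds it,
 * or NULL if no task was blocked and the semaphore became free. */
struct task *pip_signal(struct pip_sem *s)
{
    assert(s->owner != NULL);
    s->owner->dynamic = s->owner->nominal;   /* drop inherited priority */
    if (s->n_waiters == 0) {
        s->owner = NULL;
        return NULL;
    }
    size_t best = 0;                         /* highest-priority waiter */
    for (size_t i = 1; i < s->n_waiters; i++)
        if (s->waiters[i]->dynamic > s->waiters[best]->dynamic)
            best = i;
    struct task *next = s->waiters[best];
    s->waiters[best] = s->waiters[--s->n_waiters];
    s->owner = next;
    return next;
}
```

With a low-priority task holding the semaphore and a high-priority task calling `pip_wait`, the holder's dynamic priority rises to the waiter's until `pip_signal`, which is what keeps a medium-priority task from preempting it in the PIP example.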
