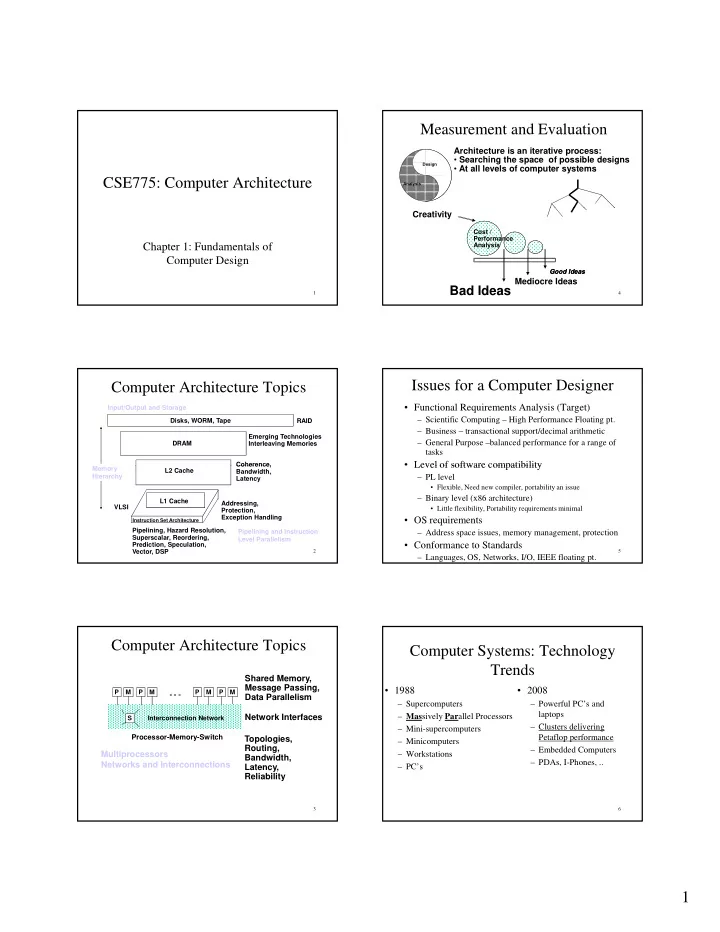

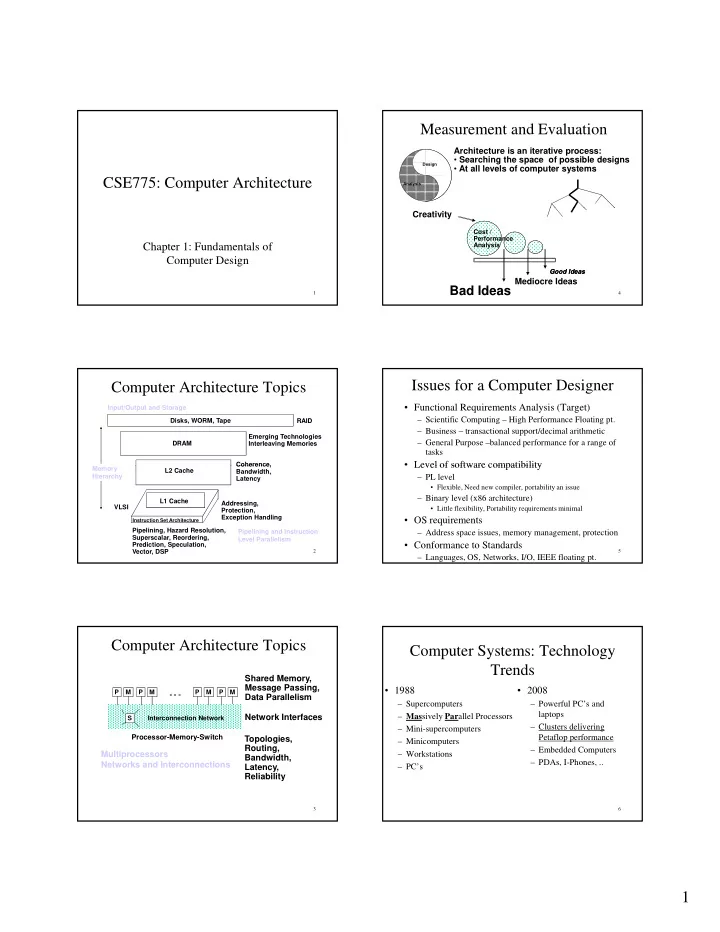

CSE775: Computer Architecture
Chapter 1: Fundamentals of Computer Design

Issues for a Computer Designer
• Functional Requirements Analysis (Target)
  – Scientific Computing: high-performance floating point
  – Business: transactional support/decimal arithmetic
  – General Purpose: balanced performance for a range of tasks
• Level of software compatibility
  – PL level
    • Flexible, but needs a new compiler; portability is an issue
  – Binary level (x86 architecture)
    • Little flexibility; portability requirements minimal
• OS requirements
  – Address space issues, memory management, protection
• Conformance to Standards
  – Languages, OS, Networks, I/O, IEEE floating pt.

Computer Systems: Technology Trends
• 1988
  – Supercomputers
  – Massively Parallel Processors
  – Mini-supercomputers
  – Minicomputers
  – Workstations
  – PCs
• 2008
  – Powerful PCs and laptops
  – Clusters delivering Petaflop performance
  – Embedded Computers
  – PDAs, iPhones, ..

Measurement and Evaluation
Architecture is an iterative process:
• Searching the space of possible designs
• At all levels of computer systems
[Figure: Design and Analysis cycle; Creativity produces ideas, and Cost / Performance Analysis sorts them into Good Ideas, Mediocre Ideas, and Bad Ideas]

Computer Architecture Topics
• Input/Output and Storage: Disks, WORM, Tape; RAID
• Emerging Technologies
• Memory Hierarchy: DRAM, Interleaving Memories; L2 Cache (Coherence, Bandwidth, Latency); L1 Cache
• VLSI
• Instruction Set Architecture: Addressing, Protection, Exception Handling
• Pipelining and Instruction Level Parallelism: Pipelining, Hazard Resolution, Superscalar, Reordering, Prediction, Speculation, Vector, DSP

Computer Architecture Topics
• Multiprocessors: Shared Memory, Message Passing, Data Parallelism
• Networks and Interconnections
  – Processor-Memory-Switch: processor/memory nodes (P M P M ...) attached through network interfaces (S) to an Interconnection Network
  – Topologies, Routing, Bandwidth, Latency, Reliability

Technology Trends
• Integrated circuit logic technology: transistor count on a chip grows about 40% to 55% per year.
• Semiconductor RAM: capacity increases by about 40% per year, while cycle time has improved very slowly, decreasing by about one-third in 10 years. Cost has decreased at about the rate at which capacity increases.
• Magnetic disk technology: in the 1990s disk density improved 60% to 100% per year, compared with about 30% per year before 1990. Since 2004, it has dropped back to about 30% per year.
• Network technology: latency and bandwidth are both important. Internet infrastructure in the U.S. has been doubling in bandwidth every year. High-performance system area networks (such as InfiniBand) deliver continuously reduced latency.

Why Such Change in 20 years?
• Performance
  – Technology advances
    • CMOS (complementary metal oxide semiconductor) VLSI dominates older technologies like TTL (Transistor Transistor Logic) in cost AND performance
  – Computer architecture advances improve the low end
    • RISC, pipelining, superscalar, RAID, …
• Price: lower costs due to …
  – Simpler development
    • CMOS VLSI: smaller systems, fewer components
  – Higher volumes
  – Lower margins by class of computer, due to fewer services

Growth in Microprocessor Performance
[Figure 1.1]
• In the 1990s, the main source of innovation in computer design came from RISC-style pipelined processors.
• In the last several years, the annual growth rate has been (only) 10-20%.

Growth in Performance of RAM & CPU
[Figure 5.2]
• Mismatch between CPU performance growth and memory performance growth!!
• And almost unchanged memory latency
• Little instruction-level parallelism left to exploit efficiently
• Maximum power dissipation of air-cooled chips has been reached

[Figure: Cost of Six Generations of DRAMs]

[Figure: Cost of Microprocessors]
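The growth rates above compound year over year. A minimal Python sketch, assuming constant annual rates (a simplification; real trends vary from year to year), turns the slide's percentages into per-decade factors and doubling times, and converts "cycle time decreased by about one-third in 10 years" into an equivalent annual rate. The helper names `compound`, `doubling_time`, and `annual_rate` are hypothetical.

```python
import math

def compound(rate_per_year: float, years: float) -> float:
    """Total growth factor after `years` at a constant annual rate (0.40 means 40%)."""
    return (1.0 + rate_per_year) ** years

def doubling_time(rate_per_year: float) -> float:
    """Years needed to double at a constant annual growth rate."""
    return math.log(2.0) / math.log(1.0 + rate_per_year)

def annual_rate(total_factor: float, years: float) -> float:
    """Constant annual rate that produces `total_factor` over `years` (negative = shrinking)."""
    return total_factor ** (1.0 / years) - 1.0

# Transistor count growth of 40%-55% per year (rates from the slide).
for rate in (0.40, 0.55):
    print(f"transistors at {rate:.0%}/yr: x{compound(rate, 10):.1f} per decade, "
          f"doubling every {doubling_time(rate):.2f} years")

# DRAM cycle time "decreasing by about one-third in 10 years":
# the new cycle time is about 2/3 of the old one.
print(f"DRAM cycle time changes by {annual_rate(2.0 / 3.0, 10):.1%} per year")
# DRAM capacity at 40% per year over the same decade, for comparison.
print(f"DRAM capacity: x{compound(0.40, 10):.1f} per decade")
```

Under these assumptions, 40%-55% annual growth gives roughly 29x to 80x per decade, while a one-third drop in DRAM cycle time over ten years is only about a 4% improvement per year, which is the CPU-memory mismatch the slides highlight.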

[Figure: Components of Price for a $1000 PC]

Integrated Circuits Costs
$$\text{IC cost} = \frac{\text{Die cost} + \text{Testing cost} + \text{Packaging cost}}{\text{Final test yield}}$$
$$\text{Die cost} = \frac{\text{Wafer cost}}{\text{Dies per wafer} \times \text{Die yield}}$$
$$\text{Dies per wafer} = \frac{\pi \times (\text{Wafer\_diam}/2)^2}{\text{Die area}} - \frac{\pi \times \text{Wafer\_diam}}{\sqrt{2 \times \text{Die area}}} - \text{Test dies}$$
$$\text{Die yield} = \text{Wafer yield} \times \left(1 + \frac{\text{Defects\_per\_unit\_area} \times \text{Die\_area}}{\alpha}\right)^{-\alpha}$$
Die cost goes roughly with $(\text{Die area})^4$.
DAP.S98

Failures and Dependability
• Failures at any level cost money
  – Integrated circuits (processor, memory)
  – Disks
  – Networks
• One hour of downtime costs millions of dollars (Amazon, Google, ..)
• No concept of downtime in the middle of the night
• Systems need to be designed with fault tolerance
  – Hardware
  – Software

Performance and Cost

| Plane | DC to Paris | Speed | Passengers | Throughput (pmph) |
|---|---|---|---|---|
| Boeing 747 | 6.5 hours | 610 mph | 470 | 286,700 |
| BAC/Sud Concorde | 3 hours | 1350 mph | 132 | 178,200 |

• Time to run the task (ExTime)
  – Execution time, response time, latency
• Tasks per day, hour, week, sec, ns … (Performance)
  – Throughput, bandwidth

The Bottom Line: Performance (and Cost)
"X is n times faster than Y" means
$$\frac{\text{ExTime}(Y)}{\text{ExTime}(X)} = \frac{\text{Performance}(X)}{\text{Performance}(Y)} = n$$
• Speed of Concorde vs. Boeing 747
• Throughput of Boeing 747 vs. Concorde

Metrics of Performance
• Application: answers per month, operations per second
• Programming Language
• Compiler
• ISA: (millions) of instructions per second (MIPS); (millions) of (FP) operations per second (MFLOP/s)
• Datapath, Control: megabytes per second
• Function Units: cycles per second (clock rate)
• Transistors, Wires, Pins
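The cost and performance formulas above can be checked numerically. This minimal Python sketch transcribes the slide's dies-per-wafer, die-yield, die-cost, and IC-cost equations plus the "n times faster" ratio. The function names and the example process parameters (30 cm wafer, 1 cm² die, 0.4 defects/cm², α = 4, $1000 wafer cost) are illustrative assumptions, not values from the slides.

```python
import math

def dies_per_wafer(wafer_diam, die_area, test_dies=0):
    """pi*(d/2)^2 / A - pi*d / sqrt(2*A) - test dies (d in cm, A in cm^2)."""
    return (math.pi * (wafer_diam / 2) ** 2 / die_area
            - math.pi * wafer_diam / math.sqrt(2 * die_area)
            - test_dies)

def die_yield(wafer_yield, defects_per_area, die_area, alpha):
    """Wafer yield * (1 + defects_per_unit_area * die_area / alpha) ** (-alpha)."""
    return wafer_yield * (1 + defects_per_area * die_area / alpha) ** (-alpha)

def die_cost(wafer_cost, wafer_diam, die_area, wafer_yield, defects_per_area, alpha, test_dies=0):
    """Wafer cost / (dies per wafer * die yield), i.e. cost per good die."""
    good_dies = (dies_per_wafer(wafer_diam, die_area, test_dies)
                 * die_yield(wafer_yield, defects_per_area, die_area, alpha))
    return wafer_cost / good_dies

def ic_cost(die, testing, packaging, final_test_yield):
    """(Die cost + testing cost + packaging cost) / final test yield."""
    return (die + testing + packaging) / final_test_yield

def times_faster(extime_x, extime_y):
    """n in "X is n times faster than Y" = ExTime(Y) / ExTime(X)."""
    return extime_y / extime_x

# Hypothetical process parameters, chosen only for illustration.
cost = die_cost(wafer_cost=1000.0, wafer_diam=30.0, die_area=1.0,
                wafer_yield=1.0, defects_per_area=0.4, alpha=4.0)
print(f"about ${cost:.2f} per good die")

# Airplane example from the table: execution time vs. throughput.
print(f"Concorde is {times_faster(3.0, 6.5):.2f}x faster (DC to Paris)")
print(f"747 throughput / Concorde throughput = {(610 * 470) / (1350 * 132):.2f}")
```

With the table's numbers, the Concorde is about 2.2 times faster on the DC-Paris trip, while the 747 moves about 1.6 times as many passenger-miles per hour, so which plane "performs better" depends on whether execution time or throughput is the metric.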

Computer Engineering Methodology
[Figure: design cycle of Evaluate Existing Systems for Bottlenecks → Simulate New Designs and Organizations → Implement Next Generation System, informed by Benchmarks, Workloads, Technology Trends, and Implementation Complexity]

Measurement Tools
• Benchmarks, Traces, Mixes
• Hardware: Cost, delay, area, power estimation
• Simulation (many levels)
  – ISA, RT, Gate, Circuit
• Queuing Theory
• Rules of Thumb
• Fundamental "Laws"/Principles
• Understanding the limitations of any measurement tool is crucial.

Issues with Benchmark Engineering
• Motivated by the bottom dollar: good performance on classic suites → more customers, better sales.
• Benchmark engineering → limits the longevity of benchmark suites.
• Technology and applications → limit the longevity of benchmark suites.

SPEC: System Performance Evaluation Cooperative
• First Round 1989
  – 10 programs yielding a single number ("SPECmarks")
• Second Round 1992
  – SPECInt92 (6 integer programs) and SPECfp92 (14 floating point programs)
  – "benchmarks useful for 3 years"
• SPEC CPU2000 (12 integer benchmarks: CINT2000, and 14 floating-point benchmarks: CFP2000)
• SPEC 2006 (CINT2006, CFP2006)
• Server Benchmarks
  – SPECWeb
  – SPECFS
• TPC (TPC-A, TPC-C, TPC-H, TPC-W, …)

[Figure: SPEC 2000 (CINT 2000) Results]

[Figure: SPEC 2000 (CFP 2000) Results]
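Queuing theory is listed above as a measurement tool. As a hedged illustration, this Python sketch uses the standard M/M/1 model (Poisson arrivals, exponentially distributed service times, a single server) to show how mean response time depends on utilization. The choice of model, the function name `mm1`, and the 100 requests/s service rate are assumptions made for the example.

```python
def mm1(arrival_rate: float, service_rate: float) -> dict:
    """Steady-state metrics of an M/M/1 queue (assumed model: Poisson arrivals,
    exponentially distributed service times, a single server).
    Rates are in requests per second; the queue is stable only if arrival_rate < service_rate."""
    if not 0 <= arrival_rate < service_rate:
        raise ValueError("M/M/1 requires 0 <= arrival_rate < service_rate")
    rho = arrival_rate / service_rate                     # server utilization
    response_time = 1.0 / (service_rate - arrival_rate)   # mean time in system (s)
    wait_time = rho / (service_rate - arrival_rate)       # mean time waiting before service (s)
    in_system = rho / (1.0 - rho)                         # mean number present (= arrival_rate * response_time)
    return {"utilization": rho, "response_time": response_time,
            "wait_time": wait_time, "in_system": in_system}

# Hypothetical server that completes 100 requests/s on average.
SERVICE_RATE = 100.0
for arrivals in (50.0, 80.0, 90.0, 99.0):
    m = mm1(arrivals, SERVICE_RATE)
    print(f"arrivals={arrivals:5.1f}/s  utilization={m['utilization']:.2f}  "
          f"response={m['response_time'] * 1000:7.1f} ms  in system={m['in_system']:.1f}")
```

With these assumed rates, mean response time climbs from 20 ms at 50% utilization to 1000 ms at 99%. That sensitivity near saturation is one reason to check whether the assumed arrival and service distributions are realistic before trusting a queuing model's predictions.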

Performance Evaluation
• "For better or worse, benchmarks shape a field"
• Good products are created when we have:
  – Good benchmarks
  – Good ways to summarize performance
• Since sales are in part a function of performance relative to the competition, companies invest in improving the product as reported by the performance summary
• If the benchmarks or summary are inadequate, the choice is between improving the product for real programs and improving it to get more sales; sales almost always win!
• Execution time is the measure of computer performance!

How to Summarize Performance
• Arithmetic mean (weighted arithmetic mean) tracks execution time: $\frac{1}{n}\sum_{i} T_i$ or $\sum_{i} W_i T_i$
• Harmonic mean (weighted harmonic mean) of rates (e.g., MFLOPS) tracks execution time: $n / \sum_{i} (1/R_i)$ or $1 / \sum_{i} (W_i / R_i)$

How to Summarize Performance
• Normalized execution time is handy for scaling performance (e.g., X times faster than SPARCstation 10)
• But do not take the arithmetic mean of normalized execution times; use the geometric mean: $\left(\prod_{i} R_i\right)^{1/n}$

Reporting Performance Results
• Reproducibility
• → Apply them on publicly available benchmarks. Pecking/picking order:
  – Real Programs
  – Real Kernels
  – Toy Benchmarks
  – Synthetic Benchmarks

Simulations
• When are simulations useful?
• What are their limitations, i.e., what real-world phenomena do they not account for?
• The larger the simulation trace, the less tractable the post-processing analysis.

Queuing Theory (Cont'd)
• What are the distributions of arrival rates and values for other parameters?
• Are they realistic?
• What happens when the parameters or distributions are changed?
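The summary rules above can be compared directly. This minimal Python sketch implements the arithmetic, weighted arithmetic, harmonic, weighted harmonic, and geometric means, then applies them to hypothetical execution times for two programs on two machines, A and B (the times are invented for illustration). It shows that the arithmetic mean of normalized times depends on which machine is the reference, while the geometric mean does not.

```python
import math

def arithmetic_mean(times):
    """sum(T_i) / n: tracks total execution time."""
    return sum(times) / len(times)

def weighted_arithmetic_mean(times, weights):
    """sum(W_i * T_i), with weights assumed to sum to 1."""
    return sum(w * t for w, t in zip(weights, times))

def harmonic_mean(rates):
    """n / sum(1 / R_i): the right average for rates such as MFLOPS."""
    return len(rates) / sum(1.0 / r for r in rates)

def weighted_harmonic_mean(rates, weights):
    """1 / sum(W_i / R_i), with weights assumed to sum to 1."""
    return 1.0 / sum(w / r for w, r in zip(weights, rates))

def geometric_mean(ratios):
    """(prod R_i)^(1/n), computed through logarithms to avoid overflow."""
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))

# Hypothetical execution times (seconds) of programs P1 and P2.
times = {"A": [1.0, 1000.0], "B": [10.0, 100.0]}

for ref in ("A", "B"):
    for machine in ("A", "B"):
        normalized = [t / r for t, r in zip(times[machine], times[ref])]
        print(f"reference={ref} machine={machine}: "
              f"AM={arithmetic_mean(normalized):.2f} GM={geometric_mean(normalized):.2f}")

# Two programs doing equal work at 10 and 100 MFLOPS: the harmonic mean equals
# total work / total time, i.e. 2 / (1/10 + 1/100) MFLOPS.
print(f"average rate = {harmonic_mean([10.0, 100.0]):.2f} MFLOPS")
```

Normalized to A, the arithmetic mean makes B look 5.05 times slower; normalized to B, it makes A look 5.05 times slower. The geometric mean reports 1.00 either way. The geometric mean is consistent but does not track total time: the raw totals here are 1001 s for A and 110 s for B. The harmonic mean of 10 and 100 MFLOPS is about 18.18 MFLOPS, matching total work divided by total time.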
