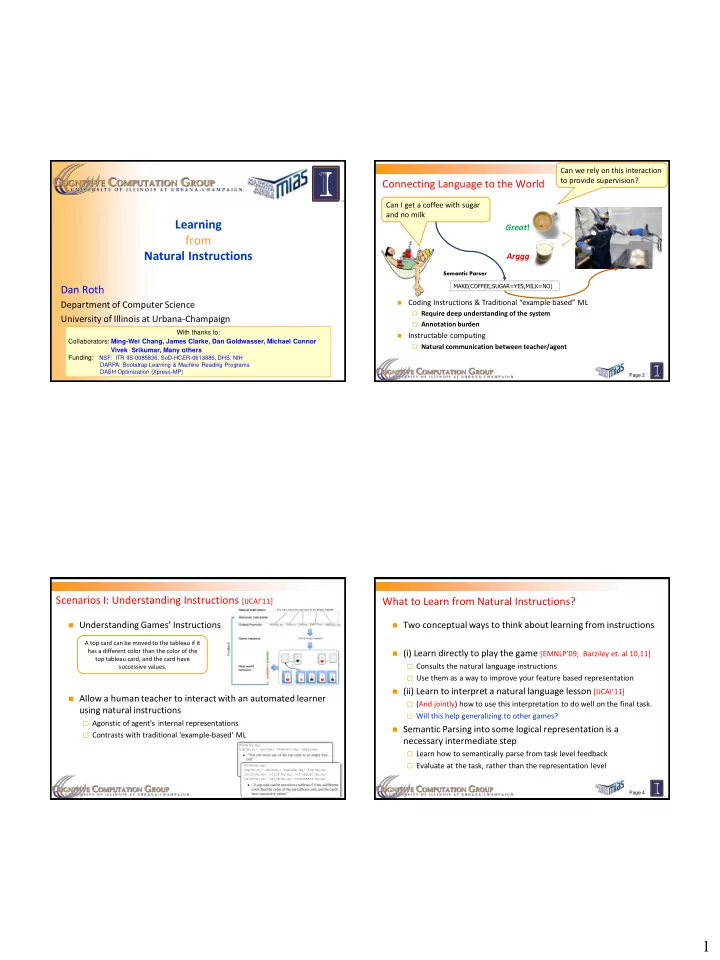

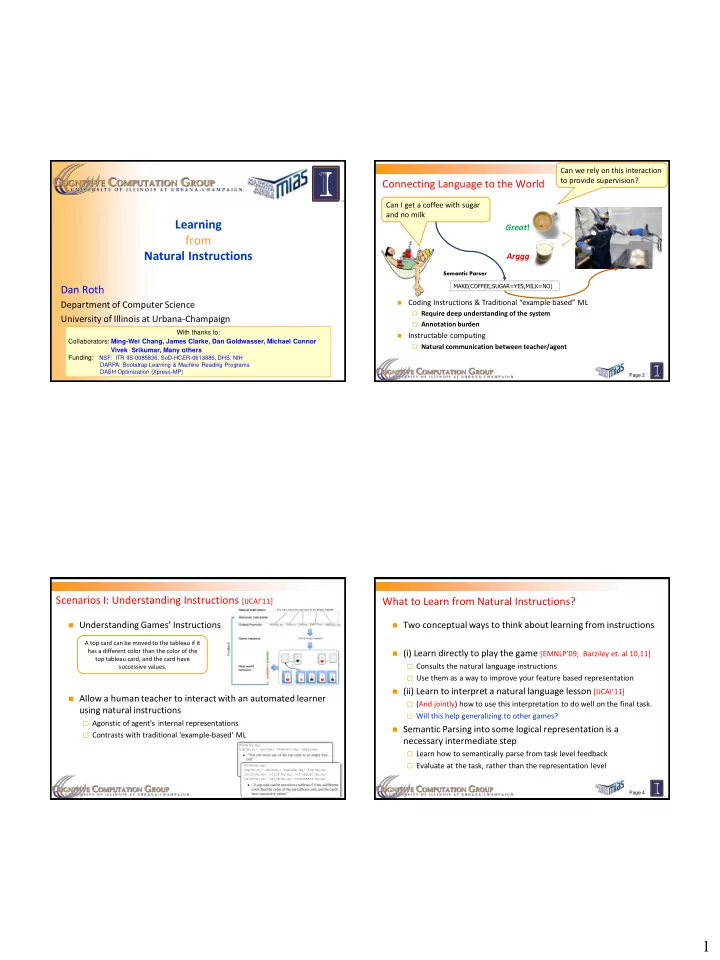

Learning from Natural Instructions

Dan Roth, Department of Computer Science, University of Illinois at Urbana-Champaign

July 2011, ALIHT-2011, IJCAI, Barcelona, Spain

With thanks to:
- Collaborators: Ming-Wei Chang, James Clarke, Dan Goldwasser, Michael Connor, Vivek Srikumar, many others
- Funding: NSF: ITR IIS-0085836, SoD-HCER-0613885, DHS; NIH; DARPA: Bootstrap Learning & Machine Reading Programs; DASH Optimization (Xpress-MP)

Connecting Language to the World
- "Can I get a coffee with sugar and no milk" → Semantic Parser → MAKE(COFFEE,SUGAR=YES,MILK=NO)
- Possible responses: "Great!" or "Arggg"
- Can we rely on this interaction to provide supervision?
- Coding Instructions & Traditional “example based” ML: require deep understanding of the system; annotation burden
- Instructable computing: natural communication between teacher/agent

Scenarios I: Understanding Instructions [IJCAI’11]
- Understanding Games’ Instructions. Example: "A top card can be moved to the tableau if it has a different color than the color of the top tableau card, and the cards have successive values."
- Allow a human teacher to interact with an automated learner using natural instructions
- Agnostic of agent's internal representations
- Contrasts with traditional 'example-based' ML

What to Learn from Natural Instructions?
- Two conceptual ways to think about learning from instructions:
- (i) Learn directly to play the game [EMNLP’09; Barzilay et al. 10,11]. Consults the natural language instructions. Use them as a way to improve your feature based representation.
- (ii) Learn to interpret a natural language lesson [IJCAI’11], (and jointly) how to use this interpretation to do well on the final task. Will this help generalizing to other games?
- Semantic Parsing into some logical representation is a necessary intermediate step
- Learn how to semantically parse from task level feedback
- Evaluate at the task, rather than the representation level

Scenario I’: Semantic Parsing [CoNLL’10, ACL’11 …]
- X: "What is the largest state that borders New York and Maryland?"
- Y: largest( state( next_to( state(NY)) AND next_to (state(MD))))
- Successful interpretation involves multiple decisions
- What entities appear in the interpretation? Does “New York” refer to a state or a city?
- How to compose fragments together? state(next_to()) >< next_to(state())
- Question: How to learn to semantically parse from “task level” feedback.

Scenario II. The language-world mapping problem [IJCAI’11, ACL’10,…]
- How do we acquire language?
- “the world” paired with “the language”: [Topid rivvo den marplox.]
- Is it possible to learn the meaning of verbs from natural, behavior level, feedback? (no intermediate representation)

Outline
- Background: NL Structure with Integer Linear Programming
  - Global Inference with expressive structural constraints in NLP
- Constraints Driven Learning with Indirect Supervision
  - Training Paradigms for latent structure
  - Indirect Supervision Training with latent structure (NAACL’10)
  - Training Structure Predictors by Inventing binary labels (ICML’10)
- Response based Learning
  - Driving supervision signal from World’s Response (CoNLL’10, IJCAI’11)
  - Semantic Parsing; playing Freecell; Language Acquisition

Interpret Language Into An Executable Representation
- X: "What is the largest state that borders New York and Maryland?"
- Y: largest( state( next_to( state(NY)) AND next_to (state(MD))))
- Successful interpretation involves multiple decisions
- What entities appear in the interpretation? Does “New York” refer to a state or a city?
- How to compose fragments together? state(next_to()) >< next_to(state())
- Question: How to learn to semantically parse from “task level” feedback.
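To make "executable representation" and "task level feedback" concrete, here is a minimal sketch. It assumes a toy world that is not part of the slides: the `BORDERS` and `AREA` tables, the helper names, and the expected answer "PA" are all illustrative, and the areas are only approximate. Each candidate logical form is executed against the world, and the only supervision is whether the resulting answer is accepted.

```python
from typing import Callable, Dict, Set

# Toy world (illustrative assumption, not from the slides).
# Areas are approximate, in thousands of square miles.
BORDERS: Dict[str, Set[str]] = {
    "NY": {"NJ", "PA", "CT", "MA", "VT"},
    "MD": {"PA", "DE", "VA", "WV"},
}
AREA: Dict[str, float] = {
    "NJ": 8.7, "PA": 46.1, "CT": 5.5, "MA": 10.6,
    "VT": 9.6, "DE": 2.5, "VA": 42.8, "WV": 24.2,
}


def next_to(state: str) -> Set[str]:
    """States that border the given state in the toy world."""
    return BORDERS[state]


def largest(states: Set[str]) -> str:
    """The candidate state with the greatest area."""
    return max(states, key=AREA.__getitem__)


def feedback(predicted: object, expected: object) -> int:
    """Binary task-level response: +1 if the answer is accepted, else -1."""
    return 1 if predicted == expected else -1


# Two candidate parses of the question; only the first composes the
# fragments as in the slide's Y.
candidates: Dict[str, Callable[[], object]] = {
    "largest(state(next_to(state(NY)) AND next_to(state(MD))))":
        lambda: largest(next_to("NY") & next_to("MD")),
    "state(next_to(state(MD)))":
        lambda: next_to("MD"),
}

# The learner never sees a gold logical form, only the world's response.
responses = {form: feedback(run(), "PA") for form, run in candidates.items()}
for form, signal in responses.items():
    print(signal, form)
```

The point of the design is that the feedback function inspects only the executed answer, so no annotated logical forms are needed to score a candidate parse.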

Learning and Inference in NLP
- Natural Language Decisions are Structured
- Global decisions in which several local decisions play a role but there are mutual dependencies on their outcome.
- It is essential to make coherent decisions in a way that takes the interdependencies into account. Joint, Global Inference.
- But: Learning structured models requires annotating structures.
- Interdependencies among decision variables should be exploited in Decision Making (Inference) and in Learning.
- Goal: learn from minimal, indirect supervision
- Amplify it using interdependencies among variables

Constrained Conditional Models (aka ILP Inference)
- Objective, first component: weight vector for “local” models. Features, classifiers; log-linear models (HMM, CRF) or a combination.
- Objective, second component: (soft) constraints component. Penalty for violating the constraint, scaled by how far y is from a “legal” assignment.
- How to solve? This is an Integer Linear Program. Solving using ILP packages gives an exact solution. Cutting Planes, Dual Decomposition & other search techniques are possible.
- How to train? Training is learning the objective function. Decouple? Decompose? How to exploit the structure to minimize supervision?

Three Ideas
- Idea 1 (Modeling): Separate modeling and problem formulation from algorithms. Similar to the philosophy of probabilistic modeling.
- Idea 2 (Inference): Keep model simple, make expressive decisions (via constraints). Unlike probabilistic modeling, where models become more expressive.
- Idea 3 (Learning): Expressive structured decisions can be supervised indirectly via related simple binary decisions. Global Inference can be used to amplify the minimal supervision.

Examples: CCM Formulations (aka ILP for NLP)
- CCMs can be viewed as a general interface to easily combine declarative domain knowledge with data driven statistical models.
- Formulate NLP Problems as ILP problems (inference may be done otherwise):
  1. Sequence tagging (HMM/CRF + Global constraints)
  2. Sentence Compression (Language Model + Global Constraints)
  3. SRL (Independent classifiers + Global Constraints)
- Sequential Prediction, HMM/CRF based: $\arg\max \sum \lambda_{ij} x_{ij}$. Linguistics constraint: cannot have both A states and B states in an output sequence.
- Sentence Compression/Summarization, Language Model based: $\arg\max \sum \lambda_{ijk} x_{ijk}$. Linguistics constraints: if a modifier is chosen, include its head; if a verb is chosen, include its arguments.
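The CCM idea of a simple local model plus a penalized constraint can be sketched in a few lines. The sketch assumes the objective has the form "sum of local scores minus a penalty `rho` times the number of violated constraints", and it uses the slide's sequential-prediction constraint that A states and B states cannot both appear. The label set, the scores in `LOCAL`, and the function names are hypothetical. Exhaustive enumeration stands in for an ILP solver here, which is only feasible for tiny problems.

```python
from itertools import product
from typing import Dict, List, Sequence, Tuple

LABELS = ("A", "B", "O")  # hypothetical label set

# Hypothetical local scores, one dict per position (e.g. from a classifier).
LOCAL: List[Dict[str, float]] = [
    {"A": 2.0, "B": 0.5, "O": 0.0},
    {"A": 0.25, "B": 1.0, "O": 0.0},
    {"A": 1.5, "B": 0.25, "O": 0.0},
]


def violations(y: Sequence[str]) -> int:
    """1 if the sequence contains both A and B states, else 0."""
    return 1 if ("A" in y and "B" in y) else 0


def ccm_argmax(local: List[Dict[str, float]],
               rho: float) -> Tuple[Tuple[str, ...], float]:
    """Return the assignment maximizing local score minus rho * violations."""
    best: Tuple[str, ...] = ()
    best_score = float("-inf")
    for y in product(LABELS, repeat=len(local)):
        score = sum(scores[label] for scores, label in zip(local, y))
        score -= rho * violations(y)
        if score > best_score:
            best, best_score = y, score
    return best, best_score


for rho in (0.0, 0.5, 10.0):
    labels, score = ccm_argmax(LOCAL, rho)
    print(f"rho={rho}: {labels} (score {score})")
```

With no penalty the locally best labels win even though they mix A and B; a small penalty is still outweighed by the local evidence; a large penalty makes the constraint effectively hard and the prediction switches to a consistent sequence. The local model itself is never changed, only the inference.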

Example: Sequence Tagging
- Any Boolean rule can be encoded as a (collection of) linear constraints.
- LBJ: allows a developer to encode constraints in FOL, to be compiled into linear inequalities automatically.
- Example: "the man saw the dog", where each word can take one of the tags D, N, A, V.
- HMM / CRF:

$$y^* = \arg\max_{y \in \mathcal{Y}} P(y_0)\,P(x_0 \mid y_0) \prod_{i=1}^{n-1} P(y_i \mid y_{i-1})\,P(x_i \mid y_i)$$

- As an ILP, discrete predictions:

$$\sum_{y \in \mathcal{Y}} 1_{\{y_0 = y\}} = 1$$

- Output consistency:

$$\forall y:\quad 1_{\{y_0 = y\}} = \sum_{y' \in \mathcal{Y}} 1_{\{y_0 = y \,\wedge\, y_1 = y'\}}$$

$$\forall y,\ i > 1:\quad \sum_{y' \in \mathcal{Y}} 1_{\{y_{i-1} = y' \,\wedge\, y_i = y\}} = \sum_{y'' \in \mathcal{Y}} 1_{\{y_i = y \,\wedge\, y_{i+1} = y''\}}$$

- Other constraints:

$$1_{\{y_0 = \text{“V”}\}} + \sum_{i=1}^{n-1} \sum_{y \in \mathcal{Y}} 1_{\{y_{i-1} = y \,\wedge\, y_i = \text{“V”}\}} \ge 1$$

Information extraction without Prior Knowledge
- Input citation: Lars Ole Andersen . Program analysis and specialization for the C Programming language. PhD thesis. DIKU , University of Copenhagen, May 1994 .
- Prediction result of a trained HMM:
  - [AUTHOR] Lars Ole Andersen . Program analysis and
  - [TITLE] specialization for the
  - [EDITOR] C
  - [BOOKTITLE] Programming language
  - [TECH-REPORT] . PhD thesis .
  - [INSTITUTION] DIKU , University of Copenhagen , May
  - [DATE] 1994 .
- Violates lots of natural constraints!

Strategies for Improving the Results
- (Pure) Machine Learning Approaches: Higher Order HMM/CRF? Increasing the window size? Adding a lot of new features.
- These increase the model complexity and require a lot of labeled examples.
- What if we only have a few labeled examples?
- Can we keep the learned model simple and still make expressive decisions?
- Other options? Constrain the output to make sense. Push the (simple) model in a direction that makes sense.

Examples of Constraints
- Each field must be a consecutive list of words and can appear at most once in a citation.
- State transitions must occur on punctuation marks.
- The citation can only start with AUTHOR or EDITOR.
- The words pp., pages correspond to PAGE.
- Four digits starting with 20xx and 19xx are DATE.
- Quotations can appear only in TITLE
- …….
- Easy to express pieces of “knowledge”
- Non Propositional; May use Quantifiers
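Several of the citation constraints listed above are easy to state as code. The sketch below checks a tagged citation against four of them and runs the check on the HMM prediction from the information extraction slide. The function names and token format are hypothetical, "transitions must occur on punctuation" is read as "a new field may only begin right after a punctuation token" (an assumption), and the second labeling is one plausible constraint-satisfying segmentation rather than a gold annotation from the source.

```python
import string
from typing import List, Optional, Set, Tuple

Token = Tuple[str, str]  # (word, field label)
PUNCT = set(string.punctuation)
START_FIELDS = {"AUTHOR", "EDITOR"}


def looks_like_year(word: str) -> bool:
    """Four digits starting with 19 or 20."""
    return len(word) == 4 and word.isdigit() and word[:2] in {"19", "20"}


def check_citation(tagged: List[Token]) -> List[str]:
    """Return a message for every constraint the labeling violates."""
    found: List[str] = []
    if tagged and tagged[0][1] not in START_FIELDS:
        found.append("citation must start with AUTHOR or EDITOR")
    seen: Set[str] = set()
    prev_word: Optional[str] = None
    prev_label: Optional[str] = None
    for word, label in tagged:
        if label != prev_label:  # a new field block begins here
            if label in seen:
                found.append(f"{label} appears more than once")
            seen.add(label)
            if prev_word is not None and prev_word not in PUNCT:
                found.append(f"transition into {label} is not on punctuation")
        if looks_like_year(word) and label != "DATE":
            found.append(f"{word} looks like a year but is tagged {label}")
        prev_word, prev_label = word, label
    return found


def tag(label: str, words: str) -> List[Token]:
    """Label every whitespace-separated token with the same field."""
    return [(w, label) for w in words.split()]


hmm_output = (
    tag("AUTHOR", "Lars Ole Andersen . Program analysis and")
    + tag("TITLE", "specialization for the")
    + tag("EDITOR", "C")
    + tag("BOOKTITLE", "Programming language")
    + tag("TECH-REPORT", ". PhD thesis .")
    + tag("INSTITUTION", "DIKU , University of Copenhagen , May")
    + tag("DATE", "1994 .")
)

# Hypothetical alternative labeling that satisfies the checked constraints.
plausible = (
    tag("AUTHOR", "Lars Ole Andersen .")
    + tag("TITLE", "Program analysis and specialization for the C Programming language .")
    + tag("TECH-REPORT", "PhD thesis .")
    + tag("INSTITUTION", "DIKU , University of Copenhagen ,")
    + tag("DATE", "May 1994 .")
)

for message in check_citation(hmm_output):
    print(message)
```

In a CCM these checks would enter the objective as penalty terms during inference rather than being applied after the fact; the checker only illustrates how little machinery each piece of "knowledge" needs.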
