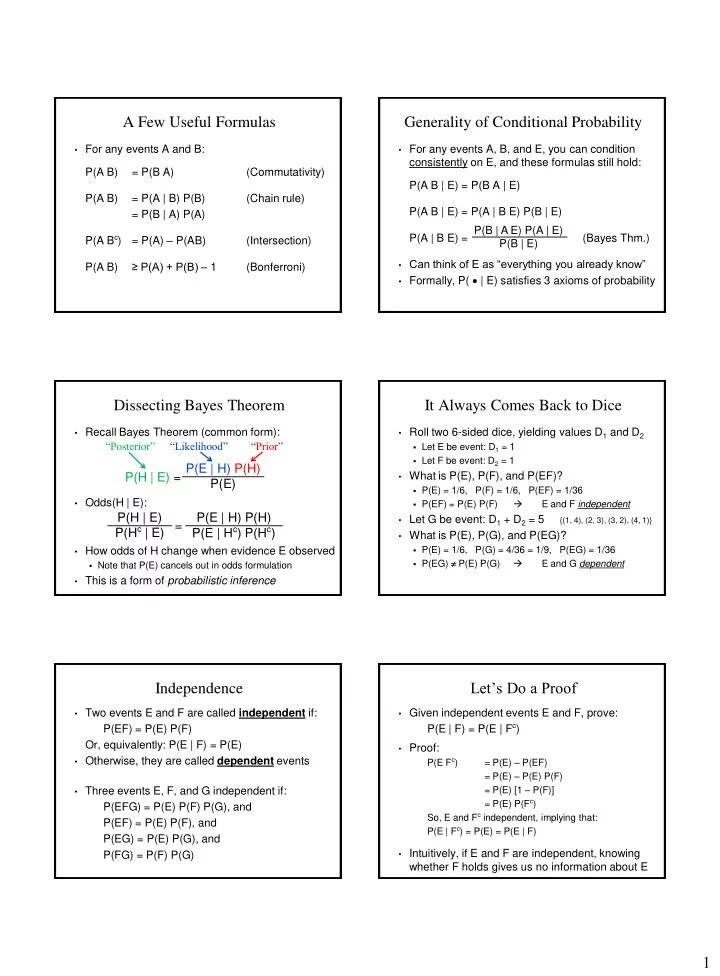

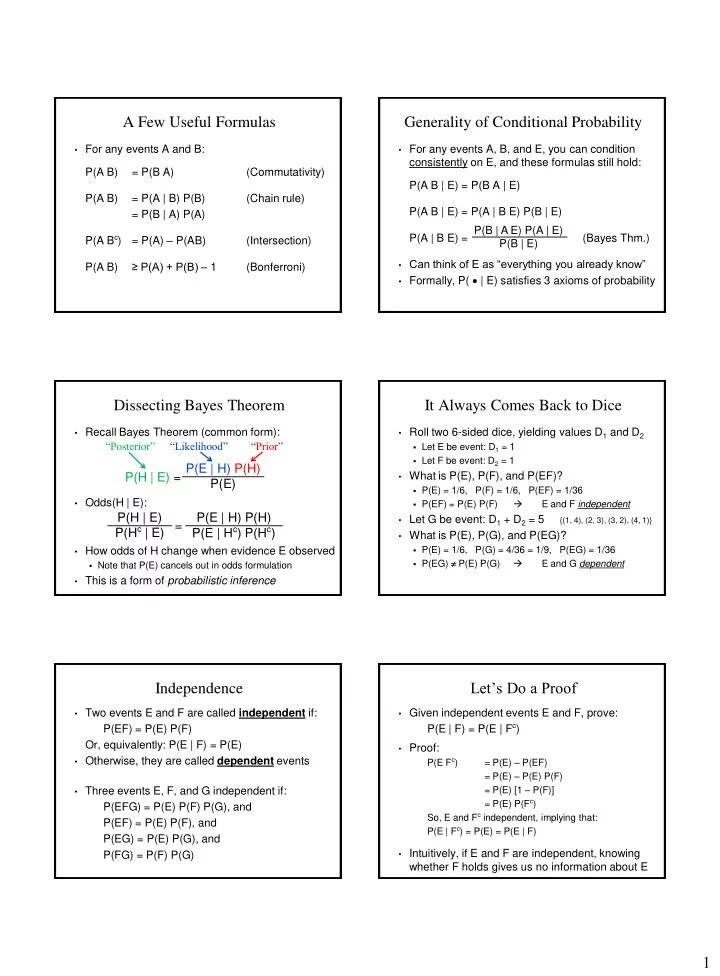

A Few Useful Formulas

• For any events A and B:
  $P(A \cap B) = P(B \cap A)$ (Commutativity)
  $P(A \cap B) = P(A \mid B)\,P(B) = P(B \mid A)\,P(A)$ (Chain rule)
  $P(A \cap B^c) = P(A) - P(AB)$ (Intersection)
  $P(A \cap B) \ge P(A) + P(B) - 1$ (Bonferroni)

Generality of Conditional Probability

• For any events A, B, and E, you can condition consistently on E, and these formulas still hold:
  $P(A \cap B \mid E) = P(B \cap A \mid E)$
  $P(A \cap B \mid E) = P(A \mid BE)\,P(B \mid E)$
  $P(A \mid BE) = \dfrac{P(B \mid AE)\,P(A \mid E)}{P(B \mid E)}$ (Bayes Thm.)
• Can think of E as “everything you already know”
• Formally, $P(\,\cdot \mid E)$ satisfies the 3 axioms of probability

Dissecting Bayes Theorem

• Recall Bayes Theorem (common form):

$$P(H \mid E) = \frac{P(E \mid H)\,P(H)}{P(E)}$$

  $P(H \mid E)$ is the “Posterior”, $P(E \mid H)$ is the “Likelihood”, and $P(H)$ is the “Prior”
• Odds(H | E):

$$\frac{P(H \mid E)}{P(H^c \mid E)} = \frac{P(E \mid H)\,P(H)}{P(E \mid H^c)\,P(H^c)}$$

• How odds of H change when evidence E observed
  Note that $P(E)$ cancels out in odds formulation
• This is a form of probabilistic inference

It Always Comes Back to Dice

• Roll two 6-sided dice, yielding values $D_1$ and $D_2$
  Let E be event: $D_1 = 1$
  Let F be event: $D_2 = 1$
• What is P(E), P(F), and P(EF)?
  $P(E) = 1/6$, $P(F) = 1/6$, $P(EF) = 1/36$
  $P(EF) = P(E)\,P(F)$, so E and F independent
• Let G be event: $D_1 + D_2 = 5$, i.e. {(1, 4), (2, 3), (3, 2), (4, 1)}
• What is P(E), P(G), and P(EG)?
  $P(E) = 1/6$, $P(G) = 4/36 = 1/9$, $P(EG) = 1/36$
  $P(EG) \ne P(E)\,P(G)$, so E and G dependent

Let’s Do a Proof

• Given independent events E and F, prove: $P(E \mid F) = P(E \mid F^c)$
• Proof:

$$P(E F^c) = P(E) - P(EF) = P(E) - P(E)\,P(F) = P(E)\,[1 - P(F)] = P(E)\,P(F^c)$$

  So, E and $F^c$ independent, implying that: $P(E \mid F^c) = P(E) = P(E \mid F)$
• Intuitively, if E and F are independent, knowing whether F holds gives us no information about E

Independence

• Two events E and F are called independent if:
  $P(EF) = P(E)\,P(F)$
  Or, equivalently: $P(E \mid F) = P(E)$
• Otherwise, they are called dependent events
• Three events E, F, and G independent if:
  $P(EFG) = P(E)\,P(F)\,P(G)$, and
  $P(EF) = P(E)\,P(F)$, and
  $P(EG) = P(E)\,P(G)$, and
  $P(FG) = P(F)\,P(G)$
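The dice results and the proof above can be checked by brute force, since two dice have only 36 equally likely outcomes. The following is a minimal sketch, not part of the original slides; the helper names `prob` and `independent` are made up for illustration, and events are represented as predicates on an outcome `(d1, d2)`.

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes (d1, d2) of rolling two 6-sided dice.
OUTCOMES = list(product(range(1, 7), repeat=2))

def prob(event):
    """Exact probability of an event given as a predicate on (d1, d2)."""
    favorable = sum(1 for outcome in OUTCOMES if event(outcome))
    return Fraction(favorable, len(OUTCOMES))

def independent(a, b):
    """True when P(AB) = P(A) P(B) for the predicates a and b."""
    both = lambda o: a(o) and b(o)
    return prob(both) == prob(a) * prob(b)

E = lambda o: o[0] == 1          # D1 = 1
F = lambda o: o[1] == 1          # D2 = 1
G = lambda o: o[0] + o[1] == 5   # D1 + D2 = 5
not_F = lambda o: not F(o)       # complement of F

print(prob(E), prob(F), prob(G))   # 1/6 1/6 1/9
print(independent(E, F))           # True
print(independent(E, G))           # False
print(independent(E, not_F))       # True, as the proof predicts
```

Exact fractions are used instead of floats so that the equality test in `independent` is not affected by rounding.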

Generalized Independence

• General definition of Independence:
  Events $E_1, E_2, \dots, E_n$ are independent if for every subset $E_{1'}, E_{2'}, \dots, E_{r'}$ (where $r \le n$) it holds that:

$$P(E_{1'} E_{2'} E_{3'} \cdots E_{r'}) = P(E_{1'})\,P(E_{2'})\,P(E_{3'}) \cdots P(E_{r'})$$

• Example: outcomes of n separate flips of a coin are all independent of one another
  Each flip in this case is called a “trial” of the experiment

Two Dice

• Roll two 6-sided dice, yielding values $D_1$ and $D_2$
  Let E be event: $D_1 = 1$
  Let F be event: $D_2 = 6$
  Are E and F independent? Yes!
• Let G be event: $D_1 + D_2 = 7$
  Are E and G independent? Yes!
  $P(E) = 1/6$, $P(G) = 1/6$, $P(EG) = 1/36$ [roll (1, 6)]
  Are F and G independent? Yes!
  $P(F) = 1/6$, $P(G) = 1/6$, $P(FG) = 1/36$ [roll (1, 6)]
  Are E, F and G independent? No!
  $P(EFG) = 1/36 \ne 1/216 = (1/6)(1/6)(1/6)$

Generating Random Bits

• A computer produces a series of random bits, with probability p of producing a 1.
  Each bit generated is an independent trial
  E = first n bits are 1’s, followed by a 0
  What is P(E)?
• Solution
  $P(\text{first } n \text{ 1's}) = P(\text{1st bit} = 1)\,P(\text{2nd bit} = 1) \cdots P(n\text{th bit} = 1) = p^n$
  $P(\text{bit } n+1 = 0) = 1 - p$
  $P(E) = P(\text{first } n \text{ 1's})\,P(\text{bit } n+1 = 0) = p^n (1 - p)$

Coin Flips

• Say a coin comes up heads with probability p
  Each coin flip is an independent trial
• $P(n \text{ heads on } n \text{ coin flips}) = p^n$
• $P(n \text{ tails on } n \text{ coin flips}) = (1 - p)^n$
• $P(\text{first } k \text{ heads, then } n - k \text{ tails}) = p^k (1 - p)^{n-k}$
• $P(\text{exactly } k \text{ heads on } n \text{ coin flips}) = \binom{n}{k} p^k (1 - p)^{n-k}$

Hash Tables

• m strings are hashed (equally randomly) into a hash table with n buckets
  Each string hashed is an independent trial
  E = at least one string hashed to first bucket
  What is P(E)?
• Solution
  $F_i$ = string i not hashed into first bucket (where $1 \le i \le m$)
  $P(F_i) = 1 - 1/n = (n - 1)/n$ (for all $1 \le i \le m$)
  Event $(F_1 F_2 \cdots F_m)$ = no strings hashed to first bucket

$$P(E) = 1 - P(F_1 F_2 \cdots F_m) = 1 - P(F_1)\,P(F_2) \cdots P(F_m) = 1 - \left(\frac{n - 1}{n}\right)^m$$

  Similar to ≥ 1 of m people having same birthday as you

Yet More Hash Table Fun

• m strings are hashed (unequally) into a hash table with n buckets
  Each string hashed is an independent trial, with probability $p_i$ of getting hashed to bucket i
  E = at least 1 of buckets 1 to k has ≥ 1 string hashed to it
• Solution
  $F_i$ = at least one string hashed into i-th bucket

$$P(E) = P(F_1 \cup F_2 \cup \dots \cup F_k) = 1 - P\big((F_1 \cup F_2 \cup \dots \cup F_k)^c\big) = 1 - P(F_1^c F_2^c \cdots F_k^c) \quad \text{(DeMorgan’s Law)}$$

  $P(F_1^c F_2^c \cdots F_k^c) = P(\text{no strings hashed to buckets 1 to } k) = (1 - p_1 - p_2 - \dots - p_k)^m$
  $P(E) = 1 - (1 - p_1 - p_2 - \dots - p_k)^m$
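The two hash table formulas above can be sanity-checked on small inputs by summing over every possible assignment of strings to buckets. This is a sketch under stated assumptions, not material from the slides: the function names are invented for illustration, the bucket probabilities in `probs` are made-up example values, and buckets are 0-indexed in the code (bucket 1 on the slide is index 0).

```python
from itertools import product
from math import isclose, prod

def p_first_bucket_hit(m, n):
    """P(at least one of m strings lands in the first bucket), uniform hashing."""
    return 1 - ((n - 1) / n) ** m

def p_any_of_first_k_hit(m, probs, k):
    """P(at least one of the first k buckets is hit); probs[i] is the
    probability that a string lands in bucket i (0-indexed)."""
    return 1 - (1 - sum(probs[:k])) ** m

def brute_force(m, probs, event):
    """Add up the probability of every assignment of m strings to buckets
    for which event(assignment) is true. Feasible only for small m."""
    total = 0.0
    for assignment in product(range(len(probs)), repeat=m):
        if event(assignment):
            total += prod(probs[b] for b in assignment)
    return total

probs = [0.5, 0.3, 0.2]   # hypothetical bucket probabilities
m, k = 4, 2

exact = brute_force(m, probs, lambda a: any(b < k for b in a))
print(isclose(exact, p_any_of_first_k_hit(m, probs, k)))   # True

uniform = [0.25] * 4
exact_uniform = brute_force(3, uniform, lambda a: 0 in a)
print(isclose(exact_uniform, p_first_bucket_hit(3, 4)))    # True
```

The brute-force sum grows as n to the power m, so it is only a check for tiny cases; the closed forms are what you would use in practice.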

No, Really, it’s More Hash Table Fun

• m strings are hashed (unequally) into a hash table with n buckets
  Each string hashed is an independent trial, with probability $p_i$ of getting hashed to bucket i
  E = each of buckets 1 to k has ≥ 1 string hashed to it
• Solution
  $F_i$ = at least one string hashed into i-th bucket

$$P(E) = P(F_1 F_2 \cdots F_k) = 1 - P\big((F_1 F_2 \cdots F_k)^c\big) = 1 - P(F_1^c \cup F_2^c \cup \dots \cup F_k^c) \quad \text{(DeMorgan’s Law)}$$

$$P(E) = 1 - P\Big(\bigcup_{i=1}^{k} F_i^c\Big) = 1 - \sum_{r=1}^{k} (-1)^{r+1} \sum_{i_1 < \dots < i_r} P(F_{i_1}^c F_{i_2}^c \cdots F_{i_r}^c)$$

  where $P(F_{i_1}^c F_{i_2}^c \cdots F_{i_r}^c) = (1 - p_{i_1} - p_{i_2} - \dots - p_{i_r})^m$

Sending Messages Through a Network

• Consider the following parallel network:
  [Diagram: n routers with functioning probabilities $p_1, p_2, \dots, p_n$ connected in parallel between A and B]
  n independent routers, each with probability $p_i$ of functioning (where $1 \le i \le n$)
  E = functional path from A to B exists. What is P(E)?
• Solution:

$$P(E) = 1 - P(\text{all routers fail}) = 1 - (1 - p_1)(1 - p_2) \cdots (1 - p_n) = 1 - \prod_{i=1}^{n} (1 - p_i)$$

Reminder of Geometric Series

• Geometric series: $x^0 + x^1 + x^2 + x^3 + \dots + x^n = \sum_{i=0}^{n} x^i$
• From your “Calculation Reference” handout:

$$\sum_{i=0}^{n} x^i = \frac{1 - x^{n+1}}{1 - x}$$

• As $n \to \infty$, and $|x| < 1$, then

$$\sum_{i=0}^{n} x^i = \frac{1 - x^{n+1}}{1 - x} \to \frac{1}{1 - x}$$

Simplified Craps

• Two 6-sided dice repeatedly rolled (roll = ind. trial)
  E = 5 is rolled before a 7 is rolled
  What is P(E)?
• Solution
  $F_n$ = no 5 or 7 rolled in first n – 1 trials, 5 rolled on n-th trial

$$P(E) = P\Big(\bigcup_{n=1}^{\infty} F_n\Big) = \sum_{n=1}^{\infty} P(F_n)$$

  $P(\text{5 on any trial}) = 4/36$, $P(\text{7 on any trial}) = 6/36$
  $P(F_n) = (1 - 10/36)^{n-1}(4/36) = (26/36)^{n-1}(4/36)$

$$P(E) = \frac{4}{36} \sum_{n=1}^{\infty} \left(\frac{26}{36}\right)^{n-1} = \frac{4}{36} \sum_{n=0}^{\infty} \left(\frac{26}{36}\right)^{n} = \frac{4}{36} \cdot \frac{1}{1 - \frac{26}{36}} = \frac{2}{5}$$

DNA Paternity Testing

• Child is born with (A, a) gene pair (event $B_{A,a}$)
  Mother has (A, A) gene pair
  Two possible fathers: $M_1$: (a, a), $M_2$: (a, A)
  $P(M_1) = p$, $P(M_2) = 1 - p$
  What is $P(M_1 \mid B_{A,a})$?
• Solution

$$P(M_1 \mid B_{A,a}) = \frac{P(M_1 B_{A,a})}{P(B_{A,a})} = \frac{P(B_{A,a} \mid M_1)\,P(M_1)}{P(B_{A,a} \mid M_1)\,P(M_1) + P(B_{A,a} \mid M_2)\,P(M_2)} = \frac{1 \cdot p}{1 \cdot p + \frac{1}{2}(1 - p)} = \frac{2p}{1 + p} > p$$

  $M_1$ more likely to be father than he was before, since $P(M_1 \mid B_{A,a}) > P(M_1)$
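The craps series and the paternity posterior above are short enough to verify numerically. The sketch below is an illustration only; the function names are invented here, and the prior of 1/2 passed to `paternity_posterior` is an arbitrary example value, not something given on the slides.

```python
from fractions import Fraction

P_FIVE = Fraction(4, 36)    # P(sum = 5) on a single roll
P_SEVEN = Fraction(6, 36)   # P(sum = 7) on a single roll

def craps_partial_sum(terms):
    """Sum of P(F_n) for n = 1..terms, where F_n means no 5 or 7 in the
    first n - 1 rolls and a 5 on roll n."""
    neither = 1 - P_FIVE - P_SEVEN   # 26/36
    return sum(neither ** (n - 1) * P_FIVE for n in range(1, terms + 1))

def craps_closed_form():
    """Limit of the geometric series: (4/36) / (1 - 26/36)."""
    return P_FIVE / (P_FIVE + P_SEVEN)

def paternity_posterior(p):
    """P(M1 | B) with prior P(M1) = p, P(B | M1) = 1 and P(B | M2) = 1/2."""
    p = Fraction(p)
    return (1 * p) / (1 * p + Fraction(1, 2) * (1 - p))

print(craps_closed_form())                    # 2/5
print(float(craps_partial_sum(50)))           # approaches 0.4 from below
print(paternity_posterior(Fraction(1, 2)))    # 2/3
```

With a prior of 1/2 the posterior is 2/3, which matches $2p/(1+p)$ and is larger than the prior, as the slide states.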
