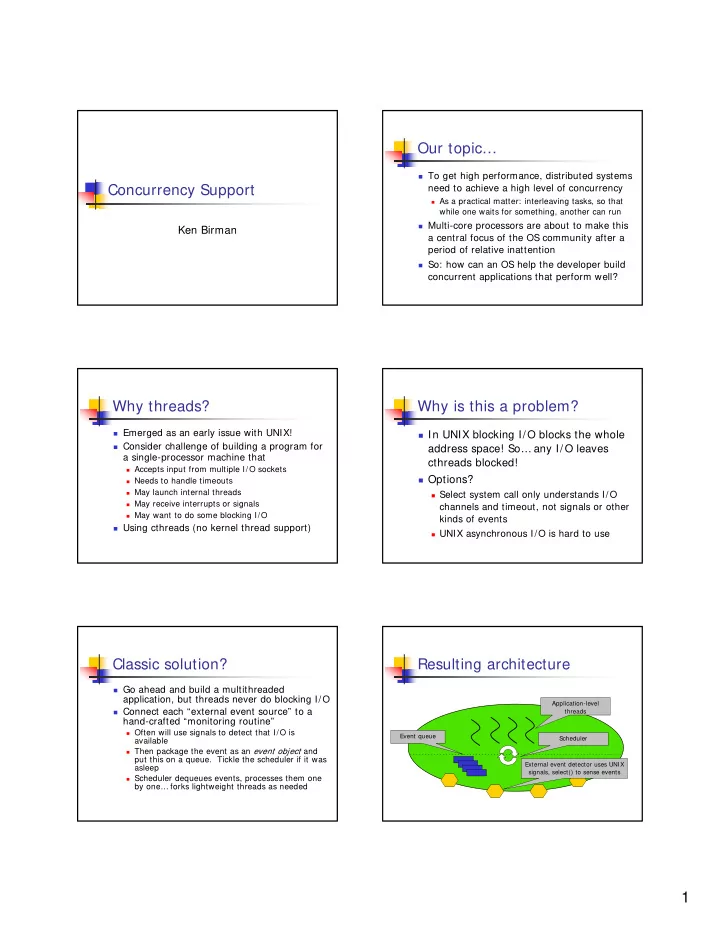

Concurrency Support
Ken Birman

Our topic…
- To get high performance, distributed systems need to achieve a high level of concurrency
- As a practical matter: interleaving tasks, so that while one waits for something, another can run
- Multi-core processors are about to make this a central focus of the OS community after a period of relative inattention
- So: how can an OS help the developer build concurrent applications that perform well?

Why threads?
- Emerged as an early issue with UNIX!
- Consider challenge of building a program for a single-processor machine that
  - Accepts input from multiple I/O sockets
  - Needs to handle timeouts
  - May launch internal threads
  - May receive interrupts or signals
  - May want to do some blocking I/O
  - Using cthreads (no kernel thread support)

Why is this a problem?
- In UNIX blocking I/O blocks the whole address space! So… any I/O leaves cthreads blocked!
- Options?
  - Select system call only understands I/O channels and timeout, not signals or other kinds of events
  - UNIX asynchronous I/O is hard to use

Classic solution?
- Go ahead and build a multithreaded application, but threads never do blocking I/O
- Connect each “external event source” to a hand-crafted “monitoring routine”
  - Often will use signals to detect that I/O is available
  - Then package the event as an event object and put this on a queue. Tickle the scheduler if it was asleep
- Scheduler dequeues events, processes them one by one… forks lightweight threads as needed

Resulting architecture
[Figure: application-level threads; event queue; scheduler; external event detector uses UNIX signals, select() to sense events]
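The classic solution can be sketched in Python, with select() standing in for the external event detector. The signal-driven part is left out. Event, detect_events, run_scheduler and demo are hypothetical names. This scheduler runs each handler inline rather than forking lightweight threads, and it returns when the queue is empty instead of blocking.

```python
import queue
import select
import socket
from dataclasses import dataclass


@dataclass
class Event:
    kind: str       # "readable" or "timeout"
    source: object  # the socket that became readable, or None for a timeout


def detect_events(sockets, events, timeout=0.1):
    """One pass of the external event detector: ask select() which sockets
    are readable and package each readiness notification as an Event."""
    readable, _, _ = select.select(sockets, [], [], timeout)
    if not readable:
        events.put(Event("timeout", None))
    for s in readable:
        events.put(Event("readable", s))


def run_scheduler(events, handlers):
    """Dequeue events one by one and run the matching handler inline.
    Handlers must never block; that is the whole point of the design."""
    results = []
    while True:
        try:
            ev = events.get_nowait()
        except queue.Empty:
            return results  # no work left; a real scheduler would sleep in select()
        results.append(handlers[ev.kind](ev))


def demo():
    a, b = socket.socketpair()
    events = queue.Queue()
    handlers = {
        # select() reported the socket readable, so this recv() will not block
        "readable": lambda ev: ev.source.recv(1024),
        "timeout": lambda ev: "timeout",
    }
    try:
        b.sendall(b"hello")
        detect_events([a], events)
        first = run_scheduler(events, handlers)
        detect_events([a], events, timeout=0.05)  # nothing pending any more
        second = run_scheduler(events, handlers)
        return first, second
    finally:
        a.close()
        b.close()
```

Every handler is only called after the detector has said its source is ready. This is what lets the threads in this design avoid blocking I/O.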

Problems with this?
- Only works if all the events show up as signals
- Depends on UNIX not “losing” signals
- Often must process a signal and also do a select call to receive an event
- Scheduler needs a way to block when no work to do (probably select()) and must be sure that signal handlers can wake it up

Classic issues
- Threads that get forked off, then block for some reason
  - Address space soon bloats, causing application to crash
- Program is incredibly hard to debug, some problems seen only now and then
- Outright mistakes because C/C++ don’t support “monitor style” synchronization
  - (Easier in Java, C#)

Bottom line?
- Concurrency bugs are incredibly common and very hard to track down and fix
- We want our cake but not the calories
- Programmers find concurrency unnatural
  - Try to package it better?
  - Identify software engineering paradigms that can ease the task of gaining high performance

Hauser et al. case study
- Their focus is on how the world’s best hackers actually use threads
- They learned the hard way
- Maybe we can learn from them

Paradigms of thread usage
- Defer work
- General pumps
- Slack processes
- Sleepers
- One-shots
- Deadlock avoidance
- Rejuvenation
- Serializers
- Encapsulated fork
- Exploiting parallelism

Defer work
- A very common scenario for them
- Client sees snappy response… something client requested is fired off to happen in the background
  - Examples: forking off a document print operation, or code that updates a window
- Issue? What if the thread hangs for some reason? Client may see confusing behavior on a subsequent request!
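Defer work can be sketched with one background thread per request. PrintService, print_document and wait are hypothetical names. The "busy" reply is only one possible policy for the hang issue: it makes a still-running (or hung) earlier job visible to the client instead of leaving the client confused. The check-then-start in print_document is not itself synchronized, so the sketch assumes a single client thread.

```python
import threading
import time


class PrintService:
    """Hypothetical service illustrating the defer-work paradigm."""

    def __init__(self):
        self._job = None   # background thread for the most recent deferred job
        self.printed = []

    def print_document(self, doc, delay=0.05):
        # The hazard from the slide: an earlier background job is still running
        # (or hung). This sketch reports it rather than stacking work behind it.
        if self._job is not None and self._job.is_alive():
            return "busy"

        def work():
            time.sleep(delay)  # stands in for the slow print operation
            self.printed.append(doc)

        # Defer work: fire the job off in the background and return at once,
        # so the client sees a snappy response.
        self._job = threading.Thread(target=work, daemon=True)
        self._job.start()
        return "accepted"

    def wait(self, timeout=None):
        """Join the last background job. With a timeout, a hung job cannot
        hang the caller as well."""
        if self._job is not None:
            self._job.join(timeout)
```

For example, a second request that arrives while a slow first job is still sleeping gets "busy". Once wait() returns, new requests are accepted again.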

Pumps
- Components of producer-consumer pipelines that take input in, operate on it, then output it “downstream”
- Value is that they can absorb transient rate mismatches
- Slack process: a pump used to explicitly add delay, employed when trying to group small operations into batches

Sleepers, one-shots
- These are threads that wait for some event, then trigger, then wait again
- Examples:
  - Call this procedure every 20ms, or after some timeout
  - Can think of device interrupt handler as a kind of sleeper thread

Deadlock avoiders
- Thread created to perform some action that might have blocked, launched by a caller who holds a lock and doesn’t want to wait
- A fairly dangerous paradigm…
  - Thread may be created with X true, but by the time it executes, X may be false!
  - Surprising scheduling delays a big risk here

Task rejuvenation
- A nasty style of thread
  - Application had multiple major subactivities, such as input handler, renderer. Something awful happened.
  - So create a new instance and pray
- Seems to invite “heisenbugs”

Aside: Bohr-bugs and Heisenbugs
- Terms due to Bruce Lindsey; they refer to models of the atom
- A Bohr nucleus was a nice solid little thing. Same with a Bohr-bug. You can hit it reproducibly and hence can fix it
- A Heisenbug is hard to pin down: if you localize an instance, the bug shifts elsewhere. Results from non-deterministic executions, old corruption in data structures, etc.
- Some thread paradigms invite trouble!

Others
- Serializers: a queue, and a thread that removes work from it and processes that work item by item
  - Used heavily in SEDA
- Concurrency exploiters: for multiple CPUs
- Encapsulated forks: threads packaged with other paradigms
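A serializer, as described under "Others", is small enough to sketch directly in Python. The Serializer class and its sentinel-based shutdown are illustrative choices and are not taken from Hauser et al. or from SEDA. Exactly one worker thread calls process(). As a result, work items are handled one at a time in arrival order, and process() itself needs no locking.

```python
import queue
import threading


class Serializer:
    """A queue plus one worker thread that processes items one by one."""

    _STOP = object()  # sentinel that tells the worker to exit

    def __init__(self, process):
        self._q = queue.Queue()
        self._process = process
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def submit(self, item):
        # Callers only enqueue; they never wait for the processing itself.
        self._q.put(item)

    def close(self):
        # Items submitted before close() are still processed before the worker exits.
        self._q.put(self._STOP)
        self._worker.join()

    def _run(self):
        while True:
            item = self._q.get()
            if item is self._STOP:
                return
            self._process(item)  # only this thread ever calls process()
```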

Threads considered marvelous
- Threads are wonderful when some action may block for a while
  - Like a slow I/O operation, RPC, etc.
- Your code remains clean and “linear”
- Moreover, aggregated performance is often far higher than without threading

Threads considered harmful
- They are fundamentally non-deterministic, hence invite Heisenbugs
- Reentrant code is really hard to write
- Surprising scheduling can be a huge headache
- When something “major” changes the state of a system, cleaning up threads running based on the old state is a pain

Classic threading paradigm and a performance issue
[Figure]

SEDA paradigm
- Tries to replace most threads with:
  - Small pools of threads
  - Event queues
- Idea is to build a pipelined architecture that doesn’t fork threads dynamically

Event-oriented paradigm
[Figure]

Staged, Event Driven Approach (SEDA)
- A SEDA stage
[Figure]
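A single SEDA-style stage, made up of an event queue and a small, fixed thread pool, can be sketched as follows. Stage and demo are hypothetical names. Everything beyond the queue, the pool and the handler is left out.

```python
import queue
import threading


class Stage:
    """A minimal SEDA-style stage: an incoming event queue drained by a small,
    fixed pool of threads. Results are enqueued on the next stage, if any."""

    def __init__(self, handler, next_stage=None, threads=2):
        self.queue = queue.Queue()
        self._handler = handler
        self._next = next_stage
        # The pool is created once; no thread is forked per event.
        for _ in range(threads):
            threading.Thread(target=self._run, daemon=True).start()

    def enqueue(self, event):
        self.queue.put(event)

    def _run(self):
        while True:
            event = self.queue.get()
            try:
                result = self._handler(event)
                if self._next is not None:
                    self._next.enqueue(result)  # pass the output "downstream"
            finally:
                self.queue.task_done()


def demo(words):
    results = []
    lock = threading.Lock()

    def collect(word):
        with lock:
            results.append(word)

    sink = Stage(collect)
    upper = Stage(str.upper, next_stage=sink)
    for w in words:
        upper.enqueue(w)
    upper.queue.join()  # every word has been uppercased and handed to the sink
    sink.queue.join()   # every word has been collected
    return sorted(results)  # two threads per stage, so arrival order may vary
```

Each stage owns its threads, so the thread count stays fixed however many events arrive. The cost moves into the queues instead.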

SEDA performance
[Figure]

Criticisms of SEDA
- It can still bloat, by having event queues get very large
- Demands a delicate match of processing power (basically, stages to the right need to be faster than stages to the left)
- Some claim that Haboob didn’t work as asserted in this paper…

Background
- SEDA was developed by Matt Welsh
  - Now at Harvard
- Matt ran afoul of some of the über-hackers of the systems world
- Huge fight ensued. Matt ultimately shifted to work on sensor networks (safer crowd)
- Comments that follow came from one of these angry über-hackers

“Why SEDA sucks” (from an anonymous über-hacker)
- First, if you read between the lines, the paper’s own numbers show how much SEDA sucks.
- For example, it shows comparable throughput to Flash where Flash has half the number of file descriptors, which means he's at least twice as slow as Flash.
  - (Since usually performance of these systems scales relatively linearly with file descriptors.)

“Why SEDA sucks” (from an anonymous über-hacker)
- The paper shows graphs like CDFs of response time for clients serviced, when SEDA was dumping most requests (and hence they weren't showing up in the graph), while the other servers were serving way more clients.
- So yeah, SEDA's latency is lower than other servers if SEDA serves fewer clients and thus has lower throughput. And SEDA's throughput is comparable to other servers if you cut the other servers' concurrency.

“Why SEDA sucks” (from an anonymous über-hacker)
- But on top of that the comparison is still dishonest, because the other servers are production servers with logging and everything.
- SEDA lacks a bunch of necessary facilities that would presumably decrease its performance if implemented.
- The authors annoyed some members of the systems community by giving talks saying, "You might ask if the use of Java would put SEDA at a performance disadvantage. It doesn't because fortunately I'm a very, very good programmer."
  - Not a good move.
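One common partial answer to the queue-bloat criticism is to give each stage's queue a fixed capacity and reject events once it is full. The minimal Python sketch below uses a hypothetical name, BoundedStageQueue, and a capacity policy that is an assumption, not part of SEDA as described here. Dropping does not fix a mismatch in processing power between stages. It only keeps that mismatch from turning into unbounded queue growth.

```python
import queue
import threading


class BoundedStageQueue:
    """A stage's event queue with a fixed capacity. When the downstream stage
    falls behind, new events are rejected at once instead of piling up."""

    def __init__(self, capacity):
        self._q = queue.Queue(maxsize=capacity)
        self._lock = threading.Lock()  # protects the drop counter
        self.dropped = 0

    def offer(self, event):
        """Try to enqueue without blocking the upstream stage; False means dropped."""
        try:
            self._q.put_nowait(event)
            return True
        except queue.Full:
            with self._lock:
                self.dropped += 1
            return False

    def take(self):
        """Remove the oldest event; raises queue.Empty if there is none."""
        return self._q.get_nowait()

    def __len__(self):
        return self._q.qsize()
```

With a capacity of 3 and no consumer, the first three offers succeed and every later offer is dropped. The queue never holds more than three events.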

“Why SEDA sucks” (from an anonymous über-hacker)
- “Almost as embarrassing as the performance and the complete failure to solve the stated problem (namely cnn.com's overload on September 11, 2001, which he never demonstrated SEDA could fix) is the fact that the authors completely ignored the related work from Mogul and Ramakrishnan on eliminating receive livelock.”
- (That work appeared in journal form in 1997, and earlier in conference form.)
- They made a strong case for dropping events as early as possible, eliminating most queues, and processing events all the way through whenever you can.
- Viewed in this light, SEDA should not have been published (years later) without even attempting to rebut their argument.

Threads: What next?
- Language community is exploring threads with integrated transactional rollback…
  - Idea: thread is created for parallelism
  - It tracks data that was changed (undo list)
  - At commit point, check for possible concurrent execution by some other thread accessing same data. If so, roll back, retry
- Concerns? Many. Recalls Argus system (MIT) which used threads, transactions on abstract data types… Life gets messy!

Bottom line?
- Threads?
- Events?
- Transactions? (With top-level actions, orphan termination, nested commit???)
- A mixture?
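The "threads with integrated transactional rollback" idea from "Threads: What next?" can be sketched as optimistic concurrency control with a commit-time conflict check and retry. This sketch deliberately deviates from the slide in one respect: the slide describes an undo list, but here writes are buffered until commit, so rolling back just means discarding the buffer and re-running the body. VersionedStore, Transaction, atomically and parallel_increments are hypothetical names. Nesting, top-level actions and orphan termination are not handled.

```python
import threading


class VersionedStore:
    """Shared data: each key maps to (value, version). The lock is held only
    briefly, for single reads and for the validate-and-install step."""

    def __init__(self, **initial):
        self._data = {k: (v, 0) for k, v in initial.items()}
        self._lock = threading.Lock()

    def read(self, key):
        with self._lock:
            return self._data[key]  # (value, version)

    def try_commit(self, read_versions, writes):
        with self._lock:
            # Conflict check: did another thread change anything we read?
            for key, seen in read_versions.items():
                if self._data[key][1] != seen:
                    return False
            # No conflict: install the buffered writes (keys must already exist).
            for key, value in writes.items():
                self._data[key] = (value, self._data[key][1] + 1)
            return True


class Transaction:
    def __init__(self, store):
        self._store = store
        self._read_versions = {}  # key -> version seen at first read
        self._writes = {}         # key -> new value, buffered until commit

    def read(self, key):
        if key in self._writes:
            return self._writes[key]
        value, version = self._store.read(key)
        self._read_versions.setdefault(key, version)
        return value

    def write(self, key, value):
        self._writes[key] = value

    def commit(self):
        return self._store.try_commit(self._read_versions, self._writes)


def atomically(store, body, max_attempts=1000):
    """Run body(tx) until it commits without conflict. A failed commit is the
    "roll back, retry" step: the buffered writes are simply thrown away."""
    for _ in range(max_attempts):
        tx = Transaction(store)
        result = body(tx)
        if tx.commit():
            return result
    raise RuntimeError("transaction kept conflicting; giving up")


def parallel_increments(threads=4, per_thread=250):
    store = VersionedStore(counter=0)

    def increment(tx):
        tx.write("counter", tx.read("counter") + 1)

    def worker():
        for _ in range(per_thread):
            atomically(store, increment)

    pool = [threading.Thread(target=worker) for _ in range(threads)]
    for t in pool:
        t.start()
    for t in pool:
        t.join()
    return store.read("counter")[0]
```

Suppose two transactions read the same key and one commits first. The other then fails validation and must retry. This is why concurrent increments through atomically never lose an update.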
