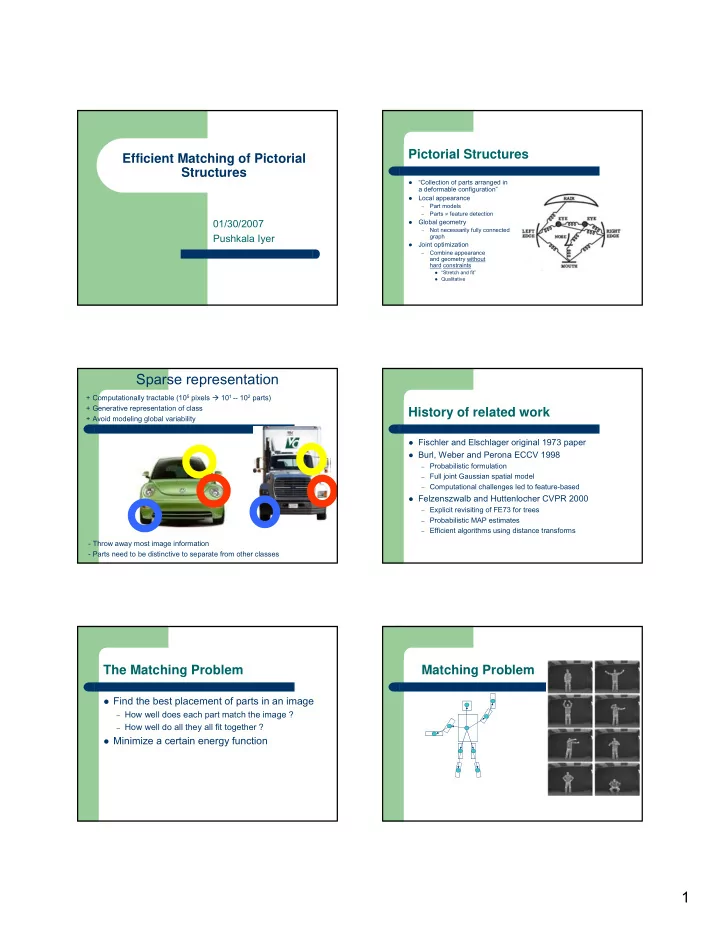

Efficient Matching of Pictorial Structures
Pushkala Iyer
01/30/2007

Pictorial Structures
- "Collection of parts arranged in a deformable configuration"
- Local appearance
  - Part models
  - Parts ≠ feature detection
- Global geometry
  - Not necessarily a fully connected graph
- Joint optimization
  - Combine appearance and geometry without hard constraints
- "Stretch and fit"
- Qualitative

Sparse representation
- (+) Computationally tractable ($10^5$ pixels → $10^1$ to $10^2$ parts)
- (+) Generative representation of class
- (+) Avoid modeling global variability
- (+) Success in specific object recognition
- (-) Throw away most image information
- (-) Parts need to be distinctive to separate from other classes

History of related work
- Fischler and Elschlager, original 1973 paper
- Burl, Weber and Perona, ECCV 1998
  - Probabilistic formulation
  - Full joint Gaussian spatial model
  - Computational challenges led to feature-based methods
- Felzenszwalb and Huttenlocher, CVPR 2000
  - Explicit revisiting of FE73 for trees
  - Probabilistic MAP estimates
  - Efficient algorithms using distance transforms

The Matching Problem
- Find the best placement of parts in an image
  - How well does each part match the image?
  - How well do they all fit together?
- Minimize a certain energy function

The Solution Approach
- Pictorial Structure model [FE73]
- Restrictions on relationships
  - Tree structure
    - Natural skeletal structure of many animate objects
    - Dynamic programming
  - Pairwise relationships
    - Broad range of objects
    - Generalized Distance Transforms
- Globally best match of generic objects
- FH2000 vs other approaches
  - Perona et al: central coordinate system, limited to one articulation point.
  - No hard decisions.
  - Valid configurations are not treated as being equally good.

Recognition Framework Model
- Graph model $G = (V, E)$
  - Parts are the vertices $V = \{v_1, v_2, \ldots, v_n\}$
  - If $v_i$, $v_j$ are connected, then $(v_i, v_j) \in E$.
- An instance of a part in an image is specified by its location $l$.
  - Position, rotation, scale for 2D parts.
- Match cost function $m_i(I, l)$ measures how well the part matches the image $I$ when placed at location $l$.
- Deformation cost function $d_{ij}(l_i, l_j)$ for every edge $(v_i, v_j)$ measures how well the locations $l_i$ of $v_i$ and $l_j$ of $v_j$ agree with the object model.

Model Framework
- A configuration $L = (l_1, l_2, \ldots, l_n)$ specifies a location for each of the parts $v_i$ in $V$ w.r.t. the image.
- The best configuration is the one that minimizes the total cost: match cost of the individual parts + pairwise cost of the connected pairs of parts.
- $L^* = \arg\min_L \left( \sum_{(v_i, v_j) \in E} d_{ij}(l_i, l_j) + \sum_{v_i \in V} m_i(I, l_i) \right)$

Problem Reduction
- Minimization of $L^* = \arg\min_L \left( \sum_{(v_i, v_j) \in E} d_{ij}(l_i, l_j) + \sum_{v_i \in V} m_i(I, l_i) \right)$ is $O(m^n)$
  - Where $m$ is the number of discrete values for each $l_i$ and $n$ is the number of vertices in the graph.
  - Markov Random Fields, Dynamic Contour Models (snakes).
- Restricted graphs reduce time complexity
  - For first order snakes (chain), reduces to $O(m^2 n)$ from $O(m^n)$
  - Dynamic programming is a method of solving problems exhibiting the properties of overlapping subproblems and optimal substructure in a way better than naïve methods. (Wikipedia)
  - Memoization and bottom-up approach.
  - Tree structured graphs enable a similar reduction to be achieved.

Problem Reduction
- The $O(m^2 n)$ algorithm is not practical: there is a large number of possible locations for each part.
- A restriction on the pairwise cost function $d_{ij}$ yields a minimization algorithm that is $O(mn)$.
- $d_{ij}(l_i, l_j) = \lVert T_{ij}(l_i) - T_{ji}(l_j) \rVert$
  - $d_{ij}$ measures the degree of deformation.
  - It is restricted to be a norm.
  - A norm is a function which assigns a positive length or size to all vectors in a vector space, other than the zero vector. (Wikipedia)
  - $T_{ij}$ and $T_{ji}$ should be invertible; together they capture the ideal relative configurations of parts $v_i$ and $v_j$.
  - $T_{ij}(l_i) = T_{ji}(l_j)$ implies that $l_i$ and $l_j$ are ideal locations for $v_i$ and $v_j$.
  - $T_{ji}(l_j)$ should be discretized in a grid.

Efficient Minimization
- Dynamic programming to find the configuration $L^* = (l_1^*, \ldots, l_n^*)$ that minimizes the cost.
- The computation involves $n-1$ functions, each of which specifies the best location of one part w.r.t. the possible locations of another part.
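To make the energy from the Model Framework slide concrete, here is a minimal Python sketch that evaluates the total cost of a configuration and finds the arg min by exhaustive search over all $m^n$ configurations. The toy costs, the 1D location grid, the `offsets` table, and the function names are illustrative assumptions, not part of the slides; the deformation cost uses the restricted form $d_{ij} = |T_{ij}(l_i) - T_{ji}(l_j)|$ with $T_{ij}(l) = l + \text{offset}$ and $T_{ji}(l) = l$.

```python
from itertools import product

def total_cost(L, edges, match_cost, deform_cost):
    """Energy of configuration L: match costs plus pairwise deformation costs."""
    unary = sum(match_cost[i][l] for i, l in enumerate(L))
    pairwise = sum(deform_cost(i, j, L[i], L[j]) for i, j in edges)
    return unary + pairwise

def brute_force_match(m, edges, match_cost, deform_cost):
    """Exhaustive arg min over all m**n configurations (toy sizes only)."""
    n = len(match_cost)
    return min(product(range(m), repeat=n),
               key=lambda L: total_cost(L, edges, match_cost, deform_cost))

# Hypothetical toy model: 3 parts in a chain, m = 5 locations on a 1D grid.
edges = [(0, 1), (1, 2)]
offsets = {(0, 1): 1, (1, 2): 2}      # assumed ideal displacement l_j - l_i
match_cost = [
    [0, 4, 4, 4, 4],                  # part 0 matches best at location 0
    [4, 4, 0, 4, 4],                  # part 1 matches best at location 2
    [4, 4, 4, 4, 0],                  # part 2 matches best at location 4
]

def deform_cost(i, j, li, lj):
    # d_ij = |T_ij(l_i) - T_ji(l_j)| with T_ij(l) = l + offset and T_ji(l) = l
    return abs((li + offsets[(i, j)]) - lj)

best = brute_force_match(5, edges, match_cost, deform_cost)
print(best, total_cost(best, edges, match_cost, deform_cost))
```

The search visits $5^3 = 125$ configurations here; the same loop over a realistic image grid is what the $O(m^n)$ bound rules out, which motivates the dynamic programming formulation.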

Efficient Minimization
- The best location of a leaf node $v_j$ (6) can be computed as a function of the location of just its parent $v_i$ (5).
- The only contribution of $l_j$ to the energy is $d_{ij}(l_i, l_j) + m_j(I, l_j)$: the contribution of the edge (5,6) and the position of 6.
- The cost of the best location of $v_j$ given location $l_i$ of $v_i$ is
  $B_j(l_i) = \min_{l_j} \left( d_{ij}(l_i, l_j) + m_j(I, l_j) \right)$
- Replacing min by arg min, we get the best location of $v_j$ as a function of the location $l_i$ of its parent $v_i$.

Efficient Minimization
- For non-leaf vertices $v_j \neq v_r$, if $B_c(l_j)$ is known for every child $v_c \in C_j$, then the cost of the best location of $v_j$ given its parent $v_i$ is
  $B_j(l_i) = \min_{l_j} \left( d_{ij}(l_i, l_j) + m_j(I, l_j) + \sum_{v_c \in C_j} B_c(l_j) \right)$
- Replacing min with arg min yields the best location of $v_j$ as a function of $l_i$.

Efficient Minimization
- For the root node $v_r$, if $B_c(l_r)$ is known for every child $v_c \in C_r$, then the best location of the root is
  $l_r^* = \arg\min_{l_r} \left( m_r(I, l_r) + \sum_{v_c \in C_r} B_c(l_r) \right)$

Algorithm
- The recursive equations specify an algorithm.
- For every leaf node, compute its best location as a function of the location of its parent.
- For every non-leaf node X, compute its best location as a function of the location of X's parent, also taking into consideration the cost of placing X's children (previous step).
- Repeat until the best location of the root is calculated.
- Now traverse the tree starting at the root to find the optimum configuration.
- $O(nM)$ overall, where $n$ is the number of nodes and $M$ is the time to compute $B_j(l_i)$ and $B'_j(l_i)$.

Distance Transforms
- A distance transform, also known as distance map or distance field, is a representation of a digital image. (Wikipedia)
- The map supplies each pixel of the image with the distance to the nearest obstacle pixel. The most common type of obstacle pixel is a boundary pixel in a binary image.
- [Figure: an example of a chessboard distance transform on a binary image.]

Generalized Distance Transforms
- $D_B(z) = \min_{w \in B} \lVert z - w \rVert$, where $B$ is a subset of the grid $G$.
- $D_B(z) = \min_{w \in G} \left( \lVert z - w \rVert + 1_B(w) \right)$, where $1_B(w)$ is an indicator function for membership in $B$ (0 if $w \in B$, $\infty$ otherwise).
- Algorithm (G. Borgefors) computes this in $O(mD)$ time for a $D$-dimensional grid.
  - Two pass, local neighborhoods of 7x7 pixels used.
- Meijster, Roerdink & Hesselink:
  - Generic distance transform algorithm in linear time.
  - 2 phases, first columnwise, second rowwise, each phase 2 scans.
  - Per-row computation is independent of per-column computation, so it can be parallelized.
- $D_f(z) = \min_{w \in G} \left( \lVert z - w \rVert + f(w) \right)$
- How that helps:
  - Given $d_{ij}(l_i, l_j) = \lVert T_{ij}(l_i) - T_{ji}(l_j) \rVert$,
  - $B_j(l_i) = D_f(T_{ij}(l_i))$, where $f(w) = m_j(I, T_{ji}^{-1}(w)) + \sum_{v_c \in C_j} B_c(T_{ji}^{-1}(w))$
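The recursion and the distance-transform shortcut above can be combined in a short Python sketch. It is a simplified illustration under stated assumptions, not the implementation from the paper: locations live on a 1D grid, the deformation cost is $k\,|(l_i + \text{offset}_j) - l_j|$, and the names `l1_distance_transform`, `match_tree`, `children`, and `offset` are hypothetical. Each $B_j$ is obtained from one two-pass transform in $O(m)$ instead of an $O(m^2)$ double loop.

```python
def l1_distance_transform(f, k=1.0):
    """Two-pass generalized distance transform on a 1D grid.

    Returns (D, arg) with D[z] = min_w (k * |z - w| + f[w]) and arg[z] a minimizer.
    """
    m = len(f)
    D, arg = list(f), list(range(m))
    for z in range(1, m):                  # forward pass: propagate from the left
        if D[z - 1] + k < D[z]:
            D[z], arg[z] = D[z - 1] + k, arg[z - 1]
    for z in range(m - 2, -1, -1):         # backward pass: propagate from the right
        if D[z + 1] + k < D[z]:
            D[z], arg[z] = D[z + 1] + k, arg[z + 1]
    return D, arg

def match_tree(children, match_cost, offset, k=1.0, root=0):
    """Minimize the tree energy; offset[j] is the assumed ideal l_j - l_parent."""
    m = len(match_cost[root])
    best_child_loc = {}                    # j -> best l_j for each parent location

    def solve(j):
        # f(w) = m_j(w) + sum of B_c(w) over the children c of j
        f = list(match_cost[j])
        for c in children.get(j, []):
            f = [a + b for a, b in zip(f, solve(c))]
        if j == root:
            return f
        D, arg = l1_distance_transform(f, k)
        B, locs = [], []
        for li in range(m):
            z = li + offset[j]             # ideal location of j given its parent at li
            zc = min(max(z, 0), m - 1)     # clamp to the grid, paying k per step outside
            B.append(D[zc] + k * abs(z - zc))
            locs.append(arg[zc])
        best_child_loc[j] = locs
        return B

    root_cost = solve(root)
    l_root = min(range(m), key=root_cost.__getitem__)
    L, stack = {root: l_root}, [root]
    while stack:                           # top-down pass: read off the arg mins
        j = stack.pop()
        for c in children.get(j, []):
            L[c] = best_child_loc[c][L[j]]
            stack.append(c)
    return L, root_cost[l_root]

# Hypothetical chain 0 -> 1 -> 2 on a grid of 5 locations.
children = {0: [1], 1: [2]}
match_cost = [[0, 4, 4, 4, 4], [4, 4, 0, 4, 4], [4, 4, 4, 4, 0]]
L, cost = match_tree(children, match_cost, offset={1: 1, 2: 2})
print(L, cost)
```

The clamping step relies on the 1D L1 cost: when the ideal location falls outside the grid, every grid point lies on the same side, so the extra distance is simply added. A full 4D (x, y, rotation, scale) version would need the multi-dimensional passes and the boundary handling described on the Computation slide.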

Computation
- The grid dimension $D$ is 4 (x, y, rotation, scale).
- The array $D[x, y, \theta, s]$ is initialized to the values of the function $f(w)$.
- Forward pass:
  $D[x, y, \theta, s] = \min( D[x, y, \theta, s],\ D[x-1, y, \theta, s] + k_x,\ D[x, y-1, \theta, s] + k_y,\ D[x, y, \theta-1, s] + k_\theta,\ D[x, y, \theta, s-1] + k_s )$
- Backward pass:
  $D[x, y, \theta, s] = \min( D[x, y, \theta, s],\ D[x+1, y, \theta, s] + k_x,\ D[x, y+1, \theta, s] + k_y,\ D[x, y, \theta+1, s] + k_\theta,\ D[x, y, \theta, s+1] + k_s )$
- Doesn't consider periodic $\theta$.
- Special handling of boundary cases, additional passes.
- $B_j(l_i)$ is computable in $O(m)$ time.

Person Model
- Flexible revolute joints.
- Ideal relative orientation given by $\theta_{ij}$.
- Deformation cost measures observed deviation from ideal.
- Given the observed locations $l_i = (\theta_i, s_i, x_i, y_i)$ and $l_j = (\theta_j, s_j, x_j, y_j)$:
  $d_{ij}(l_i, l_j) = w_{ij}^{\theta} \, |(\theta_j - \theta_i) - \theta_{ij}| + w_{ij}^{s} \, |(\log s_j - \log s_i) - \log s_{ij}| + w_{ij}^{x} \, |x'_{ij} - x'_{ji}| + w_{ij}^{y} \, |y'_{ij} - y'_{ji}|$
- Small $w_{ij}^{\theta}$
- Large $w_{ij}^{s}$, $w_{ij}^{x}$ and $w_{ij}^{y}$

Recognition Results

Car Model
- Flexible prismatic joints
- $d_{ij}(l_i, l_j) = \infty \cdot |\theta_j - \theta_i| + w_{ij}^{s} \, |(\log s_j - \log s_i) - \log s_{ij}| + w_{ij}^{x} \, |x'_{ij} - x'_{ji}| + w_{ij}^{y} \, |y'_{ij} - y'_{ji}|$

Bayesian Formulation
- Best match by MAP estimation:
  $L^* = \arg\max_L \Pr(L \mid I)$
- Applying Bayes rule,
  $L^* = \arg\max_L \Pr(I \mid L) \Pr(L)$
- Prior information: from spring connections
- Likelihood: approx. product of match qualities for individual parts.
- To minimize the energy function, take the negative logarithm:
  $L^* = \arg\min_L \left( \sum_{(v_i, v_j) \in E} d_{ij}(l_i, l_j) - \sum_{v_i \in V} \ln g_i(I, l_i) \right)$

Summary
- No decisions until the end.
  - No feature detection: quality maps or likelihoods
  - No hard geometric constraints: deformation costs or priors
- Efficient algorithms.
  - Dynamic programming critical
  - Not applicable to all problems, need good factorizations of geometry and appearance
- Good for categorical object recognition.
  - Qualitative descriptions of appearance
  - Factoring variability in appearance and geometry
- Deals well with occlusion.
  - In contrast to hard feature detection
- Most applicable to 2D objects defined by a relatively small number of parts.
- Unclear how to extend to a large number of transformation parameters per part.
  - Explicit representation grows exponentially
- No known way of using it to index into model databases.
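The negative-logarithm step on the Bayesian Formulation slide can be spelled out as follows. This is a sketch under two assumptions that the slides only state informally: the likelihood factors as a product of per-part match qualities $g_i$, and the spring prior takes the form $\Pr(L) \propto \prod \exp(-d_{ij})$.

```latex
\begin{align*}
L^* &= \arg\max_L \Pr(I \mid L)\,\Pr(L) \\
    &= \arg\max_L \prod_{v_i \in V} g_i(I, l_i)
       \prod_{(v_i, v_j) \in E} \exp\bigl(-d_{ij}(l_i, l_j)\bigr) \\
    &= \arg\min_L \Bigl( \sum_{(v_i, v_j) \in E} d_{ij}(l_i, l_j)
       - \sum_{v_i \in V} \ln g_i(I, l_i) \Bigr)
\end{align*}
```

Under these assumptions the match cost of the energy formulation corresponds to $m_i(I, l_i) = -\ln g_i(I, l_i)$, so the MAP estimate and the minimum-energy configuration coincide.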
