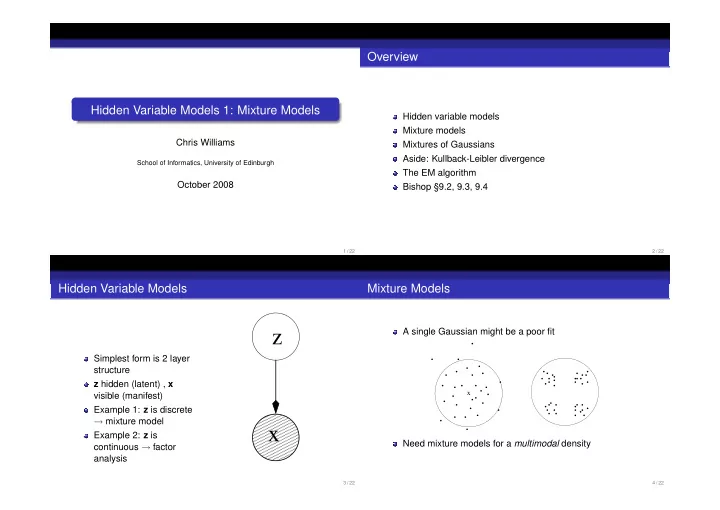

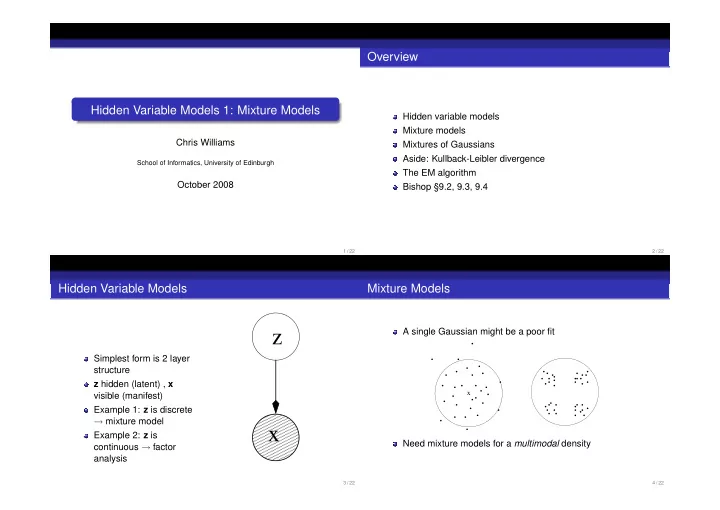

Hidden Variable Models 1: Mixture Models

Chris Williams, School of Informatics, University of Edinburgh. October 2008.

Overview

- Hidden variable models
- Mixture models
- Mixtures of Gaussians
- Aside: Kullback-Leibler divergence
- The EM algorithm
- Bishop §9.2, 9.3, 9.4

Hidden Variable Models

- The simplest form is a 2-layer structure: z is hidden (latent), x is visible (manifest).
- Example 1: z is discrete → mixture model
- Example 2: z is continuous → factor analysis

[Figure: two-layer model with hidden node z and visible node x]

Mixture Models

- A single Gaussian might be a poor fit.
- Need mixture models for a multimodal density.

[Figure: scatter plot of data points]

Generating data from a mixture distribution

    for each datapoint
        choose a component with probability π_j
        generate a sample from the chosen component density
    end for

Let $z$ be a 1-of-$k$ indicator variable, with $\sum_j z_j = 1$.

$p(z_j = 1) = \pi_j$ is the probability that the $j$th component is active.

$0 \le \pi_j \le 1$ for all $j$, and $\sum_{j=1}^{k} \pi_j = 1$.

The $\pi_j$'s are called the mixing proportions.

$$p(x) = \sum_{j=1}^{k} p(z_j = 1)\, p(x \mid z_j = 1) = \sum_{j=1}^{k} \pi_j\, p(x \mid \theta_j)$$

The $p(x \mid \theta_j)$'s are called the mixture components.

Responsibilities

$$\gamma(z_j) \equiv p(z_j = 1 \mid x) = \frac{p(z_j = 1)\, p(x \mid z_j = 1)}{\sum_{\ell} p(z_\ell = 1)\, p(x \mid z_\ell = 1)} = \frac{\pi_j\, p(x \mid z_j = 1)}{\sum_{\ell} \pi_\ell\, p(x \mid z_\ell = 1)}$$

$\gamma(z_j)$ is the posterior probability (or responsibility) for component $j$ to have generated datapoint $x$.

Maximum likelihood estimation for mixture models

$$L(\theta) = \sum_{i=1}^{n} \ln \sum_{j=1}^{k} \pi_j\, p(x_i \mid \theta_j)$$

$$\frac{\partial L}{\partial \theta_j} = \sum_{i} \frac{\pi_j}{\sum_{\ell} \pi_\ell\, p(x_i \mid \theta_\ell)} \frac{\partial p(x_i \mid \theta_j)}{\partial \theta_j}$$

Now use

$$\frac{\partial p(x_i \mid \theta_j)}{\partial \theta_j} = p(x_i \mid \theta_j) \frac{\partial \ln p(x_i \mid \theta_j)}{\partial \theta_j}$$

and therefore

$$\frac{\partial L}{\partial \theta_j} = \sum_{i} \gamma(z_{ij}) \frac{\partial \ln p(x_i \mid \theta_j)}{\partial \theta_j}$$
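The generative procedure and the responsibility formula above can be sketched in a few lines of Python. This is a minimal illustration, assuming 1-d Gaussian components; the function names (`gaussian_pdf`, `sample_mixture`, `responsibilities`) and all parameter values are hypothetical choices made for the example, not part of the lecture.

```python
import math
import random


def gaussian_pdf(x, mean, var):
    """Density of a 1-d Gaussian with the given mean and variance."""
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def sample_mixture(n, pis, means, variances, rng):
    """Draw n samples: pick a component with probability pi_j, then sample from it."""
    samples = []
    for _ in range(n):
        j = rng.choices(range(len(pis)), weights=pis)[0]
        samples.append(rng.gauss(means[j], math.sqrt(variances[j])))
    return samples


def responsibilities(x, pis, means, variances):
    """Posterior p(z_j = 1 | x) for each component j, via Bayes' rule."""
    weighted = [p * gaussian_pdf(x, m, v) for p, m, v in zip(pis, means, variances)]
    total = sum(weighted)  # this is the mixture density p(x)
    return [w / total for w in weighted]


# Illustrative (made-up) parameters for a two-component mixture.
pis = [0.6, 0.4]
means = [0.0, 5.0]
variances = [0.5, 0.5]

rng = random.Random(0)
samples = sample_mixture(200, pis, means, variances, rng)
gamma_at_zero = responsibilities(0.0, pis, means, variances)
```

A point close to the first component's mean receives almost all of its responsibility from that component, and the responsibilities for any point sum to one.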

Example: 1-d Gaussian mixture

$$p(x \mid \theta_j) = \frac{1}{(2\pi\sigma_j^2)^{1/2}} \exp\left\{ -\frac{(x - \mu_j)^2}{2\sigma_j^2} \right\}$$

$$\frac{\partial L}{\partial \mu_j} = \sum_{i} \gamma(z_{ij}) \frac{(x_i - \mu_j)}{\sigma_j^2}$$

$$\frac{\partial L}{\partial \sigma_j^2} = \frac{1}{2} \sum_{i} \gamma(z_{ij}) \left[ \frac{(x_i - \mu_j)^2}{\sigma_j^4} - \frac{1}{\sigma_j^2} \right]$$

At a maximum, set derivatives = 0:

$$\hat{\mu}_j = \frac{\sum_{i=1}^{n} \gamma(z_{ij})\, x_i}{\sum_{i=1}^{n} \gamma(z_{ij})}$$

$$\hat{\sigma}_j^2 = \frac{\sum_{i=1}^{n} \gamma(z_{ij}) (x_i - \hat{\mu}_j)^2}{\sum_{i=1}^{n} \gamma(z_{ij})}$$

$$\hat{\pi}_j = \frac{1}{n} \sum_{i} \gamma(z_{ij})$$

Example

[Figure: four panels showing the initial configuration, the final configuration, the mixture p(x), and the posteriors P(j|x). (Tipping, 1999)]

- Component 1: µ = (4.97, −0.10), σ² = 0.60, prior = 0.40
- Component 2: µ = (0.11, −0.15), σ² = 0.46, prior = 0.60

Generalize to multivariate case

$$\hat{\mu}_j = \frac{\sum_{i=1}^{n} \gamma(z_{ij})\, x_i}{\sum_{i=1}^{n} \gamma(z_{ij})}$$

$$\hat{\Sigma}_j = \frac{\sum_{i=1}^{n} \gamma(z_{ij}) (x_i - \hat{\mu}_j)(x_i - \hat{\mu}_j)^T}{\sum_{i=1}^{n} \gamma(z_{ij})}$$

$$\hat{\pi}_j = \frac{1}{n} \sum_{i} \gamma(z_{ij})$$

What happens if a component becomes responsible for a single data point?
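The closing question above can be explored numerically. The sketch below is an illustration under made-up assumptions (four hypothetical 1-d data points, two equally weighted components, names chosen for the example): one component is centred exactly on a single data point and its variance is shrunk, while the other component covers the remaining data. Under these assumptions the log likelihood keeps growing as the variance shrinks, which suggests why unconstrained maximum likelihood for Gaussian mixtures needs some care.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a 1-d Gaussian with the given mean and variance."""
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def log_likelihood(data, pis, means, variances):
    """L(theta) = sum_i ln sum_j pi_j p(x_i | theta_j)."""
    return sum(
        math.log(sum(p * gaussian_pdf(x, m, v) for p, m, v in zip(pis, means, variances)))
        for x in data
    )


# Hypothetical data; component 1 sits exactly on the first point.
data = [0.0, 1.0, 2.0, 3.0]
pis = [0.5, 0.5]
means = [data[0], 2.0]

shrinking_variances = [1.0, 1e-2, 1e-4, 1e-6]
log_likelihoods = [
    log_likelihood(data, pis, means, [var_1, 1.0]) for var_1 in shrinking_variances
]

for var_1, ll in zip(shrinking_variances, log_likelihoods):
    print(f"variance of component 1 = {var_1:g}: log likelihood = {ll:.3f}")
```

The density of the collapsing component at its own data point scales like $1/\sigma$, so that one term dominates the sum as $\sigma^2 \to 0$.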

Example 2

[Figure: four panels showing the initial configuration, the final configuration, the mixture p(x), and the posteriors P(j|x). (Tipping, 1999)]

- Component 1: µ = (1.98, 0.09), σ² = 0.49, prior = 0.42
- Component 2: µ = (0.15, 0.01), σ² = 0.51, prior = 0.58

Kullback-Leibler divergence

Measuring the "distance" between two probability densities $P(x)$ and $Q(x)$.

$$KL(P \,\|\, Q) = \sum_{i} P(x_i) \log \frac{P(x_i)}{Q(x_i)}$$

Also called the relative entropy.

Using $\log z \le z - 1$, one can show that $KL(P \,\|\, Q) \ge 0$, with equality when $P = Q$.

Note that $KL(P \,\|\, Q) \ne KL(Q \,\|\, P)$.

The EM algorithm

Q: How do we estimate parameters of a Gaussian mixture distribution?

A: Use the re-estimation equations

$$\hat{\mu}_j \leftarrow \frac{\sum_{i=1}^{n} \gamma(z_{ij})\, x_i}{\sum_{i=1}^{n} \gamma(z_{ij})}$$

$$\hat{\sigma}_j^2 \leftarrow \frac{\sum_{i=1}^{n} \gamma(z_{ij}) (x_i - \hat{\mu}_j)^2}{\sum_{i=1}^{n} \gamma(z_{ij})}$$

$$\hat{\pi}_j \leftarrow \frac{1}{n} \sum_{i} \gamma(z_{ij})$$

This is intuitively reasonable, but the EM algorithm shows that these updates will converge to a local maximum of the likelihood.

The EM algorithm

- EM = Expectation-Maximization
- Applies where there is incomplete (or missing) data.
- If this data were known, a maximum likelihood solution would be relatively easy.
- In a mixture model, the missing knowledge is which component generated a given data point.
- Although EM can have slow convergence to the local maximum, it is usually relatively simple and easy to implement. For Gaussian mixtures it is the method of choice.
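The properties of the KL divergence stated above (non-negativity, zero when $P = Q$, and asymmetry) can be checked on a small discrete example. This is a sketch only; the two distributions `p` and `q` are arbitrary made-up values, and `kl_divergence` is a hypothetical helper name.

```python
import math


def kl_divergence(p, q):
    """KL(P || Q) = sum_i P(x_i) log(P(x_i) / Q(x_i)) for discrete distributions.

    Terms with P(x_i) = 0 contribute zero by convention; Q is assumed to be
    strictly positive wherever P is.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


# Two arbitrary distributions over three outcomes (illustrative values).
p = [0.5, 0.4, 0.1]
q = [0.3, 0.3, 0.4]

kl_pq = kl_divergence(p, q)
kl_qp = kl_divergence(q, p)
kl_pp = kl_divergence(p, p)

print(f"KL(P||Q) = {kl_pq:.4f}")
print(f"KL(Q||P) = {kl_qp:.4f}")
print(f"KL(P||P) = {kl_pp:.4f}")
```

Because the two directions generally differ, the quotation marks around "distance" are deserved: the KL divergence is not a metric.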

The nitty-gritty

$$L(\theta) = \sum_{i=1}^{n} \ln p(x_i \mid \theta)$$

Consider just one $x_i$ first:

$$\log p(x_i \mid \theta) = \log p(x_i, z_i \mid \theta) - \log p(z_i \mid x_i, \theta).$$

Now introduce $q(z_i)$ and take expectations:

$$\begin{aligned}
\log p(x_i \mid \theta) &= \sum_{z_i} q(z_i) \log p(x_i, z_i \mid \theta) - \sum_{z_i} q(z_i) \log p(z_i \mid x_i, \theta) \\
&= \sum_{z_i} q(z_i) \log \frac{p(x_i, z_i \mid \theta)}{q(z_i)} - \sum_{z_i} q(z_i) \log \frac{p(z_i \mid x_i, \theta)}{q(z_i)} \\
&\stackrel{\text{def}}{=} L_i(q_i, \theta) + KL(q_i \,\|\, p_i)
\end{aligned}$$

From the non-negativity of the KL divergence, note that

$$L_i(q_i, \theta) \le \log p(x_i \mid \theta)$$

i.e. $L_i(q_i, \theta)$ is a lower bound on the log likelihood.

We now set $q(z_i) = p(z_i \mid x_i, \theta^{old})$ [E step]:

$$\begin{aligned}
L_i(q_i, \theta) &= \sum_{z_i} p(z_i \mid x_i, \theta^{old}) \log p(x_i, z_i \mid \theta) - \sum_{z_i} p(z_i \mid x_i, \theta^{old}) \log p(z_i \mid x_i, \theta^{old}) \\
&\stackrel{\text{def}}{=} Q_i(\theta \mid \theta^{old}) + H(q_i)
\end{aligned}$$

Notice that $H(q_i)$ is independent of $\theta$ (as opposed to $\theta^{old}$).

Now sum over cases $i = 1, \ldots, n$:

$$L(q, \theta) = \sum_{i=1}^{n} L_i(q_i, \theta) \le \sum_{i=1}^{n} \log p(x_i \mid \theta)$$

and

$$\begin{aligned}
L(q, \theta) &= \sum_{i=1}^{n} Q_i(\theta \mid \theta^{old}) + \sum_{i=1}^{n} H(q_i) \\
&\stackrel{\text{def}}{=} Q(\theta \mid \theta^{old}) + \sum_{i=1}^{n} H(q_i)
\end{aligned}$$

where $Q$ is called the expected complete-data log likelihood.

Thus to increase $L(q, \theta)$ with respect to $\theta$ we need only increase $Q(\theta \mid \theta^{old})$.

Best to choose [M step]

$$\theta = \operatorname{argmax}_{\theta}\, Q(\theta \mid \theta^{old})$$

[Figure: the log likelihood ln p(X|θ) and the lower bound L(q, θ) plotted against θ, with θ old and θ new marked. Chris Bishop, PRML 2006]
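The decomposition $\log p(x_i \mid \theta) = L_i(q_i, \theta) + KL(q_i \,\|\, p_i)$ derived above can be verified numerically for a single data point. The following is a sketch under assumed values: a two-component 1-d Gaussian mixture with made-up parameters, an arbitrary distribution `q`, and hypothetical helper names. It also checks that the bound becomes tight when `q` is set to the posterior, as in the E step.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a 1-d Gaussian with the given mean and variance."""
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def lower_bound(q, joint_probs):
    """L_i(q, theta) = sum_z q(z) log( p(x, z | theta) / q(z) )."""
    return sum(qj * math.log(pj / qj) for qj, pj in zip(q, joint_probs) if qj > 0.0)


def kl_to_posterior(q, posterior):
    """KL(q || p) = sum_z q(z) log( q(z) / p(z | x, theta) )."""
    return sum(qj * math.log(qj / pj) for qj, pj in zip(q, posterior) if qj > 0.0)


# Made-up mixture parameters and a single observation.
x = 1.0
pis, means, variances = [0.4, 0.6], [0.0, 3.0], [1.0, 1.0]

# Joint p(x, z_j = 1 | theta) = pi_j p(x | theta_j); the marginal is their sum.
joint_probs = [p * gaussian_pdf(x, m, v) for p, m, v in zip(pis, means, variances)]
marginal = sum(joint_probs)
log_px = math.log(marginal)
posterior = [pj / marginal for pj in joint_probs]

# An arbitrary q gives a strict lower bound; the gap is exactly the KL term.
q = [0.5, 0.5]
bound = lower_bound(q, joint_probs)
gap = kl_to_posterior(q, posterior)

# Setting q to the posterior (the E step) closes the gap.
tight_bound = lower_bound(posterior, joint_probs)

print(f"log p(x) = {log_px:.6f}, bound + KL = {bound + gap:.6f}")
print(f"bound with q = posterior: {tight_bound:.6f}")
```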

EM algorithm: Summary

- E-step: Calculate $Q(\theta \mid \theta^{old})$ using the responsibilities $p(z_i \mid x_i, \theta^{old})$
- M-step: Maximize $Q(\theta \mid \theta^{old})$ with respect to $\theta$

EM algorithm for mixtures of Gaussians

$$\mu_j^{new} \leftarrow \frac{\sum_{i=1}^{n} p(j \mid x_i, \theta^{old})\, x_i}{\sum_{i=1}^{n} p(j \mid x_i, \theta^{old})}$$

$$(\sigma_j^2)^{new} \leftarrow \frac{\sum_{i=1}^{n} p(j \mid x_i, \theta^{old}) (x_i - \mu_j^{new})^2}{\sum_{i=1}^{n} p(j \mid x_i, \theta^{old})}$$

$$\pi_j^{new} \leftarrow \frac{1}{n} \sum_{i=1}^{n} p(j \mid x_i, \theta^{old})$$

[Do mixture of Gaussians demo here]

k-means clustering

    initialize centres µ_1, ..., µ_k
    while (not terminated)
        for i = 1, ..., n
            calculate |x_i − µ_j|² for all centres
            assign datapoint i to the closest centre
        end for
        recompute each µ_j as the mean of the datapoints assigned to it
    end while

The k-means algorithm is equivalent to the EM algorithm for spherical covariances $\sigma_j^2 I$ in the limit $\sigma_j^2 \to 0$ for all $j$.
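The E-step and M-step updates for mixtures of Gaussians summarized above can be put together into a short loop. This is a minimal sketch for the 1-d case, not the demo referred to in the slides: the synthetic data, the initialization at the smallest and largest data values, the fixed count of 50 iterations, and the function names are all assumptions made for the example. A practical implementation would also guard against collapsing variances and work in log space.

```python
import math
import random


def gaussian_pdf(x, mean, var):
    """Density of a 1-d Gaussian with the given mean and variance."""
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def log_likelihood(data, pis, means, variances):
    """L(theta) = sum_i ln sum_j pi_j p(x_i | theta_j)."""
    return sum(
        math.log(sum(p * gaussian_pdf(x, m, v) for p, m, v in zip(pis, means, variances)))
        for x in data
    )


def em_step(data, pis, means, variances):
    """One EM iteration for a 1-d Gaussian mixture."""
    n, k = len(data), len(pis)

    # E-step: responsibilities p(j | x_i, theta_old) for every point.
    gammas = []
    for x in data:
        weighted = [p * gaussian_pdf(x, m, v) for p, m, v in zip(pis, means, variances)]
        total = sum(weighted)
        gammas.append([w / total for w in weighted])

    # M-step: responsibility-weighted mean, variance and mixing proportion.
    new_pis, new_means, new_variances = [], [], []
    for j in range(k):
        n_j = sum(g[j] for g in gammas)
        mean_j = sum(g[j] * x for g, x in zip(gammas, data)) / n_j
        var_j = sum(g[j] * (x - mean_j) ** 2 for g, x in zip(gammas, data)) / n_j
        new_pis.append(n_j / n)
        new_means.append(mean_j)
        new_variances.append(var_j)
    return new_pis, new_means, new_variances


# Synthetic data from two well-separated Gaussians (illustrative only).
rng = random.Random(0)
data = [rng.gauss(0.0, 1.0) for _ in range(120)] + [rng.gauss(5.0, 1.0) for _ in range(80)]

# Assumed initialization: equal weights, means at the data extremes.
pis, means, variances = [0.5, 0.5], [min(data), max(data)], [1.0, 1.0]

history = [log_likelihood(data, pis, means, variances)]
for _ in range(50):
    pis, means, variances = em_step(data, pis, means, variances)
    history.append(log_likelihood(data, pis, means, variances))

print("mixing proportions:", [round(p, 3) for p in pis])
print("means:", [round(m, 3) for m in means])
print("variances:", [round(v, 3) for v in variances])
```

Tracking `history` gives a cheap sanity check: the log likelihood should never decrease from one iteration to the next, up to floating-point error.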
