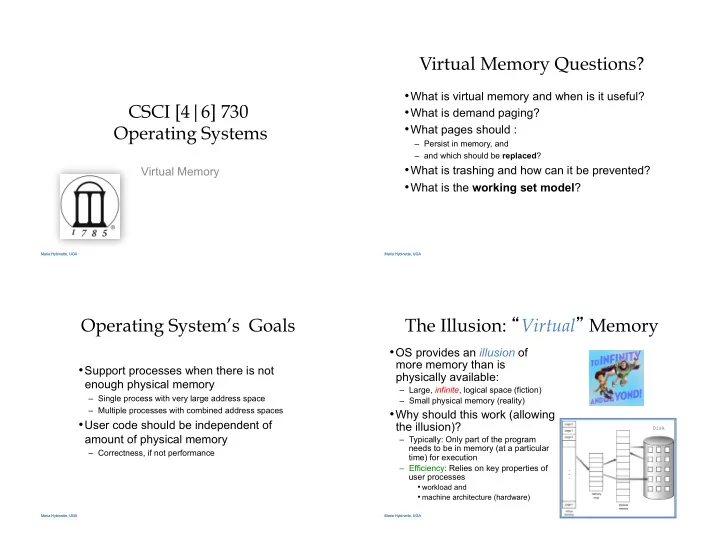

CSCI [4|6] 730: Operating Systems
Virtual Memory
Maria Hybinette, UGA

Questions?
• What is virtual memory and when is it useful?
• What is demand paging?
• What pages should
  – persist in memory, and
  – which should be replaced?
• What is thrashing and how can it be prevented?
• What is the working set model?

Operating System’s Goals
• Support processes when there is not enough physical memory
  – Single process with very large address space
  – Multiple processes with combined address spaces
• User code should be independent of amount of physical memory
  – Correctness, if not performance

The Illusion: "Virtual" Memory
• OS provides an illusion of more memory than is physically available:
  – Large, infinite, logical space (fiction)
  – Small physical memory (reality)
• Why should this work (allowing the illusion)?
  – Typically: Only part of the program needs to be in memory (at a particular time) for execution
  – Efficiency: Relies on key properties of user processes
    • workload and
    • machine architecture (hardware)
[Figure: disk]

The Million Dollar Question?
• How does the OS decide what is in "main" memory and what is on disk?
• How can we decide?
  – We can:
    • Memory Access Patterns

Observations: Memory Access Patterns
• Sequential memory accesses of a process are predictable and tend to have locality of reference:
  – Spatial: reference memory addresses near previously referenced addresses (in physical memory)
  – Temporal: reference memory addresses that have been referenced in the past (or recent past).
• Processes spend majority of time in small portion of code
  – Estimate: 90% of time is spent in 10% of code; doesn’t jump around much.
• Implication:
  – Process only uses small amount of address space at any moment
  – Only small amount of address space must be resident in physical memory

Approach: Demand Paging
• (Old) Approach 1: Favor one process and bring in (swap in) its entire address space.
• (New) Approach 2: Demand Paging: Bring pages into memory only when needed (lazy pager instead of a swapper).
  – Less memory
  – Faster response time?
• Process viewed as a sequence of page accesses rather than contiguous address space
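
The locality observation above can be checked on a toy trace. The sketch below is only an illustration under stated assumptions: the trace (a tight code loop summing a 16-page array), the addresses, the 4 KiB page size, and the helper names `build_trace` and `max_pages_in_window` are all hypothetical and not from the slides. It counts how many distinct pages the process touches overall versus within any short window of accesses.

```python
from collections import Counter

PAGE_SIZE = 4096  # assumed page size in bytes


def build_trace(n_elements=8192, code_base=0x400000, data_base=0x10000000):
    """Hypothetical trace: each loop iteration fetches one instruction from a
    small code loop (temporal locality) and reads the next 8-byte array
    element (spatial locality)."""
    trace = []
    for i in range(n_elements):
        trace.append(code_base + (i % 8) * 4)  # instruction fetch
        trace.append(data_base + i * 8)        # sequential data read
    return trace


def max_pages_in_window(addresses, window, page_size=PAGE_SIZE):
    """Largest number of distinct pages touched in any run of `window`
    consecutive accesses (a sliding-window count)."""
    pages = [a // page_size for a in addresses]
    counts = Counter(pages[:window])
    best = len(counts)
    for old, new in zip(pages, pages[window:]):
        counts[new] += 1
        counts[old] -= 1
        if counts[old] == 0:
            del counts[old]
        best = max(best, len(counts))
    return best


trace = build_trace()
total_pages = len({a // PAGE_SIZE for a in trace})
peak_pages = max_pages_in_window(trace, window=1024)
print(f"pages touched overall: {total_pages}, "
      f"at most {peak_pages} in any 1024 accesses")
```

For this particular trace, only a few pages are needed at any moment even though the process touches many more over its lifetime, which is the property demand paging relies on.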

Virtual Memory Approach: Intuition
• Idea: OS keeps unreferenced pages on disk and referenced pages in physical memory
  – Slower, cheaper backing store than memory
• Process can run
  – Even when not all pages are loaded into main memory
• OS and hardware cooperate to provide illusion of large disk as fast as main memory
  – Same behavior as if all of address space in main memory
  – Hopefully have similar performance!
• Requirements:
  – OS must have mechanism to identify location of each page in address space in memory, or on disk
  – OS must have (allocation) policy for
    • determining which pages live in memory, and
    • which (remain) on disk
  – OS must have (replacement) policy for which pages should be evicted.

Reflect: Virtual Address Space Mechanisms
• Each page in virtual address space maps to one of three locations:
  – Physical main memory: Small, fast, expensive
  – Disk (backing store): Large, slow, cheap
  – Nothing (error): Free
• Leverage memory hierarchy of machine architecture
• Each layer acts as "backing store" for the layer above
[Figure: memory hierarchy (registers, cache, main memory, disk storage); smaller, faster and more expensive toward the top, bigger, slower and cheaper toward the bottom]

Virtual Memory Mechanisms
The TLB factor: Hardware and OS cooperate to translate addresses
• First, hardware checks TLB for virtual address
  – TLB hit: Address translation is done; page in physical memory
  – TLB miss:
    • Hardware or OS walk page tables
    • If PTE designates page is valid, then page in physical memory
• Main Memory Miss: Not in main memory: Page fault (i.e., invalid)
  – Trap into OS (not handled by hardware)
  – [if memory is full] OS selects victim page in memory to replace
    • Write victim page out to disk if modified (add dirty bit to PTE)
  – OS reads referenced page from disk into memory
  – Page table is updated, valid bit is set
  – Process continues execution

Virtual Address Space Mechanisms (cont)
Extend page tables with an extra bit to indicate whether it is in memory or on disk (a resident bit):
• valid (or invalid)
• Page in memory: valid bit set in page table entry (PTE)
• Page out to disk: valid bit cleared (invalid)
  • PTE points to block on disk
  • Causes trap into OS when page is referenced
  • Trap: page fault
• Page table?
  – Main memory
  – Cache (the look-aside buffer) TLB
[Figure: page table with frame # and valid-invalid bit columns]
[Figure: address translation through the TLB; CPU issues logical address (page number p, offset d); TLB hit yields physical address (frame number f, offset d); TLB miss consults the page table]
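
The translation and page-fault steps listed above can be sketched as a small simulation. This is a minimal sketch under stated assumptions, not how any real MMU or kernel is written: the names `PTE`, `PageFault`, `translate`, `handle_page_fault`, and `access` are hypothetical, the page size is assumed to be 4 KiB, the TLB is modeled as a plain dictionary, and the victim choice ("first resident page found") is only a placeholder policy.

```python
from dataclasses import dataclass

PAGE_SIZE = 4096  # assumed page size in bytes


@dataclass
class PTE:
    frame: int = -1      # frame number; meaningful only while valid is True
    valid: bool = False  # resident bit: True when the page is in memory
    dirty: bool = False  # set on write; victim is written back if True


class PageFault(Exception):
    def __init__(self, page):
        super().__init__(f"page fault on page {page}")
        self.page = page


def translate(vaddr, tlb, page_table):
    """Check the TLB first, then walk the page table; trap if not resident."""
    page, offset = divmod(vaddr, PAGE_SIZE)
    if page in tlb:
        return tlb[page] * PAGE_SIZE + offset, "TLB hit"
    pte = page_table[page]  # a KeyError models the "nothing (error)" case
    if not pte.valid:
        raise PageFault(page)
    tlb[page] = pte.frame
    return pte.frame * PAGE_SIZE + offset, "TLB miss"


def handle_page_fault(page, tlb, page_table, free_frames, disk_writes):
    """OS side: find a frame (evicting a victim if memory is full), then
    mark the faulting page valid. Disk I/O is only recorded, not performed."""
    if free_frames:
        frame = free_frames.pop()
    else:
        # Placeholder policy: evict the first resident page found.
        victim = next(p for p, e in page_table.items() if e.valid)
        entry = page_table[victim]
        if entry.dirty:
            disk_writes.append(victim)  # write back only if modified
        entry.valid = entry.dirty = False
        tlb.pop(victim, None)           # stale TLB entry must go too
        frame = entry.frame
    pte = page_table[page]
    pte.frame, pte.valid, pte.dirty = frame, True, False


def access(vaddr, tlb, page_table, free_frames, disk_writes, write=False):
    """One memory reference: translate, service a fault if needed, retry."""
    try:
        paddr, how = translate(vaddr, tlb, page_table)
    except PageFault as fault:
        handle_page_fault(fault.page, tlb, page_table, free_frames, disk_writes)
        paddr, _ = translate(vaddr, tlb, page_table)
        how = "page fault"
    if write:
        page_table[vaddr // PAGE_SIZE].dirty = True
    return paddr, how
```

A caller would set up a page table such as `{0: PTE(), 1: PTE(), 2: PTE()}`, an empty `tlb` dictionary, and a list of free frame numbers, then call `access` for each reference. Raising an exception and retrying mirrors the trap-and-restart behavior described on the slide.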

Flow of "Paging" Operations
[Flowchart: CPU checks TLB. PTE in TLB? Yes: CPU generates physical address. No: access page table. Page in MM? Yes: update TLB. No: page fault routine (OS instructs CPU to read the page from disk; CPU activates I/O hardware; page transferred from disk to main memory; memory full? Yes: page replacement; page tables updated).]
[Figure: address translation through the TLB; logical address (p, d), TLB hit or TLB miss via the page table, physical address (f, d), physical memory]

Virtual Memory Policies
• OS needs to decide on policies on page faults concerning:
  – Page selection (When to bring in)
    • When should a page (or pages) on disk be brought into memory?
    • Two cases
      – When process starts, code pages begin on disk
      – As process runs, code and data pages may be moved to disk
  – Page replacement (What to replace)
    • Which resident page (or pages) in memory should be thrown out to disk?
• Goal: Minimize number of page faults
  – Page faults require milliseconds to handle (reading from disk)
  – Implication: Plenty of time for OS to make good decision

The When: Page Selection
• When should a page be brought from disk into memory?
• Request paging: User specifies which pages are needed for process
  – Problems:
    • Manage memory by hand
    • Users do not always know future references
    • Users are not impartial (and in fact they may be wrong)
• Demand paging: Load page only when page fault occurs
  – Intuition: Wait until page must absolutely be in memory
  – When process starts: No pages are loaded in memory
  – Advantage: Less work for user
  – Disadvantage: Pay cost of page fault for every newly accessed page

Page Selection Continued
• Pre-paging (anticipatory, prefetching): OS loads page into memory before page is referenced
  – OS predicts future accesses (the oracle) and brings pages into memory ahead of time (neighboring pages).
    • How?
    • Works well for some access patterns (e.g., sequential)
  – Advantages: May avoid page faults
  – Problems?
• Hints: Combine demand or pre-paging with user-supplied hints about page references
  – User specifies: may need page in future, don't need this page anymore, or sequential access pattern, ...
  – Example: madvise() in Unix (1994 4.4 BSD UNIX)
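
To make the demand paging versus pre-paging trade-off concrete, here is a minimal sketch. It rests on several assumptions that are not in the slides: memory is unlimited (no replacement), pre-paging simply loads the next `prefetch` neighboring pages on each fault, and the function name `simulate_selection` and both reference strings are hypothetical.

```python
def simulate_selection(reference_string, prefetch=0):
    """Return (page_faults, pages_loaded) for a run with unlimited memory.

    prefetch=0 models pure demand paging; prefetch=k models a simple
    pre-pager that also loads the k following pages on every fault.
    """
    resident = set()
    faults = 0
    for page in reference_string:
        if page not in resident:
            faults += 1
            resident.add(page)
            for ahead in range(1, prefetch + 1):
                resident.add(page + ahead)  # anticipatory load of a neighbor
    return faults, len(resident)


sequential = list(range(16))   # hypothetical sequential scan
strided = [0, 10, 20, 30]      # hypothetical widely spaced accesses

print("sequential, demand:  ", simulate_selection(sequential))
print("sequential, prefetch:", simulate_selection(sequential, prefetch=3))
print("strided, demand:     ", simulate_selection(strided))
print("strided, prefetch:   ", simulate_selection(strided, prefetch=3))
```

On the sequential trace, pre-paging cuts the fault count without loading extra pages. On the strided trace it saves no faults and loads pages that are never referenced, which is one plausible answer to the open "Problems?" question on the slide.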

What happens if there is no free frame?
• Page replacement
  – find some page in memory that is not really in use, and swap it out.
• Observation: Same page may be brought into memory several times (so try to keep that one in memory)
  – Frequently used.

Virtual Page Optimizations
• Copy-on-Write: on process creation allow parent and child to share the same page in memory until one modifies the page.
[Figure: copy-on-write, copy of page C]

Page Replacement Strategies
• Which page in main memory should be selected as victim?
  – Write out victim page to disk if modified (dirty bit set)
  – If victim page is not modified (clean), just discard (cheaper to replace)
• OPT: Replace page not used for longest time in future
  – Advantage: Guaranteed to minimize number of page faults
  – Disadvantage: Requires that OS predict the future
    • Not practical, but is good to use for comparison (best you can do)
• Random: Replace any page at random
  – Advantage: Easy to implement
  – Surprise?: Works okay when memory is not severely over-committed (recall lottery scheduling, random is not too shabby, in many areas)

Page Replacement Continued
• FIFO: Replace page that has been in memory the longest
  – Intuition: First referenced long time ago, done with it now
  – Advantages:
    • Fair: All pages receive equal residency
    • Easy to implement (circular buffer)
  – Disadvantage: Some pages may always be needed
• L(east)RU: Replace page not used for longest time in past
  – Intuition: Use the past to predict the future
  – Advantages:
    • With locality, LRU approximates OPT (but looks backwards)
  – Disadvantages:
    • Harder to implement, must track which pages have been accessed
    • Does not handle all workloads well
[Figure: example with 3 frames over a reference string of pages A to D; labels: Future, MRU, LRU, MFR, LFU]
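
The FIFO, LRU, and OPT policies above can be compared by counting page faults on a reference string. The sketch below is illustrative only: the function names are hypothetical, and the reference string is a commonly used textbook example rather than the one in the slide figure, which is not recoverable from the extracted text.

```python
from collections import OrderedDict, deque


def fifo_faults(refs, frames):
    """FIFO: evict the page that has been resident the longest."""
    resident, queue, faults = set(), deque(), 0
    for page in refs:
        if page in resident:
            continue
        faults += 1
        if len(resident) == frames:
            resident.remove(queue.popleft())
        resident.add(page)
        queue.append(page)
    return faults


def lru_faults(refs, frames):
    """LRU: evict the page whose last use lies furthest in the past."""
    recency = OrderedDict()  # least recently used page comes first
    faults = 0
    for page in refs:
        if page in recency:
            recency.move_to_end(page)  # a hit refreshes recency
            continue
        faults += 1
        if len(recency) == frames:
            recency.popitem(last=False)
        recency[page] = True
    return faults


def opt_faults(refs, frames):
    """OPT: evict the page whose next use lies furthest in the future.
    Needs the whole reference string, so it is a yardstick, not a policy
    an OS could run."""
    resident, faults = set(), 0
    for i, page in enumerate(refs):
        if page in resident:
            continue
        faults += 1
        if len(resident) == frames:
            future = refs[i + 1:]

            def next_use(p):
                return future.index(p) if p in future else float("inf")

            resident.remove(max(resident, key=next_use))
        resident.add(page)
    return faults


# Hypothetical reference string (page numbers), 3 frames.
refs = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
for name, policy in [("FIFO", fifo_faults), ("LRU", lru_faults), ("OPT", opt_faults)]:
    print(name, policy(refs, frames=3))
```

With this string and 3 frames, FIFO incurs 15 faults, LRU 12, and OPT 9, consistent with the claim that LRU approximates OPT when the workload has locality.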
