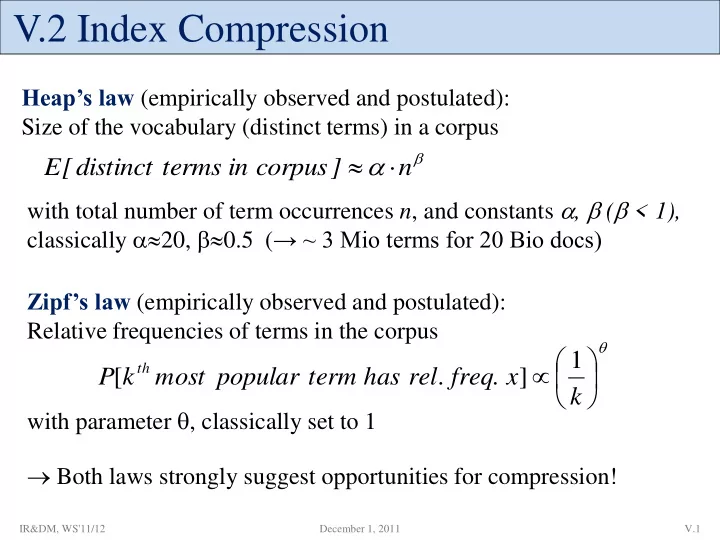

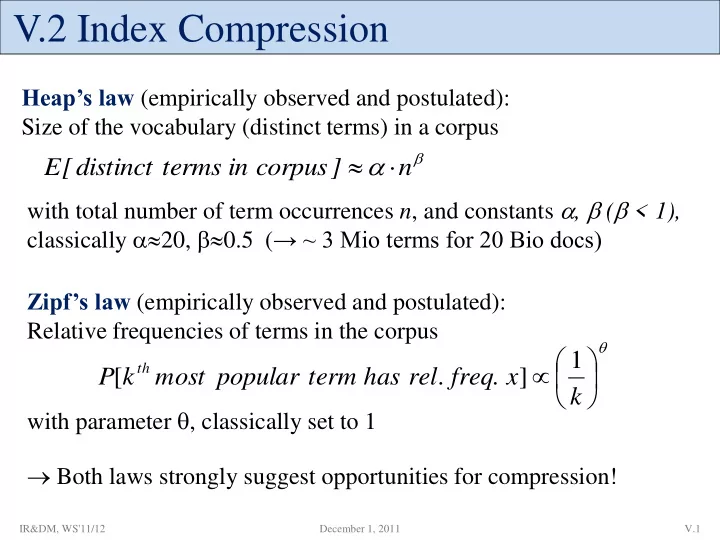

V.2 Index Compression

Heaps’ law (empirically observed and postulated): the size of the vocabulary (number of distinct terms) in a corpus grows as

$$E[\#\text{distinct terms in corpus}] \approx \alpha \cdot n^{\beta}$$

with total number of term occurrences $n$ and constants $\alpha$, $\beta$ ($\beta < 1$), classically $\alpha \approx 20$, $\beta \approx 0.5$ (→ ~3 Mio terms for 20 Bio docs).

Zipf’s law (empirically observed and postulated): the relative frequencies of terms in the corpus follow

$$\text{rel. freq. of the } k\text{-th most popular term} \propto \left(\frac{1}{k}\right)^{\theta}$$

with parameter $\theta$, classically set to 1.

Both laws strongly suggest opportunities for compression!

IR&DM, WS'11/12, December 1, 2011
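A minimal Python sketch of both laws, assuming the classical constants as defaults ($\alpha = 20$, $\beta = 0.5$, $\theta = 1$); the function names and the input sizes are hypothetical choices for illustration. It shows how a handful of top-ranked terms cover a large share of all term occurrences, which is what makes skewed code lengths pay off.

```python
def heaps_vocabulary(n, alpha=20.0, beta=0.5):
    """Heaps' law: expected number of distinct terms after n term occurrences."""
    return alpha * n ** beta


def zipf_frequencies(vocab_size, theta=1.0):
    """Zipf's law: relative frequency of the k-th most popular term ~ (1/k)^theta."""
    weights = [(1.0 / k) ** theta for k in range(1, vocab_size + 1)]
    total = sum(weights)
    return [w / total for w in weights]  # normalized so the frequencies sum to 1


# Vocabulary estimate for 10^10 term occurrences: 20 * 10^5 = 2 million terms.
print(heaps_vocabulary(10**10))

# Share of all occurrences covered by the 10 most popular of 100,000 terms.
freqs = zipf_frequencies(100_000)
print(round(sum(freqs[:10]), 3))
```

With $\theta = 1$ the most popular term is twice as frequent as the second, three times as frequent as the third, and so on.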

Recap: Huffman Coding

Variable-length prefix code based on a frequency analysis of the underlying distribution of symbols (e.g., words or tokens) in a text. Key idea: assign the shortest code to the most frequent symbol.

| Symbol x | Frequency f(x) | Huffman encoding |
|---|---|---|
| a | 0.8 | 0 |
| peter | 0.1 | 10 |
| picked | 0.07 | 110 |
| peck | 0.03 | 111 |

[Figure: Huffman tree for a, peter, picked, peck, with edges labeled 0 and 1]

Let f(x) be the probability (or relative frequency) of the x-th symbol in some text d. The entropy of the text (or of the underlying probability distribution f) is:

$$H(d) = -\sum_{x} f(x) \log_2 f(x)$$

H(d) is a lower bound on the average (i.e., expected) number of bits per symbol needed with optimal compression. Huffman coding comes close to H(d) (within less than 1 bit per symbol).
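A minimal Python sketch that builds a Huffman code with a priority queue and compares its average code length with the entropy for the four-symbol example. It computes only code lengths, not the bit patterns; the function names are hypothetical.

```python
import heapq
import math
from itertools import count


def huffman_code_lengths(freqs):
    """Return {symbol: codeword length} of a Huffman code for the given frequencies."""
    if len(freqs) == 1:  # a lone symbol still needs a 1-bit codeword
        return {sym: 1 for sym in freqs}
    tiebreak = count()  # unique counter so equal weights never compare the dicts
    heap = [(f, next(tiebreak), {sym: 0}) for sym, f in freqs.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        # merge the two least frequent subtrees
        f1, _, left = heapq.heappop(heap)
        f2, _, right = heapq.heappop(heap)
        # every symbol in the merged subtree moves one level deeper
        merged = {sym: depth + 1 for sym, depth in {**left, **right}.items()}
        heapq.heappush(heap, (f1 + f2, next(tiebreak), merged))
    return heap[0][2]


def entropy(freqs):
    """H = -sum f(x) log2 f(x), in bits per symbol."""
    return -sum(f * math.log2(f) for f in freqs.values() if f > 0)


freqs = {"a": 0.8, "peter": 0.1, "picked": 0.07, "peck": 0.03}
lengths = huffman_code_lengths(freqs)  # a: 1, peter: 2, picked: 3, peck: 3
avg_bits = sum(freqs[s] * lengths[s] for s in freqs)
print(round(avg_bits, 2), round(entropy(freqs), 2))  # 1.3 1.01
```

The Huffman code needs 1.3 bits per symbol on average, against an entropy of about 1.01 bits, i.e., within the one-bit gap stated above.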

Overview of Compression Techniques

• Dictionary-based encoding schemes:
 – Ziv-Lempel: LZ77 (basis of the entire family of Zip encodings: Zip, GZip, etc.)
• Variable-length encoding schemes:
 – Variable-Byte encoding (byte-aligned)
 – Gamma, Golomb/Rice (bit-aligned)
 – S16 (byte-aligned; actually creates entire 32- or 64-bit words)
 – P-FOR-Delta (bit-aligned, with extra space for “exceptions”)
 – Interpolative Coding (IPC) (bit-aligned; can plug in various schemes for the binary code)

Ziv-Lempel Compression

LZ77 (adaptive dictionary) and further variants:
• Scan the text and identify, in a lookahead window, the longest string that also occurs in a backward window.
• Replace this string by a “pointer” to its previous occurrence.

Encode the text into a list of triples <back, count, new>, where
• back is the backward distance to a prior occurrence of the string that starts at the current position,
• count is the length of this repeated string, and
• new is the next symbol that follows the repeated string.

The triples themselves can be further encoded (with variable length). Better variants use an explicit dictionary with statistical analysis (which requires scanning the text twice).

Example: Ziv-Lempel Compression

peter_piper_picked_a_peck_of_pickled_peppers

• <0, 0, p> for character 1: p
• <0, 0, e> for character 2: e
• <0, 0, t> for character 3: t
• <-2, 1, r> for characters 4-5: er
• <0, 0, _> for character 6: _
• <-6, 1, i> for characters 7-8: pi
• <-8, 2, r> for characters 9-11: per
• <-6, 3, c> for characters 12-15: _pic
• <0, 0, k> for character 16: k
• <-7, 1, d> for characters 17-18: ed
• ...

Great for text, but not appropriate for index lists.
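A minimal greedy LZ77 sketch in Python that reproduces the triples of the example, assuming the conventions used there: back is a negative offset (0 for a literal), the longest match wins, and among equally long matches the closest one is kept. The optional window parameter is a hypothetical bound on the backward window; None means unbounded. The quadratic search is for clarity only.

```python
def lz77_encode(text, window=None):
    """Greedy LZ77: emit (back, count, new) triples; back <= 0 is a relative offset."""
    triples, i = [], 0
    while i < len(text):
        best_len, best_dist = 0, 0
        start = 0 if window is None else max(0, i - window)
        # scan from the nearest candidate backwards, so ties keep the closest match
        for j in range(i - 1, start - 1, -1):
            length = 0
            # stop one symbol early: the last symbol is always emitted as `new`
            while i + length < len(text) - 1 and text[j + length] == text[i + length]:
                length += 1
            if length > best_len:
                best_len, best_dist = length, i - j
        triples.append((-best_dist, best_len, text[i + best_len]))
        i += best_len + 1
    return triples


def lz77_decode(triples):
    out = []
    for back, length, new in triples:
        start = len(out) + back          # back is negative, or 0 for a literal
        for k in range(length):          # copy one symbol at a time so that
            out.append(out[start + k])   # overlapping matches also work
        out.append(new)
    return "".join(out)


text = "peter_piper_picked_a_peck_of_pickled_peppers"
triples = lz77_encode(text)
print(triples[:10])  # the ten triples listed in the example above
print(lz77_decode(triples) == text)  # True
```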

Variable-Byte Encoding

• Encode a sequence of numbers into variable-length byte sequences, using one status bit per byte that indicates whether the current number continues into the next byte.

Example: to encode the decimal number 12038 (binary 10111100000110), write:
• 1st 8-bit word = 1 byte: 1 1011110
• 2nd 8-bit word = 1 byte: 0 0000110
(1 status bit + 7 data bits per byte)

Thus it needs only 2 bytes instead of 4 bytes (regular 32-bit integer)!
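A minimal Python sketch, assuming the convention of the example: 7-bit groups are written most significant first, and a status bit of 1 means the number continues into the next byte (some descriptions flip this and mark the last byte instead). The function names are hypothetical.

```python
def vbyte_encode(numbers):
    """Variable-byte encode non-negative integers into a bytes object."""
    out = bytearray()
    for n in numbers:
        if n < 0:
            raise ValueError("only non-negative integers are supported")
        groups = [n & 0x7F]               # 7 data bits per byte
        n >>= 7
        while n:
            groups.append(n & 0x7F)
            n >>= 7
        groups.reverse()                  # most significant group first
        for g in groups[:-1]:
            out.append(0x80 | g)          # status bit 1: number continues
        out.append(groups[-1])            # status bit 0: last byte of the number
    return bytes(out)


def vbyte_decode(data):
    numbers, n = [], 0
    for byte in data:
        n = (n << 7) | (byte & 0x7F)      # append the 7 data bits
        if not byte & 0x80:               # status bit 0: number is complete
            numbers.append(n)
            n = 0
    return numbers


print([format(byte, "08b") for byte in vbyte_encode([12038])])  # ['11011110', '00000110']
```

Because every code word ends on a byte boundary, decoding needs only shifts, masks, and one test per byte.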

Gamma Encoding

Delta-encode the gaps in inverted lists (between successive docIds):
• Unary coding: a gap of size x is encoded by x times 0 followed by one 1 (x + 1 bits); good for short gaps
• Binary coding: a gap of size x is encoded by the binary representation of x ($\lfloor \log_2 x \rfloor + 1$ bits); good for long gaps

Gamma (γ) coding: length := $\lfloor \log_2 x \rfloor$ in unary, followed by offset := $x - 2^{\lfloor \log_2 x \rfloor}$ in binary ($\lfloor \log_2 x \rfloor$ bits). This results in $(1 + \lfloor \log_2 x \rfloor) + \lfloor \log_2 x \rfloor = 1 + 2\lfloor \log_2 x \rfloor$ bits per input number x.
• Generalization: Golomb/Rice codes (optimal for geometrically distributed x)
• We still need to pack the variable-length codes into bytes or words
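A small Python sketch that delta-encodes a hypothetical posting list and compares the code lengths of the three schemes, assuming unary codes x as x zeros plus a terminating 1 and the first gap is the first docId itself. Note that plain binary alone is not self-delimiting: a decoder would also need to know each code's width, which is exactly what the unary length prefix of gamma supplies.

```python
def d_gaps(doc_ids):
    """Delta-encode a sorted docId list; the first gap is the first docId itself."""
    return [cur - prev for prev, cur in zip([0] + doc_ids, doc_ids)]


def unary_bits(x):
    return x + 1                      # x zeros followed by one 1


def binary_bits(x):
    return x.bit_length()             # floor(log2 x) + 1 bits, for x >= 1


def gamma_bits(x):
    n = x.bit_length() - 1            # floor(log2 x)
    return (n + 1) + n                # unary length part + n-bit offset


doc_ids = [3, 7, 8, 20, 1000]        # hypothetical posting list
for gap in d_gaps(doc_ids):
    print(gap, unary_bits(gap), binary_bits(gap), gamma_bits(gap))
```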

Example: Gamma Encoding

| Number x | Gamma encoding |
|---|---|
| 1 = $2^0$ | 1 |
| 5 = $2^2 + 2^0$ | 00101 |
| 15 = $2^3 + 2^2 + 2^1 + 2^0$ | 0001111 |
| 16 = $2^4$ | 000010000 |

Particularly useful when:
• the distribution of numbers (incl. the largest number) is not known ahead of time
• small values (e.g., delta-encoded docIds or low TF*IDF scores) are frequent
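A minimal Python sketch of gamma encoding and decoding over bit strings (strings of "0"/"1" rather than packed bits, for readability); the function names are hypothetical. The encoder uses the observation that the binary representation of x already starts with the 1 that terminates the unary length prefix.

```python
def gamma_encode(x):
    """Elias gamma code of x >= 1 as a bit string."""
    if x < 1:
        raise ValueError("gamma codes are defined for x >= 1")
    n = x.bit_length() - 1            # floor(log2 x)
    # n zeros give the length in unary; the leading 1 of bin(x) ends the run,
    # and the remaining n bits are the offset x - 2^n
    return "0" * n + format(x, "b")


def gamma_decode(bits):
    """Decode a concatenation of gamma codes."""
    numbers, i = [], 0
    while i < len(bits):
        n = 0
        while bits[i] == "0":         # count the unary length prefix
            n += 1
            i += 1
        numbers.append(int(bits[i:i + n + 1], 2))  # leading 1 plus n offset bits
        i += n + 1
    return numbers


for x in (1, 5, 15, 16):
    print(x, gamma_encode(x))         # 1, 00101, 0001111, 000010000 as in the table
```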

Golomb/Rice Encoding

For a tunable parameter M, split the input number x into:
• Quotient part $q := \lfloor x/M \rfloor$, stored in unary code (q × 1 followed by 1 × 0)
• Remainder part $r := x \bmod M$, stored in binary code:
 – If M is chosen as a power of 2, then r needs $\log_2 M$ bits (→ Rice encoding)
 – Else set $b := \lceil \log_2 M \rceil$:
  • If $r < 2^b - M$, code r as a plain binary number using b − 1 bits
  • Else code the number $r + 2^b - M$ in plain binary representation using b bits

Example: M = 10, b = 4 (so $2^b - M = 6$)

| Number x | q | Output bits q | r | Binary (b bits) | Output bits r |
|---|---|---|---|---|---|
| 0 | 0 | 0 | 0 | 0000 | 000 |
| 33 | 3 | 1110 | 3 | 0011 | 011 |
| 57 | 5 | 111110 | 7 | 1101 | 1101 |
| 99 | 9 | 1111111110 | 9 | 1111 | 1111 |
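A minimal Python sketch of Golomb encoding and decoding over bit strings, following the rules above (unary quotient as ones terminated by a zero, truncated binary remainder). When M is a power of 2 the cutoff $2^b - M$ is 0, so every remainder takes $b = \log_2 M$ bits and the same code degenerates to Rice encoding. Helper names are hypothetical.

```python
def to_bits(value, width):
    """`value` in plain binary using exactly `width` bits ('' if width is 0)."""
    return format(value, f"0{width}b") if width > 0 else ""


def golomb_params(M):
    b = (M - 1).bit_length()          # b = ceil(log2 M)
    return b, (1 << b) - M            # cutoff = 2^b - M (0 if M is a power of 2)


def golomb_encode(x, M):
    q, r = divmod(x, M)
    b, cutoff = golomb_params(M)
    unary = "1" * q + "0"             # q ones, terminated by a single zero
    if r < cutoff:
        return unary + to_bits(r, b - 1)      # short remainder: b-1 bits
    return unary + to_bits(r + cutoff, b)     # long remainder: b bits


def golomb_decode(bits, M):
    b, cutoff = golomb_params(M)
    numbers, i = [], 0
    while i < len(bits):
        q = 0
        while bits[i] == "1":
            q += 1
            i += 1
        i += 1                                # skip the terminating zero
        v = int(bits[i:i + b - 1], 2) if b > 1 else 0
        if v < cutoff:                        # b-1 bits were enough
            r, i = v, i + b - 1
        else:                                 # re-read the remainder with b bits
            r = (int(bits[i:i + b], 2) if b > 0 else 0) - cutoff
            i += b
        numbers.append(q * M + r)
    return numbers


for x in (0, 33, 57, 99):
    print(x, golomb_encode(x, 10))    # quotient bits followed by remainder bits
```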

S9/S16 Compression [Zhang, Long, Suel: WWW’08]

32-bit word (integer) = 4 bytes:
1001 | 1011110000100001101100101111
(4 status bits | 28 data bits)

• Byte-aligned encoding (fixed-length 32-bit integer words)
• The 4 status bits encode 9 or 16 cases for partitioning the 28 data bits
 – Example: if the case 1001 above denotes 4 × 7 bits for the data part, then the data part encodes the decimal numbers 94, 8, 54, 47
• Decompression implemented by a case table or by hardcoding all cases
• High cache locality of the decompression code/table
• Fast CPU support for bit-shifting integers on 32-bit to 128-bit platforms
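A minimal Python sketch of S9-style packing, using the nine Simple-9 layouts (how many numbers, bits per number) that each fill at most 28 data bits. Using the list index as the 4-bit selector is an assumption of this sketch; the case numbering of the example above (1001 = 4 × 7 bits) is passed in explicitly to show the unpacking step on that word.

```python
# (numbers per word, bits per number); every layout fits into 28 data bits.
# Selector = list index is an assumption of this sketch.
S9_LAYOUTS = [(28, 1), (14, 2), (9, 3), (7, 4), (5, 5), (4, 7), (3, 9), (2, 14), (1, 28)]


def unpack_word(word, layouts):
    """Split the 28 data bits of one 32-bit word according to its 4 status bits."""
    count, width = layouts[word >> 28]
    mask = (1 << width) - 1
    return [(word >> (28 - width * (k + 1))) & mask for k in range(count)]


def s9_encode(numbers):
    words, i = [], 0
    while i < len(numbers):
        # greedily take the layout that packs the most numbers into one word
        for selector, (count, width) in enumerate(S9_LAYOUTS):
            chunk = numbers[i:i + count]
            if len(chunk) == count and all(n < (1 << width) for n in chunk):
                break
        else:
            raise ValueError("number needs more than 28 bits")
        word = selector << 28
        for k, n in enumerate(chunk):
            word |= n << (28 - width * (k + 1))   # fill data bits left to right
        words.append(word)
        i += count
    return words


def s9_decode(words):
    return [n for w in words for n in unpack_word(w, S9_LAYOUTS)]


word = int("1001" + "1011110000100001101100101111", 2)
print(unpack_word(word, {0b1001: (4, 7)}))  # [94, 8, 54, 47]
```

The greedy choice of the first fitting layout favors words that hold many small numbers, which is the common case for docId gaps.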

P-FOR-Delta Compression [Zukowski, Heman, Nes, Boncz: ICDE’06]

“Patched Frame-of-Reference” with delta-encoded gaps:
• Key idea: encode individual numbers such that “most” numbers fit into b bits.
• Focuses on encoding an entire block at a time by choosing a value of b such that the range [low_coded, high_coded] is small.
• Outliers (“exceptions”) are stored in an extra exception section at the end of the block, in reverse order.

[Figure: encoding of 31415926535897932 using b = 3 bitwise coding blocks for the code section]
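A simplified Python sketch of the patching idea on the digit sequence from the figure. The exception threshold in choose_b (at most 25% exceptions) is a hypothetical parameter, and this sketch stores each exception together with its position, whereas the original scheme avoids explicit positions by chaining the exception slots to one another. The b-bit codes are kept as a Python list instead of being packed into bits.

```python
def choose_b(block, max_exception_ratio=0.25):
    """Smallest b such that at most the given share of values are exceptions."""
    for b in range(1, 33):
        exceptions = sum(1 for v in block if v >= (1 << b))
        if exceptions <= max_exception_ratio * len(block):
            return b
    return 32


def pfor_encode(block, b):
    """Split a block into a b-bit code section and an exception section."""
    codes, exceptions = [], []
    for pos, v in enumerate(block):
        if v < (1 << b):
            codes.append(v)               # fits into b bits
        else:
            codes.append(0)               # placeholder slot for the outlier
            exceptions.append((pos, v))   # outlier kept at full width
    exceptions.reverse()                  # written back-to-front at the block end
    return codes, exceptions


def pfor_decode(codes, exceptions):
    values = list(codes)
    for pos, v in exceptions:
        values[pos] = v                   # patch each exception into its slot
    return values


digits = [int(c) for c in "31415926535897932"]
b = choose_b(digits)
codes, exceptions = pfor_encode(digits, b)
print(b, exceptions)  # 3 [(14, 9), (12, 9), (11, 8), (5, 9)]
```

In P-FOR-Delta the block would hold docId gaps rather than raw values, so that the common small gaps fit into the b-bit code section.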

Interpolative Coding (IPC) [Moffat, Stuiver: IR’00]

• IPC directly encodes docIds rather than gaps.
• Specifically aims at bursty/clustered docIds of similar range.
• Recursively splits the input sequence into low-distance ranges:
 <1; 3; 8; 9; 11; 12; 13; 17>
 → <1; 3; 8; 9> and <11; 12; 13; 17>
 → <1; 3>, <8; 9>, <11; 12>, <13; 17>
• Requires $\lceil \log_2(high_i - low_i + 1) \rceil$ bits per number for bucket i in binary!
• But:
 → Requires the decoder to know all high_i/low_i pairs.
 → Needs to know large blocks of the input sequence in advance.
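A minimal Python sketch of interpolative coding, following the common recursive formulation: code the middle element of a range in fixed-width binary relative to the tightest interval it can occupy, then recurse on the left and right halves. The outer bounds lo = 1 and hi = 17 and the count n are assumed to be known to the decoder, reflecting the caveat above; refinements such as minimal binary codes are omitted.

```python
def ipc_encode(ids, lo, hi, out):
    """Interpolative coding of sorted, distinct ids known to lie in [lo, hi]."""
    n = len(ids)
    if n == 0:
        return
    m = n // 2
    v = ids[m]
    # m smaller ids lie left of v and n-1-m larger ids right of it,
    # so v is confined to [lo + m, hi - (n - 1 - m)]
    low, high = lo + m, hi - (n - 1 - m)
    width = (high - low).bit_length()       # ceil(log2(high - low + 1))
    if width:
        out.append(format(v - low, f"0{width}b"))
    ipc_encode(ids[:m], lo, v - 1, out)      # left half, pre-order
    ipc_encode(ids[m + 1:], v + 1, hi, out)  # right half


def ipc_decode(bits, n, lo, hi):
    """Inverse of ipc_encode; needs n, lo and hi from outside the bit string."""
    pos = 0

    def rec(n, lo, hi):
        nonlocal pos
        if n == 0:
            return []
        m = n // 2
        low, high = lo + m, hi - (n - 1 - m)
        width = (high - low).bit_length()
        v = low + (int(bits[pos:pos + width], 2) if width else 0)
        pos += width
        left = rec(m, lo, v - 1)
        right = rec(n - 1 - m, v + 1, hi)
        return left + [v] + right

    return rec(n, lo, hi)


doc_ids = [1, 3, 8, 9, 11, 12, 13, 17]
parts = []
ipc_encode(doc_ids, 1, 17, parts)
code = "".join(parts)
print(len(code), ipc_decode(code, len(doc_ids), 1, 17))
```

A fully dense run such as the docIds 5 to 24 needs zero bits, because every interval collapses to a single possible value; this is why IPC suits clustered docIds.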

Comparison of Compression Techniques [Yan, Ding, Suel: WWW’09]

[Figures: (1) distribution of docId gaps on TREC GOV2 (~25 Mio docs), reporting averages over 1,000 queries; (2) compressed docId sizes (MB/query) on TREC GOV2 (~25 Mio docs), reporting averages over 1,000 queries; (3) decompression speed (MB/query) for TREC GOV2, 1,000 queries]

• Variable-length encodings usually win by far in (de-)compression speed over dictionary- and entropy-based schemes, at comparable compression ratios!

Layout of Index Postings [J. Dean: WSDM 2009]

[Figure: per-word posting list layout]
• word → skip table, followed by block 1 … block N
• One block (with n postings):
 – header: delta to last docId in block; #docs in block: n
 – docId postings: n−1 docId deltas, Rice_M encoded
 – tf values: Gamma encoded
 – term attributes: Huffman encoded
 – term positions: Huffman encoded
• The layout allows incremental decoding (of postings).
