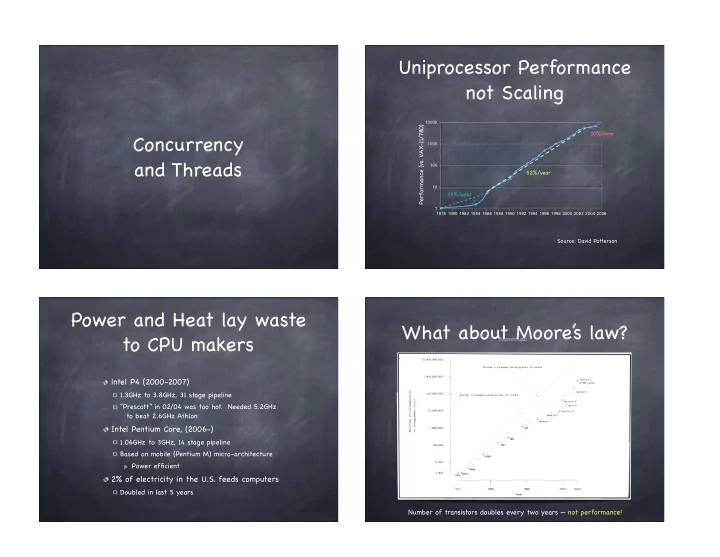

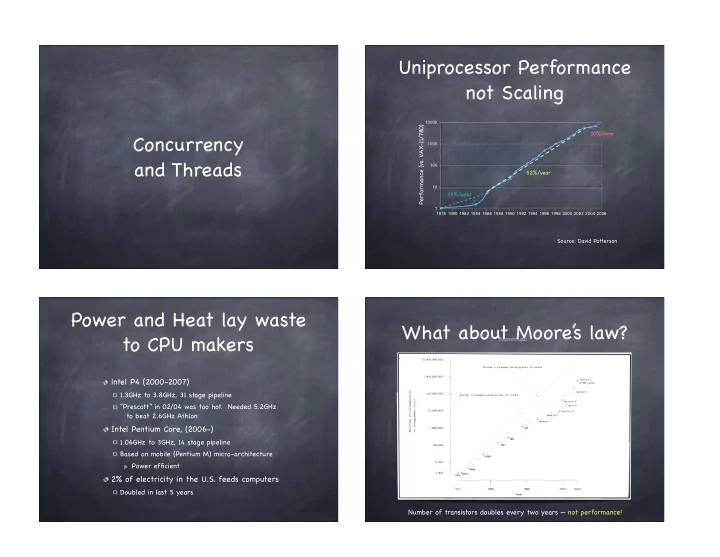

Concurrency and Threads

Figure: Uniprocessor performance is not scaling. Performance relative to the VAX-11/780 (log scale, 1978–2006), with growth phases annotated at 25%/year, 52%/year, and 20%/year. Source: David Patterson.

Power and heat lay waste to CPU makers. The Intel P4 (2000–2007) went from 1.3 GHz to 3.8 GHz with a 31-stage pipeline; "Prescott" (02/04) ran too hot and needed 5.2 GHz to beat a 2.6 GHz Athlon. The Intel Pentium Core (2006–) runs at 1.06 GHz to 3 GHz with a 14-stage pipeline; it is based on the mobile (Pentium M) microarchitecture and is power efficient. 2% of the electricity in the U.S. feeds computers, a share that doubled in the last 5 years.

What about Moore’s law? The number of transistors doubles every two years, not performance!

Multicore is here, plain and simple. Raise your hand if your laptop is single core. Your phone? That’s what I thought.

Transistor budget: we have an increasing glut of transistors (at least for a few more years), but we can’t use them to make things faster. What worked in the 90s blew up heat faster than we can dissipate it. What to do? Make more cores!

Multicore Programming: Essential Skill. Hardware manufacturers are betting big on multicore, and software developers are needed. Writing concurrent programs is not easy; you will learn how to do it in this class!

Processes and Threads. The process abstraction combines two concepts:
- Concurrency: each process is a sequential execution stream of instructions.
- Protection: each process defines an address space that identifies what can be touched by the program.

Threads. Key idea: decouple concurrency from protection. A thread represents a sequential execution stream of instructions; a process defines the address space that may be shared by multiple threads.
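Because the threads of one process share its address space, a global that one thread writes is visible to every other thread in that process. A minimal sketch, assuming POSIX threads (pthreads) as a concrete stand-in for the generic thread API used in these notes; run_shared_demo, fill_slot, and NUM_WORKERS are hypothetical names chosen for illustration:

```c
#include <pthread.h>
#include <stddef.h>

#define NUM_WORKERS 4

/* Global array: it lives in the process's data segment, so every thread sees the same copy. */
static int shared_slots[NUM_WORKERS];

/* Thread body: each thread writes only its own slot of the shared array. */
static void *fill_slot(void *arg) {
    int id = *(const int *)arg;
    shared_slots[id] = (id + 1) * 10;
    return NULL;
}

/* Starts NUM_WORKERS threads in this one process, waits for them, and returns the
   sum the main thread then reads from the shared array (-1 if a thread failed to start). */
static int run_shared_demo(void) {
    pthread_t tids[NUM_WORKERS];
    int ids[NUM_WORKERS];
    int created = 0;

    for (; created < NUM_WORKERS; created++) {
        ids[created] = created;
        if (pthread_create(&tids[created], NULL, fill_slot, &ids[created]) != 0)
            break;
    }
    for (int i = 0; i < created; i++)
        pthread_join(tids[i], NULL);   /* joining also makes the workers' writes visible here */
    if (created < NUM_WORKERS)
        return -1;

    int sum = 0;
    for (int i = 0; i < NUM_WORKERS; i++)
        sum += shared_slots[i];
    return sum;
}
```

Here run_shared_demo() returns 100 (10 + 20 + 30 + 40). Had the four workers been separate processes created with fork(), each would have written to its own copy of shared_slots and the parent would still see zeros.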

Thread: an abstraction for concurrency
- A single execution stream of instructions that represents a separately schedulable task: the OS can run, suspend, or resume a thread at any time, and the thread is bound to a process.
- Finite Progress Axiom: execution proceeds at some unspecified, non-zero speed.
- Virtualizes the processor: programs run on a machine with an infinite number of processors (hint: not true).
- Allows us to specify tasks that should be run concurrently... and lets us code each task sequentially.

Why threads?
- To express a natural program structure: updating the screen, fetching new data, receiving user input.
- To exploit multiple processors: different threads may be mapped to distinct processors.
- To maintain responsiveness: split up commands and spawn threads to do work in the background.
- To mask the long latency of I/O devices: do useful work while waiting.

How can they help? Consider the following code segment:

for (k = 0; k < n; k++) a[k] = b[k] × c[k] + d[k] × e[k]

Is there a missed opportunity here?

How can they help? Consider a Web server:
- get network message from client
- get URL data from disk
- compose response
- send response
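Each iteration of the loop above reads only the k-th input elements and writes only a[k], so the iterations are independent; the missed opportunity is that one thread runs them one after another even when several processors are idle. A sketch, assuming pthreads and a fixed NTHREADS of 4 (struct slice, compute_slice, and parallel_compute are illustrative names), that gives each thread a contiguous slice of the index range:

```c
#include <pthread.h>
#include <stddef.h>

#define NTHREADS 4

/* Work descriptor (hypothetical): the arrays plus the slice [lo, hi) this thread handles. */
struct slice {
    double *a;
    const double *b, *c, *d, *e;
    size_t lo, hi;
};

/* Each thread runs the original sequential loop, restricted to its own slice. */
static void *compute_slice(void *arg) {
    struct slice *s = arg;
    for (size_t k = s->lo; k < s->hi; k++)
        s->a[k] = s->b[k] * s->c[k] + s->d[k] * s->e[k];
    return NULL;
}

/* Computes a[k] = b[k]*c[k] + d[k]*e[k] for k in [0, n) using NTHREADS threads.
   Returns 0 on success, -1 if not every thread could be created. */
static int parallel_compute(double *a, const double *b, const double *c,
                            const double *d, const double *e, size_t n) {
    pthread_t tids[NTHREADS];
    struct slice parts[NTHREADS];
    size_t chunk = (n + NTHREADS - 1) / NTHREADS;   /* ceiling division */
    int created = 0;

    for (int t = 0; t < NTHREADS; t++) {
        size_t lo = (size_t)t * chunk;
        if (lo > n)
            lo = n;                                  /* trailing threads may get an empty slice */
        size_t hi = (n - lo < chunk) ? n : lo + chunk;
        parts[t] = (struct slice){ .a = a, .b = b, .c = c, .d = d, .e = e, .lo = lo, .hi = hi };
        if (pthread_create(&tids[t], NULL, compute_slice, &parts[t]) != 0)
            break;
        created++;
    }
    for (int t = 0; t < created; t++)
        pthread_join(tids[t], NULL);                 /* wait until every slice is done */
    return created == NTHREADS ? 0 : -1;
}
```

Because the slices are disjoint, no locking is needed. For small n, the cost of creating threads would likely outweigh the saving, so a real program would only split large loops.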

How can they help? Consider a Web server: create a number of threads, and for each thread do:
- get network message (URL) from client
- get URL data from disk
- compose response
- send response

Overlapping I/O & Computation

Figure: timeline of Request 1 on Thread 1 and Request 2 on Thread 2. Each thread gets the network message (URL) from the client, gets the URL from disk (disk access latency), and sends data over the network; the two timelines overlap in time.

What did we gain? Total time is less than Request 1 + Request 2.

Processes vs. Threads

| Processes | Threads |
|---|---|
| Have data/code/heap and other segments | No data segment or heap |
| Include at least one thread | Need to live in a process; more than one can be in a process; the first calls main |
| If a process dies, its resources are reclaimed and its threads die | If a thread dies, its stack is reclaimed |
| Interprocess communication via OS and data copying | Inter-thread communication via memory |
| Have own address space, isolated from other processes’ | Have own stack and registers, but no isolation from other threads in the same process |
| Each process can run on a different processor | Each thread can run on a different processor |
| Expensive creation and context switch | Inexpensive creation and context switch |

Implementing the thread abstraction: the state
- Shared state: heap, global variables, code.
- Per-thread state: a Thread Control Block (TCB) holding the stack pointer, other registers (PC, etc.), and thread metadata (ID, priority, etc.), plus a stack of stack frames.

Note: No protection enforced at the thread level!
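The Web-server timeline can be reproduced in miniature. This sketch assumes a 200 ms nanosleep() as a stand-in for disk access latency and leaves out the network steps; fake_disk_read, handle_request, serve_sequential, and serve_threaded are hypothetical names:

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <time.h>

/* Hypothetical stand-in for "get URL data from disk": blocks for about ms milliseconds. */
static void fake_disk_read(long ms) {
    struct timespec ts;
    ts.tv_sec = ms / 1000;
    ts.tv_nsec = (ms % 1000) * 1000000L;
    nanosleep(&ts, NULL);
}

/* One request, reduced to its simulated disk wait (the part threads let us overlap). */
static void *handle_request(void *arg) {
    (void)arg;
    fake_disk_read(200);
    return NULL;
}

/* Monotonic wall-clock time in seconds. */
static double now_seconds(void) {
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (double)ts.tv_sec + (double)ts.tv_nsec / 1e9;
}

/* Serves two requests one after another; returns elapsed seconds. */
static double serve_sequential(void) {
    double start = now_seconds();
    handle_request(NULL);
    handle_request(NULL);
    return now_seconds() - start;
}

/* Serves two requests with one thread each, so their waits overlap;
   returns elapsed seconds, or -1.0 if a thread could not be created. */
static double serve_threaded(void) {
    pthread_t t1, t2;
    double start = now_seconds();
    if (pthread_create(&t1, NULL, handle_request, NULL) != 0)
        return -1.0;
    if (pthread_create(&t2, NULL, handle_request, NULL) != 0) {
        pthread_join(t1, NULL);
        return -1.0;
    }
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return now_seconds() - start;
}
```

On an otherwise idle machine, serve_sequential() should report roughly 0.4 s and serve_threaded() roughly 0.2 s, because the two blocked threads wait at the same time: the total is less than Request 1 + Request 2.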

Multithreaded Processing Paradigms

Figures: three paradigms, each drawn as threads in user space above the kernel: Dispatcher/Workers (with a shared Web page cache), Specialists, and Pipelining.

A simple API
- void thread_create(thread, func, arg): creates a new thread in thread, which will execute function func with arguments arg.
- void thread_yield(): the calling thread gives up the processor.
- thread_join(thread): waits for thread to finish, then returns the value thread passed to thread_exit.
- thread_exit(ret): finishes the caller; stores ret in the caller’s TCB and wakes up any thread that invoked thread_join(caller).
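The simple API corresponds closely to POSIX threads, used here as an assumed concrete counterpart: thread_create ≈ pthread_create, thread_yield ≈ sched_yield, thread_join ≈ pthread_join, thread_exit ≈ pthread_exit. One visible difference is that pthread_join hands back the exit value through a void ** out-parameter and returns an error code instead. The helpers square_worker and create_and_join are hypothetical:

```c
#include <pthread.h>
#include <sched.h>
#include <stdint.h>

/* Thread body: gives up the processor once, then finishes with an exit value. */
static void *square_worker(void *arg) {
    intptr_t x = (intptr_t)arg;
    sched_yield();                    /* like thread_yield(): let another thread run */
    pthread_exit((void *)(x * x));    /* like thread_exit(ret): ret is kept for the joiner */
}

/* Like thread_create followed by thread_join: starts a worker, waits for it to
   finish, and returns the value it passed to pthread_exit (-1 on error). */
static intptr_t create_and_join(intptr_t x) {
    pthread_t t;
    void *ret;
    if (pthread_create(&t, NULL, square_worker, (void *)x) != 0)
        return -1;
    if (pthread_join(t, &ret) != 0)   /* blocks until the worker has exited */
        return -1;
    return (intptr_t)ret;
}
```

For example, create_and_join(7) returns 49, the value the worker passed to pthread_exit.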

Thread Life Cycle

Threads (just like processes) go through a sequence of Init, Ready, Running, Waiting, and Finished states.
- Init: on thread creation (e.g., thread_create()), the TCB is being created; registers are in the TCB.
- Init → Ready: the TCB is placed on the ready list; registers stay in the TCB.
- Ready → Running: the scheduler resumes the thread; the TCB is on the running list and its registers are loaded into the processor.
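The per-thread state and the ready list can be sketched as plain C data structures. Everything here is an assumption for illustration: the field names, the fixed 16-entry register array, the FIFO policy, and the helpers tcb_create and schedule_next. A real kernel saves and restores registers in architecture-specific code during a context switch.

```c
#include <stdlib.h>

enum thread_state { INIT, READY, RUNNING, WAITING, FINISHED };

/* Hypothetical TCB layout. */
struct tcb {
    int id;                   /* thread metadata */
    int priority;
    enum thread_state state;
    void *stack_pointer;      /* saved stack pointer while not running */
    unsigned long regs[16];   /* placeholder for other saved registers (PC, etc.) */
    struct tcb *next;         /* link used by the ready list */
};

/* FIFO queue of TCBs. */
struct ready_list { struct tcb *head, *tail; };

/* Appends t to the tail of the ready list and marks it Ready. */
static void ready_push(struct ready_list *q, struct tcb *t) {
    t->state = READY;
    t->next = NULL;
    if (q->tail)
        q->tail->next = t;
    else
        q->head = t;
    q->tail = t;
}

/* Removes and returns the head of the ready list, or NULL if it is empty. */
static struct tcb *ready_pop(struct ready_list *q) {
    struct tcb *t = q->head;
    if (!t)
        return NULL;
    q->head = t->next;
    if (!q->head)
        q->tail = NULL;
    t->next = NULL;
    return t;
}

/* Like thread_create: the TCB starts in Init, then joins the ready list. */
static struct tcb *tcb_create(struct ready_list *q, int id) {
    struct tcb *t = calloc(1, sizeof *t);
    if (!t)
        return NULL;
    t->id = id;
    t->state = INIT;          /* TCB being created */
    ready_push(q, t);         /* Init -> Ready */
    return t;
}

/* The scheduler resumes the next ready thread: Ready -> Running. */
static struct tcb *schedule_next(struct ready_list *q) {
    struct tcb *t = ready_pop(q);
    if (t)
        t->state = RUNNING;   /* a real kernel would now load t's registers */
    return t;
}
```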

From Running, a thread can move on in several ways:
- Running → Ready: the thread yields (e.g., thread_yield()) or the scheduler suspends it; its TCB goes back on the ready list and its registers are saved in the TCB.
- Running → Waiting: the thread waits for an event (e.g., thread_join()); its TCB is on a synchronization variable’s waiting list and its registers are saved in the TCB.
- Waiting → Ready: the event occurs (e.g., another thread calls thread_exit()), and the TCB moves back to the ready list.
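The arrows of the life-cycle diagram (creation, scheduling, yielding, waiting, waking up, and exiting with thread_exit()) can be captured as a small transition check. transition_allowed is a hypothetical helper, not part of any thread library:

```c
#include <stdbool.h>

enum thread_state { INIT, READY, RUNNING, WAITING, FINISHED };

/* Returns true if the life-cycle diagram has an arrow from `from` to `to`. */
static bool transition_allowed(enum thread_state from, enum thread_state to) {
    switch (from) {
    case INIT:
        return to == READY;            /* thread creation, e.g. thread_create() */
    case READY:
        return to == RUNNING;          /* scheduler resumes thread */
    case RUNNING:
        return to == READY             /* thread yields or scheduler suspends it */
            || to == WAITING           /* thread waits for event, e.g. thread_join() */
            || to == FINISHED;         /* thread exit, e.g. thread_exit() */
    case WAITING:
        return to == READY;            /* event occurs */
    case FINISHED:
        return false;                  /* terminal: the TCB is eventually deleted */
    }
    return false;
}
```

Note that a waiting thread never goes straight to Running: when its event occurs it rejoins the ready list and must be picked by the scheduler again.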

Finally, Running → Finished: the thread exits (e.g., thread_exit()); its TCB goes on the finished list (to pass the exit value) and is then deleted.

Context switching. You know the drill:
- Thread is running
- Switch to kernel
- Save thread state (to TCB and stack)
- Choose new thread to run
- Load its state (from TCB and stack)
- Thread is running

One abstraction, many flavors:
- In-kernel threads: kernel-level threads that execute kernel code. Common in today’s OSs.
- Kernel-level threads and single-threaded processes: system call handlers run concurrently with kernel threads.
- Multithreaded processes using kernel threads: threads within a process make system calls into the kernel.
- User-level threads: thread operations are implemented in a user-level library, without informing the kernel; the TCB is kept in a user-level ready list.
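For user-level threads, a library can save and load thread state itself instead of relying on the kernel's scheduler. A sketch using the ucontext functions (getcontext, makecontext, swapcontext), assuming a Linux/glibc system where they are still available: POSIX marked them obsolescent and later removed them, and glibc's swapcontext also saves the signal mask with a system call, so production libraries often use a hand-written switch routine instead. Two hypothetical threads record a letter and yield back to a round-robin loop; each ucontext_t plays the role of the TCB, and all names are illustrative:

```c
#include <ucontext.h>

#define NUM_UTHREADS 2
#define UTHREAD_STACK_SIZE (64 * 1024)

static ucontext_t scheduler_ctx;                              /* saved state of the scheduler loop */
static ucontext_t uthread_ctx[NUM_UTHREADS];                  /* per-thread saved registers: our "TCB" */
static char uthread_stack[NUM_UTHREADS][UTHREAD_STACK_SIZE];  /* each thread gets its own stack */
static int uthread_done[NUM_UTHREADS];
static int current_uthread;                                   /* index of the running user-level thread */

static char trace[16];                                        /* execution order, for inspection */
static int trace_len;

/* Voluntary yield: save this thread's context and load the scheduler's.
   No kernel scheduler decides anything here. */
static void uthread_yield(void) {
    swapcontext(&uthread_ctx[current_uthread], &scheduler_ctx);
}

/* Body of each user-level thread: log its letter three times, yielding after each. */
static void uthread_body(void) {
    int self = current_uthread;
    for (int i = 0; i < 3; i++) {
        if (trace_len < (int)sizeof trace - 1)
            trace[trace_len++] = (char)('A' + self);
        uthread_yield();
    }
    uthread_done[self] = 1;   /* returning follows uc_link back to the scheduler */
}

/* Round-robin scheduler: creates the threads, then repeatedly switches to each
   unfinished one until all have returned. Returns the recorded trace. */
static const char *run_round_robin(void) {
    trace_len = 0;
    for (int i = 0; i < NUM_UTHREADS; i++) {
        getcontext(&uthread_ctx[i]);
        uthread_ctx[i].uc_stack.ss_sp = uthread_stack[i];
        uthread_ctx[i].uc_stack.ss_size = sizeof uthread_stack[i];
        uthread_ctx[i].uc_link = &scheduler_ctx;
        makecontext(&uthread_ctx[i], uthread_body, 0);
        uthread_done[i] = 0;
    }
    int remaining = NUM_UTHREADS;
    while (remaining > 0) {
        for (int i = 0; i < NUM_UTHREADS; i++) {
            if (uthread_done[i])
                continue;
            current_uthread = i;
            swapcontext(&scheduler_ctx, &uthread_ctx[i]);   /* save scheduler, load thread i */
            if (uthread_done[i])
                remaining--;
        }
    }
    trace[trace_len] = '\0';
    return trace;
}
```

Running it, run_round_robin() should return "ABABAB": each switch is a swapcontext() call made by the threads or by the loop, following the save-state, choose, load-state steps listed above.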
