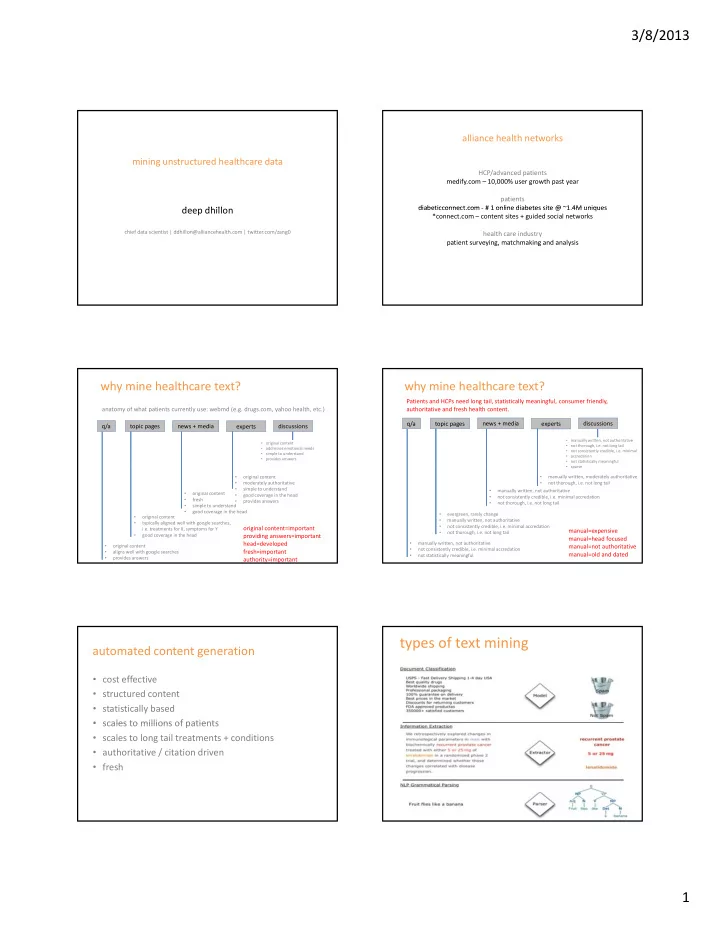

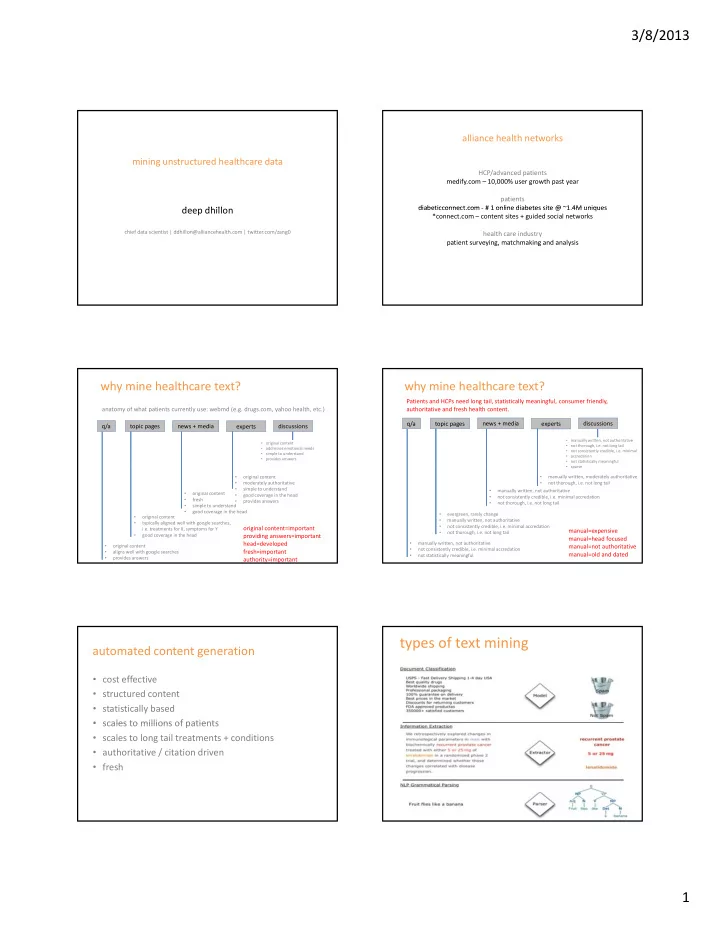

3/8/2013 alliance health networks mining unstructured healthcare data HCP/advanced patients medify.com – 10,000% user growth past year patients diabeticconnect com # 1 online diabetes site @ ~1 4M uniques diabeticconnect.com ‐ # 1 online diabetes site @ 1.4M uniques deep dhillon d dhill *connect.com – content sites + guided social networks chief data scientist | ddhillon@alliancehealth.com | twitter.com/zang0 health care industry patient surveying, matchmaking and analysis why mine healthcare text? why mine healthcare text? Patients and HCPs need long tail, statistically meaningful, consumer friendly, anatomy of what patients currently use: webmd (e.g. drugs.com, yahoo health, etc.) authoritative and fresh health content. q/a topic pages news + media experts discussions q/a topic pages news + media discussions experts • manually written, not authoritative • original content • not thorough, i.e. not long tail • addresses emotional needs • not consistently credible, i.e. minimal • simple to understand • accredation • provides answers • not statistically meaningful • sparse • original content • manually written, moderately authoritative • moderately authoritative • not thorough, i.e. not long tail • simple to understand • manually written, not authoritative • original content • good coverage in the head • not consistently credible, i.e. minimal accredation • fresh • provides answers • not thorough, i.e. not long tail • simple to understand • good coverage in the head • evergreen, rarely change • original content • manually written, not authoritative • typically aligned well with google searches, • not consistently credible, i.e. minimal accredation original content=important i.e. treatments for X, symptoms for Y manual=expensive • not thorough, i.e. not long tail • good coverage in the head providing answers=important manual=head focused head=developed • manually written, not authoritative • original content manual=not authoritative not consistently credible, i.e. minimal accredation • fresh=important • aligns well with google searches manual=old and dated • not statistically meaningful • provides answers authority=important types of text mining automated content generation • cost effective • structured content • statistically based • scales to millions of patients • scales to millions of patients • scales to long tail treatments + conditions • authoritative / citation driven • fresh 1

3/8/2013 how do we mine text? how do we mine text? medify demo assignments curators examples knowledge parser Index external repos Page Search like UMLS Module annotate in the product: • Easier for people to give explicit GT feedback • Channels high visibility error angst productively • Channels more GT toward areas most seen by users • Override high visibility system mistakes with human data gt demo: • http://www.diabeticconnect.com/discussions/4790 • https://www.medify.com/internal/annotate/abstract?abstractId=8181575 2

3/8/2013 Detailed Signals Preliminary Mining From Discussion Threads Distribution of Signals Mined From Diabetic Connect Discussion Threads 5,000 4,500 4,000 Medical History - Newly Lifestyle Adj ustment Diagnosed Medical History - S ymptom 6% 3,500 0% 3% 3,000 2,500 Medical History - Condition 10% 2,000 Helper/ Influencer H l / I fl 1,500 33% 1,000 500 Personal Account 12% 0 Treatment Experience 15% Medical History 21% abstract parser work flow document sentence selection 1 1. sentences w/ high conclusion presence selected 2. shallow entity tagging applied based on umls 2 3. sentence text is modified to retain entities, optimize shallow tagging for performance, and eliminate unnecessary filler. 4. deep typed dependency parsing of modified text API 5. 3 tier text classification applied on BOW + entities 3 sentence 6. SVO triples extracted from dependencies modification 7. rule based conclusions generated from triples Research (Outcome) Discussion (Gromin) 8. confidence models applied to rule and classifier based conclusions 9 curator 9. knowledge base used to represent domain and dependency tree discourse style. treatment/symptom/condition/demographic 4 knowledge parse 10. errors measured against curated data applied for improvements • identity (i.e. UMLS id) UMLS 5 • taxonomical relations • hierarchical is_a 6 triple extraction 3 tier classification 10 • condition/arthritis/Rhematoid Arthritis • synonym relations • RA=Rhematoid Arthritis • metformim 7 concluder • polarity knowledge base • effective=positive 8 ineffective=negative • • parsing clues (ambiguity) confidence assignment annotations • anaphor clues discussion parser work flow message sentence split 1 1. sentences split from discussion thread messages 2. shallow entity tagging applied based on umls feature engineering 3. sentence text is modified to retain entities, optimize 2 for performance, and eliminate unnecessary filler. shallow tagging 4. deep typed dependency parsing of modified text for select conclusion types 5. select conclusion type based text classification • entities, i.e. normalize synonyms, id new 3 applied on BOW + entities after dimensionality sentence reduction modification 6. SVO triples extracted from dependencies entity types, like social relations 7. rule based conclusions generated from triples 9 8. rule based KB driven anaphora resolution applied. Classification based conclusions added. • entity types, i.e. metformin > dependency tree 9. knowledge base used to represent domain and 4 knowledge parse discourse style. treatment_medication treatment medication 10. 10 errors measured against curated data applied for errors measured against curated data applied for improvements 5 • phrase driven cues, i.e. [have] [you] 6 triple extraction classification 10 [considered] > suggestion_indicator 7 concluder 8 anaphora resolution conclusions 3

3/8/2013 anaphora resolution technology • relation structure, i.e. [person]>takes>it • Lang: Java + Python + Ruby it refers to treatment (i.e. not condition/symptom), and specifically: medication but not device • statistically driven, manually curated cues • DB: Solr 4, Mongo DB, S3 i.e. drug > treatment/medication • Work: Map Reduce • filter • Dependencies: Malt Parser, Stanford Parser – non matching antecedent candidates – singular/plural agreement • Misc: Tomcat, Spring, Mallet, Reverb, Minor Third • score candidates: • Tagging: Peregrine + home grown – antecedent occurrence frequency – distance (#sents) from antecedent to anaphor – co ‐ occurrence of anaphor w/ antecedent Data Pipeline Solr 2 Solr N Solr 1 Load Balancer – API Cache Portal 1 Portal 2 Portal N Load Balancer – www.medify.com request transaction flow browser 21 questions? 4

3/8/2013 5

3/8/2013 Advair: Experts vs. Patients • “medicalese” vs. patients words • more granularity • a story like perspective w/ words of inspiration My Pulmonologist today said that he had just come from the hospital bedside of a patient with my exact symptoms who is on oxygen and not doing well. If I hadn't been so diligent in following my asthma plan that could easily have been me. His telling me that really hit home. By the way, my Pulmonologist surprised me with his view on many of his patients. He actually got excited and thanked me for being knowledgeable about asthma, understanding my own body and health needs and for following my treatment plan. He said he doesn't get many patients who can actually talk about their disease, symptoms, time frames, treatments/medications, and non ‐ medical measures they are taking. He also doesn't get many people who ask questions. My response to this: What are people thinking? They need to take control of their own health or noone else will. 6

Recommend

More recommend